Abstract

In the present review, we focus on how commonalities in the ontogenetic development of the auditory and tactile sensory systems may inform the interplay between these signals in the temporal domain. In particular, we describe the results of behavioral studies that have investigated temporal resolution (in temporal order, synchrony/asynchrony, and simultaneity judgment tasks), as well as temporal numerosity perception, and similarities in the perception of frequency across touch and hearing. The evidence reviewed here highlights features of audiotactile temporal perception that are distinct from those seen for other pairings of sensory modalities. For instance, audiotactile interactions are characterized in certain tasks (e.g., temporal numerosity judgments) by a more balanced reciprocal influence than are other modality pairings. Moreover, relative spatial position plays a different role in the temporal order and temporal recalibration processes for audiotactile stimulus pairings than for other modality pairings. The effect exerted by both the spatial arrangement of stimuli and attention on temporal order judgments is described. Moreover, a number of audiotactile interactions occurring during sensory-motor synchronization are highlighted. We also look at the audiotactile perception of rhythm and how it may be affected by musical training. The differences emerging from this body of research highlight the need for more extensive investigation into audiotactile temporal interactions. We conclude with a brief overview of some of the key issues deserving of further research in this area.

The boundaries between hearing and touch: the foundation of an analogy

We continuously interact with environments that provide a large amount of multisensory information to our various senses. Researchers have now convincingly demonstrated that the inputs delivered by the different sensory channels tend to be bound together by the brain (see the section Research on hearing and touch: a multisensory perspective for a fuller discussion of this topic). Unlike the audiovisual and visuotactile sensory pairings, those interactions taking place at both the neuronal and behavioral levels between audition and touch have, to date, been explored in far less detail (see Kitagawa & Spence, 2006; Soto-Faraco & Deco, 2009, for reviews of the extant literature). The paucity of research covering this modality pairing is rather surprising when one considers the wide range of everyday situations in which we experience the interplay between these two senses, even though often in subtle and unconscious ways. Examples include perceiving the “auditory” buzzing and the itchy “tactile” sensation of an insect landing on the back of our neck, and reaching for a mobile phone ringing and vibrating in our pocket. What is common to these situations is the exclusive (or, at the very least, predominant) reliance on cues provided by the nonvisual spatial senses. In addition to these anecdotal reports, there is a growing body of empirical evidence demonstrating the existence of important similarities between the senses of hearing and touch (e.g., Soto-Faraco & Deco, 2009).

In his pioneering early work, von Békésy (1955, 1957, 1959) drew a number of parallels between the senses of audition and touch, which turned out to be so close as to lead him to consider the sense of touch as constituting a reliable model for the study of functional features of audition. For instance, von Békésy (1955) noted that audition and vibrotaction are analogous with regard to the level of the encoding mechanisms at their respective receptor surfaces. Indeed, both the basilar membrane of the inner ear and the mechanoreceptors embedded in the skin respond to the same type of physical energy—namely, mechanical pressure having a specific vibratory rate (e.g., either touching the surface of the skin with a vibrating body or stimulating the stapes footplate of the ear determines the propagation of travelling waves; von Békésy, 1959; cf. Nicolson, 2005).

The analogies in the physiological mechanisms (see Corey, 2003; Gillespie & Müller, 2009) underlying vibrotactile and auditory perception are likely rooted in the common origins of these two sensory systems (see Soto-Faraco & Deco, 2009; and von Békésy, 1959, for reviews). From this particular point of view, many pieces of evidence might be informative with regard to the existence of favored links between hearing and touch, in both animals (e.g., Bleckmann, 2008; Peck, 1994; Popper, 2000) and humans (Marks, 1983; von Békésy, 1959). The onset of function within the systems involved in sensory processing occurs in the following order: from the somesthetic and vestibular modalities to the chemosensory (oral and nasal), the auditory, and lastly, the visual modalities (see Gottlieb, 1971; Lickliter, 2000; Lickliter & Bahrick, 2000; see also Lagercrantz & Changeux, 2009; see Fig. 1).

The timeline of development of sensory systems during prenatal life (modified from Moore & Persaud, 2008)

The order in which the modality-specific as well as multisensory neurons in the anterior ectosylvian sulcus emerge also follows a precise time course, from tactile responsive to auditory responsive, and finally, to visually responsive neurons (Wallace, Carriere, Perrault, Vaughan, & Stein, 2006). Therefore, one cannot rule out the possibility that the order in which the different sensory systems develop has some effect upon the subsequent strength, direction, and number of reciprocal connections between them (e.g., Gregory, 1967; Katsuki, 1965; Lickliter & Bahrick, 2000).

Although the assessment of the nature of the stimulation that takes place during prenatal life, as well as the responsivity of the fetus to such stimulation, is problematic, the human fetus has been shown to respond to simultaneous stimulation in different sensory modalities since very early in development (e.g., Kisilevsky, Muir, & Low, 1992). Interestingly, the responses of the human fetus—as measured by heart rate and body movements—show an increase when stimulation is both vibratory and auditory as compared with when stimulation occurs in just one sensory modality in isolation (cf. Kisilevsky & Muir, 1991). Moreover, since the responsivity to vibroacoustic stimulation follows a specific maturational time line across gestation (Hoh, Park, Cha, & Park, 2009), any perturbation of the pattern of responses elicited by this kind of stimulation is thought to have a relevant diagnostic function during complicated pregnancies (D’Elia, Pighetti, Vanacore, Fabbrocini, & Arpaia, 2005; Morokuma et al., 2004). It is plausible that the presentation of stimuli in close temporal proximity could support the deployment during later development of the favored processing of specific co-occurring crossmodal sensory inputs (see Lecaunet & Schaal, 1996). Moreover, since the human fetus can respond to vibrotactile and acoustic information by the third trimester (Kisilevsky, 1995), whereas visual information is not fully transduced prior to birth, it is likely that crossmodal temporal synchrony is primarily experienced for the pairing of somatosensory and auditory signals (Lewkowicz, 2000).

The evidence emerging from embryology research is related to the commonality of some physical properties which, according to von Békésy (1959), are shared between audition and touch, such as pitch, loudness, volume, roughness, distance, on-and-off effects, and rhythm. For instance, in one experiment, participants were presented with a pair of clicks (one to either ear, separated by a variable time interval). As the time difference was increased from zero, the sound seemed to travel from one side of the participant’s head to the other (i.e., with a leftward or rightward direction), and the participants were asked to point to the direction from which the sound seemed to come. In certain conditions, air pulses were presented across the participant’s forehead. By adjusting the magnitude and timing of either auditory clicks or spatially coincident air-puffs so that the skin sensations matched the sensations produced by the acoustic clicks as closely as possible, von Békésy (1959) succeeded in demonstrating that observers found it very difficult to phenomenologically discriminate between auditory and tactile stimuli when they appeared to come from the same direction. This result points to the existence of remarkable analogies between the two senses, at least under certain specific conditions of stimulus presentation, which could also possibly reflect how the signals from these two sensory modalities interact, at both the neural and behavioral levels.

In this review, a multisensory perspective will be adopted in order to provide an overview of those behavioral studies that have investigated interactions between auditory and tactile stimuli. The focus will be on the audiotactile interactions occurring within the temporal domain. Interest in temporal perception, both theoretical (e.g., Crystal, 2009; Droit-Volet & Gil, 2009; Gibbon, 1977; Glicksohn, 2001; Pöppel, 1997; Wittmann, 2009) and experimental (e.g., Grondin, 2010; Mauk & Buonomano, 2004), has been growing rapidly amongst the scientific community in recent years. According to certain authors, the emerging data are now consistent with humans having an amodal representation of time, one that is shared among different sensory modalities (cf. van Wassenhove, 2009). The remarks provided by van Wassenhove, though intriguing, are however currently confined to the auditory and visual modality pairing. In the present review, our aim has been to provide complementary coverage of the crossmodal nature of temporal perception by focusing on the audiotactile stimulus pairing instead.

Research on hearing and touch: a multisensory perspective

The process by which the human nervous system merges the available information into unique perceptual events is commonly known as multisensory integration (see Calvert, Spence, & Stein, 2004, for a review). Operationally, multisensory integration has been defined at the neuronal level as “a statistically significant difference between the number of impulses evoked by a crossmodal combination of stimuli and the number evoked by the most effective of these stimuli individually” (“multisensory enhancement;” Stein & Stanford, 2008, p. 255). This principle has been derived from a large body of studies conducted on the activity of neurons in the superior colliculus (SC), a midbrain structure involved in orienting behaviors. The specificity of this structure lies in the fact that it receives unisensory inputs from vision, touch, and audition (Rowland & Stein, 2008; see Stein & Meredith, 1993, for a review). On the basis of the available neurophysiological evidence, it is known that the processes by which the inputs delivered by different sensory pathways (e.g., visual, auditory, and somatosensory) are integrated are strongly affected by the spatial attributes of stimulation (this is known as the “spatial rule of multisensory integration;” Rowland & Stein, 2008; Stein & Stanford, 2008). Multisensory neurons have multiple excitatory receptive fields (RFs), one for each modality they are responsive to. Interestingly, the RFs of different sensory modalities overlap spatially (i.e., they are in approximate spatial register). Because of this characteristic, if multisensory inputs converge in this overlapping area, as when they originate from the same (or at least proximal) spatial locations, they can sometimes result in an enhancement of the neuronal response. If, on the other hand, the stimuli derive from spatially disparate locations, one of the stimuli may well fall within the inhibitory region of the neuron, thus determining a response depression. 
Moreover, multisensory enhancement is typically inversely related to the effectiveness of the single signals to be merged (this is known as the “law of inverse effectiveness;” Rowland & Stein, 2008; Stein & Stanford, 2008).
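The operational definition quoted above compares the crossmodal response with the most effective unisensory response. A minimal sketch of one common way to express that comparison as a percentage is given below. The function name and the impulse counts are hypothetical, chosen only to illustrate the arithmetic, and the index is purely descriptive: it does not perform the significance test that the definition requires.

```python
def multisensory_enhancement(crossmodal, unisensory):
    """Percent change of the crossmodal response relative to the most
    effective unisensory response (all values: mean impulses per trial).

    Positive values indicate response enhancement, negative values
    response depression.
    """
    best = max(unisensory)
    if best <= 0:
        raise ValueError("the best unisensory response must be positive")
    return 100.0 * (crossmodal - best) / best


# Hypothetical mean impulse counts (not taken from any study).
# Weakly effective unisensory stimuli: large proportional gain.
weak = multisensory_enhancement(crossmodal=6.0, unisensory=[2.0, 1.0])
# Highly effective unisensory stimuli: small proportional gain.
strong = multisensory_enhancement(crossmodal=24.0, unisensory=[20.0, 12.0])

print(weak, strong)  # 200.0 20.0
```

With these made-up numbers, the weakly effective pair yields the larger proportional gain, which is the pattern described by the law of inverse effectiveness.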

Particularly interesting in the present context, however, is the so-called “temporal rule of multisensory integration” (e.g., Calvert et al., 2004; Stein & Meredith, 1993; Stein & Stanford, 2008). Namely, only stimuli that occur in close temporal register (and hence that likely originate from the same event) result in response enhancement (i.e., evoke a number of impulses that is significantly higher than the number evoked by the most effective of these stimuli when presented individually). Typically, the window of temporal tuning of multisensory neurons is a few tens to hundreds of milliseconds wide, with an optimal integration window estimated at approximately 250 ms (Meredith, Nemitz, & Stein, 1987). By contrast, stimuli that are separated in time induce responses that are merely comparable to those evoked by unisensory stimuli (e.g., Beauchamp, 2005; Meredith et al., 1987; see also Kayser & Logothetis, 2007; van Wassenhove, 2009).

Along with the spatial rule and the law of inverse effectiveness, the temporal rule represents a core principle of neural sensory integration and may suggest a functional link between neuronal activity and the behavioral benefits of multisensory integration (see Holmes & Spence, 2005; Stein & Meredith, 1993; though see Holmes, 2007, 2009). Indeed, it has recently been demonstrated that multisensory integration yields a shortening of the latency between the stimulus arrival and the response elicited in an SC neuron (Rowland, Quessy, Stanford, & Stein, 2007). This very early effect parallels the so-called “initial response enhancement,” according to which the response enhancement is largest at the beginning of the response (Rowland & Stein, 2008). Both of these neuronal effects trigger and speed up the process by which crossmodal sources of information are integrated as soon as the inputs reach the SC. This process results in faster behavioral responses to multisensory events as compared with those evoked by unisensory events (Rowland & Stein, 2008). The links between the neuronal activity and its behavioral effects that have emerged in temporal perception tasks will be discussed more extensively in the sections that follow.

Temporal resolution and temporal order

To the best of our knowledge, one of the first attempts to assess temporal perception within hearing and touch dates back to the 1960s, when Gescheider (1966, 1967a, 1970) measured auditory and tactile temporal resolution (see also von Békésy, 1959). In a series of studies, Gescheider (1966, 1967a, 1970) demonstrated that the skin and ear differed greatly in terms of their ability to resolve successive stimuli (i.e., the temporal resolution thresholds for pairs of brief stimuli presented in rapid succession were found to be 5–10 times higher for cutaneous stimulation than for auditory stimulation). For instance, two stimuli of equal subjective intensity were perceived as being temporally discrete if they were separated by ~2 ms for monaural and binaural stimulation, but by ~10–12 ms for cutaneous stimulation (Gescheider, 1966, 1967a). Moreover, pairs of auditory stimuli separated by less than 30 ms were perceived as being more disparate in time than pairs of cutaneous stimuli separated by the same temporal interval (Gescheider, 1970). However, when intervals greater than 30 ms were used, pairs of events in both modalities were perceived as equally separated (Gescheider, 1967b).

While Gescheider (1967a, 1967b) compared the temporal perception of auditory and tactile stimuli by testing them separately, Hirsh and Sherrick (1961) conducted the very first study to compare people’s ability to judge the temporal features of stimuli presented either within or across different pairs of sensory modalities. They used the temporal order judgment (TOJ) paradigm, in which participants are presented with pairs of stimuli at various different stimulus onset asynchronies (SOAs) and have to judge which stimulus appeared first. It should, however, be noted that the issue explored by Hirsh and Sherrick in their study differs slightly from the one investigated in Gescheider’s studies (1967a, 1967b). Indeed, the investigation of the perception of simultaneity or of temporal order likely activates different neuronal mechanisms, whose involvement is traditionally measured through distinct psychophysical estimates (cf. Van Eijk, Kohlrausch, Juola, & van de Par, 2010; van Wassenhove, 2009; Wackermann, 2007). The “fusion threshold” is defined as the frequency (expressed in Hz) at which observers perceive multiple events to be steady, the “simultaneity threshold” is the time interval required for two events to be correctly perceived as successive or simultaneous in time, and the “temporal order threshold” is the amount of time required for two events to be correctly ordered in time.

By measuring the just noticeable differences (JNDs, traditionally defined as the smallest temporal interval at which people can accurately discriminate the temporal order of the stimuli on 75% of the trials), Hirsh and Sherrick (1961) surprisingly found that the temporal separation required to correctly judge the temporal order was approximately 20 ms, in both unimodal (i.e., tactile, auditory, or visual; Experiments 1–3) and multisensory (e.g., audiotactile, audiovisual, and visuotactile; Experiment 4) conditions. Hirsh and Sherrick stated that:

“[W]hereas the time between successive stimuli that is necessary for the stimuli to be perceived as successive rather than simultaneous may depend upon the particular sense modality employed, the temporal separation that is required for the judgment of perceived temporal order is much longer and is independent of the sense modality employed.” (p. 432)

As we will see, many subsequent studies have provided evidence that has turned out to be fundamentally inconsistent with these claims (see, e.g., Fujisaki & Nishida, 2009; Spence, Baddeley, Zampini, James, & Shore, 2003; Zampini et al., 2005; Zampini, Shore, & Spence, 2003a, 2003b; see Table 1).
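The JND measure used in TOJ studies such as these can be illustrated with a short sketch. It assumes that the proportion of "stimulus X first" responses follows a cumulative Gaussian function of SOA, and it estimates that function by a simple probit regression. The function name `fit_toj`, the fitting method, and the synthetic data are illustrative assumptions, not the procedure of any of the studies cited. Under this assumption, the 75% JND equals the standard deviation of the fitted Gaussian multiplied by about 0.674.

```python
from statistics import NormalDist


def fit_toj(soas_ms, p_first):
    """Fit a cumulative Gaussian to TOJ data by probit regression.

    soas_ms: stimulus onset asynchronies in ms.
    p_first: proportion of trials on which one fixed stimulus was
             reported first (each value strictly between 0 and 1).
    Returns (pss_ms, jnd_ms): the point of subjective simultaneity
    and the 75% just noticeable difference.
    """
    std_normal = NormalDist()
    # The probit transform turns a cumulative Gaussian into a straight line.
    z = [std_normal.inv_cdf(p) for p in p_first]
    n = len(soas_ms)
    mean_x = sum(soas_ms) / n
    mean_z = sum(z) / n
    sxx = sum((x - mean_x) ** 2 for x in soas_ms)
    sxz = sum((x - mean_x) * (zi - mean_z) for x, zi in zip(soas_ms, z))
    slope = sxz / sxx                  # equals 1 / sigma
    intercept = mean_z - slope * mean_x
    sigma = 1.0 / slope
    pss = -intercept / slope           # SOA at which p = 0.5
    jnd = std_normal.inv_cdf(0.75) * sigma
    return pss, jnd


# Synthetic, noise-free data from a hypothetical observer
# (PSS = 10 ms, sigma = 40 ms); not data from any study.
observer = NormalDist(mu=10.0, sigma=40.0)
soas = [-90, -60, -30, 0, 30, 60, 90]
props = [observer.cdf(s) for s in soas]

pss, jnd = fit_toj(soas, props)
print(round(pss, 2), round(jnd, 2))  # 10.0 26.98
```

Real data sets contain proportions of exactly 0 or 1 and unequal trial counts, for which a maximum-likelihood fit would be the more appropriate choice; the regression here is kept only because it needs nothing beyond the standard library.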

For instance, in one recent study, Fujisaki and Nishida (2009) addressed the question of whether people’s temporal perception differs as a function of the stimulus modality pairing under investigation. In particular, the authors studied whether there is any difference in terms of the temporal resolution of audiotactile, audiovisual, and visuotactile combinations of stimuli made of either single pulses (whose frequency changed from 1.4 to 26.7 Hz) or else repetitive-pulse trains (whose frequency changed between 6.25 and 356.25 Hz). In their experiment, they used a set of paradigms, traditionally used to assess temporal perception, such as a synchrony–asynchrony discrimination task, a simultaneity judgment task (SJ), and a TOJ task. In the synchrony–asynchrony judgment task, participants were presented with stimulus pairs having only one of two magnitudes of asynchrony—0 ms (synchrony) and X ms (asynchrony)—within a single block. The participants had to discriminate between the two alternatives. They were provided with trial-by-trial feedback regarding the accuracy of their responses. In the SJ task, pairs of crossmodal stimuli were presented, at a range of different stimulus onset asynchronies (SOAs), using the method of constant stimuli, and the participants had to judge whether the stimuli were presented simultaneously or successively. In the SJ task, the judgments are closely dependent on the criterion adopted by the participants (i.e., their subjective “simultaneous” category), whereas in the synchrony–asynchrony task, they might decide to adjust their judgments in light of the feedback received.

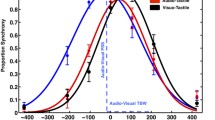

The results of the synchrony–asynchrony judgment and SJ tasks, and, to a lesser extent, of the TOJ task, consistently showed that the temporal resolution of synchrony perception was significantly better for the audiotactile stimulus pairing than for either the audiovisual or visuotactile stimulus pairing. Interestingly, by applying their analysis of the data to those reported by Hirsh and Sherrick (1961), Fujisaki and Nishida (2009) found an analogous pattern of results, with the audiotactile stimulus pairing being processed with a higher degree of temporal resolution as compared with the other sensory pairings. Moreover, the fact that the superior temporal resolution reported for the audiotactile stimulus pairing in the TOJ task is smaller than in the other two tasks suggests a partial dissociation of the perception of temporal order from the perception of simultaneity (see Wackermann, 2007). Furthermore, the threshold required to discriminate synchrony from asynchrony is lower for single pulses than for repetitive-pulse trains. This was found regardless of the stimulus combination used, possibly suggesting that both single-pulse and repetitive-pulse thresholds for the different stimulus combinations may be coded by a common mechanism governing temporal resolution. If we assume that the threshold for single pulses indicates the width of the window of simultaneity reflecting the temporal precision of the temporal matching process, then the higher threshold observed for repetitive-pulse trains could be suggestive of an increased risk of false matching by the participants in this condition (Fig. 2).

Simultaneity judgments (SJs) and temporal order judgments (TOJs) for (a) audiovisual (AV), (b) visuotactile (VT), and (c) audiotactile (AT) stimulus pairs. Smooth curves represent the Gaussian function for the SJ data and the best cumulative Gaussian function for the TOJ data (modified from Fujisaki & Nishida, 2009, Fig. 4). AT has a narrower range of simultaneous responses for SJ, and a steeper slope of the psychometric function for TOJ, as compared to both VT and AV stimulus pairings. Moreover, although both tasks are affected by stimulus combination, this effect is less pronounced in TOJ (vs. SJ) judgments (see main text for further details)

The fact that audiotactile processing has a higher temporal resolution than that of the other stimulus modality pairings can be ascribed, according to Fujisaki and Nishida (2009), to two different explanations, which are by no means necessarily mutually exclusive. The first explanation takes into account the difference in temporal resolution that exists between the various senses. Since vision is known to have a lower temporal resolution than either audition or touch (Welch & Warren, 1980), whenever this sensory modality is involved, performance deteriorates. The alternative explanation takes into account the independent channels model proposed by Sternberg and Knoll (1973). According to their model, the perceived order of two stimuli is determined by evaluating the timing at which stimuli arrive at a central decision mechanism, or “comparator.” Fujisaki and Nishida (2009) argue that the higher temporal resolution for audiotactile stimuli reflects the more rapid operation of this comparator for audiotactile pairs of signals. The supposed higher degree of similarity in the temporal profile of the auditory and tactile inputs could possibly induce a facilitation of the comparison of their temporal characteristics as compared with when matching between stimuli presented to other sensory modalities (see also Cappe, Morel, Barone, & Rouiller, 2009; Hackett et al., 2007; Ley, Haggard, & Yarrow, 2009; Wang, Lu, Bendor, & Bartlett, 2008).
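The comparator idea described above can be made concrete with a small simulation. The sketch below rests on several assumptions that go beyond the text: each signal reaches the comparator after an independent, normally distributed latency, and the comparator deterministically reports whichever signal arrives first. The latency values are hypothetical, and the sketch does not model the speed of the comparator itself.

```python
import random
from statistics import NormalDist


def p_sound_first_analytic(soa_ms, lat_a, lat_t):
    """Probability that the sound reaches the comparator before the touch.

    soa_ms: touch onset minus sound onset (positive = sound leads).
    lat_a, lat_t: NormalDist objects for auditory and tactile latencies.
    """
    # The difference of two independent Gaussian latencies is Gaussian.
    diff = NormalDist(
        lat_a.mean - lat_t.mean,
        (lat_a.variance + lat_t.variance) ** 0.5,
    )
    # The sound arrives first when (auditory - tactile latency) < SOA.
    return diff.cdf(soa_ms)


def p_sound_first_simulated(soa_ms, lat_a, lat_t, n_trials=20000, seed=0):
    """Monte Carlo estimate of the same probability."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_trials):
        arrival_a = rng.gauss(lat_a.mean, lat_a.stdev)
        arrival_t = soa_ms + rng.gauss(lat_t.mean, lat_t.stdev)
        hits += arrival_a < arrival_t
    return hits / n_trials


# Hypothetical latency distributions in ms (illustrative values only).
LAT_A = NormalDist(mu=40.0, sigma=10.0)
LAT_T = NormalDist(mu=50.0, sigma=15.0)

for soa in (-60, -30, 0, 30, 60):
    analytic = p_sound_first_analytic(soa, LAT_A, LAT_T)
    simulated = p_sound_first_simulated(soa, LAT_A, LAT_T)
    print(soa, round(analytic, 3), round(simulated, 3))
```

In this simplified version, the slope of the resulting psychometric function depends only on the combined variability of the two arrival latencies, so less variable latencies produce a steeper function and a smaller JND.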

Moreover, Fujisaki and Nishida’s (2009) study covers the issue of the relationship between synchrony discrimination and temporal order discrimination (cf. Fujisaki & Nishida, 2010). The link between these two kinds of perception is well described by Wackermann (2007):

Temporal experience is primarily experience of succession. The relations of temporal order between events, “A occurs before B,” or “A occurs after B,” constitute the most elementary form of temporal judgment, preceding a metrical concept of time scale. Our notion of time as a perfectly ordered universe of events implies that any two events, A and B, are comparable as to their temporal order. If A occurs neither before B nor after B, then the events A and B are simultaneous. (p. 22)

This definition suggests that the processing of synchrony and temporal order between sensory stimuli are highly related mechanisms. However, the minimum temporal interval necessary for two stimuli to be perceived as nonsimultaneous (defined as the “fusion threshold;” Exner, 1875) does not coincide with the time interval required to indicate their relative order (what is known as the “order threshold”). This fact has been taken to suggest the existence of multiple brain mechanisms for two different aspects of temporal discrimination (i.e., one for the integration of a unitary percept, another for the determination of succession between different percepts; Hirsh & Fraisse, 1964; Piéron, 1952; van Wassenhove, 2009; see also the section Temporal synchrony and temporal recalibration).

The issue of a centralized versus distributed timing mechanism has been elegantly explored in a recent study by Fujisaki and Nishida (2010). The authors investigated whether the binding of synchronous attributes is specific to each attribute/sensory combination, or whether instead it occurs at a more central level. By using a psychophysical approach, they measured the processing speed of the judgments of the temporal relationship between two sequences of stimuli (i.e., cross-attribute phase judgments), either within single modalities or crossmodally. The rationale was that, in those cases in which the speed of binding is high and varies as a function of different attribute combinations, the underlying mechanisms are likely to be peripheral and attribute specific. By contrast, a low and invariant binding speed is expected when considering a shared underlying mechanism. In Fujisaki and Nishida’s (2010) study, participants had to perform a binding task and a synchrony–asynchrony discrimination task. In the first task, two sequences of stimuli were presented, each consisting of the repetitive alternation of two attributes (e.g., in the audiotactile condition, high- or low-pitched sounds were presented together with vibrations to the right or left index finger). The alternations always occurred synchronously between the two sequences, but the feature pairing varied as a function of the in-phase/reversed-phase conditions. The participants had to judge which features were presented simultaneously (e.g., whether the pitch was high or low when the right finger was vibrated). In the synchrony–asynchrony discrimination task, each stimulus sequence contained pulses at a given repetition rate, and the participants had to judge whether the pulses of the two sequences were presented synchronously or asynchronously. 
The results demonstrated that, whereas the temporal limit on cross-attribute binding was very low (2–3 Hz) and similar for all sensory modality combinations, the synchrony limit varied across the modality combinations (i.e., 4–5 Hz for audiovisual and visuotactile conditions, 7–9 Hz in the audiotactile condition).

Taken together, these results therefore suggest that crossmodal temporal binding and synchrony judgments are governed by different underlying neural mechanisms, with the first process being mediated by a central and amodal mechanism, whereas the perception of crossmodal synchrony appears to be mediated by a peripheral mechanism specific for each attribute combination (Weiss & Scharlau, 2011). However, according to Fujisaki and Nishida (2010), synchrony perception is also centrally represented, as demonstrated by the fact that the temporal limits of crossmodal synchrony perception are still much lower than the limits observed in the individual sensory modalities (cf. Fujisaki & Nishida, 2005). Moreover, synchrony perception is only slightly affected by the attribute combination as long as the modality combination does not change. Therefore, the authors conjectured that both the capability to accurately extract salient changes in time in each sensory channel and the capability to compare them across sensory modalities contribute to improving synchrony judgments. In the binding task, beyond the capability to process the “when” dimension, the capability to judge which combination of stimulus attributes is presented at the same time (the “what” dimension) is also important (see Renier et al., 2009; Yau, Olenczak, Dammann, & Bensmaia, 2009). Thus, the crossmodal temporal binding task and the synchrony judgment task would tap into different processes in the perception of an event, which would explain the discrepancy in the performance observed in the two tasks. However, as pointed out by the authors themselves, the reason why the temporal limit should settle around 2–3 Hz in all of the conditions is unclear, and clarifying it may require a precise investigation of the timing of high-level sensory processing (Fujisaki & Nishida, 2010).

Spatial effects on the perception of temporal order

An additional aspect to emerge from the literature on TOJ tasks is that audiotactile TOJs seem to be unaffected by the spatial disparity between the stimuli being judged. In a series of experiments, Zampini and his colleagues (Zampini et al., 2005) had participants perform a TOJ task on pairs of stimuli, one tactile and the other auditory, presented at varying SOAs. The stimuli could be presented either from the same spatial location (i.e., both on the right or the left side of the participant’s body midline) or from different locations (i.e., one on the right and the other on the left side of the body midline). The results revealed that, contrary to what had been observed previously for audiovisual and visuotactile modality pairings (Spence et al., 2003; Zampini et al., 2003a, 2003b), the audiotactile version of the TOJ task was unaffected by whether the stimuli were presented from the same or different locations (sides). In previous studies, participants were found to be more sensitive (i.e., the results revealed smaller JNDs; Spence et al., 2003; Zampini et al., 2003a, 2003b) when the stimuli in the two modalities were presented from different spatial positions rather than from the same position (Fig. 3).

Just noticeable differences (JNDs) for the audiotactile stimulus pairs presented in Experiments 1–3 of Zampini et al.’s (2005) study compared with pairs of visuotactile stimuli (Spence et al., 2003, Experiment 1), and with audiovisual stimulus pairs (Zampini et al., 2003a, Experiment 1) in crossmodal TOJ studies. The error bars represent the within-observer standard errors of the means. The presentation of audiotactile stimulus pairs from different positions did not facilitate participants’ performance. By contrast, when visuotactile or audiovisual stimuli were presented from different positions (e.g., sides), performance was significantly better (i.e., the JND was smaller) than when the stimuli were presented from the same position (indicated by asterisks; modified from Zampini et al., 2005, Fig. 2)

The null effect of relative spatial position reported by Zampini et al. (2005) suggests that the audiotactile stimulus pairing may be somehow “less spatial” than the other multisensory pairings involving vision as one of the sensory modalities. These data add to previous research documenting a reduced magnitude of spatial interaction effects for this particular pair of modalities, as compared with the audiovisual and visuotactile pairings, possibly suggesting a finer spatial resolution of the visual system than of the auditory and tactile systems (e.g., Eimer, 2004; Gondan, Niederhaus, Rösler, & Röder, 2005; Lloyd, Merat, McGlone, & Spence, 2003; Murray et al., 2005).

Subsequent studies have, however, partially undermined this conclusion, demonstrating instead that the spatial arrangement of the stimuli, and the portion of space stimulated, can differentially affect the nature of the audiotactile interactions that may be observed (Kitagawa, Zampini, & Spence, 2005; Occelli, Spence, & Zampini, 2008). In Kitagawa et al.’s (2005, Experiment 1) audiotactile TOJ experiment, the participants had to judge the temporal order of pairs of auditory and tactile stimuli presented from the left and/or right of fixation at varying SOAs and report which modality had been presented first on each trial. The auditory stimuli were presented from loudspeaker cones, whereas the tactile stimuli were delivered via electrotactile stimulators attached to the participants’ earlobes. The results highlighted higher sensitivity (i.e., a lower JND) for stimuli presented from different sides rather than from the same side (i.e., 55 vs. 64 ms).

The discrepancy between the results reported in audiotactile TOJ tasks for stimuli presented from the back and from frontal space (Kitagawa et al., 2005; Zampini et al., 2005) can be explained by taking into account the crucial role of vision in the processing of spatial information in frontal space (Eimer, 2004). That is, audiotactile interactions may be somewhat less “spatial” than other multisensory interactions involving vision as one of the component sensory modalities (e.g., think of audiovisual and visuotactile stimulus pairings; see Spence et al., 2003; Zampini et al., 2003a, 2003b), or when audiotactile stimuli are presented at locations in which visual cues are normally available. By contrast, the lack of visual cues, just as in Kitagawa et al.’s study, may have contributed to a better coding of auditory and tactile spatial cues, which, in turn, could have induced benefits in the processing of their temporal features.

This explanation has recently received further support from another study by Occelli et al. (2008). There, the potential modulatory effect of relative spatial position on audiotactile TOJs was examined as a function of the visual experience of the participants using the paradigm developed by Zampini et al. (2005). The results of Occelli et al.’s study demonstrated that although the performance of the sighted (blindfolded) participants was unaffected by whether or not the two stimuli were presented from the same spatial location, thus replicating Zampini et al.’s earlier findings, the blind participants (regardless of the age of onset of their blindness) were significantly more accurate when the auditory and tactile stimuli were presented from different spatial positions rather than from the same position (see Table 1). Thus, the relative spatial position from which the stimuli were presented had a selective effect on the performance of the blind, but not on the performance of the sighted participants. This pattern of results suggests that the exclusive reliance on those sensory modalities that are typically considered less adequate for conveying spatial information (see Welch & Warren, 1980) failed to induce any advantage in terms of the performance of the blindfolded sighted participants. On the contrary, visual deprivation results in an enhancement of the ability to use the spatial cues available in the intact residual senses (e.g., hearing and touch; see also Collignon, Renier, Bruyer, Tranduy, & Veraart, 2006; Röder, Rösler, & Spence, 2004; Röder et al., 1999). Taken together, these data therefore reveal that the absence of vision (Occelli et al., 2008) or of visual information, as for stimulation occurring behind a participant’s head (Farnè & Làdavas, 2002; Kitagawa et al., 2005), seems to be related to more prevalent audiotactile spatial interactions than those occurring in frontal space (Zampini et al., 2005). 
Moreover, the processing of the spatial cues within touch and audition is improved by presenting the stimuli from that portion of space in which visual cues are typically unavailable (Kitagawa et al., 2005; Experiment 1) or absent as a result of blindness (e.g., Collignon et al., 2006; Occelli et al., 2008; Röder et al., 2004; Röder & Rösler, 2004).

Temporal synchrony and temporal recalibration

As was already highlighted (see the section Research on Hearing and Touch: A Multisensory Perspective), multisensory integration can take place between stimuli that are not temporally coincident, but which fall within the “temporal window” of integration (Meredith et al., 1987; see also Spence, in press), thus indicating that the merging of information from different modalities can overcome the differences of the senses in terms of conduction speeds, response latencies, and neural processing times (e.g., Lestienne, 2001; Nicolas, 1997; Vroomen & Keetels, 2010). Even though its extent is still a matter of some debate (e.g., Vatakis & Spence, 2010; Vroomen & Keetels, 2010), the existence of a window of temporal tolerance has not only empirical but also theoretical implications (e.g., Pöppel, 2009; van Wassenhove, 2009). Indeed, it implies that the concept of “temporal coincidence” (or “time point;” von Baer, 1864) in perception cannot be experienced in real life, but is rather a construct coinciding not with a specific point in time, but with a window of time. It follows from this that two stimuli falling within this temporal window are likely to be bound together into a single multisensory percept (see also Pöppel, Schill, & von Steinbüchel, 1990). Next, those studies that have investigated the temporal window of integration between auditory and tactile signals will be reviewed.

One series of experiments has investigated people’s perceptual sensitivity to simultaneity between haptic and auditory events and whether this would be significantly affected by the physical characteristics of the stimuli that were presented. To address this question, realistic stimulation conditions (such as a hammer hitting a surface or a drum being tapped) were followed by their auditory consequences, and were either executed (Adelstein, Begault, Anderson, & Wenzel, 2003) or filmed (Levitin, MacLean, Mathews, & Chu, 1999). Despite the high between-participants performance variability (see also Begault, Adelstein, McClain, & Anderson, 2005), in both cases, the performance metrics calculated on the basis of the SJ data were significantly different from zero. It can be noted, however, that the values reported in these studies are much smaller than the 80 ms reported in Zampini et al.’s (2005) audiotactile TOJ study. Thus, even though the optimal impression of simultaneity for auditory and tactile stimuli is not perceived when the two stimuli are presented synchronously, it would seem that the conditions of stimulation have an effect in modulating the perceived relative temporal relationship between the stimuli, as measured by JNDs in synchrony/asynchrony tasks (see also Fink, Ulbrich, Churan, & Wittmann, 2006; Vroomen & Keetels, 2010, for other factors affecting the perception of intersensory synchrony). In particular, the use of ecological stimuli, such as those used in the studies of Adelstein et al. and Levitin et al., raises the question of how causality influences multisensory integration. On the basis of a number of recent audiovisual studies, it is known that when the stimulus presented in one modality in some sense predicts the stimulus in the other, multisensory integration is often enhanced (see Mitterer & Jesse, 2010; Schutz & Kubovy, 2009; Vroomen & Stekelenberg, 2010).
A similar effect may have affected audiotactile integration in the previously described studies (Adelstein et al., 2003; Levitin et al., 1999), with possibly boosted consequences (i.e., higher tendency to merge multisensory inputs) where visual cues were involved, as in Levitin et al.’s study.

Closely related to the studies just described are those that have assessed the mechanisms of temporal recalibration/adaptation between auditory and tactile stimuli (Hanson, Heron, & Whitaker, 2008; Harrar & Harris, 2008; Levitin et al., 1999; Navarra, Soto-Faraco, & Spence, 2007; Virsu, Oksanen-Hennah, Vedenpää, Jaatinen, & Lahti-Nuuttila, 2008). It has been observed that inputs from different sensory modalities that refer to the same external event (or occur at the same time) will likely reach the cortex at different times, due to differences in the speed of transmission of the signals through different sensory systems (King, 2005; Macefield, Gandevia, & Burke, 1989; Schroeder & Foxe, 2004, 2005; Spence, Shore, & Klein, 2001; Spence & Squire, 2003). It follows from this observation that our perceptual systems need to be able to accommodate a certain degree of asynchrony between the information arriving through different channels.

In the literature on crossmodal integration, it has been demonstrated that the point of subjective simultaneity (PSS; “amount of time by which one stimulus has to precede or follow the other in order for the two stimuli to be perceived as simultaneous;” Spence & Parise, 2010, p. 365) measure can be significantly affected by adaptation to asynchrony (see Vroomen & Keetels, 2010, for a review). This process is typically assessed by measuring participants’ perceptions of crossmodal simultaneity both before and after exposure to a constant temporal discrepancy between the stimuli that happen to be presented in the two modalities. During the exposure phase of such studies, the perception of asynchronous stimuli should be progressively realigned. As a consequence, the perception of simultaneity changes such that, after exposure, the impression of asynchrony is reduced. There are two candidate mechanisms for the process of temporal recalibration: (a) the realignment of sensory neural signals in time, with the processing of one of the sensory modalities shifting in time toward the other; and (b) the widening of the temporal window for multisensory integration (see Vroomen & Keetels, 2010).
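As a rough illustration of how a PSS (and the JND referred to throughout this section) can be derived from TOJ data, the following Python sketch fits a cumulative Gaussian by z-transforming the response proportions and regressing them on SOA. The function name `fit_toj`, the clipping bounds, and the example SOAs and proportions are illustrative assumptions and are not taken from any of the studies reviewed here. The JND is taken as half the interval between the 25% and 75% points of the fitted curve, which is one common convention.

```python
from statistics import NormalDist


def fit_toj(soas_ms, p_first):
    """Estimate PSS and JND from temporal order judgment data.

    soas_ms: stimulus onset asynchronies in ms (sign convention is up to the user).
    p_first: proportion of "second modality first" responses at each SOA.
    Hypothetical helper: a probit-style fit via least squares on z-scores.
    """
    std_normal = NormalDist()
    # Clip proportions away from 0 and 1, where the z-transform is undefined.
    z = [std_normal.inv_cdf(min(max(p, 0.01), 0.99)) for p in p_first]

    n = len(soas_ms)
    mean_x = sum(soas_ms) / n
    mean_z = sum(z) / n
    sxx = sum((x - mean_x) ** 2 for x in soas_ms)
    sxz = sum((x - mean_x) * (zi - mean_z) for x, zi in zip(soas_ms, z))

    slope = sxz / sxx                 # equals 1 / sigma of the fitted Gaussian
    intercept = mean_z - slope * mean_x

    pss = -intercept / slope          # SOA at which both orders are equally likely
    sigma = 1.0 / slope
    jnd = std_normal.inv_cdf(0.75) * sigma  # half the 25%-75% interval
    return pss, jnd


# Synthetic, made-up observer with a 10 ms bias and 50 ms spread (not real data).
example_soas = [-90, -60, -30, 0, 30, 60, 90]
example_observer = NormalDist(mu=10, sigma=50)
example_props = [example_observer.cdf(s) for s in example_soas]
pss_ms, jnd_ms = fit_toj(example_soas, example_props)
print(f"PSS = {pss_ms:.1f} ms, JND = {jnd_ms:.1f} ms")
```

Under this description, a recalibration that realigns the two signals would appear as a shift in the PSS between the pre- and postexposure fits, whereas a widening of the temporal window would appear as an increase in the JND.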

In their study, Navarra et al. (2007) investigated whether exposure to audiotactile asynchrony would induce temporal recalibration between the processing of auditory and tactile stimuli. The participants in their study had to perform an audiotactile TOJ task both before and after an exposure phase in which paired auditory and vibrotactile stimuli could be presented either simultaneously or with the sound leading the vibration by 75 ms. In the exposure phase of the experiment, in order to ensure that participants attended to both auditory and tactile stimuli, they had to perform a control task involving the detection of stimuli that were longer than the standards. Navarra et al.’s results highlighted the fact that exposure to audiotactile asynchrony induced a temporal adaptation aftereffect that influenced the temporal processing of the subsequently presented auditory and tactile stimuli. More precisely, the minimal interval necessary to correctly judge the temporal order of the stimuli was larger after exposure to the desynchronized trains of stimuli (JND = 48 ms) than after exposure to the synchronous stimulus trains (JND = 36 ms), whereas no differences were observed in the PSS (i.e., 11 vs. 5 ms). This result differs from the temporal adaptation process taking place between visual and auditory stimuli, as assessed using similar experimental methods (e.g., Fujisaki, Shimojo, Kashino, & Nishida, 2004; Vroomen, Keetels, de Gelder, & Bertelson, 2004). It thus seems that the audiotactile temporal window is flexible and can be widened in order to compensate for the asynchronies occurring between these stimuli, unlike in the audiovisual case, in which a temporal realignment process seems to take place (cf. Fujisaki et al., 2004). Navarra et al. suggested that the discrepancy between the results could be explained by considering the rare occurrence and the small magnitude of the asynchronies occurring between hearing and touch experienced in everyday life.
According to this speculation, the widening of the temporal window of multisensory integration could be considered as a nonspecific mechanism that allows for the integration of—infrequently-experienced—audiotactile stimuli presented in close temporal proximity, and the temporal realignment as a more specific compensatory mechanism, suitable for coping with the relatively large asynchronies that may occur between visual and auditory stimuli (Navarra et al., 2007).

Contrasting results have, however, been reported recently. Harrar and Harris (2008) compared the changes in the perception of simultaneity for three different combinations of stimulus modality (i.e., audiotactile, audiovisual, and visuotactile) as a function of the exposure to asynchronous stimulus pairs, which were presented in each of the three stimulus combinations. In contrast with Navarra et al.’s (2007) results, no temporal adaptation (i.e., neither a change of the JND nor of the PSS) was observed for the audiotactile pairings following exposure to any of the three stimulus combinations. According to Harrar and Harris, this discrepancy could be attributed to methodological differences. Specifically, in their study, the tactile (not the auditory, as in Navarra et al.’s [2007] study) stimulus led within the asynchronous pairs. Moreover, the exposure sequences differed not only in terms of their duration, but also in terms of the task that participants had to perform to maintain their attention focused. Further research could therefore help to clarify whether these factors may have contributed to the conflicting results obtained in these two studies (Fig. 4).

Average cumulative Gaussian curves before and after exposure to time-staggered bimodal stimulus pairs. The solid vertical line and filled black symbols represent responses in the preexposure phase. The dotted vertical line and open symbols are postexposure. The three columns are arranged according to the stimulus pair used in the exposure phase: sound/light (light leading by 100 ms), sound/touch (touch leading by 100 ms), and light/touch (touch leading by 100 ms), respectively. The rows are arranged according to the stimulus pairs tested: sound/light (positive means “light first”), sound/touch (positive means “touch first”), light/touch (positive means “touch first”). The three shaded graphs are the combinations involving auditory and tactile stimuli (modified from Harrar & Harris, 2008, Fig. 2)

Another interesting attempt to explore the crossmodal nature of the temporal recalibration process was reported recently by Di Luca, Machulla, and Ernst (2009). In their study, the authors investigated whether, and to what extent, the audiovisual temporal recalibration effect transfers to the perception of simultaneity for visuotactile and audiotactile stimulus pairs. The interesting result to emerge from this experiment was that the transfer of audiovisual recalibration of simultaneity to the other pairings of stimulus modalities was dependent on the location from which the stimuli happened to be presented. Specifically, when the stimuli were presented from the same spatial location in front of participants, the audiovisual recalibration effect transferred to visuotactile stimulus pairs (and not to the audiotactile pairings). By contrast, when the auditory stimuli were presented over headphones instead (i.e., when the auditory stimuli were not colocated with the visual and tactile stimuli), the audiovisual temporal recalibration effect transferred to the audiotactile stimulus pairs (and not to the visuotactile pairings). The fact that audiovisual temporal recalibration differently affected the other two sensory pairings could be due, at least according to Di Luca et al., to the different spatial arrangement of the stimuli. More precisely, in the colocation condition, a change in the perceptual latency (and in the reaction time; RT) of the visual stimuli was observed (see Fig. 5a), whereas in the different location condition, a change in the perceptual latency (and in RT) of the auditory stimuli (see Fig. 5b) was observed instead (cf. Sternberg & Knoll, 1973).

PSS obtained for the three stimulus pairs (i.e., AV audiovisual, AT audiotactile, VT visuotactile) when the auditory stimuli were presented without headphones (a; Experiment 1) or via headphones (b; Experiment 2). Error bars represent the standard error of the mean across participants. Significant effects are indicated by an asterisk (p < .05; modified from Di Luca et al., 2009, Figs. 5 and 6)

Di Luca et al. (2009) argued that the repeated exposure to asynchronous audiovisual stimuli gave rise to the adjustment of the perceptual latency of, separately, the visual or the auditory stimulus. This, in turn, caused a recalibration of perceived simultaneity within the stimulus pairings which, as in Di Luca et al.’s study, were different from those composing the stimuli repeatedly presented during the exposure phase of their experiment. Moreover, the mode of presentation has been shown to affect which signal estimate is trusted more, and hence which signal undergoes a change of temporal latency during recalibration. In Di Luca et al.’s study, when the auditory stimuli were presented over headphones (which constitutes a nonfixed external stimulus source, since it moves when the head moves), the auditory estimate was more likely to be biased (and thus trusted less) than the visual estimate. As a consequence, in this condition, it is the auditory estimate that is temporally recalibrated toward the visual standard. This is seen as an increased transfer to the perception of audiotactile simultaneity as compared with the perception of visuotactile simultaneity. By contrast, when the auditory stimuli were spatially fixed, the visual estimate was more likely to be biased, thus giving rise to an opposite pattern of results (Di Luca et al., 2009).

The fact that temporal recalibration transfers across sensory modalities (see also Nagarajan, Blake, Wright, Byl, & Merzenich, 1998, for a study investigating the transfer of temporal rate discrimination from touch to audition) leads on to the crucial question of whether the extraction of temporal rate information should be considered as being coupled to a sensory modality or whether instead it is represented amodally (e.g., see Grondin, 2010; van Wassenhove, 2009; Wittmann, 2009). Despite the remarkable body of evidence accumulated on this topic, the large degree of inconsistency, as highlighted by the data reviewed here, seems to suggest that the exact nature of the temporal features that characterize audiotactile interactions is still unresolved, and thus is certainly worthy of further investigation.

Attention and temporal perception: the prior entry effect

A number of studies have addressed the question of whether temporal perception and, in particular, the impression of temporal simultaneity/successiveness can be influenced by the attentional focus of participants. One of the best-studied attentional effects on temporal perception is the phenomenon of “prior entry” (see Spence & Parise, 2010, for a recent review). According to the law of prior entry (Titchener, 1908), the attended stimuli (or modality) will be perceived sooner than when attention is focused elsewhere (or on another modality). This effect has traditionally been assessed by means of the aforementioned TOJ task, and is measured as a significant difference in the PSS between conditions in which one of the target stimuli is attended as compared with when the other stimulus is attended instead (or when attention is divided).

Studies investigating the audiotactile prior entry effect have demonstrated that the endogenous focusing of an observer’s attention on one sensory modality can effectively modulate the perceived temporal relationship between pairs of stimuli (Sternberg, Knoll, & Gates, 1971; Stone, 1926; see also Sternberg & Knoll, 1973; Van Damme, Gallace, Spence, Crombez, & Moseley, 2009). Moreover, the focusing of a person’s attention on a specific location or sensory modality, as well as toward a particular point in time, determines the relative speeding up in the processing of the auditory and tactile stimuli, as shown by Lange and Röder (2006). In their study, participants were presented with short (600 ms) and long (1,200 ms) empty intervals, marked by a tactile onset and an auditory or tactile offset marker (which could consist of a continuous stimulation, or of a stimulation with a gap), and, on a block-by-block basis, were asked to attend to one interval and to one sensory modality. The participants had to decide as quickly and as accurately as possible whether the offset marker was a single or a double stimulus. The behavioral and electrophysiological results of this study demonstrated that focusing attention on a particular point in time facilitated the processing of auditory and tactile stimuli. More specifically, participants responded more rapidly to stimuli at an attended point in time as compared with stimuli that were relatively less attended, irrespective of which modality was task relevant. Moreover, an enhancement of early negative deflections of the auditory and somatosensory event-related potentials (ERPs; for audition, 100–140 ms; for touch, 130–180 ms) was observed when audition or touch was task relevant, respectively.

These results therefore suggest that the allocation of attention along the temporal dimension can affect the early stages of sensory processing. More interestingly, these data also demonstrate that focusing attention on a particular point in time results in the more efficient processing of stimuli presented in both audition and touch. In contrast with the results obtained in a sustained attention task, such as that conducted by Lange and Röder (2006), no modulation of sensory processing by temporal attention has been detected in a visual temporal cuing paradigm (cf. Griffin, Miniussi, & Nobre, 2001; Miniussi, Wilding, Coull, & Nobre, 1999). Lange and Röder suggested that this discrepancy between the effects seen for different modalities could be attributed to the fact that a sustained attention paradigm, such as that used in their study, could have facilitated a more stable representation of the time interval to be attended to. In this regard, their paradigm differs from the conditions in which the to-be-attended time point changes on a trial-by-trial basis (as in cuing paradigms). This, in turn, could have favored the emergence of earlier effects of temporal attention observed in their study. According to an alternative account for these data, the temporal acuity of audition and touch is simply higher than that of vision (see also Fujisaki & Nishida, 2009).

Numerosity

The decision to review those studies that have investigated numerosity judgments in the present review is supported by the functional link that exists between time and numerosity processing, as first proposed by Meck and Church (1983). In a series of experiments, these researchers demonstrated that rats automatically process duration and number when these two dimensions covary in a sequence of events (i.e., rats were equally sensitive to a 4:1 ratio for both counts and durations, with the other dimension being controlled; see also Brannon, Suanda, & Libertus, 2007, for evidence in children), suggesting that there is a similarity between the processes underlying counting and timing. Interestingly, this effect, which was first demonstrated with auditory inputs, has now been generalized to the case of cutaneous signals. Meck and Church proposed the existence of a single shared representational mechanism (i.e., an “internal accumulator”) that supervises both the duration and the numerosity of the events (and, for some authors, their spatial location; see Cappelletti, Freeman, & Cipolotti, 2009; Walsh, 2003).

In a typical temporal numerosity judgment task, a sequence of stimuli (i.e., flashes, beeps, or taps) is presented, and the observer has to try and judge how many stimuli have been presented (e.g., see Cheatham & White, 1952, 1954; Taubman, 1950a, 1950b). The first study to have compared the ability of participants to perform the tactile, visual, and auditory temporal numerosity discrimination of trains of stimuli (consisting of two to nine pulses) presented from a single location at different rates (varying from three to eight pulses per second) was conducted by Lechelt (1975). His results demonstrated that there was a generalized tendency toward the underestimation of the number of pulses, and that errors in number assessment became more pronounced as the number of pulses and/or the rate of stimulus presentation increased. More interesting, in the context of the present review, was his finding that modality-specific differences were also observed. In all of the experimental conditions, the accuracy in the temporal numerosity judgment task was found to be much higher for audition than for either touch or vision.

A recent study investigated whether the combination of trains of stimuli presented simultaneously in more than one sensory modality could improve people’s temporal numerosity estimates (Philippi, van Erp, & Werkhoven, 2008). In contrast with other studies (e.g., Bresciani & Ernst, 2007; Bresciani et al., 2005; Hötting & Röder, 2004; Shams, Kamitani, & Shimojo, 2000) that explored whether there was any interfering effect between sequences of stimuli, the goal of Philippi et al.’s study was to explore whether the presentation of congruent sequences of stimuli would have a beneficial effect on participants’ temporal numerosity estimation judgments (see also Lee & Spence, 2008, 2009). The participants in this particular study were presented with sequences of stimuli (i.e., 2 to 10) at interstimulus intervals (ISIs) varying from 20 to 320 ms. The participants were instructed to use the multisensorially redundant information to their advantage when performing the task. Indeed, the results revealed that the degree of underestimation (which has been consistently found in previous unisensory temporal numerosity estimation judgment studies; e.g., Lechelt, 1975; White & Cheatham, 1959) and the variance in participants’ estimates were reduced as compared with those in the conditions of unisensory stimulus presentation. The results of Philippi et al.’s study confirmed that visual judgments were worse than the tactile judgments, and that these, in turn, were worse than the auditory judgments (see Lechelt, 1975; White & Cheatham, 1959). Overall, the results showed that the underestimation decreased for smaller ISIs (i.e., 20 and 40 ms) in the multisensory as compared with the unisensory presentation conditions.
The authors explained this result by considering that the persistence of brief stimuli hampers the clear separation between rapidly presented within-modality signals, even leading to their fusion, under conditions in which the ISIs are much smaller than the persistence of each individual signal. Of interest for present purposes, Philippi et al. (2008) observed that the difference in temporal numerosity estimation judgments between unimodal auditory or tactile conditions and the bimodal audiotactile condition differed significantly only for short ISIs (20 and 40 ms).

As already mentioned, a large number of previous studies have investigated whether, and to what extent, the presentation of incongruent task-irrelevant multisensory sequences of pulses can influence people’s temporal numerosity judgments in the target modality. In the illusory flash paradigm, for instance, people are instructed to report the number of flashes presented with to-be-ignored incongruent sequences of beeps (Shams et al., 2000). The striking result to have emerged from this study was that, when presented with a single flash and multiple auditory pulses, observers typically perceived an illusory second flash. This illusory effect has been explained by taking into account the higher reliability of the auditory modality as compared with the visual modality in the temporal domain (see Shams, Ma, & Beierholm, 2005). This effect, which constitutes a robust perceptual phenomenon, has now been replicated in the audiotactile as well as the visuotactile domains (Bresciani et al., 2005; Bresciani & Ernst, 2007; Hötting, Friedrich, & Röder, 2009; Hötting & Röder, 2004). In one of their studies, Bresciani and Ernst (2007) presented series of beeps and taps and had their participants report on the number of tactile stimuli while ignoring the auditory distractors. The results revealed that participants’ tactile perception was modulated by the presentation of task-irrelevant auditory stimuli, with their responses being significantly affected by the number of beeps that were delivered. Such a modulation only occurred, however, when the auditory and tactile stimuli were similar enough (i.e., had the same duration) and were presented at around the same time (see Fig. 6).

Number of perceived events in the target modality as a function of both the actual number of events delivered (circle for two, square for three, and triangle for four events) and the background condition. The two graphs on the left correspond to the sessions in which the target modality was touch (i.e., the participants had to count the number of taps), and the two graphs on the right correspond to the sessions in which the target modality was audition (i.e., the participants had to count the number of beeps). The two upper graphs correspond to the sessions in which the beeps were louder, and the two lower graphs to the sessions in which the beeps were quieter (modified from Bresciani & Ernst, 2007, Fig. 2)

According to the maximum likelihood estimation model of multisensory integration (e.g., Alais, Newell, & Mamassian, 2010), the reliability of a sensory channel is related to the relative uncertainty of the information it conveys. The higher the relative variance of a sensory modality, the weaker its relative reliability (Ernst & Bülthoff, 2004). In another study, in order to investigate whether the auditory bias on tactile perception could be disrupted by manipulating the reliability of the auditory information, Bresciani and Ernst (2007) varied the intensity of the beeps. The auditory stimuli were presented at either 41 or 74 dB (signal-to-noise ratio of, respectively, –30 and 3 dB). They found that the participants were more sensitive (i.e., their estimates were less variable) in counting the number of the more intense (rather than the less intense) beeps presented with the irrelevant taps. Conversely, they were more sensitive in counting the number of taps presented with less intense (vs. more intense) irrelevant beeps (see also Wozny, Beierholm, & Shams, 2008). This pattern of results could reflect the fact that the decrease in the intensity of the auditory stimuli reduced the relative reliability of the auditory modality, thus inducing differential interactions with touch as a function of the intensity of the stimuli. Taken together, these results therefore show that audition and touch reciprocally bias each other (when alternatively used as target or distractor), with the degree of evoked bias depending on the relative reliability of the two modalities (see also Bresciani, Dammeier, & Ernst, 2008; Occelli, Spence, & Zampini, 2009).
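The reliability weighting described above can be made concrete with a short Python sketch of the standard inverse-variance form of maximum likelihood integration, in which each modality's weight is proportional to the reciprocal of its variance. The function name `mle_combine` and the numerical values are illustrative assumptions, and this simple form is not necessarily the exact model fitted in the studies cited here.

```python
def mle_combine(estimates, variances):
    """Combine unisensory estimates by inverse-variance (reliability) weighting.

    estimates: one estimate per modality (e.g., perceived number of events).
    variances: the variance of each estimate; lower variance = more reliable.
    Returns (combined estimate, combined variance, weights).
    Hypothetical helper illustrating the textbook MLE rule.
    """
    reliabilities = [1.0 / v for v in variances]
    total = sum(reliabilities)
    weights = [r / total for r in reliabilities]   # weights sum to 1
    combined = sum(w * e for w, e in zip(weights, estimates))
    combined_variance = 1.0 / total                # never larger than the smallest input variance
    return combined, combined_variance, weights


# Made-up values: audition signals 3 events, touch signals 2 events.
# Reliable (e.g., more intense) beeps: auditory variance is low, so audition dominates.
loud = mle_combine([3.0, 2.0], [0.25, 1.0])
# Unreliable (e.g., less intense) beeps: auditory variance is high, so touch dominates.
quiet = mle_combine([3.0, 2.0], [4.0, 1.0])
print("weights with reliable beeps:  ", loud[2])
print("weights with unreliable beeps:", quiet[2])
```

In this sketch, raising the auditory variance shifts weight toward touch, which mirrors the qualitative pattern reported when the intensity of the beeps was reduced.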

To the best of our knowledge, however, the experimental investigations published to date have not applied a multisensory perspective to the assessment of the interactions between time and numerosity (and, possibly, space). Indeed, the currently available data refer to the visual (e.g., Dormal, Seron, & Pesenti, 2006) or auditory (Droit-Volet, Clement, & Fayol, 2003; Xuan, Zhang, He, & Chen, 2007) modality, and typically report that when participants perform a numerosity judgment task, temporal intervals are perceived as shorter than their veridical duration. The investigation of this topic using a multisensory approach could lead to a better understanding of the interplay occurring between the mental representations of time, space, and number (and, in general, of magnitudes) in both neurologically intact individuals and ultimately in those patients suffering from parietal lesions, a topic which has, to our knowledge, so far been investigated primarily in the visual modality (e.g., Oliveri et al., 2008, 2009; Vicario, Pecoraro, Turriziani, Koch, & Oliveri, 2008; see also Bueti & Walsh, 2009).

Audiotactile interactions based on frequency similarity

Perceptual interactions between hearing and touch are distinct from those associations occurring between other pairings of sensory modalities (Gescheider, 1970; Soto-Faraco & Deco, 2009; von Békésy, 1959; Zmigrod, Spapé, & Hommel, 2009, Experiment 2). As was already mentioned, auditory and vibrotactile stimuli are transduced by the same physical mechanism (i.e., mechanoreception), consisting of the mechanical stimulation of, respectively, the basilar membrane and the skin. Hence, both auditory and vibrotactile stimuli can be described according to their specific periodic patterns of stimulation (i.e., their frequency), defined as the number of repetitions of the sound waveforms (see Plack, 2004; Siebert, 1970) or of tactile pulses (see Luna, Hernández, Brody, & Romo, 2005), respectively, per unit time. It is therefore somewhat surprising that audiotactile interactions have rarely been investigated as a function of the frequency similarity between stimuli. Thus far, the focus has primarily been on the perception of lingual vibrotactile stimulation (Fucci, Petrosino, Harris, & Randolph-Tyler, 1988; Harris, Fucci, & Petrosino, 1986, 1989).

The ability of mammals to discriminate frequencies has been considered as reflecting the frequency resolution characterizing the auditory pathway at both the peripheral (i.e., the basilar membrane of the cochlea; Robles & Ruggero, 2001) and central (i.e., the primary auditory cortex; Langers, Backes, & van Dijk, 2007; Tramo, Cariani, Koh, Makris, & Braida, 2005) stages of auditory information processing. The systematic spatial mapping of frequency coding in the brain (known as tonotopy) and the filtering properties of auditory neurons and sensory receptors have been considered responsible for decoding the frequency of auditory stimulation (see Schreiner, Read, & Sutter, 2000, for a review; see also Elhilali, Ma, Micheyl, Oxenham, & Shamma, 2009; Romani, Williamson, & Kaufman, 1982; Schnupp & King, 2008). However, the tonotopic structure of the auditory system is not the only candidate for the representation of the temporal characteristics of auditory stimuli.

Indeed, the activity of neurons at different stages of the auditory pathway has been shown to change as a function of the repetition rates of the auditory events being processed (see Bendor & Wang, 2007, for a review). More specifically, acoustic signals within the flutter range (10–45 Hz) are coded by neurons that synchronize their activity to the temporal profile of repetitive signals. These neurons have been observed both along the auditory-nerve fibers and in the inferior colliculus, the medial geniculate body, and in a specific neuronal population along the anterolateral border of the primary auditory cortex (AI; Dicke, Ewert, Dau, & Kollmeier, 2007; Oshurkova, Scheich, & Brosch, 2008; Wang et al., 2008). Other mechanisms regulate the activity of the neural population coding for auditory signals presented at higher repetition rates (i.e., above the perceptual flutter range). These neurons modify their discharge rates—not their spike timing—as a function of the frequency of the auditory events that are being processed (Oshurkova et al., 2008; Wang et al., 2008). Thus, the temporal profile of auditory stimuli appears to be represented in AI by a dual process (i.e., stimulus-synchronized firing pattern and discharge rate), each involving specific subpopulations of neurons.
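The distinction between a stimulus-synchronized code and a discharge-rate code can be illustrated with a minimal Python sketch. It is not taken from the studies cited above: the spike trains are idealized toy data, the function names are hypothetical, and synchronization is quantified with vector strength, a commonly used phase-locking index that we assume here purely for illustration.

```python
import math

def vector_strength(spike_times, stimulus_rate_hz):
    """Phase-locking index: close to 1 for perfect synchrony, close to 0 for none."""
    if not spike_times:
        return 0.0
    # Convert each spike time (s) to a phase within the stimulus cycle.
    phases = [2 * math.pi * stimulus_rate_hz * t for t in spike_times]
    c = sum(math.cos(p) for p in phases)
    s = sum(math.sin(p) for p in phases)
    return math.hypot(c, s) / len(spike_times)

def mean_rate(spike_times, duration_s):
    """Average discharge rate in spikes per second."""
    return len(spike_times) / duration_s

def locked_train(stimulus_rate_hz, duration_s):
    """Toy synchronized neuron: one spike per stimulus cycle at a fixed phase."""
    n = int(round(stimulus_rate_hz * duration_s))
    return [k / stimulus_rate_hz for k in range(n)]

def unlocked_train(n_spikes, duration_s):
    """Toy rate-coding neuron: evenly spaced spikes unrelated to the stimulus period."""
    return [(k + 0.5) * duration_s / n_spikes for k in range(n_spikes)]

# Flutter-range stimulus (20 Hz): spike timing follows the stimulus.
flutter = locked_train(20, 1.0)
print(vector_strength(flutter, 20), mean_rate(flutter, 1.0))

# Higher repetition rates (200 and 300 Hz): spike timing carries no phase
# information, but the spike count (hypothetical values) grows with the rate.
for rate_hz, n_spikes in [(200, 37), (300, 53)]:
    train = unlocked_train(n_spikes, 1.0)
    print(rate_hz, vector_strength(train, rate_hz), mean_rate(train, 1.0))
```

In this toy case, the first train is fully synchronized to the stimulus, whereas the other two show negligible synchrony and differ only in their discharge rate, mirroring the two coding schemes described above.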

The distinct neural encoding of auditory stimuli differing in frequency may also be responsible for the different perceptual impressions conveyed by auditory stimuli. Indeed, when auditory events are presented at rates within the 10–45 Hz (i.e., flutter) range, the resulting percepts tend to consist of sequential and discrete sounds (i.e., acoustic flutter; Bendor & Wang, 2007; see also Besser, 1967). According to Bendor and Wang, the discrete impression of the flutter percept could be considered as the direct outcome of the synchronized responses representing the event at different neural stages of the auditory pathway. On the other hand, neurons encoding stimuli with repetition rates beyond this range do not synchronize with the stimuli, thus failing to induce the impression of discrete auditory events and instead giving rise to continuous-sounding percepts that have a specific pitch (Bendor & Wang, 2007; Hall, Edmondson-Jones, & Fridriksson, 2006; Tramo et al., 2005; Wang et al., 2008).

Interestingly, the perceptual boundary between the repetition rates that give rise to low- and high-frequency percepts seems to be similar in hearing and touch (i.e., ~40–50 Hz). Just as in hearing, the sensation of flutter in touch is induced by periodic trains of impulses at frequencies between ~5 and ~40 Hz (e.g., Romo & Salinas, 2003), whereas higher repetition rates (~40–400 Hz) induce a sensation of “vibration/buzzing” (LaMotte & Mountcastle, 1975; Talbot, Darian-Smith, Kornhuber, & Mountcastle, 1968). Moreover, in the tactile domain, the identification and discrimination of stimuli differing in their frequency relies on the differential sensitivity of sensory receptors and afferent nerve fibers supplying different portions of the skin (Johansson & Vallbo, 1979a, 1979b; Morioka & Griffin, 2005). At the fingertips, the fast adapting fibers and the receptors known as “Meissner corpuscles” are responsible for the processing of low vibrotactile frequencies (i.e., 5–50 Hz), whereas the Pacinian fibers associated with the Pacinian receptors are more sensitive to higher vibration frequencies (i.e., higher than 40 Hz; Francis et al., 2000; Iggo & Muir, 1969; Mahns, Perkins, Sahai, Robinson, & Rowe, 2006; Morley, Vickery, Stuart, & Turman, 2007; Talbot et al., 1968; Verrillo, 1985). Animal studies have suggested that one possible candidate for signalling information about the frequency of vibrotactile stimuli is an impulse pattern code, according to which the responses of rapidly adapting afferents are phase locked to the periodicity of the vibrotactile stimulus. The strict correspondence between the temporal features of the vibrotactile stimuli and the impulse patterns has been observed not only in the periphery (i.e., along the sensory nerve fibers), but also in neurons at higher levels along the ascending somatosensory pathway (Hernández, Salinas, García, & Romo, 1997; Mountcastle, Steinmetz, & Romo, 1990; Salinas, Hernández, Zainos, & Romo, 2000).

Even though the encoding of the frequency pattern of vibrotactile stimuli involves all of the stations along the somatosensory pathway, it is likely that more sophisticated processes, such as those involving the discrimination of different frequencies, occur more centrally. In primates performing a frequency discrimination task, the patterns of firing evoked in SI neurons by the comparison stimulus (i.e., usually presented second in each pair) are independent of those elicited by the standard stimulus (i.e., the first stimulus in each pair). It thus seems as though SI is an unlikely candidate for the encoding of the difference between the two stimuli (Romo & Salinas, 2003; Salinas et al., 2000). On the other hand, the fact that the response of neurons in the secondary somatosensory cortex (SII) to the second vibration is affected by the frequency of the first vibration suggests that these neurons contribute significantly to the coding of the frequency difference. Taken together, this evidence suggests that, in primates at least, the perceptual comparison between different frequencies takes place in SII. Subsequent decisional processes are thought to involve the medial premotor cortex in the frontal lobe, whose neuronal activity covaries significantly with monkeys’ perceptual reports (de Lafuente & Romo, 2005). The similarity of the performance demonstrated by monkeys and humans in detecting and discriminating between stimuli differing in frequency suggests that the neural mechanisms investigated in monkeys may be analogous to those that exist in humans (see Romo & Salinas, 2003; Salinas et al., 2000; Talbot et al., 1968). In humans, just as in monkeys, frequency discrimination does not rely exclusively on SI, but also involves downstream areas, such as SII and some regions in frontal cortex (Harris, Arabzadeh, Fairhall, Benito, & Diamond, 2006).

A recent fMRI study investigated which brain areas are involved in the discrimination of vibrotactile frequency in humans (Li Hegner et al., 2007). The participants in this study had to report whether the sequentially presented vibrotactile stimuli had the same or different frequencies. The results revealed that an extended region was recruited during the performance of the task. Beyond the areas typically involved in this kind of task (i.e., SI and SII), other areas, such as the superior temporal gyrus, the precentral gyrus, the ipsilateral insula, and the supplementary motor area, were also involved (Li Hegner et al., 2007). Interestingly, the superior temporal gyrus is known to mediate interactions between auditory and somatosensory stimuli, in both humans (Foxe et al., 2002; Schroeder et al., 2001) and monkeys (Fu et al., 2003; Kayser, Petkov, Augath, & Logothetis, 2005). Neurons in the auditory belt areas respond not only to pulsed tactile stimulation, but also to vibratory stimuli, thus suggesting that the auditory association cortex acts as a cortical location of convergence for auditory and tactile inputs during the discrimination of tactile frequency (Iguchi, Hoshi, Nemoto, Taira, & Hashimoto, 2007; Li Hegner et al., 2007; Schürmann, Caetano, Hlushchuk, Jousmäki, & Hari, 2006; see also Caetano & Jousmäki, 2006; Golaszewski et al., 2006). The evidence suggesting that the auditory areas involved in the processing of tactile stimuli are endowed with specific frequency profiles, and that they contribute to vibrotactile frequency discrimination, raises the intriguing possibility of anatomo-functional similarities between those cortical regions devoted to the processing of the periodicity of both vibrotactile and auditory stimulation.

A study by Bendor and Wang (2007) would appear to suggest that such similarities do, in fact, exist. These authors distinguished between two populations of neurons in the auditory cortex, known as “positive monotonic” and “negative monotonic,” respectively. The first population typically increased its firing rate in proportion to the increase in the repetition rate of an auditory stimulus, whereas the second population showed the opposite pattern of responding (see also Wang et al., 2008). Interestingly, neurons with positive and negative monotonic tuning to stimulus repetition rate have been observed not only in the auditory cortex, but also in the somatosensory cortex beyond SI (Bendor & Wang, 2007; Salinas et al., 2000). More specifically, neurons have been detected in SII whose spike rate can be positively or negatively related to the vibrotactile stimulus frequency (Luna et al., 2005; Salinas et al., 2000). The fact that neurons showing positive and negative monotonic tuning to stimulus repetition rate can be observed in both auditory and somatosensory cortices points to a commonality in how these two sensory systems might encode variations in the temporal profile of, respectively, auditory and vibrotactile stimuli (Bendor & Wang, 2007; Wang et al., 2008; see also Soto-Faraco & Deco, 2009), possibly indicating a neural basis for the discrimination of frequencies delivered crossmodally (see Bendor & Wang, 2007).
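To make the notion of positive and negative monotonic tuning concrete, the following Python sketch models two toy neurons whose firing rates rise or fall with the stimulus repetition rate. The linear tuning curves, the parameter values, and the "opponent" readout used to compare two stimuli are all assumptions introduced here for illustration; none of them is taken from the studies cited above.

```python
def positive_monotonic(rate_hz, baseline=5.0, gain=0.5):
    """Toy neuron: firing rate (spikes/s) rises linearly with repetition rate."""
    return baseline + gain * rate_hz

def negative_monotonic(rate_hz, ceiling=60.0, gain=0.5):
    """Toy neuron: firing rate falls linearly with repetition rate, floored at 0."""
    return max(0.0, ceiling - gain * rate_hz)

def opponent_signal(rate_hz):
    """Hypothetical readout: difference between the two populations' rates."""
    return positive_monotonic(rate_hz) - negative_monotonic(rate_hz)

def higher_stimulus(rate_a_hz, rate_b_hz):
    """Report which stimulus yields the larger opponent signal.

    The readout is modality-agnostic: rate_a_hz could describe an auditory
    stimulus and rate_b_hz a vibrotactile one.
    """
    a, b = opponent_signal(rate_a_hz), opponent_signal(rate_b_hz)
    if a == b:
        return "same"
    return "first" if a > b else "second"

print(positive_monotonic(20), negative_monotonic(20))  # 15.0 50.0
print(higher_stimulus(20, 40))                         # second
```

Because both toy populations are driven by repetition rate alone, the same comparison would apply regardless of the modality in which each stimulus is delivered, which is the sense in which shared tuning properties might support crossmodal frequency discrimination.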

Preliminary evidence from Nagarajan et al. (1998) has suggested that temporal information processing could be governed by common mechanisms across the auditory and tactile sensory systems. In their study, participants were presented with pairs of vibratory pulses and were trained to discriminate the temporal interval separating them. The results showed not only a decrease in the discrimination threshold as a function of training, but also the generalization of the improved interval discrimination to the auditory modality. Even though the generalization was constrained to an auditory base interval that was similar to the one that had been trained in touch, these results are intriguing in suggesting that the coding of temporal intervals could be represented centrally (i.e., shared among different sensory modalities; cf. van Wassenhove, 2009; see also Fujisaki & Nishida, 2010).

Additionally, recent neurophysiological evidence in humans has demonstrated that the discrimination of vibrotactile stimuli can be improved significantly in many people simply by providing auditory feedback of the same frequency after the presentation of the tactile stimulation (Iguchi et al., 2007; see also Ro, Hsu, Yasar, Caitlin Elmore, & Beauchamp, 2009). The investigation of the neural substrates of this effect led to the conclusion that the increase in perceptual accuracy and the speeding up of the discrimination of the tactile frequencies were subserved by the coactivation of SII and the supratemporal auditory cortices, along with the upper bank of the superior temporal sulcus. The data suggest that auditory feedback could have induced a complementary processing of tactile information by means of an intervening process of acoustic imagery. The results of this study therefore add weight to previous investigations showing considerable crossmodal convergence in the posterior auditory cortex of not only tactile stimulation (e.g., Foxe et al., 2002; Kayser et al., 2005), but also of vibrotactile stimulation, in both normal-hearing (e.g., Caetano & Jousmäki, 2006; Schürmann et al., 2006) and deaf (Levänen & Hamdorf, 2001) humans.