Abstract

In order to determine the spatial location of an object that is simultaneously seen and heard, the brain assigns higher weights to the sensory inputs that provide the most reliable information. For example, in the well-known ventriloquism effect, the perceived location of a sound is shifted toward the location of a concurrent but spatially misaligned visual stimulus. This perceptual illusion can be explained by the usually much higher spatial resolution of the visual system as compared to the auditory system. Recently, it has been demonstrated that this cross-modal binding process is not fully automatic, but can be modulated by emotional learning. Here we tested whether cross-modal binding is similarly affected by motivational factors, as exemplified by reward expectancy. Participants received a monetary reward for precise and accurate localization of brief auditory stimuli. Auditory stimuli were accompanied by task-irrelevant, spatially misaligned visual stimuli. Thus, the participants’ motivational goal of maximizing their reward was put in conflict with the spatial bias of auditory localization induced by the ventriloquist situation. Crucially, the amounts of expected reward differed between the two hemifields. As compared to the hemifield associated with a low reward, the ventriloquism effect was reduced in the high-reward hemifield. This finding suggests that reward expectations modulate cross-modal binding processes, possibly mediated via cognitive control mechanisms. The motivational significance of the stimulus material, thus, constitutes an important factor that needs to be considered in the study of top-down influences on multisensory integration.

Effective interaction with the world requires an optimal use of sensory input. This includes the integration of redundant information derived from the different sensory modalities, such as information about the spatial location of an object that is simultaneously seen and heard (Ernst & Bülthoff, 2004; Stein & Stanford, 2008). A fundamental question in multisensory research is how the brain integrates such independent sensory estimates into one coherent percept. According to the modality appropriateness hypothesis (Welch, 1999; Welch & Warren, 1980) and more recent computational models based on maximum likelihood estimation (Ernst & Bülthoff, 2004; Fiser, Berkes, Orbán, & Lengyel, 2010; Sato, Toyoizumi, & Aihara, 2007; Witten & Knudsen, 2005), the sensory modality that is most reliable for a given perceptual problem dominates the multisensory percept. Neither the modality appropriateness hypothesis nor the maximum likelihood estimation model, however, takes into account attentional, motivational, emotional, or any other factors that could potentially influence the process of cross-modal binding.
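For reference, the reliability-weighted combination rule that underlies the maximum likelihood estimation account can be written as follows. This is the standard textbook form, assuming independent Gaussian noise on each unisensory estimate; the symbols ($\hat{s}_A$, $\hat{s}_V$, $\sigma_A^2$, $\sigma_V^2$) are notation introduced here for illustration and do not appear in the original text.

```latex
\begin{align}
\hat{s}_{AV} &= w_A\,\hat{s}_A + w_V\,\hat{s}_V, \\
w_A &= \frac{1/\sigma_A^2}{1/\sigma_A^2 + 1/\sigma_V^2}, \qquad
w_V = \frac{1/\sigma_V^2}{1/\sigma_A^2 + 1/\sigma_V^2} = 1 - w_A, \\
\sigma_{AV}^2 &= \frac{\sigma_A^2\,\sigma_V^2}{\sigma_A^2 + \sigma_V^2}.
\end{align}
```

Under these assumptions, the modality with the smaller variance receives the larger weight. When $\sigma_V^2 \ll \sigma_A^2$, as is typical for spatial localization, $w_V$ approaches 1 and the combined estimate is drawn toward the visual location.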

An example of visual dominance over audition that has been extensively studied in laboratory settings is the ventriloquism effect (Bertelson & de Gelder, 2004; Chen & Vroomen, 2013; Recanzone, 2009): When an auditory stimulus is presented together with a spatially misaligned visual stimulus, spatial localization of the auditory stimulus is biased toward the location of the visual stimulus, even if the participants are instructed to ignore the visual stimulus. If, however, the reliability of the visual input is reduced by severely blurring the visual stimulus, the effect reverses, and audition captures visual localization (Alais & Burr, 2004), in line with the assumptions of the maximum likelihood estimation model (Sato et al., 2007). On the basis of the available behavioral (Bertelson & Aschersleben, 1998; Bertelson, Vroomen, de Gelder, & Driver, 2000; Vroomen, Bertelson, & de Gelder, 2001), neuropsychological (Bertelson, Pavani, Làdavas, Vroomen, & de Gelder, 2000), and electrophysiological (Bonath et al., 2007; Colin, Radeau, Soquet, Dachy, & Deltenre, 2002; Stekelenburg, Vroomen, & de Gelder, 2004) evidence, it has generally been assumed that multisensory spatial integration, as observed in the ventriloquism effect, is an automatic process that is not modulated by top-down influences (Chen & Vroomen, 2013; though see Eramudugolla, Kamke, Soto-Faraco, & Mattingley, 2011).

So far, studies that investigated top-down influences on multisensory integration have almost exclusively focused on the role of attention (Talsma, Senkowski, Soto-Faraco, & Woldorff, 2010). By contrast, we recently investigated the influence of the emotion system on the process of multisensory binding (Maiworm, Bellantoni, Spence, & Röder, 2012). Participants were instructed to localize disyllabic pseudowords played randomly from one of eight loudspeaker locations during an initial learning phase. For the experimental group, aversive (fearful) stimuli were presented from one of these locations. Subsequently, a ventriloquism experiment was conducted with the same participants, that is, they were instructed to localize brief tones while ignoring concurrent light flashes. In the experimental group, the ventriloquism effect was reduced as compared to a control group (despite a lack of changes in unisensory auditory performance), indicating an influence of emotional learning on audiovisual binding processes. The authors speculated that the exposure to auditory emotional stimuli established the affective significance of the auditory modality, which might have diminished cross-modal integration processes in the subsequent ventriloquism experiment.

Affective significance can be induced either in a stimulus-driven fashion, based on sensory exposure to emotional stimuli (as in Maiworm et al., 2012), or in a state-dependent fashion, based on motivational manipulations involving reward expectancy, often with similar behavioral consequences (Pessoa, 2009). Thus, the question arises whether the multisensory binding process is influenced by motivational factors as well. It might be hypothesized that motivational manipulations induced by reward expectancy establish the affective significance of the modality that is rewarded, and thus task-relevant. If the mediating mechanisms of reward manipulations are similar to those for emotional learning (Pessoa, 2009), we would then expect to find a reduction of cross-modal binding in the ventriloquist illusion similar to what has been observed after emotional manipulations (Maiworm et al., 2012). More generally, this question directly addresses whether audiovisual spatial integration is influenced by top-down factors. A modulation of the spatial ventriloquism effect by reward manipulations would be in line with recent studies on the effects of attention on multisensory binding (Eramudugolla et al., 2011; Talsma et al., 2010) that have challenged the assumption that the ventriloquism effect is a fully automatic phenomenon (Bertelson, Pavani, et al., 2000; Bertelson, Vroomen, et al., 2000; Bonath et al., 2007; Colin et al., 2002; Stekelenburg et al., 2004; Vroomen et al., 2001).

In the present study, we tested whether motivational factors influence the ventriloquism effect by manipulating reward expectancy. It has been reported that manipulating the incentive value of stimuli can affect working memory (Krawczyk, Gazzaley, & D’Esposito, 2007; Miyashita & Hayashi, 2000), attention (Della Libera & Chelazzi, 2006; Engelmann, Damaraju, Padmala, & Pessoa, 2009; Small et al., 2005), and cognitive control mechanisms (Watanabe, 2007) in a top-down manner. Reward-mediated changes in unisensory processing have been observed in early sensory cortices, as well (David, Fritz, & Shamma, 2012; Laufer & Paz, 2012; Pleger, Blankenburg, Ruff, Driver, & Dolan, 2008; Pleger et al., 2009; Polley, Steinberg, & Merzenich, 2006; Schechtman, Laufer, & Paz, 2010; Seitz, Kim, & Watanabe, 2009; Serences, 2008). It is yet unknown, however, whether and how the genuine multisensory binding processes assessed with the ventriloquism effect are influenced by a manipulation of reward expectancy. Theoretically, reward expectancy could modulate multisensory binding via two mechanisms. First, reward expectancy might alter unisensory processing, resulting in a higher reliability of the unisensory input (e.g., Pleger et al., 2008; Pleger et al., 2009). As a consequence, the weight of the manipulated modality would be increased in subsequent multisensory binding processes, thereby leading to a changed multisensory percept (Ernst & Bülthoff, 2004; Sato et al., 2007). Alternatively, multisensory binding processes might be modulated directly by reward expectancy (i.e., independent of changes in unisensory processing; see Maiworm et al., 2012).

To investigate this question, we combined a classic ventriloquism paradigm with a spatial reward function. The participants were rewarded for precise and accurate spatial localization of tones while ignoring concurrent (spatially misaligned) visual stimuli. Thus, the motivational goal of maximizing their reward was put in conflict with the perceptual reliance on visual information in the ventriloquist situation. Crucially, the amount of reward that the participants could earn in each block of the experiment depended on whether the stimuli originated in the left or the right hemifield. If the ventriloquism effect is modulated by the expected amount of reward, we would predict that the degree to which the visual stimuli would bias the localization of the auditory stimuli would vary across the two hemifields.

Method

Participants

A group of 20 healthy adult volunteers from the University of Hamburg participated in the study. They were pseudorandomly assigned to one of two equally sized groups (left and right; see below). The data from one participant were excluded from the analysis because of deviant response behavior—that is, the standard deviation of the spatial responses was higher than 20° for some speaker positions, and the spatial responses exceeded 90° (i.e., a deviation of >60° from the most lateral speaker position) in the preexperiment; these values were clear outliers. Thus, 19 participants (five male, 14 female), from 20 to 46 years of age (mean 30.7 years), remained in the sample. They were naïve with regard to the purpose of the study. All of them reported normal hearing and normal or corrected-to-normal vision. Participants either received course credit or were compensated €7/h for their participation. Written informed consent was obtained from all participants prior to the study, and the study was performed in accordance with the ethical standards laid down in the 2008 Declaration of Helsinki.

Apparatus and stimuli

Participants were seated at the center of a semicircular array of eight loudspeakers (ConceptC Satellit, Teufel GmbH, Berlin, Germany), located at 7.2°, 14.4°, 21.6°, and 28.8° (azimuthal) to both sides from the midline. A distance of 90 cm separated the participant’s head (fixated by a chinrest) and any of the loudspeakers. The loudspeakers were hidden from view behind a black, acoustically transparent curtain extending peripherally to 90° from the midline on both sides. During the experimental sessions, a red laser beam was projected onto the curtain at 0°, indicating the point of fixation. A second laser beam served as the visual stimulus and was projected on the curtain for 10 ms at one of several possible azimuthal locations in a given trial. The auditory stimuli were 1000-Hz tones with a duration of 10 ms, played from one of the loudspeakers at 64 dB(A). Responses were given with a response pointer that was mounted in front of the participants. The spatial responses were confirmed by pressing a button that was attached to the pointer. In order to start the next trial, the participants had to redirect the pointer to a central azimuthal position (±10° from the midline) and again confirm this position by a buttonpress. A variable intertrial interval of 825 to 1,025 ms (drawn from a uniform distribution) was interposed between the confirmation of the central position of the response pointer and the onset of the next stimulus.

Procedure

An experimental session consisted of two parts that were separated by a short break:

1. In two unisensory preexperiments, the participants had to localize unimodal auditory and visual stimuli, respectively. The preexperiments were conducted to assess the participants’ baseline localization performance and were not associated with a reward. The results from the auditory preexperiment were used to determine the criteria for obtaining a monetary reward in the main experiment.

2. In the audiovisual main experiment, participants were rewarded for precise and accurate auditory localization performance. The main experiment was subdivided into four blocks. All trials in the main experiment consisted of an auditory stimulus accompanied by a spatially disparate visual stimulus. On each trial, participants had to localize the sound source while ignoring the concurrent visual stimuli.

Preexperiments

The preexperiments were visual and auditory localization tasks, respectively. Half of the participants started with the visual preexperiment, and the other half with the auditory one. In the visual preexperiment, visual stimuli were presented randomly from one of eight different locations. These locations coincided with the positions of the eight loudspeakers. In each trial, the participants had to indicate the perceived location of the stimulus as accurately as possible with the response pointer (and to confirm the response by a buttonpress). No emphasis was put on response speed, but participants were instructed to respond spontaneously. The auditory preexperiment was identical, except that instead of the visual stimuli, auditory stimuli originating from one of the eight loudspeakers were presented and had to be localized. Each of the preexperiments consisted of 320 trials (40 trials per location).

Main experiment

The main experiment was set up as a classic ventriloquism paradigm and consisted of four blocks of 192 trials each. In each trial, an auditory stimulus was presented randomly from one of the eight speaker locations. Concurrently, a spatially discrepant visual stimulus was presented at one of six possible locations relative to the sound source: ±2.7°, ±7.2°, or ±9.9° (negative numbers indicate positions to the left of the auditory location). Each combination of auditory and relative visual location was presented four times per block. Participants were asked to localize the sounds with the pointer while fixating the fixation point at the midline. They were not informed about the spatial relationship between the auditory and visual stimuli and were instructed to ignore the concurrent visual stimuli. After the participants had given their localization response, they again had to redirect the pointer to a central position. A second task was introduced to ensure that the participants did not close their eyes. Zero, one, or two times per block, the fixation point flashed four times in succession, once every 250 ms. The participants had to count these rare deviants and report their number verbally after each block. Because the number of deviants was randomly determined in each block, the overall number of deviants differed between participants (Mdn = 4, range = 1–7, summed across all four blocks). Trials containing such a flashing of the fixation light were excluded from the subsequent data analyses.
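The composition of one block can be sketched as follows. This is an illustrative reconstruction of the trial list under the design described above, not the original experiment code; the names `SPEAKER_AZIMUTHS`, `VISUAL_OFFSETS`, and `make_block` are hypothetical, and the deviant fixation events are omitted.

```python
import itertools
import random

# Speaker azimuths in degrees (negative = left of midline), as given in the text.
SPEAKER_AZIMUTHS = [-28.8, -21.6, -14.4, -7.2, 7.2, 14.4, 21.6, 28.8]
# Visual positions relative to the sound source, in degrees.
VISUAL_OFFSETS = [-9.9, -7.2, -2.7, 2.7, 7.2, 9.9]
REPETITIONS_PER_BLOCK = 4


def make_block(rng):
    """Return a shuffled list of (auditory_azimuth, visual_offset) pairs."""
    combos = itertools.product(SPEAKER_AZIMUTHS, VISUAL_OFFSETS)
    trials = [combo for combo in combos for _ in range(REPETITIONS_PER_BLOCK)]
    rng.shuffle(trials)  # random presentation order within the block
    return trials


block = make_block(random.Random(0))
print(len(block))  # 8 speakers x 6 offsets x 4 repetitions = 192
```

The count of 192 trials per block follows directly from the full crossing of eight auditory locations, six relative visual locations, and four repetitions.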

A spatial reward function was imposed on the stimulus space and was explained to the participants prior to the start of the main experiment (i.e., after they had completed the auditory and visual preexperiments). One hemifield was associated with a high reward (the left hemifield in the left group and the right hemifield in the right group), and the other hemifield with a low reward. In each of the four experimental blocks, participants earned a reward if their auditory localization performance in the respective hemifield (i.e., the mean absolute deviation of their localization responses from the physical sound locations) met a certain criterion (see below). Participants earned 450 points if their localization performance met the criterion for auditory stimuli presented in the high-reward hemifield, and/or 50 points if they met the criterion in the low-reward hemifield. Thus, participants could earn either 0, 50, 450, or 500 points in a given block. They were informed about the number of points they had won after each block, but no additional feedback about their localization performance was provided at any time throughout the experiment. After the experiment was finished, the point score was converted into real money (one point was worth €0.01). This means that in the four blocks of the main experiment, participants could earn a maximum of €20 in addition to the monetary compensation or course credit they received for participating in the experiment.

This procedure was carefully explained to the participants, both in written form and orally. They were informed that they could earn more money with accurate spatial responses in the high-reward hemifield as compared to the low-reward hemifield, but that it was more difficult to meet the criterion in the high-reward hemifield. The high reward was linked to a stricter criterion than the low reward, in order to maximize differences in the participants’ motivation to perform well on the task between the two hemifields. The criteria for the high- and for the low-reward hemifield were computed individually on the basis of the localization performance of each participant in the auditory preexperiment. This was done to account for interindividual differences in auditory localization performance, and rendered meeting the criteria equally likely for all participants. In detail, the mean absolute deviation of the localization responses from the true sound position across all eight speaker locations (i.e., the mean absolute localization error) in the auditory preexperiment was calculated for each participant. In the first block of the main experiment, participants earned a high reward if the mean absolute localization error across the four speaker locations in the high-reward hemifield during that block was lower than 65 % of their mean absolute localization error in the preexperiment. A low reward was earned if the mean absolute localization error across the four speaker locations in the low-reward hemifield was lower than 75 % of the mean absolute localization error in the preexperiment.

In subsequent blocks, these criteria were adaptively adjusted in order to avoid both floor and ceiling effects. Separately for the high- and low-reward hemifields, the deviation from the criterion during the preceding block was calculated. For the next block, the criterion was then decreased by half of this deviation if the participant had met the criterion in the preceding block (i.e., obtained a reward), and increased by half of the deviation if the participant had failed the criterion in the preceding block (i.e., did not obtain a reward). For example, if a participant had a criterion of 4° in the high-reward hemifield (derived from the preexperiment) and then showed an absolute localization error of 6° in the first block of the main experiment, the criterion for the high-reward hemifield was set to 5° in the second block.
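The reward rule and the adaptive adjustment of the criteria can be summarized in a short sketch. It assumes only what is stated above (65 % and 75 % of the preexperiment error for the first block, a shift by half of the deviation afterward, 450 and 50 points, one point worth €0.01); the function names are hypothetical and this is not the original experiment code.

```python
HIGH_REWARD_POINTS = 450
LOW_REWARD_POINTS = 50


def initial_criteria(pre_error_deg):
    """First-block criteria as fractions of the preexperiment mean absolute error."""
    return {"high": 0.65 * pre_error_deg, "low": 0.75 * pre_error_deg}


def update_criterion(criterion_deg, block_error_deg):
    """Shift the criterion by half of the deviation observed in the preceding block."""
    deviation = abs(block_error_deg - criterion_deg)
    if block_error_deg < criterion_deg:      # criterion met: make it stricter
        return criterion_deg - deviation / 2
    return criterion_deg + deviation / 2     # criterion failed: relax it


def block_points(block_errors_deg, criteria_deg):
    """Points earned in one block, evaluated separately for each hemifield."""
    points = 0
    if block_errors_deg["high"] < criteria_deg["high"]:
        points += HIGH_REWARD_POINTS
    if block_errors_deg["low"] < criteria_deg["low"]:
        points += LOW_REWARD_POINTS
    return points


def points_to_euros(points):
    """One point is worth 0.01 euro."""
    return points / 100


print(update_criterion(4.0, 6.0))  # 5.0, the worked example from the text
```

In both branches the new criterion lies midway between the old criterion and the error observed in the preceding block, which is what keeps the criteria close to each participant's current performance level.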

Data analysis

The data from the left and right groups were pooled by realigning the spatial responses from the main experiment according to the reward function. This was done by multiplying the azimuthal responses in the right group by −1 and mirror-inverting them across (a) the eight loudspeaker locations and (b) the six possible locations of the visual stimulus per loudspeaker. Thus, in the realigned data sets the eccentricity of the responses was preserved, but the left hemifield always corresponded to the high-reward hemifield, and the right hemifield always corresponded to the low-reward hemifield.
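The realignment can be sketched as follows. This is a minimal illustration rather than the analysis code used in the study; the `Trial` record and its field names are hypothetical.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Trial:
    group: str                # "left" or "right": hemifield carrying the high reward
    auditory_deg: float       # speaker azimuth (negative = left of midline)
    visual_offset_deg: float  # visual position relative to the speaker
    response_deg: float       # azimuth indicated with the pointer


def realign(trial):
    """Mirror right-group trials so that negative azimuths mean 'high reward'."""
    if trial.group == "left":
        return trial  # already in the target frame
    return replace(
        trial,
        auditory_deg=-trial.auditory_deg,
        visual_offset_deg=-trial.visual_offset_deg,
        response_deg=-trial.response_deg,
    )


mirrored = realign(Trial("right", 21.6, 2.7, 25.0))
```

Because all three spatial variables are negated together, the eccentricity of each response and its position relative to the sound and the light are unchanged; only the side is swapped.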

Individual localization performance in the unisensory preexperiments and the main experiment was quantified by conventional measures of error (Schmidt & Lee, 2011). In the main experiment, errors were calculated separately for each speaker location, but across trials from all six visual locations (i.e., irrespective of the location of the concurrent visual stimulus). Since participants were rewarded for their auditory localization performance, this procedure yielded a more informative measure of auditory localization performance than did the errors for each combination of auditory and visual locations (see below). In detail, the localization error (in degrees) in each trial was derived by subtracting the spatial position of the tone (for the auditory preexperiment and the main experiment) or the position of the visual stimulus (for the visual preexperiment) from the spatial position of the response (i.e., perceived location minus veridical location). The absolute error (AE) reflects an overall measure of performance and was used to determine the individual reward thresholds in the main experiment (see above). It was calculated by averaging the absolute values of the single-trial errors (i.e., the unsigned errors) in each condition, and thus took into account both the accuracy (or bias) and the precision (or variability) of the localization responses.

To allow for separate analyses of accuracy and precision, constant errors (CE) and variable errors (VE) were additionally derived. The constant error was calculated by averaging the signed values of the single-trial errors per condition (i.e., the mean deviation from the target), and thus indicates localization accuracy (disregarding precision). In the main experiment, the constant error reflects unisensory auditory localization accuracy (or spatial bias), because averaging the signed deviation from the auditory target location across visual locations to the left and to the right of the auditory stimulus would eliminate any visual bias of auditory localization. Note that the constant error can be positive or negative, reflecting both the amount and direction of deviation. Analyses were performed on the absolute values of the individual constant errors (i.e., absolute constant errors) when appropriate, in order to allow comparisons of the amounts of deviation (irrespective of direction) between the conditions. The variable error corresponds to the standard deviation (SD) of the individual localization responses in each condition (i.e., the variability of the responses), and thus indicates localization precision (disregarding accuracy).
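The three error measures can be illustrated with a short sketch. The function name is hypothetical, and the use of the sample standard deviation (n − 1 in the denominator) for the variable error is an assumption, because the text does not specify which estimator was used.

```python
import statistics


def localization_errors(responses_deg, target_deg):
    """Return constant (CE), absolute (AE), and variable (VE) error for one condition."""
    errors = [r - target_deg for r in responses_deg]  # perceived minus veridical
    return {
        "CE": statistics.fmean(errors),                    # signed mean: accuracy (bias)
        "AE": statistics.fmean([abs(e) for e in errors]),  # unsigned mean: overall error
        "VE": statistics.stdev(responses_deg),             # response SD: precision (assumed n - 1)
    }


result = localization_errors([8.0, 10.0, 12.0], target_deg=10.0)
```

In this toy example the responses scatter symmetrically around the target, so the constant error is zero while the absolute and variable errors are not, which illustrates why the absolute error alone cannot separate bias from variability.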

The amount of the visual bias of auditory localization (i.e., the strength of the ventriloquism effect) was quantified as the difference between the mean localization responses with the visual stimulus to the right of the auditory location and with the visual stimulus to the left. Due to the subtraction, this procedure eliminated any unisensory spatial bias (i.e., constant error; see above) that would occur independent of the concurrent visual stimulation, and thus yielded an isolated measure of the ventriloquism effect. These ventriloquism scores were calculated separately for each audiovisual disparity (2.7°, 7.2°, and 9.9°) and speaker location. Theoretically, the value of the ventriloquism score could range between zero (if the visual stimuli did not exert any influence on auditory localization) and twice the audiovisual disparity (if localization relied solely on the visual stimuli), but would usually fall between these two extremes (Chen & Vroomen, 2013). Note that the variable error, as well as the absolute error, measures localization precision, and is thus affected by any variability in responses induced by the variation in the location of the visual stimuli (as reflected in the ventriloquism score). These measures, however, represent unisensory localization precision per se, and are also influenced by localization errors that are independent of the visual costimulation. Therefore, the ventriloquism score is a more sensitive measure of the specific influence of visual costimulation on auditory localization, and a difference in the ventriloquism score would not necessarily be reflected in a difference in the variable and absolute errors (and vice versa).
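The computation of the ventriloquism score for one speaker location and one disparity can be sketched as follows. The tuple layout `(auditory_deg, visual_offset_deg, response_deg)` and the function name are hypothetical, and the example responses are made-up numbers chosen only to show the arithmetic.

```python
from statistics import fmean


def ventriloquism_score(trials, speaker_deg, disparity_deg):
    """Mean response with the light to the right minus mean response with it to the left."""
    right = [r for a, dv, r in trials if a == speaker_deg and dv == disparity_deg]
    left = [r for a, dv, r in trials if a == speaker_deg and dv == -disparity_deg]
    return fmean(right) - fmean(left)


# Illustrative (not real) responses for the speaker at 14.4 deg, disparity 7.2 deg.
example_trials = [
    (14.4, 7.2, 17.0), (14.4, 7.2, 19.0),    # light to the right of the sound
    (14.4, -7.2, 13.0), (14.4, -7.2, 15.0),  # light to the left of the sound
]
score = ventriloquism_score(example_trials, speaker_deg=14.4, disparity_deg=7.2)
print(score)  # 4.0
```

A score of 4.0° at a disparity of 7.2° lies between the theoretical bounds of 0° (no visual influence) and 14.4° (complete visual capture). Any constant bias shared by both sets of trials cancels in the subtraction.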

The individual absolute errors, (absolute) constant errors, variable errors, and ventriloquism scores were statistically analyzed with analyses of variance (ANOVAs; as well as t tests, where appropriate). For factors with more than two levels, the Greenhouse–Geisser correction was applied to compensate for any violations of the assumption of sphericity, and the corrected probabilities are reported.

Results

Preexperiments

The mean localization accuracies in the unisensory preexperiments are shown in Fig. 1, depicted as mean deviations from the actual target locations (i.e., constant errors). These data were submitted to a repeated measures ANOVA with the factors Modality (auditory, visual), Hemifield (left, right), and Eccentricity (7.2°, 14.4°, 21.6°, 28.8°). Participants overestimated the eccentricity of both auditory and visual stimuli, as reflected in a significant main effect of hemifield, F(1, 18) = 8.57, p < .01. This type of spatial bias has been observed previously, in both vision (Bruno & Morrone, 2007; Lewald & Ehrenstein, 2000) and audition (Lewald & Getzmann, 2006; Maiworm, König, & Röder, 2011). No other significant main effects or interactions were obtained (all ps > .23), indicating that constant errors did not vary between target modalities and eccentricities. Moreover, an ANOVA on the absolute values of the individual constant errors yielded neither a significant main effect of hemifield, F(1, 18) = 2.54, p = .13, nor any significant interaction involving hemifield (all ps > .38), suggesting that the magnitudes of spatial bias did not differ between hemifields. Like the signed constant errors, absolute constant errors were not modulated by target modality (see Fig. 2).

Fig. 1. Response data from the unisensory preexperiments. Mean constant errors (i.e., the mean deviation of the spatial responses from the true location) are shown separately for the eight possible stimulus locations in the auditory (left panel) and visual (right panel) preexperiments. Negative values indicate constant errors to the left of the true stimulus location, and positive values indicate constant errors to the right. Error bars denote the SEMs.

Fig. 2. Localization errors in the unisensory preexperiments. Absolute constant errors (CE), variable errors (VE), and absolute errors (AE) are shown separately for the auditory and visual preexperiments, averaged across all eight stimulus locations. Error bars denote the SEMs. * p < .05, ns = not significant.

Unlike the constant error (i.e., accuracy), the variable error (i.e., precision) of the localization responses, measured as the individual standard deviation of the localization responses, indicated better performance in vision than in audition (see Fig. 2), t(18) = 4.01, p < .01. This reflects the well-known fact that the reliability of the visual system is higher than that of the auditory system in spatial tasks (e.g., Alais & Burr, 2004). The absolute error, which represents a combined measure of both accuracy and precision, did not differ between audition and vision (see Fig. 2), t(18) = 1.26, p = .22. Finally, the response times to auditory stimuli (M = 1,866 ms, SEM = 224 ms) and visual stimuli (M = 1,809 ms, SEM = 248 ms) were in a similar range and did not differ significantly, t < 1.

With regard to the main experiment, the results from the preexperiments suggest that (a) constant errors similarly affected auditory and visual localization, and hence should not influence the magnitude of the ventriloquism effect; (b) absolute constant errors did not differ between the left and right hemifields, thus permitting data realignment according to the spatial reward function (see the “Method” section); and (c) variable errors were lower for visual than for auditory targets, which is a necessary precondition for the occurrence of the ventriloquism effect (Alais & Burr, 2004).

Main experiment

Mean localization responses in the main experiment are shown in Fig. 3 for each audiovisual stimulus combination, depicted as mean deviations from the auditory target locations (i.e., constant errors). Note that the individual data sets were realigned, so that the left hemifield always corresponded to the high-reward hemifield, and the right hemifield always corresponded to the low-reward hemifield (see the “Method” section). As in the preexperiments, participants overestimated the eccentricity of the auditory stimuli, and this constant error (averaged across visual locations) appeared to be equally pronounced in both the high- and low-reward hemifields (see the “Localization Performance” subsection below). Moreover, the localization responses were systematically shifted, depending on the position of the concurrent visual stimuli (to the left or right of the auditory stimulus), reflecting the ventriloquism effect. This visual modulation of auditory localization appeared to be stronger in the low-reward hemifield than in the high-reward hemifield (see the “Ventriloquism Effect” subsection below).

Fig. 3. Response data from the main experiment. Mean constant errors (i.e., the mean deviations of the spatial responses from the true locations) are shown separately for the eight possible auditory locations and the six visual locations, relative to each loudspeaker location. The data have been realigned so that the left hemifield represents the high-reward hemifield, and the right hemifield represents the low-reward hemifield. Negative values indicate constant errors to the left of the true stimulus location, and positive values indicate constant errors to the right. Speaker x|y refers to the combined response data from speaker x in the left group and speaker y in the right group (in ascending order: −28.8°, −21.6°, −14.4°, −7.2°, 7.2°, 14.4°, 21.6°, and 28.8°). Error bars denote the SEMs. The data from the shaded loudspeaker locations were discarded from the statistical analysis of the ventriloquism effect (see the text for details).

Note that the ventriloquism effect was greater in the conditions in which the visual stimulus was presented at midline or in the hemifield opposite to the one in which the sound originated (see Speaker Positions 4|5 and 5|4 in Fig. 3; for similar findings, see also Maiworm et al., 2012). This affected both the medium and large audiovisual disparities (7.2° and 9.9°), but not the small disparity (2.7°). Moreover, in particular for the large audiovisual disparity (9.9°), mean localization responses were close to midline, calling into question whether the perceived origin of these stimuli fell in the high- or the low-reward hemifield. For these reasons, the data from the two central loudspeaker locations (±7.2°) were excluded from further analyses of the ventriloquism effect (see Maiworm et al., 2012, for a similar approach). Note that analyses of localization errors and response times included the data from all locations, because the online adjustment of the individual thresholds and the disbursement of rewards were based on the responses from all four loudspeaker locations per hemifield. However, all reported analyses yielded the same pattern of results when the two central locations were excluded.

Localization performance

Participants received a low reward (50 points) and/or a high reward (450 points) if their absolute localization error in the respective hemifield fell below an individually adjusted threshold. Thus, the maximum obtainable score across the four experimental blocks was 2,000 points. Three out of 19 participants did not obtain any points; the highest total score was 1,500 points (Mdn = 550 points). Across the four experimental blocks, the frequencies of low- and high-reward occurrences were comparable: Low rewards were obtained 29 times, and high rewards were obtained 24 times. The numbers of participants who obtained a low and/or a high reward in each block are shown in Fig. 4.

As can be seen in Fig. 4, the frequency of reward occurrences increased from the first to the second block and then stabilized across the remaining blocks. This effect was mainly driven by the individual adjustment of the localization performance thresholds (see the leftmost panel in Fig. 5), which depended on the performance in the preceding block (see the “Method” section). Actual localization performance (i.e., absolute errors) was unchanged across the four experimental blocks and did not differ between the high- and low-reward hemifields (see Fig. 5), as indicated by a two-way repeated measures ANOVA with the factors Hemifield (high reward, low reward) and Block (1, 2, 3, 4), all Fs < 1.

Reward thresholds and localization errors in the main experiment. The leftmost panel shows the means of the individual thresholds of the absolute localization errors per block. Separate individual thresholds had to be met for obtaining rewards in the high- and low-reward hemifields. The mean absolute errors are shown in the adjacent panel, and the panels to the right show the absolute constant errors and variable errors. All values were averaged across all four speaker locations per hemifield. Error bars denote the SEMs

Since the absolute error represents a combined measure of both accuracy and precision, separate ANOVAs were performed to test whether localization accuracy (i.e., absolute constant errors) or precision (i.e., variable errors) were selectively affected by the reward manipulation. These analyses revealed that neither constant nor variable errors differed between the high- and low-reward hemifields; that is, neither the main effects of hemifield nor the interactions between hemifield and block were significant (all ps > .09). However, variable errors decreased (i.e., precision increased) over the four experimental blocks, irrespective of the reward manipulation (see Fig. 5), as indicated by a significant main effect of block, F(3, 54) = 7.11, p < .01. By contrast, constant errors (i.e., accuracy) were unchanged between blocks (F < 1).
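The distinction between the three error measures can be made concrete with a short sketch. It assumes the conventional definitions (constant error as the mean signed deviation from the target, variable error as the standard deviation of the responses, absolute error as the mean unsigned deviation); whether the original analysis used the population or the sample standard deviation is not stated, so the population form used here is an assumption, and the response values are invented.

```python
import math
import statistics


def localization_errors(responses, target):
    """Return (constant_error, variable_error, absolute_error) in degrees.

    constant_error: mean signed deviation from the target (accuracy)
    variable_error: SD of the responses around their own mean (precision);
                    population SD is an assumption of this sketch
    absolute_error: mean unsigned deviation (mixes accuracy and precision)
    """
    deviations = [r - target for r in responses]
    constant_error = statistics.fmean(deviations)
    variable_error = statistics.pstdev(responses)
    absolute_error = statistics.fmean([abs(d) for d in deviations])
    return constant_error, variable_error, absolute_error


target = 14.4  # one of the loudspeaker azimuths, in degrees

# Hypothetical unbiased but scattered responses.
ce_a, ve_a, ae_a = localization_errors([12.4, 14.4, 16.4, 14.4], target)

# The same scatter shifted 1 deg to the right: a bias is added.
ce_b, ve_b, ae_b = localization_errors([13.4, 15.4, 17.4, 15.4], target)
```

In the first hypothetical data set the constant error is zero while the absolute error is not, which is why the absolute error alone cannot separate accuracy from precision. The second set has the same variable error but a nonzero constant error and a larger absolute error.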

To test whether the reward manipulation had an overt effect on the participants’ behavior, we additionally analyzed the response times in the high- and low-reward hemifields over the four experimental blocks. When the auditory stimulus originated in the hemifield associated with the high reward, participants took more time to respond (M = 2,136 ms, SEM = 271 ms) than in the low-reward hemifield (M = 2,069 ms, SEM = 274 ms), as indicated by a significant main effect of hemifield, F(1, 18) = 8.57, p < .01. Neither the main effect of block, F(3, 54) = 3.51, p = .06, nor the interaction between hemifield and block, F(3, 54) = 1.10, p = .36, was significant. This finding suggests that participants complied with the task instructions and prepared responses associated with a high reward more thoroughly, even though the longer response times in the high-reward hemifield were not reflected in a higher accuracy or precision of their localization responses.

Ventriloquism effect

Participants detected 95 % (SEM = 2 %) of the deviant visual stimuli (fixation light flashing) in the main experiment, verifying that the task-irrelevant visual stimuli were indeed attended and that participants did not close their eyes. The amount of the visual bias of auditory localization (i.e., the ventriloquism effect) was calculated separately for each level of audiovisual spatial disparity (2.7°, 7.2°, and 9.9°) by subtracting the participant’s mean localization response with the visual stimulus to the left of the auditory stimulus from the localization response with the visual stimulus to the right. Theoretically, the resulting values could range between zero (if auditory localization were not affected by the visual stimuli) and twice the audiovisual disparity (if auditory localization were to follow the visual location).
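The difference score and its theoretical range can be sketched as follows. The function names and all response values are hypothetical; the sketch only restates the subtraction described above and checks the two limiting cases.

```python
from statistics import fmean


def ventriloquism_effect(responses_visual_right, responses_visual_left):
    """Mean response with the light to the right minus mean response
    with the light to the left of the sound (degrees)."""
    return fmean(responses_visual_right) - fmean(responses_visual_left)


def theoretical_range(disparity_deg):
    """Lower bound: no visual bias. Upper bound: responses follow the light."""
    return 0.0, 2.0 * disparity_deg


sound = 14.4      # loudspeaker azimuth in degrees
disparity = 7.2   # medium audiovisual disparity in degrees

# Limiting case 1: localization ignores the light entirely.
no_bias = ventriloquism_effect([sound, sound], [sound, sound])

# Limiting case 2: localization follows the light completely.
full_capture = ventriloquism_effect([sound + disparity, sound + disparity],
                                    [sound - disparity, sound - disparity])

# Hypothetical intermediate case: responses shift by 1.5 deg toward the light.
partial = ventriloquism_effect([sound + 1.5, sound + 1.5],
                               [sound - 1.5, sound - 1.5])
```

The two limiting cases recover the bounds returned by `theoretical_range`, and any partial bias falls between them.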

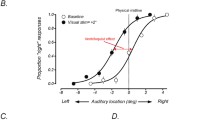

In order to test the hypothesis that the reward manipulation had an influence on the size of the ventriloquism effect, we pooled the ventriloquism scores across the three speaker positions per hemifield (excluding the most central location; see above) and submitted the resulting scores to a repeated measures ANOVA with the factors Hemifield (high reward, low reward) and Disparity (2.7°, 7.2°, 9.9°). This analysis revealed a significant main effect of disparity, F(2, 36) = 5.19, p = .03, as well as a significant interaction between hemifield and disparity, F(2, 36) = 3.83, p = .03. Figure 6 shows the means of the ventriloquism scores for all factor levels. The main effect of disparity reflects the overall increase of the ventriloquism effect with increasing audiovisual disparity. More importantly, the interaction effect between hemifield and disparity indicates that the magnitude of the ventriloquism effect was reduced in the high-reward hemifield as compared to the low-reward hemifield, particularly for the small (2.7°) and the large (9.9°) audiovisual disparities (see Fig. 6). Separate Bonferroni–Holm-corrected one-sample t tests showed that the ventriloquism effect was significantly above zero for all three audiovisual disparities in the low-reward hemifield (all ps < .05). In the high-reward hemifield, however, a significant ventriloquism effect was only obtained for the medium audiovisual disparity (7.2°), t(18) = 3.13, p < .05, but not for the small disparity (2.7°), t(18) = 1.77, p > .05, or the large disparity (9.9°), t(18) = 2.05, p > .05.
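For readers unfamiliar with the Bonferroni–Holm step-down procedure applied to the three tests per hemifield, a minimal sketch is given here. The function name is hypothetical, and the p values in the example are made up for illustration; they are not the uncorrected p values of the present study.

```python
def holm_adjust(p_values):
    """Return Bonferroni-Holm adjusted p values in the original order.

    The smallest p value is multiplied by m, the next by m - 1, and so on.
    Adjusted values are capped at 1 and forced to be non-decreasing along
    the sorted order, so that a later test cannot be rejected when an
    earlier one was not.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        candidate = min(1.0, (m - rank) * p_values[idx])
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted


# Made-up uncorrected p values for three disparities (illustration only).
raw = [0.01, 0.04, 0.03]
adj = holm_adjust(raw)
significant = [p < 0.05 for p in adj]
```

With these invented inputs, all three uncorrected values lie below .05, but only the smallest survives the correction. This illustrates how a test can be nominally significant and still be reported as p > .05 after Bonferroni–Holm adjustment.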

Ventriloquism effects in the high- and low-reward hemifields. The strength of the ventriloquism effect (i.e., the visual bias of auditory localization) is shown as a function of audiovisual spatial disparity and separately for the high- and low-reward hemifields (averaged across the three peripheral loudspeaker locations in each hemifield). Values were derived by subtracting the mean localization response with the visual stimulus to the left of the auditory stimulus from the mean localization response with the visual stimulus to the right. Error bars denote the SEMs. * p < .05 (one-sample t test against 0, Bonferroni–Holm corrected)

Unlike the ventriloquism effect, the response times did not differ between the three audiovisual disparities, as indicated by an ANOVA with the factors Hemifield (high reward, low reward) and Disparity (2.7°, 7.2°, 9.9°). This analysis yielded a significant main effect of hemifield, F(1, 18) = 12.15, p < .01, but neither the main effect of disparity nor the interaction approached significance (both Fs < 1). Thus, it appears unlikely that the observed effect of reward expectancy on the ventriloquism effect was confounded by the longer response times in the high- than in the low-reward hemifield.

We additionally calculated the ventriloquism effect scores separately for each block, to test whether the effect of reward manipulation on the strength of the ventriloquism effect changed over the course of the experiment. The resulting three-way repeated measures ANOVA again yielded a significant main effect of disparity, F(2, 36) = 5.01, p = .03, as well as a significant interaction between hemifield and disparity, F(2, 36) = 3.67, p = .04. No other significant main effects or interactions involving block were obtained (all ps > .22), suggesting that the effects of reward expectancy on the ventriloquism effect did not differ between the four experimental blocks.

Discussion

In the present study, we investigated the effect of a monetary incentive on the ventriloquism effect, an audiovisual illusion commonly thought to arise from an automatic integration of conflicting cross-modal input. Participants were rewarded for precise and accurate auditory localization performance. Thus, the visual bias of auditory localization induced by the ventriloquist situation was pitted against the participants’ motivational goal of maximizing their reward. Crucially, the amounts of reward differed between the two hemifields, and this manipulation modulated the strength of the ventriloquism effect: The ventriloquism effect was weaker for stimuli presented in the high-reward hemifield than for those presented in the low-reward hemifield. This finding suggests that reward expectation alters audiovisual spatial integration processes, and thus puts in question the full automaticity of audiovisual binding as assessed in the ventriloquism effect.

The overall magnitude of the ventriloquism effect was relatively small in the present study (around 20 % of the audiovisual disparity), as compared to previous studies, where auditory localization shifts of above 50 % had been observed (e.g., Hairston et al., 2003). This might suggest that reward expectation affected the ventriloquism effect in both the high- and low-reward hemifields, although to a smaller degree in the low-reward hemifield. Thus, the relatively moderate difference in the size of the ventriloquism effect that we observed between the high- and low-reward hemifields might underestimate the full magnitude of the reward expectation-related effects on audiovisual spatial integration—that is, the reduction of the ventriloquism effect relative to a no-reward baseline condition.

Previous studies in humans have shown that unisensory discrimination performance can be facilitated by monetary incentives, an effect that depended on dopaminergic influences from the reward system on primary sensory cortex (Pleger et al., 2008; Pleger et al., 2009; see also Pantoja et al., 2007). Similarly, our reward manipulation might have facilitated unisensory auditory processing. Such an increase in the reliability of the auditory input should have reduced the ventriloquism effect (Alais & Burr, 2004), because the auditory input would receive a higher weight in multisensory integration processes (Ernst & Bülthoff, 2004). Contrary to this assumption, however, we did not observe significant differences in auditory localization performance between the high- and low-reward hemifields. Whether this was due to a ceiling effect cannot be decided on the basis of our data. The lack of a difference in auditory localization performance between the reward-level conditions, however, suggests that our reward manipulation specifically affected the process of audiovisual binding.
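The prediction that a more reliable auditory input should reduce the ventriloquism effect follows from reliability-weighted cue combination, which can be sketched as follows. This is a minimal illustration of the standard maximum likelihood rule for two Gaussian cues, not a model fitted to the present data; the function name and all standard deviations are hypothetical.

```python
def fuse(aud_loc, aud_sd, vis_loc, vis_sd):
    """Reliability-weighted average of an auditory and a visual estimate.

    Reliability is the inverse variance of each cue. Returns the fused
    location and the weight given to the auditory cue.
    """
    rel_a = 1.0 / aud_sd ** 2
    rel_v = 1.0 / vis_sd ** 2
    w_a = rel_a / (rel_a + rel_v)
    return w_a * aud_loc + (1.0 - w_a) * vis_loc, w_a


aud_loc, vis_loc = 0.0, 7.2  # sound at 0 deg, light 7.2 deg to the right
vis_sd = 2.0                 # hypothetical visual localization noise (deg)

# Hypothetical noisy versus sharpened auditory localization.
shift_noisy, w_noisy = fuse(aud_loc, 8.0, vis_loc, vis_sd)
shift_sharp, w_sharp = fuse(aud_loc, 4.0, vis_loc, vis_sd)
```

Halving the assumed auditory noise raises the auditory weight and pulls the fused estimate back toward the sound. A reward-induced gain in auditory reliability would therefore be expected to show up both as a smaller visual bias and as better unisensory localization, and the latter was not observed here.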

According to causal inference models of multisensory processing (Körding et al., 2007; Shams & Beierholm, 2010), the brain infers the probability that two sensory signals have a common cause, and this causal inference has been associated with the process of cross-modal binding (Chen & Vroomen, 2013). Thus, causal inference models consider two hypotheses: either that the auditory and visual cues have one common cause (i.e., originate from the same event), in which case they should be integrated, or that they have independent causes, in which case they should be segregated (i.e., localization should follow the unisensory auditory estimate). Usually, there is uncertainty about the causal structure of the sensory signals; that is, the brain does not know whether or not two sensory inputs originated from the same event. In this case, the optimal estimate of the sound location is a weighted average of the estimates derived under the assumption of one common cause (i.e., cue integration) and the assumption of independent causes (i.e., cue segregation), each weighted by their respective probability (Shams & Beierholm, 2010). Our finding of a reduced ventriloquism effect under high reward might suggest that this weighting is subject to top-down influences. More specifically, the weighting in the high-reward hemifield might have been biased in favor of the assumption that the two sensory signals were segregated, which would have been a reasonable strategy, given that the visual stimuli were task-irrelevant in the present study and rewards were dependent on auditory localization performance only. This might suggest that the process of cue integration is automatic and solely determined by the perceptual reliabilities of the involved sensory signals, whereas the process of cross-modal binding (i.e., causal inference) is modifiable by state-dependent factors such as motivation. This idea is in line with the observation that the degree of cross-modal binding can be strongly affected by the participant’s prior expectation of a common cause (Helbig & Ernst, 2007).
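The weighted average described above can be written out in a few lines. In full causal inference models the probability of a common cause is itself a posterior computed from the sensory evidence and a prior; in this simplified sketch it is treated as a free parameter so that the effect of a top-down bias toward segregation can be seen directly. The function name and all numerical values are hypothetical.

```python
def model_average(integrated_est, segregated_est, p_common):
    """Average the two candidate estimates of sound location.

    integrated_est: estimate assuming one common cause (cue integration)
    segregated_est: estimate assuming independent causes (auditory only)
    p_common:       probability assigned to a common cause, in [0, 1]
    """
    if not 0.0 <= p_common <= 1.0:
        raise ValueError("p_common must lie between 0 and 1")
    return p_common * integrated_est + (1.0 - p_common) * segregated_est


segregated = 0.0  # auditory-only estimate: sound at 0 deg
integrated = 6.0  # hypothetical fused estimate, pulled toward the light

# Hypothetical probabilities of a common cause in the two hemifields.
bias_low_reward = model_average(integrated, segregated, p_common=0.5)
bias_high_reward = model_average(integrated, segregated, p_common=0.2)
```

In this sketch the integrated estimate is identical in both cases, so the cue weights are untouched, yet the localization shift is smaller when less probability is assigned to a common cause. This corresponds to the interpretation offered above, in which motivation acts on the binding stage rather than on the integration stage.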

The ventriloquism effect most likely emerges at a relatively late processing stage, around 260 ms poststimulus (Bonath et al., 2007; Bruns & Röder, 2010), presumably via feedback connections from posterior parietal cortex (Renzi et al., 2013) to secondary auditory cortex (Bonath et al., 2007). Thus, it is conceivable that audiovisual binding in the ventriloquist situation is subject to modulatory top-down influences. Possibly, top-down influences on the ventriloquism effect come into play only when motivational (as in the present study) or emotional (as in Maiworm et al., 2012) factors establish behavioral significance. This assumption is supported by a recent study on the sound-induced flash illusion, in which a single light flash accompanied by two auditory beeps tends to be perceived as two flashes. The occurrence of this illusion was resistant to feedback training, except when performance was linked to a monetary reward, thereby increasing the motivational significance of the task (Rosenthal, Shimojo, & Shams, 2009).

The effect of reward expectancy on audiovisual binding in the present study resembles the recently reported reduction of the ventriloquism effect after an emotional learning phase in which threatening auditory stimuli were presented (Maiworm et al., 2012). As in the present study, unisensory auditory localization performance was not affected in Maiworm et al.’s (2012) study, suggesting that emotional learning selectively changed the process of audiovisual binding. This congruence of the result patterns raises the question of whether the influences of the emotion and reward systems on audiovisual binding share the same underlying mechanisms. This assumption appears plausible, given that both manipulations established the sustained affective significance of the auditory modality, either by stimulus-driven emotional learning, in the study by Maiworm et al. (2012), or by top-down directed motivation to receive a reward, in the present study. In fact, emotion and motivation are closely linked concepts, due to their interactive roles in determining behavior (Pessoa, 2009).

Emotional stimuli and reward expectancy activate an overlapping network of brain regions including the striatum, amygdala, orbitofrontal cortex, dorsolateral prefrontal cortex, and anterior cingulate (Pessoa, 2009; Rolls & Grabenhorst, 2008; Salzman & Fusi, 2010; Schultz, 2000, 2006; Watanabe, 2007). Prefrontal areas (Romanski, 2007; Stein & Stanford, 2008) including the orbitofrontal cortex (Kringelbach, 2005) have been associated with the processing of multisensory stimuli, as well, indicating a potential neural substrate for influences of the emotion and reward systems on cross-modal binding. This is in line with the assumption that both emotion and motivation influence cognitive control mechanisms via connections from the orbitofrontal cortex to the anterior cingulate (Pessoa, 2009), which has been implicated in conflict monitoring (Botvinick, Cohen, & Carter, 2004).

Incongruent cross-modal stimuli indeed seem to activate the anterior cingulate, and this activation has been associated with increased attention to the goal-relevant sensory modality (Weissman, Warner, & Woldorff, 2004; Zimmer, Roberts, Harshbarger, & Woldorff, 2010). Moreover, it has been shown that attentional control is enhanced by monetary incentives (Engelmann et al., 2009; Small et al., 2005) and emotional processes (Vuilleumier, 2005). This raises the question of whether the effect of monetary incentives on the strength of the ventriloquism effect observed in the present study was mediated by attention. Although reward and attention are distinct concepts, it is difficult to attribute behavioral effects to one or the other in situations with sustained reward expectancies (Awh, Belopolsky, & Theeuwes, 2012; Maunsell, 2004), as in the present study.

Previous studies have suggested that the ventriloquism effect is not modulated by attention (Chen & Vroomen, 2013). Importantly, however, these studies manipulated spatial attention toward or away from the location of the visual distractor (Bertelson, Vroomen, et al., 2000; Vroomen et al., 2001). By contrast, the reward manipulation in the present study might have rather directed attention to the auditory modality, and in particular to auditory stimuli in the hemifield associated with a high reward, but not to a specific spatial location. Under such circumstances, attentional selection can indeed suppress the processing of stimuli in a task-irrelevant sensory modality (Johnson & Zatorre, 2005; Laurienti et al., 2002; Santangelo & Macaluso, 2012). Behaviorally, reduced cross-modal binding has been observed when attention was directed to only one sensory modality (Mozolic, Hugenschmidt, Pfeiffer, & Laurienti, 2008) or to a secondary task (Alsius, Navarra, Campbell, & Soto-Faraco, 2005; see also Eramudugolla et al., 2011).

Interestingly, in a study by Mozolic et al. (2008) a reduction of cross-modal binding was observed mainly for congruent cross-modal stimuli; that is, attending to one sensory modality specifically reduced the performance facilitation usually observed with congruent cross-modal stimuli, rather than improving performance with incongruent stimuli (see Padmala & Pessoa, 2011, for similar findings in a unisensory visual task). This might explain why in the present study the ventriloquism effect was selectively reduced for both small (2.7°) and large (9.9°) audiovisual spatial disparities, but not for intermediate disparities (7.2°). On the one hand, the small audiovisual disparity of only 2.7° was close to actual spatial congruence of the two stimuli. Previous studies have shown that the spatial integration of congruent and incongruent audiovisual stimuli depends on independent mechanisms (Bertini, Leo, Avenanti, & Làdavas, 2010; Leo, Bolognini, Passamonti, Stein, & Làdavas, 2008). Hence, the small spatial disparity might have been processed differently from the two larger disparities. Considering the difference between congruent and incongruent cross-modal stimuli in the study by Mozolic et al., a reduction of cross-modal binding would then be expected specifically for the small spatial disparity (i.e., “congruent” audiovisual stimuli), but not for the two larger disparities (i.e., incongruent audiovisual stimuli).

On the other hand, however, participants might have detected the audiovisual spatial incongruence with the large disparity of 9.9°, particularly under higher attentional control in the high-reward hemifield. It is well known that the ventriloquism effect is reduced or absent if participants consciously detect that the auditory and visual stimuli originate from different locations (Hairston et al., 2003). Moreover, it has been shown that distractor stimuli that are presented suprathreshold rather than subthreshold are inhibited more effectively, and that this suppression depends on prefrontal cortex activity (Tsushima, Sasaki, & Watanabe, 2006). Hence, increased prefrontal control for stimuli associated with a high reward might have rendered the detection of the large spatial disparity more likely, leading to more effective inhibition of the conflicting visual input. By contrast, the intermediate audiovisual disparity of 7.2° might have been too small to violate the participants’ unity assumption, and thus have escaped inhibition by prefrontal mechanisms, similar to the absence of cross-modal inhibition for incongruent stimuli in the study by Mozolic et al. (2008).

An open question is whether valence (positive or negative) modulates the effect of motivationally significant stimuli on cross-modal binding. In the study by Maiworm et al. (2012), emotional learning was induced with aversive auditory stimuli (fearful voices) played from a specific location. The resulting reduction of the ventriloquism effect, however, was spatially unspecific, and generalized across both hemifields. By contrast, in the present study a monetary reward led to a spatially specific reduction of the ventriloquism effect in the hemifield that was associated with a high reward. Previous studies had shown that positive and negative reinforcers exert differential effects on unisensory discrimination performance (Laufer & Paz, 2012; Resnik, Sobel, & Paz, 2011; Schechtman et al., 2010): Although negative reinforcers decreased auditory frequency discrimination performance, positive reinforcers rather increased performance. Similarly, it could be hypothesized that the effect of negative reinforcers on cross-modal binding generalizes to untrained locations, whereas the effect of positive reinforcers is more restricted to the stimulus properties associated with the reward—in our case, mainly to one hemifield.

In conclusion, we observed a reduction of the ventriloquism effect for auditory stimuli that were associated with a high rather than a low monetary reward, even though motivational significance did not change task-relevant unisensory localization performance. Thus, our results show that reward expectations can specifically influence cross-modal binding processes, possibly mediated via prefrontal control mechanisms. Top-down influences on multisensory integration might therefore be more prevalent than has previously been thought (Talsma et al., 2010), which calls for a more careful consideration of motivational and emotional factors in theories of multisensory integration.

References

Alais, D., & Burr, D. (2004). The ventriloquist effect results from near-optimal bimodal integration. Current Biology, 14, 257–262. doi:10.1016/j.cub.2004.01.029

Alsius, A., Navarra, J., Campbell, R., & Soto-Faraco, S. (2005). Audiovisual integration of speech falters under high attention demands. Current Biology, 15, 839–843.

Awh, E., Belopolsky, A. V., & Theeuwes, J. (2012). Top-down versus bottom-up attentional control: A failed theoretical dichotomy. Trends in Cognitive Sciences, 16, 437–443. doi:10.1016/j.tics.2012.06.010

Bertelson, P., & Aschersleben, G. (1998). Automatic visual bias of perceived auditory location. Psychonomic Bulletin & Review, 5, 482–489. doi:10.3758/BF03208826

Bertelson, P., & de Gelder, B. (2004). The psychology of multimodal perception. In C. Spence & J. Driver (Eds.), Crossmodal space and crossmodal attention (pp. 141–177). Oxford, UK: Oxford University Press.

Bertelson, P., Pavani, F., Làdavas, E., Vroomen, J., & de Gelder, B. (2000a). Ventriloquism in patients with unilateral visual neglect. Neuropsychologia, 38, 1634–1642. doi:10.1016/S0028-3932(00)00067-1

Bertelson, P., Vroomen, J., de Gelder, B., & Driver, J. (2000b). The ventriloquist effect does not depend on the direction of deliberate visual attention. Perception & Psychophysics, 62, 321–332. doi:10.3758/BF03205552

Bertini, C., Leo, F., Avenanti, A., & Làdavas, E. (2010). Independent mechanisms for ventriloquism and multisensory integration as revealed by theta-burst stimulation. European Journal of Neuroscience, 31, 1791–1799.

Bonath, B., Noesselt, T., Martinez, A., Mishra, J., Schwiecker, K., Heinze, H.-J., & Hillyard, S. A. (2007). Neural basis of the ventriloquist illusion. Current Biology, 17, 1697–1703.

Botvinick, M. M., Cohen, J. D., & Carter, C. S. (2004). Conflict monitoring and anterior cingulate cortex: An update. Trends in Cognitive Sciences, 8, 539–546. doi:10.1016/j.tics.2004.10.003

Bruno, A., & Morrone, M. C. (2007). Influence of saccadic adaptation on spatial localization: Comparison of verbal and pointing reports. Journal of Vision, 7(5), 16.1–13. doi:10.1167/7.5.16

Bruns, P., & Röder, B. (2010). Tactile capture of auditory localization: An event-related potential study. European Journal of Neuroscience, 31, 1844–1857.

Chen, L., & Vroomen, J. (2013). Intersensory binding across space and time: A tutorial review. Attention, Perception, & Psychophysics, 75, 790–811. doi:10.3758/s13414-013-0475-4

Colin, C., Radeau, M., Soquet, A., Dachy, B., & Deltenre, P. (2002). Electrophysiology of spatial scene analysis: The mismatch negativity (MMN) is sensitive to the ventriloquism illusion. Clinical Neurophysiology, 113, 507–518.

David, S. V., Fritz, J. B., & Shamma, S. A. (2012). Task reward structure shapes rapid receptive field plasticity in auditory cortex. Proceedings of the National Academy of Sciences, 109, 2144–2149.

Della Libera, C., & Chelazzi, L. (2006). Visual selective attention and the effects of monetary rewards. Psychological Science, 17, 222–227. doi:10.1111/j.1467-9280.2006.01689.x

Engelmann, J. B., Damaraju, E., Padmala, S., & Pessoa, L. (2009). Combined effects of attention and motivation on visual task performance: Transient and sustained motivational effects. Frontiers in Human Neuroscience, 3(4), 1–17. doi:10.3389/neuro.09.004.2009

Eramudugolla, R., Kamke, M. R., Soto-Faraco, S., & Mattingley, J. B. (2011). Perceptual load influences auditory space perception in the ventriloquist aftereffect. Cognition, 118, 62–74.

Ernst, M. O., & Bülthoff, H. H. (2004). Merging the senses into a robust percept. Trends in Cognitive Sciences, 8, 162–169. doi:10.1016/j.tics.2004.02.002

Fiser, J., Berkes, P., Orbán, G., & Lengyel, M. (2010). Statistically optimal perception and learning: From behavior to neural representations. Trends in Cognitive Sciences, 14, 119–130. doi:10.1016/j.tics.2010.01.003

Hairston, W. D., Wallace, M. T., Vaughan, J. W., Stein, B. E., Norris, J. L., & Schirillo, J. A. (2003). Visual localization ability influences cross-modal bias. Journal of Cognitive Neuroscience, 15, 20–29.

Helbig, H. B., & Ernst, M. O. (2007). Knowledge about a common source can promote visual–haptic integration. Perception, 36, 1523–1533.

Johnson, J. A., & Zatorre, R. J. (2005). Attention to simultaneous unrelated auditory and visual events: Behavioral and neural correlates. Cerebral Cortex, 15, 1609–1620.

Körding, K. P., Beierholm, U., Ma, W. J., Quartz, S., Tenenbaum, J. B., & Shams, L. (2007). Causal inference in multisensory perception. PLoS ONE, 2, e943. doi:10.1371/journal.pone.0000943

Krawczyk, D. C., Gazzaley, A., & D’Esposito, M. (2007). Reward modulation of prefrontal and visual association cortex during an incentive working memory task. Brain Research, 1141, 168–177. doi:10.1016/j.brainres.2007.01.052

Kringelbach, M. L. (2005). The human orbitofrontal cortex: Linking reward to hedonic experience. Nature Reviews Neuroscience, 6, 691–702.

Laufer, O., & Paz, R. (2012). Monetary loss alters perceptual thresholds and compromises future decisions via amygdala and prefrontal networks. Journal of Neuroscience, 32, 6304–6311.

Laurienti, P. J., Burdette, J. H., Wallace, M. T., Yen, Y.-F., Field, A. S., & Stein, B. E. (2002). Deactivation of sensory-specific cortex by cross-modal stimuli. Journal of Cognitive Neuroscience, 14, 420–429.

Leo, F., Bolognini, N., Passamonti, C., Stein, B. E., & Làdavas, E. (2008). Cross-modal localization in hemianopia: New insights on multisensory integration. Brain, 131, 855–865.

Lewald, J., & Ehrenstein, W. H. (2000). Visual and proprioceptive shifts in perceived egocentric direction induced by eye-position. Vision Research, 40, 539–547.

Lewald, J., & Getzmann, S. (2006). Horizontal and vertical effects of eye-position on sound localization. Hearing Research, 213, 99–106.

Maiworm, M., Bellantoni, M., Spence, C., & Röder, B. (2012). When emotional valence modulates audiovisual integration. Attention, Perception, & Psychophysics, 74, 1302–1311. doi:10.3758/s13414-012-0310-3

Maiworm, M., König, P., & Röder, B. (2011). Integrative processing of perception and reward in an auditory localization paradigm. Experimental Psychology, 58, 217–226.

Maunsell, J. H. R. (2004). Neuronal representations of cognitive state: Reward or attention? Trends in Cognitive Sciences, 8, 261–265. doi:10.1016/j.tics.2004.04.003

Miyashita, Y., & Hayashi, T. (2000). Neural representation of visual objects: Encoding and top-down activation. Current Opinion in Neurobiology, 10, 187–194.

Mozolic, J. L., Hugenschmidt, C. E., Pfeiffer, A. M., & Laurienti, P. J. (2008). Modality-specific selective attention attenuates multisensory integration. Experimental Brain Research, 184, 39–52.

Padmala, S., & Pessoa, L. (2011). Reward reduces conflict by enhancing attentional control and biasing visual cortical processing. Journal of Cognitive Neuroscience, 23, 3419–3432. doi:10.1162/jocn_a_00011

Pantoja, J., Ribeiro, S., Wiest, M., Soares, E., Gervasoni, D., Lemos, N. A. M., & Nicolelis, M. A. L. (2007). Neuronal activity in the primary somatosensory thalamocortical loop is modulated by reward contingency during tactile discrimination. Journal of Neuroscience, 27, 10608–10620.

Pessoa, L. (2009). How do emotion and motivation direct executive control? Trends in Cognitive Sciences, 13, 160–166. doi:10.1016/j.tics.2009.01.006

Pleger, B., Blankenburg, F., Ruff, C. C., Driver, J., & Dolan, R. J. (2008). Reward facilitates tactile judgments and modulates hemodynamic responses in human primary somatosensory cortex. Journal of Neuroscience, 28, 8161–8168.

Pleger, B., Ruff, C. C., Blankenburg, F., Klöppel, S., Driver, J., & Dolan, R. J. (2009). Influence of dopaminergically mediated reward on somatosensory decision-making. PLoS Biology, 7, e1000164. doi:10.1371/journal.pbio.1000164

Polley, D. B., Steinberg, E. E., & Merzenich, M. M. (2006). Perceptual learning directs auditory cortical map reorganization through top-down influences. Journal of Neuroscience, 26, 4970–4982.

Recanzone, G. H. (2009). Interactions of auditory and visual stimuli in space and time. Hearing Research, 258, 89–99.

Renzi, C., Bruns, P., Heise, K.-F., Zimerman, M., Feldheim, J.-F., Hummel, F. C., & Röder, B. (2013). Spatial remapping in the audio-tactile ventriloquism effect: A TMS investigation on the role of the ventral intraparietal area. Journal of Cognitive Neuroscience, 25, 790–801.

Resnik, J., Sobel, N., & Paz, R. (2011). Auditory aversive learning increases discrimination thresholds. Nature Neuroscience, 14, 791–796.

Rolls, E. T., & Grabenhorst, F. (2008). The orbitofrontal cortex and beyond: From affect to decision-making. Progress in Neurobiology, 86, 216–244.

Romanski, L. M. (2007). Representation and integration of auditory and visual stimuli in the primate ventral lateral prefrontal cortex. Cerebral Cortex, 17, i61–i69.

Rosenthal, O., Shimojo, S., & Shams, L. (2009). Sound-induced flash illusion is resistant to feedback training. Brain Topography, 21, 185–192.

Salzman, C. D., & Fusi, S. (2010). Emotion, cognition, and mental state representation in amygdala and prefrontal cortex. Annual Review of Neuroscience, 33, 173–202.

Santangelo, V., & Macaluso, E. (2012). Spatial attention and audiovisual processing. In B. E. Stein (Ed.), The new handbook of multisensory processing (pp. 359–370). Cambridge, MA: MIT Press.

Sato, Y., Toyoizumi, T., & Aihara, K. (2007). Bayesian inference explains perception of unity and ventriloquism aftereffect: Identification of common sources of audiovisual stimuli. Neural Computation, 19, 3335–3355.

Schechtman, E., Laufer, O., & Paz, R. (2010). Negative valence widens generalization of learning. Journal of Neuroscience, 30, 10460–10464.

Schmidt, R. A., & Lee, T. D. (2011). Motor control and learning: A behavioral emphasis (5th ed.). Champaign, IL: Human Kinetics.

Schultz, W. (2000). Multiple reward signals in the brain. Nature Reviews Neuroscience, 1, 199–207.

Schultz, W. (2006). Behavioral theories and the neurophysiology of reward. Annual Review of Psychology, 57, 87–115.

Seitz, A. R., Kim, D., & Watanabe, T. (2009). Rewards evoke learning of unconsciously processed visual stimuli in adult humans. Neuron, 61, 700–707.

Serences, J. T. (2008). Value-based modulations in human visual cortex. Neuron, 60, 1169–1181.

Shams, L., & Beierholm, U. R. (2010). Causal inference in perception. Trends in Cognitive Sciences, 14, 426–432. doi:10.1016/j.tics.2010.07.001

Small, D. M., Gitelman, D., Simmons, K., Bloise, S. M., Parrish, T., & Mesulam, M.-M. (2005). Monetary incentives enhance processing in brain regions mediating top-down control of attention. Cerebral Cortex, 15, 1855–1865.

Stein, B. E., & Stanford, T. R. (2008). Multisensory integration: Current issues from the perspective of the single neuron. Nature Reviews Neuroscience, 9, 255–266.

Stekelenburg, J. J., Vroomen, J., & de Gelder, B. (2004). Illusory sound shifts induced by the ventriloquist illusion evoke the mismatch negativity. Neuroscience Letters, 357, 163–166.

Talsma, D., Senkowski, D., Soto-Faraco, S., & Woldorff, M. G. (2010). The multifaceted interplay between attention and multisensory integration. Trends in Cognitive Sciences, 14, 400–410. doi:10.1016/j.tics.2010.06.008

Tsushima, Y., Sasaki, Y., & Watanabe, T. (2006). Greater disruption due to failure of inhibitory control on an ambiguous distractor. Science, 314, 1786–1788.

Vroomen, J., Bertelson, P., & de Gelder, B. (2001). The ventriloquist effect does not depend on the direction of automatic visual attention. Perception & Psychophysics, 63, 651–659. doi:10.3758/BF03194427

Vuilleumier, P. (2005). How brains beware: Neural mechanisms of emotional attention. Trends in Cognitive Sciences, 9, 585–594. doi:10.1016/j.tics.2005.10.011

Watanabe, M. (2007). Role of anticipated reward in cognitive behavioral control. Current Opinion in Neurobiology, 17, 213–219.

Weissman, D. H., Warner, L. M., & Woldorff, M. G. (2004). The neural mechanisms for minimizing cross-modal distraction. Journal of Neuroscience, 24, 10941–10949.

Welch, R. B. (1999). Meaning, attention, and the “unity assumption” in the intersensory bias of spatial and temporal perceptions. In G. Aschersleben, T. Bachmann, & J. Müsseler (Eds.), Advances in psychology: Vol. 129. Cognitive contributions to the perception of spatial and temporal events (pp. 371–387). Amsterdam: Elsevier.

Welch, R. B., & Warren, D. H. (1980). Immediate perceptual response to intersensory discrepancy. Psychological Bulletin, 88, 638–667. doi:10.1037/0033-2909.88.3.638

Witten, I. B., & Knudsen, E. I. (2005). Why seeing is believing: Merging auditory and visual worlds. Neuron, 48, 489–496.

Zimmer, U., Roberts, K. C., Harshbarger, T. B., & Woldorff, M. G. (2010). Multisensory conflict modulates the spread of visual attention across a multisensory object. NeuroImage, 52, 606–616.

Author note

This research was supported by a grant from the Deutsche Forschungsgemeinschaft (GK 1247/1). P.B. and M.M. contributed equally to this study.

About this article

Cite this article

Bruns, P., Maiworm, M. & Röder, B. Reward expectation influences audiovisual spatial integration. Atten Percept Psychophys 76, 1815–1827 (2014). https://doi.org/10.3758/s13414-014-0699-y

DOI: https://doi.org/10.3758/s13414-014-0699-y