SRI-EEG: State-Based Recurrent Imputation for EEG Artifact Correction

- Department of Computer Science, University of California, Santa Barbara, Santa Barbara, CA, United States

Electroencephalogram (EEG) signals are often used as an input modality for Brain Computer Interfaces (BCIs). While EEG signals can be beneficial for numerous types of interaction scenarios in the real world, high levels of noise limit their usage to strictly noise-controlled environments such as a research laboratory. Even in a controlled environment, EEG is susceptible to noise, particularly from user motion, making it highly challenging to use EEG, and consequently BCI, as a ubiquitous user interaction modality. In this work, we address the EEG noise/artifact correction problem. Our goal is to detect physiological artifacts in EEG signals and automatically replace the detected artifacts with imputed values, enabling robust EEG sensing with significantly less manual effort than is usual. We present a novel EEG state-based imputation model built upon a recurrent neural network, which we call SRI-EEG, and evaluate the proposed method on three publicly available EEG datasets. From quantitative and qualitative comparisons with six conventional and neural network based approaches, we demonstrate that our method achieves comparable performance to the state-of-the-art methods on the EEG artifact correction task.

1. Introduction

Electroencephalography (EEG) is a non-invasive and widely-adopted approach to capture surface electrical activity in the human brain with electrodes placed on the scalp. EEG data has been widely used in domains including neuroscience, cognitive science, cognitive psychology, and mental health (Henry, 2006). One line of research has explored EEG as a potential interaction method, e.g., brain computer interfaces (BCIs). Specifically, by extracting context or intention from EEG data, hands-free BCI applications have been tested. Those applications include playing games (van de Laar et al., 2013), controlling a mouse cursor (Aydemir and Kayikcioglu, 2014), controlling robotic arms (Latif et al., 2017), managing smart home appliances (Anindya et al., 2016), and using smartphones (Kumar et al., 2017; Rashid et al., 2018). EEG has also been used as an accessible input modality when traditional input methods are not a feasible option. For example, brain-to-text communication (Willett et al., 2021) and hands-free wheelchair control (Singla et al., 2014) have enabled paralyzed or disabled users to communicate and move without assistance. Moreover, there is a growing number of EEG-enabled BCI devices for consumers. Galea1, Emotiv2, Advanced Brain Monitoring3, and Muse4 are some representative devices that allow integration of various brain signals into a single headset for use in daily life and virtual reality applications. Research-grade EEG caps such as those developed by OpenBCI5 are being used to support both lab-based researchers and biosensing enthusiasts. In addition, less bulky devices, such as the InEar BioFeed Controller (Matthies, 2013) or the Kokoon EEG-based sleep headphones6, are part of a new set of devices called hearables designed to track neural and physiological function of a user over long periods of time (Goverdovsky et al., 2017).

Although EEG-aided BCIs have seen significant interest and development since the 1970s, an open and challenging research question is how to build robust user experiences under unconstrained (i.e., general and everyday use) conditions, given that EEG signals are easily contaminated by artifacts from users and their environment (Aricò et al., 2018). To deal with artifacts, the recorded EEG signals usually need to be heavily processed by sophisticated software, proprietary algorithms, or manual inspection before use. For example, independent component analysis (ICA) is a widely used approach that plays an important role in the EEG pre-processing phase for artifact removal. Though ICA variants and other advanced methods (e.g., canonical correlation analysis, wavelet transform algorithm, empirical-mode decomposition) have been introduced over the years, the EEG artifact correction procedure still requires manual inspection of the signal data to pick out noise. Furthermore, random and irregularly-occurring artifacts make signal correction increasingly challenging. The complex, time consuming, and labor intensive pre-processing requirements thus limit the robustness and generalization of EEG supported BCIs for many consumer use cases, and hinder the development of ubiquitous EEG-based applications. Therefore, developing an automatic EEG artifact correction approach that can significantly reduce manual tagging time and expense is imperative to making BCI broadly useful as a general purpose input method.

Automated artifact correction methods using deep-learning-based signal processing have achieved state-of-the-art performance on a variety of time series data. One line of research has explored the use of recurrent neural networks (RNNs) for time series data imputation to predict noisy or missing portions from the remaining segments of data. A large set of relevant works [e.g., GRU-D (Che et al., 2018a), MR-HDMM (Che et al., 2018b), MRNN (Yoon et al., 2017), GAIN (Yoon et al., 2018), and BRITS (Cao et al., 2018)] have shown that RNNs can effectively encode the temporal relationships in time series data and are, thus, able to achieve satisfactory performance on the data imputation task. Additionally, transformer-based neural networks have achieved impressive performance in various research domains including natural language processing and computer vision. Motivated by their success, recent work has started to tackle time series data in a sequence-to-sequence manner by applying the self-attention mechanism common in transformer-based models. For example, Zerveas et al. (2021) and Tran et al. (2021) present two representative works to adapt transformer-based encoders on the classification, regression and imputation problems in time series data and achieve superior performance.

While EEG is a type of time series data, the automatic imputation designed for correcting artifacts, caused by EEG's susceptibility to various types of noise during data collection, remains a relatively unexplored and challenging area. To address this challenge and take advantage of recent success of deep learning methods for time series data imputation, we propose a state-based recurrent neural network (RNN) for EEG artifact correction (SRI-EEG) in an automatic manner. Our network is built upon an RNN as its backbone structure. It takes into account the state information encoded in EEG signal representing features of stimuli applied during EEG data recording. Added to the state information is a spatial decay matrix to model EEG channel dependencies. We evaluate the performance of our proposed network on three publicly available datasets: Bike (Bullock et al., 2015), Kaggle (Margaux et al., 2012), and SMR (Tangermann et al., 2012) (Section 6).

In summary, our main contributions include: (1) an automatic imputation method to correct irregularly-occurring artifacts in EEG data leading to significantly reduced manual effort that could encourage broader usage of EEG based interaction modalities; (2) a novel bidirectional long short-term memory (LSTM) model for imputing artifacts in EEG signals. This approach captures the temporal and spatial dependencies of recorded data, and leverages EEG state features to enable automatic artifact correction through data imputation; (3) results from a qualitative and quantitative evaluation of SRI-EEG on three publicly available EEG datasets. Experimental results show that our algorithm achieves state-of-the-art performance on EEG imputation.

2. Related Work

2.1. Time Series Imputation

Time series data imputation is defined as replacing data gaps with predicted values computed from the remaining data. Simple methods replace the missing data with the mean or median of non-empty values, or the last observed value. Such methods offer a fast and easy way to impute missing portions from known data. However, these approaches are based on simple statistical rules and can easily introduce large errors, e.g., imputing long-range missing segments from limited known data.
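The simple rules above can be sketched in a few lines. This is a minimal illustration on a made-up series, using NumPy; the function names `impute_mean` and `impute_last_observed` are hypothetical and not taken from any cited work.

```python
import numpy as np

def impute_mean(x):
    """Replace NaNs in a 1-D series with the mean of the observed values."""
    x = np.asarray(x, dtype=float)
    out = x.copy()
    out[np.isnan(out)] = np.nanmean(x)
    return out

def impute_last_observed(x):
    """Carry the last observed value forward; leading NaNs stay NaN."""
    out = np.asarray(x, dtype=float).copy()
    for t in range(1, len(out)):
        if np.isnan(out[t]):
            out[t] = out[t - 1]
    return out

# A long gap between two observed endpoints (made-up values).
series = np.array([1.0, np.nan, np.nan, np.nan, 5.0])
mean_filled = impute_mean(series)           # every gap sample gets 3.0
locf_filled = impute_last_observed(series)  # every gap sample gets 1.0
```

Both rules fill the whole gap with a single constant, which is why errors can grow when a long segment is missing and the underlying signal changes within it.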

Non-deep-learning-based techniques have been commonly adopted as they outperform the aforementioned simple imputation methods and do not demand dense computation. K-nearest-neighbors (KNN) based imputation (Zhang and Zhou, 2007) utilizes the statistical dependency in neighboring data, and imputes missing parts using the mean of the K nearest neighbors. Factorization-based techniques (Friedman et al., 2001) decompose the non-missing signal into basis vectors to approximate values at missing points. Adaptations of factorization-based algorithms, such as multivariate imputation by chained equations (MICE) (Azur et al., 2011) and SoftImpute (Mazumder et al., 2010), have also been explored with varying levels of success. However, one of the main limitations of these approaches is the inability to capture long-term temporal dependencies in time series data.

Recent advancements in deep learning have shown desirable capability to encode long-range temporal correlations and have achieved state-of-the-art imputation performance on time series datasets (Moritz and Bartz-Beielstein, 2017; Luo et al., 2018; Miller et al., 2018; Yuan et al., 2018; Suo et al., 2019; Zhang and Yin, 2019; Tang et al., 2020; Miao et al., 2021; Zerveas et al., 2021). Among these works, BRITS (Cao et al., 2018) and MRNN (Yoon et al., 2017) use bidirectional RNNs, which impute missing values based on hidden states updated in both the forward and backward directions. These two approaches have achieved state-of-the-art performance on one of the most popular multivariate time series datasets, PhysioNet Challenge 2012 (Goldberger et al., 2000). MRNN treats the values to be imputed as constants and does not encode correlations between the missing segments. BRITS does not impose strong assumptions on the imputation setup, such as linear dynamics in the hidden states, and enables imputing correlated missing segments.

Motivated by the successful adoption of bidirectional RNNs on the time series imputation problem, we build our network's backbone using a bidirectional LSTM. The LSTM processes time series data in both the forward and backward directions with two separate sequences of hidden states. The two sequences are then averaged to predict imputed values. Such a backbone network has mostly been used for general time series data in prior work (e.g., air quality, human activity, and health care datasets). However, it fails to impute EEG signals containing a large amount of irregularly occurring artifacts. To tackle this limitation, we introduce encoded EEG state constraints into the network and also take as input the spatial correlations between signals collected by all the electrodes in an EEG cap. Through our evaluation, reported in Section 6, we demonstrate the effectiveness of our approach targeted at the EEG artifact imputation problem.

2.2. EEG Artifact Correction

Artifacts in EEG are undesired noise mainly originating from two types of sources: (1) extrinsic artifacts include environment noise and experimental errors, and (2) intrinsic artifacts include physiological artifacts (Jiang et al., 2019) such as body motions or eye movements. Extrinsic artifacts can be eliminated by filtering recorded signal or following proper experimental procedures. However, preventing intrinsic artifacts is more challenging and their removal requires particular algorithms (Anderer et al., 1999). In this work, we focus on eliminating physiological artifacts stemming from the human body, specifically body motions, eye movements, and cardiac activities. Therefore, in this section we focus on work most closely related to handling physiological artifacts in EEG data.

Blind source separation (BSS) approaches, including principal component analysis (PCA) and independent component analysis (ICA), are widely used for EEG artifact removal. PCA (Berg and Scherg, 1991) is a widely used BSS technique. It decomposes EEG signals into uncorrelated variables, called principal components (PCs), through an orthogonal transformation. The PCs representing artifacts are removed to denoise the signal, while the remaining PCs are used to reconstruct clean EEG data. PCA typically fails to unravel signal dependencies and can mistakenly discard non-artifact signal (Casarotto et al., 2004). ICA (Somers and Bertrand, 2016) is a flexible BSS method. It assumes that collected EEG signals are linear mixtures of artifacts and non-artifacts. The first step involves decomposing recorded signals into independent components (ICs). Sejnowski (1996) introduces artifactual and non-artifactual ICs as independent ICs, such that obvious artifactual ICs can be segregated easily. By eliminating ICs representing artifacts, ICA reconstructs clean signal with the remaining ICs. Building upon the concept of ICA, recent research has studied EEG artifact removal with various ICA variants and demonstrated their effectiveness (Flexer et al., 2005; Bian et al., 2006; Li et al., 2006; Ting et al., 2006; Zhou and Gotman, 2009; Winkler et al., 2011; Rejer and Górski, 2015, 2019; Dimigen, 2020; Klug and Gramann, 2020). Although a large number of ICA variants have been developed over the last twenty years to control EEG artifacts in different scenarios, ICA inherently imposes assumptions on the recorded signal, and these assumptions are not always satisfied. For example, ICA can estimate non-Gaussian signals (one of the premises of ICA), but it is usually unknown whether recorded signals are Gaussian or non-Gaussian (Jiang et al., 2019).

Though ICA is an effective standard for EEG artifact correction in general, using ICA alone still requires careful manual effort and time to inspect the source signal, which may not have satisfactory properties to fit ICA. We use ICA to detect artifacts followed by automatically imputing them using our proposed method. Combining ICA with the proposed automatic imputation approach achieves improved performance compared to using ICA alone as shown in Section 6.1.

2.3. Brain Computer Interfaces and Brain Signals

Depending on the features of interest, EEG based BCIs can be categorized into two classes (Lotte, 2015): (1) event-related potential (ERP) based BCI, and (2) oscillatory activity based BCI. ERP BCIs can detect high-amplitude and low-frequency brain responses to known stimuli. They usually contain well-stereotyped waveforms and are robust across subjects (Fazel-Rezai et al., 2012). The robustness of ERPs thus enables efficient learning of ERP features using machine learning based algorithms. For example, the P300 response, one type of ERP, is evoked by visual stimuli, and forms identifiable electrical waveforms. Other types of ERPs, such as motor imagery, also induce recognizable waveforms. Prior deep-learning-based methods have deployed various classifiers to distinguish between different ERP types (Lawhern et al., 2018; Santamaría-Vázquez et al., 2019, 2020; Wen et al., 2019; Zhao et al., 2020; Zang et al., 2021). Oscillatory BCIs can detect the signal power of EEG frequency bands. Due to low signal-to-noise ratio and great variations across subjects (Pfurtscheller and Neuper, 2001), oscillatory BCIs are typically challenging to model using machine learning models. An example of oscillatory BCIs is the sensorimotor rhythm (SMR). Subjects produce lower SMR amplitudes when the corresponding sensorimotor regions are active, and higher amplitudes otherwise (Arroyo et al., 1993). SMR data is greatly variable across and even within subjects, and thus demands subject training for data collection, and longer signal calibration sessions for practical use (Krusienski et al., 2008).

To assess the generality and robustness of the proposed EEG artifact imputation method under different EEG paradigms, we evaluate SRI-EEG on two ERP datasets: Bike (Bullock et al., 2015) and Kaggle (Margaux et al., 2012), and one oscillatory dataset: SMR (Tangermann et al., 2012).

3. Method

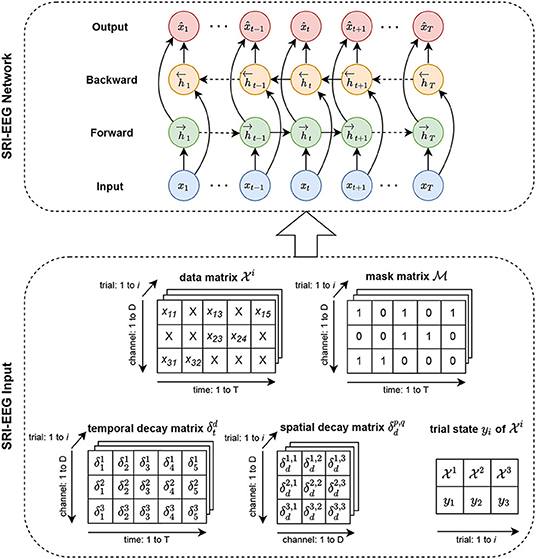

Similar to the standard bidirectional LSTM for time series data imputation, our network takes as input a data matrix, a mask matrix and a time gap matrix processed from raw data. However, unlike using a bidirectional LSTM designed for general time series data, we also take into account a spatial gap matrix for modeling EEG channel dependency. Added to that is a state vector of encoded EEG event features to handle irregularly occurring artifacts that form a typical but challenging problem for EEG based BCI modalities. Section 3.1 introduces the preliminaries covering notation and definitions. Section 3.2 presents the backbone bidirectional LSTM for general time series imputation and discusses its limitations. Sections 3.3 and 3.4 present our design based on the RNN backbone network to specifically tackle the EEG imputation problem. Section 3.5 discusses the learning objectives. Figure 1 shows an overview of the SRI-EEG architecture.

Figure 1. SRI-EEG input and network structure. In the SRI-EEG Input, based on the EEG data matrix, we compute the mask matrix and the temporal decay matrix. Additionally, we generate a spatial decay matrix based on channel locations in the EEG cap. A trial state matrix yi is also calculated for each EEG trial. All the matrices computed for a single trial i are passed as input to the SRI-EEG Network. We adopt a bidirectional LSTM as the backbone network where the hidden states are updated in both the forward and backward directions. Once the imputed values are obtained, mean squared error is computed, and back-propagated for subsequent iterations.

3.1. Preliminary

We denote the raw EEG data as a two-dimensional matrix where the first dimension represents time: $X_i = \{x_1, x_2, \ldots, x_T\}$ is a sequence of data with T timestamp observations collected at trial i. The t-th observation $x_t$ consists of values from D EEG channels at timestamp t. Note that the time range 1 to T and the channels 1 to D in this section are from any single trial of data, where trial i is defined as a segment/epoch of interest during EEG data collection.

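The time difference between successive valid (non-artifact) observations varies due to artifacts of random lengths. To model the changing time gaps between neighboring valid observations, we define a temporal decay matrix similar to Cao et al. (2018). Its entry at time t and channel d is zero at the first timestamp. Afterwards, it equals the time elapsed since the previous timestamp if the previous observation in channel d is valid, and otherwise that elapsed time plus the previous entry, so that gaps accumulate across consecutive artifacts.

Here, a binary mask matrix along the time domain for each channel d∈D masks out the artifacts in the data matrices. Specifically, a mask entry is 1 if the observation at time t in channel d is valid, and 0 if it is an artifact.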
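The mask and time-gap definitions can be sketched as follows. This is a minimal illustration that assumes the recurrence of Cao et al. (2018), a uniform sampling interval `dt`, and artifacts already marked as NaN; the function name `mask_and_time_gaps` is hypothetical.

```python
import numpy as np

def mask_and_time_gaps(x, dt=1.0):
    """x: (T, D) array with NaN at artifact samples; dt: sampling interval.

    Returns mask (1 = valid, 0 = artifact) and gaps, where gaps[t, d] is the
    time elapsed at step t since the last valid sample in channel d.
    """
    x = np.asarray(x, dtype=float)
    mask = (~np.isnan(x)).astype(float)
    gaps = np.zeros_like(x)
    for t in range(1, x.shape[0]):
        # Restart the gap after a valid sample, otherwise keep accumulating.
        gaps[t] = dt + (1.0 - mask[t - 1]) * gaps[t - 1]
    return mask, gaps

# Toy trial with 4 timestamps and 2 channels (made-up values).
trial = np.array([[0.1, 0.5],
                  [np.nan, 0.4],
                  [np.nan, np.nan],
                  [0.3, 0.2]])
mask, gaps = mask_and_time_gaps(trial)
# gaps is [[0, 0], [1, 1], [2, 1], [3, 2]]
```

In the first channel the gap grows to 3 by the last timestamp because the two preceding samples are artifacts, while the second channel accumulates only across its single artifact.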

Additionally, we denote by yi the EEG state vector representing the state of the i-th trial. It is a learned feature vector using EEGNet (Lawhern et al., 2018), one of the state-of-the-art EEG classifiers. EEGNet allows us to extract a state feature vector to represent stimuli applied to subjects at trial i (Section 3.4). The state feature vector is fed into our network as supervised information together with the data matrix, mask matrix, temporal decay matrix, and spatial decay matrix (defined in Section 3.3). Figure 1 depicts all the input matrices.

3.2. Bidirectional LSTM for Time Series Imputation

In this section, we briefly introduce unidirectional LSTMs, and our backbone network which consists of a bidirectional LSTM with input mask and temporal decay matrices. Following the background information, we discuss the backbone structure's limitations and introduce our improvements.

3.2.1. Unidirectional LSTM

In a unidirectional LSTM, the hidden layer receives an input vector $x_t$, and predicts an output vector $\hat{x}_t$. At each timestamp t, the hidden layer maintains a hidden state $h_t$, and updates the hidden state based on the current input $x_t$ and the previous hidden state $h_{t-1}$. We formulate this procedure as:

$$h_t = \sigma_h(W_{xh} x_t + W_{hh} h_{t-1} + b_h)$$

where $\sigma_h$ is the hidden layer's activation function, $W_{xh}$ is the weight matrix from the input layer to the hidden layer, $W_{hh}$ is the weight matrix between two temporally consecutive hidden states, and $b_h$ is the hidden layer's bias vector. Consequently, we compute the output vector as:

$$\hat{x}_t = \sigma_x(W_x h_t + b_x)$$

where σx is the output layer's activation function, Wx is the weight matrix from the hidden layer to the output layer, and bx is the output layer's bias vector.

3.2.2. Unidirectional LSTM With Input Mask

The unidirectional LSTM takes continuous input without data gaps to update hidden states progressively. However, for the imputation task, where the input data matrix consists of both artifacts and non-artifacts, the raw data cannot be fed into the network to update the hidden states. The artifacts will induce distracting features and thus introduce undetermined bias in the imputation process. To resolve this issue, we leverage a mask matrix to filter out artifacts and pass only the valid data into the network, similar to Cao et al. (2018) and Yoon et al. (2018). Accordingly, the input matrix is computed by:

$$x_t \leftarrow x_t \odot m_t$$

where $m_t$ is the mask vector at timestamp t and ⊙ is element-wise multiplication.

3.2.3. Unidirectional LSTM With Input Mask and Temporal Decay

Although the hidden states of LSTMs enable the modeling of decayed influence along the time axis (i.e., distant values have less impact than close ones), the length of effective time window is automatically learned and thus not easily controllable by hyper-parameter tuning. To address this limitation, we utilize the defined temporal decay matrix to control the temporal influence on hidden state update, similar to Cao et al. (2018). Specifically, the temporal decay factor is computed by:
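A hedged reconstruction, assuming the exponential decay form of Cao et al. (2018) and writing the vector of time gaps at timestamp t as $\tau_t$ (a symbol introduced here for illustration). Under this form every entry of the factor lies in (0, 1]:

$$\beta_t = \exp\{-\max(0,\; W_\beta \tau_t + b_\beta)\}$$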

where $W_\beta$ is the time gap's weight matrix, and $b_\beta$ is the bias vector. Consequently, the hidden state is updated by applying an element-wise multiplication between the previous hidden state (at timestamp t−1) and the current temporal decay factor (at timestamp t):

$$h_{t-1} \leftarrow h_{t-1} \odot \beta_t$$

where βt represents the temporal decay factor at time t from all the D channels.

3.2.4. Bidirectional LSTM With Input Mask and Temporal Decay

We denote by $\{h_t^f\}$ the hidden state sequence of the forward-directional LSTM, and we iteratively update the sequence based on inputs from timestamps 1 to T. Conversely, the backward-directional LSTM updates the hidden state sequence $\{h_t^b\}$ by taking in reversed inputs from timestamps T to 1. Combining both the forward and backward hidden states, we compute the estimated values by:

$$\hat{x}_t = \sigma\left(W_x^f h_t^f + b_x^f,\; W_x^b h_t^b + b_x^b\right)$$

where σ is an average function to combine the forward and backward hidden states. $W_x^f$ and $b_x^f$ are the forward-directional LSTM's weight matrix and bias vector from the hidden layer to the output layer, respectively. Similarly, $W_x^b$ and $b_x^b$ are those of the backward-directional LSTM.

3.2.5. Our Improvements

Introducing the mask matrix and temporal decay into the bidirectional LSTM proposed by prior work forms an effective backbone network to impute multiple time series datasets as evaluated by Yoon et al. (2017) and Cao et al. (2018). Nevertheless, EEG is a special type of time series data with unique characteristics such as spatial dependency between electrodes and state-related global waveforms. For example, when a region of the human brain is stimulated by a certain event, the imputation is likely to benefit from assigning higher weights to the EEG signals recorded on the scalp above that active brain region, e.g., all electrodes above the motor cortex. Additionally, the imputation performance can be potentially improved by capturing EEG waveform patterns from EEG sequences in a different trial that are temporally similar, i.e., in the same EEG state. Such EEG state correlation introduces favorable inductive biases. We take into account the aforementioned EEG properties and design the imputation algorithm by exploring the channel/electrode spatial correlations (Section 3.3), including EEG state modeling (Section 3.4).

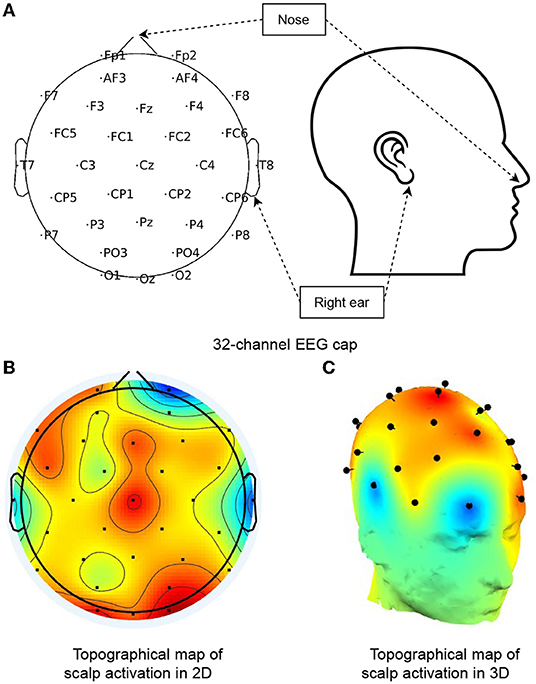

3.3. Learning the Spatial Correlations of EEG Channels

As shown in Figure 2, the 32 electrodes are positioned on a cap in a particular layout (the International 10-20 system). The electrode topology presents varying proximity between different pairs of electrodes. For example, when certain brain regions are active, triggered by some stimuli (red regions in Figure 2), all the electrodes within that region collect related signals. These signals thus become important neighboring values for imputing contaminated channels affected by artifacts. To model the spatial correlations between the electrodes, which prior work has not extensively studied, we propose a novel spatial decay matrix. It consists of the Euclidean distances between all EEG channel pairs p and q. We position the channels in a 3D Euclidean coordinate system, and denote the coordinates of any two channels as p = (px, py, pz) and q = (qx, qy, qz), respectively. Accordingly, the distance between any EEG electrode pair is calculated by:

$$\lVert p - q \rVert_2 = \sqrt{(p_x - q_x)^2 + (p_y - q_y)^2 + (p_z - q_z)^2}$$

Similar to the temporal decay factor βt, we introduce a spatial decay factor ηd to model the decay dependency between EEG channel pairs as their distance increases:
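Assuming the same exponential form as the temporal decay factor (an assumption made here by analogy with Cao et al., 2018), this can be sketched as:

$$\eta_d = \exp\{-\max(0,\; W_\eta \delta_d + b_\eta)\}$$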

where δd denotes the distances between any channel d and all the remaining channels, Wη is the weight matrix, and bη is the bias vector.
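The distance matrix and a distance-based decay can be sketched numerically. This is an illustration only: the electrode coordinates are made up rather than taken from a real montage, the exponential decay form is assumed by analogy with Cao et al. (2018), and `W_eta` and `b_eta` are fixed placeholders for weights that would be learned.

```python
import numpy as np

def pairwise_distances(coords):
    """coords: (D, 3) electrode positions -> (D, D) Euclidean distance matrix."""
    diff = coords[:, None, :] - coords[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))

# Hypothetical 3-D positions for three channels (not a real 10-20 layout).
coords = np.array([[0.0, 0.0, 0.0],
                   [3.0, 4.0, 0.0],
                   [0.0, 0.0, 1.0]])
dist = pairwise_distances(coords)      # row d holds distances from channel d

W_eta = 0.5 * np.eye(3)                # placeholder for learned weights
b_eta = np.zeros(3)                    # placeholder for learned bias
# Assumed decay form: exp(-max(0, W * distance + b)), one row per channel.
eta = np.exp(-np.maximum(0.0, dist @ W_eta.T + b_eta))
```

With these placeholder weights, a channel has decay 1 with respect to itself, and the factor shrinks toward 0 as the distance to another channel grows, so nearby electrodes contribute more than distant ones.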

Figure 2. Topological map of a 32-channel EEG cap. (A) Shows the channel distribution of a 32-electrode EEG cap (as used in the Bike dataset) with channel names, nose, and right ear annotated. (B,C) Present an example of cortex activity in 2D and 3D topographical maps, respectively. Red regions indicate active areas and blue regions inactive ones; the gradual color changes are computed by linear interpolation between channel signals.

Combining the temporal and spatial decay factors with the weight hyper-parameter λ∈[0, 1], we formulate the temporal-spatial decay matrix as:
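One reading consistent with a single weight λ∈[0, 1] is a convex combination of the two factors. The symbol $\zeta_t$, the use of $\eta$ for the spatial decay factors of all channels, and the choice of which factor λ multiplies are all assumptions made here for illustration:

$$\zeta_t = \lambda\, \beta_t + (1 - \lambda)\, \eta$$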

We update the bidirectional LSTM's hidden states by element-wise multiplying the temporal-spatial decay matrix and the last hidden state (forward and backward):
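By analogy with the temporal-only update in Section 3.2.3, and writing the temporal-spatial decay at timestamp t as $\zeta_t$ and the last hidden state in a given direction as $h_{\mathrm{prev}}$ (both symbols are assumptions here), the update applied separately in the forward and backward directions can be sketched as:

$$h_{\mathrm{prev}} \leftarrow h_{\mathrm{prev}} \odot \zeta_t$$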

3.4. Modeling the State of EEG Trials

Studies on EEG classification (Lotte et al., 2007; Fazel-Rezai et al., 2012; Lawhern et al., 2018) have demonstrated that different stimuli applied to subjects during each trial can lead to different features in the collected EEG data. These features can be differentiated to enable classifying the EEG signals, e.g., classifying ERPs. Motivated by these findings and to take advantage of the recognizable features of EEG waveforms, we utilize the state features to provide supervised information on the EEG signal imputation task. Specifically, we obtain the features learned from the state-of-the-art EEG classifier EEGNet (Lawhern et al., 2018). We subsequently transform the features into a 1-dimensional vector (state vector) to serve as supervised information for our network's input. More formally, we denote the state of EEG trial i as yi, and concatenate yi with the masked data matrix to pass them together into the network.

In summary, the update of hidden states considering temporal-spatial decay and the EEG state is computed by:
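A hedged reconstruction that combines the earlier pieces, assuming the plain recurrent form of Section 3.2.1, with $m_t$ for the mask vector and $\zeta_t$ for the temporal-spatial decay (both symbols are assumptions here):

$$h_t = \sigma_h\big(W_{xh}\,[(x_t \odot m_t) \oplus y_i] + W_{hh}\,(h_{t-1} \odot \zeta_t) + b_h\big)$$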

where ⊕ signifies concatenation.
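A single update step can also be sketched numerically. This is not the SRI-EEG implementation: it uses a plain tanh recurrent cell instead of full LSTM gates, random placeholder weights, and arbitrary sizes, and the function name `decayed_step` is hypothetical. It only shows how masking, state concatenation, and decay fit together.

```python
import numpy as np

def decayed_step(x_t, m_t, y_i, h_prev, decay_t, W_xh, W_hh, b_h):
    """One hidden-state update with masked input, state vector, and decay."""
    # Masked data concatenated with the trial's state vector.
    inp = np.concatenate([x_t * m_t, y_i])
    # The previous hidden state is scaled by the decay before the recurrence.
    return np.tanh(W_xh @ inp + W_hh @ (h_prev * decay_t) + b_h)

rng = np.random.default_rng(0)
D, S, H = 4, 3, 5                       # channels, state size, hidden size (arbitrary)
x_t = rng.normal(size=D)                # artifact entries must be finite (e.g. zero-filled),
m_t = np.array([1.0, 0.0, 1.0, 1.0])    # because NaN * 0 is still NaN; channel 1 is masked
y_i = rng.normal(size=S)
h_prev = rng.normal(size=H)
decay_t = np.full(H, 0.9)               # placeholder decay values
W_xh = rng.normal(size=(H, D + S))
W_hh = rng.normal(size=(H, H))
b_h = np.zeros(H)

h_t = decayed_step(x_t, m_t, y_i, h_prev, decay_t, W_xh, W_hh, b_h)
```

Because channel 1 is masked, changing its value in `x_t` leaves `h_t` unchanged, which is the intended effect of filtering artifacts out of the input.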

3.5. Learning Objective

We train our model using the Mean Squared Error (MSE) loss in the back-propagation process:

$$\mathcal{L}_{\mathrm{MSE}} = \frac{1}{n}\sum_{j=1}^{n} (x_j - \hat{x}_j)^2$$

where n denotes the total number of observations in each trial, and $x_j$ and $\hat{x}_j$ are the j-th observed and imputed EEG values, respectively. The MSE averages the squared differences between the observed and imputed EEG values along the time domain and across all EEG channels.

4. EEG Dataset

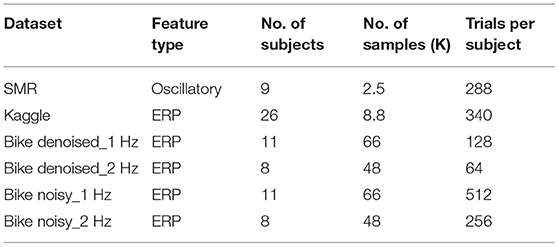

For evaluation, we use three publicly available datasets, namely Bike, Kaggle7, and SMR8. The feature type, the number of subjects and samples, and the number of trials per subject in all three datasets are summarized in Table 1.

4.1. Bike

The Bike dataset was introduced by Bullock et al. (2015) and provides P300 event related potentials (ERPs). Twelve subjects performed two different versions of three-stimuli oddball tasks while seated on a stationary bike. Participants were asked to respond to the target stimuli and ignore the remaining two types of distracting stimuli. The stimuli were presented at different rates: 1 Hz [200 ms stimulus presentation with 800 ms inter-stimulus interval (ISI)], and 2 Hz (200 ms stimulus presentation with 300 ms ISI). Participants completed the two-version tasks at rest (sat on the bike but not pedaling), and during exercise (sat on the bike and pedaling).

We followed the train-test split protocol and pre-processing procedure used in Ding et al. (2019). We used both the 1 and 2 Hz datasets (pedaling or noisy, and not pedaling or denoised). Specifically, the Noisy_1 Hz and Noisy_2 Hz datasets are the “noisy” versions of the Bike dataset since pedaling introduced a large amount of artifacts in the collected EEG signals. Additionally, we analyzed “denoised” versions of the Bike dataset, named Denoised_1 Hz and Denoised_2 Hz, where the subjects were not pedaling during EEG collection. For data pre-processing, we applied a bandpass filter to keep the 1–40 Hz frequency band and lowered the sampling rate from 512 to 128 Hz.

4.2. Kaggle

For the Kaggle dataset (Margaux et al., 2012), 26 participants engaged in a P300 speller task. The system presented a random sequence of flashing letters and numbers, arranged in a 6 × 6 grid, to elicit the P300 responses (Krusienski et al., 2008). The goal of this task was to determine whether the displayed item is the target item by analyzing recorded EEG signals. Following the same pre-processing procedure as the Bike dataset, the EEG signal was band-pass filtered to 1–40 Hz and down-sampled to 128 Hz. The training and testing sets were split as suggested in the official release.

4.3. SMR

We use the BCI Competition IV Dataset 2A (Tangermann et al., 2012) involving nine subjects for oscillatory EEG data analysis. The SMR data consists of four classes of imagined movements of left and right hands, feet and tongue. The EEG data was originally recorded using 22 electrodes, sampled at 250 Hz and bandpass filtered between 0.5 and 100 Hz (Schirrmeister et al., 2017). We re-sampled the data to 128 Hz, and band-pass filtered it to 1–40 Hz to keep consistent pre-processing setups. The training and testing sets were split as in the official release.

5. Experimental Setup

5.1. Evaluation Setup and Metrics

We train our imputation model using the Adam optimizer (Kingma and Ba, 2015) with a learning rate of 0.001 and batch size of 32. The weight λ between temporal and spatial decay factors is set to 0.8 which was determined to be a suitable value through experimentation. We adopt the early stopping strategy by randomly selecting 15% of the training sets to form the validation dataset. Training is stopped when the lowest validation error is obtained. The training weights are saved for subsequent evaluation on the testing sets.
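The validation split and checkpoint selection can be sketched as below. The stopping rule is not specified in detail, so this sketch simply keeps the epoch with the lowest validation error. The trial count, the validation losses, and the function names are made up for illustration.

```python
import numpy as np

def split_train_val(n_trials, val_fraction=0.15, seed=0):
    """Randomly hold out a fraction of trial indices for validation."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_trials)
    n_val = int(round(val_fraction * n_trials))
    return order[n_val:], order[:n_val]

def best_epoch(val_errors):
    """Index of the epoch with the lowest validation error."""
    return int(np.argmin(val_errors))

train_idx, val_idx = split_train_val(200)       # 170 training, 30 validation trials
val_errors = [0.90, 0.61, 0.48, 0.52, 0.55]     # made-up validation losses per epoch
keep = best_epoch(val_errors)                   # weights from this epoch would be saved
```

A practical training loop would usually add a patience parameter so that training halts a few epochs after the validation error stops improving. That detail is an assumption and is not stated in the text.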

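We adopt two metrics for performance evaluation: mean absolute error (MAE) and root mean squared error (RMSE). We define $X_i$ as the observed data matrix, and $\hat{X}_i$ as the corresponding imputed data matrix, with entries $x_j$ and $\hat{x}_j$, respectively. n is the total number of observations in each trial i. Accordingly, the metrics are defined as:

$$\mathrm{MAE} = \frac{1}{n}\sum_{j=1}^{n} \lvert x_j - \hat{x}_j \rvert, \qquad \mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{j=1}^{n} (x_j - \hat{x}_j)^2}$$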
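As a small worked example of the two metrics, using their standard definitions on made-up values (the function names are illustrative):

```python
import numpy as np

def mae(observed, imputed):
    """Mean absolute error over all entries."""
    return float(np.mean(np.abs(observed - imputed)))

def rmse(observed, imputed):
    """Root mean squared error over all entries."""
    return float(np.sqrt(np.mean((observed - imputed) ** 2)))

observed = np.array([1.0, 2.0, 3.0, 4.0])
imputed = np.array([1.0, 2.0, 5.0, 0.0])   # absolute errors: 0, 0, 2, 4

mae_value = mae(observed, imputed)     # (0 + 0 + 2 + 4) / 4 = 1.5
rmse_value = rmse(observed, imputed)   # sqrt((0 + 0 + 4 + 16) / 4) = sqrt(5)
```

RMSE is larger than MAE here because squaring gives the single large error more weight, which is why the two metrics are reported together.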

5.2. Baseline and Comparison Methods

We compare the proposed imputation model with the following non-deep-learning algorithms: Mean, KNN, SoftImpute, and ICA, and with two deep learning algorithms: MRNN and BRITS. The non-deep-learning approaches compute comparatively fast and are widely adopted as baselines in prior studies. The deep-learning methods have achieved great improvements in imputation performance on time series datasets allowing us comparisons with the state-of-the-art.

• Mean: Mean imputation replaces artifacts with the global average along the time domain for each trial.

• KNN9: KNN uses the k-nearest neighbors to find similar samples, and imputes the artifacts with the weighted average of the k neighbors.

• SoftImpute9: SoftImpute is a matrix completion based technique that works by iteratively soft-thresholding singular value decompositions (SVD).

• ICA (see footnote 10): ICA decomposes the original signal into independent components, and then removes the components consisting of artifacts to denoise the signal. ICA is a popular EEG artifact correction approach widely adopted by researchers.

• MRNN (see footnote 11) (Yoon et al., 2017): MRNN is a bidirectional RNN based algorithm, and treats the imputed values as constants without modeling correlations between channels.

• BRITS (see footnote 11) (Cao et al., 2018): BRITS is an extension of MRNN. It considers the correlations between different imputed values.
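To make two of the conventional baselines concrete, the sketch below implements per-trial mean imputation and a simplified SoftImpute loop in NumPy. It is an illustration under stated assumptions, not the Fancy Impute implementation used in the experiments: a trial is assumed to be an array of shape (channels, time) with NaN marking artifacts, the mean is assumed to be taken per channel, and the names `mean_impute`, `soft_impute`, `shrinkage`, and `n_iters` are hypothetical.

```python
import numpy as np

def mean_impute(trial):
    """Fill NaNs in each channel with that channel's mean over time."""
    trial = np.asarray(trial, dtype=float)
    channel_means = np.nanmean(trial, axis=1, keepdims=True)
    return np.where(np.isnan(trial), channel_means, trial)

def soft_impute(trial, shrinkage=1.0, n_iters=50):
    """Simplified SoftImpute: repeated SVD with soft-thresholded singular values."""
    trial = np.asarray(trial, dtype=float)
    observed = ~np.isnan(trial)
    filled = np.where(observed, trial, 0.0)  # start with zeros in the gaps
    for _ in range(n_iters):
        u, s, vt = np.linalg.svd(filled, full_matrices=False)
        s = np.maximum(s - shrinkage, 0.0)   # soft-threshold the singular values
        low_rank = (u * s) @ vt              # low-rank reconstruction
        filled = np.where(observed, trial, low_rank)  # observed entries stay fixed
    return filled
```

Neither baseline uses temporal order: shuffling the time axis of a trial would not change the imputed values, which is one reason the recurrent methods are expected to do better on EEG.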

5.3. Dataset Preparation

We evaluate our proposed method on two setups: (1) we detect artifacts in EEG with ICA and manual inspection, and impute the detected artifacts using our proposed imputation method, and (2) we synthesize EEG artifacts by removing varying percentages of the recorded EEG data, and impute the synthesized artifacts using our proposed imputation method.

5.3.1. EEG Artifact Detection

We extract when and where the artifacts occur during EEG recording via ICA (Winkler et al., 2011). Additionally, we detect obvious missing values caused by bad channels using functions in EEGLAB (Delorme and Makeig, 2004) by manually investigating kurtosis in the channel and looking at how well each channel correlates with the surrounding channels.

Although this setup needs manual effort to detect artifacts in the recorded EEG signal, it lays a practical basis to evaluate our network's effectiveness. This setup also incurs complexity in extracted artifacts, such as different artifact quantities across trials and different artifact patterns caused by various sources of noise.
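The two channel statistics inspected during bad-channel detection (kurtosis and correlation with other channels) can be computed as in the sketch below. This is only an illustration: the detection in this work was done manually with EEGLAB, whereas the code uses plain NumPy, correlates each channel with all other channels rather than only the surrounding ones, and applies thresholds (`kurtosis_z`, `min_corr`) that are hypothetical values chosen for the example.

```python
import numpy as np

def channel_kurtosis(data):
    """Excess kurtosis of each channel; data has shape (channels, time)."""
    centered = data - data.mean(axis=1, keepdims=True)
    variance = np.mean(centered ** 2, axis=1)
    return np.mean(centered ** 4, axis=1) / variance ** 2 - 3.0

def mean_abs_correlation(data):
    """Mean absolute correlation of each channel with every other channel."""
    corr = np.abs(np.corrcoef(data))
    np.fill_diagonal(corr, np.nan)  # ignore self-correlation
    return np.nanmean(corr, axis=1)

def flag_bad_channels(data, kurtosis_z=3.0, min_corr=0.2):
    """Indices of channels with outlying kurtosis or weak correlation."""
    data = np.asarray(data, dtype=float)
    kurt = channel_kurtosis(data)
    z = (kurt - kurt.mean()) / kurt.std()
    corr = mean_abs_correlation(data)
    return np.flatnonzero((np.abs(z) > kurtosis_z) | (corr < min_corr))
```

Flagged channels would still need visual confirmation, since a channel can differ from the others for physiological rather than technical reasons.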

5.3.2. Synthesized EEG Artifacts

We synthesize EEG noise by randomly removing certain portions of the observed data, and replacing the removed parts with NaNs. The synthesized artifact rates in our experiment are: 2.5, 5, 7.5, 10, 12.5, 15, 17.5, 20%. This setup offers controllable artifact rates to compare our approach's performance with other imputation methods at fixed artifact rates. The artifact ratios help to analyze an imputation method's performance on varying artifact rates (studied in Section 6.2).
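A minimal sketch of this masking procedure is given below. It assumes that entries are removed independently and uniformly at random over the (channels, time) array of a trial, which the text does not specify; the names `ARTIFACT_RATES` and `synthesize_artifacts` are hypothetical.

```python
import numpy as np

# Artifact rates used in the experiments, expressed as fractions.
ARTIFACT_RATES = (0.025, 0.05, 0.075, 0.10, 0.125, 0.15, 0.175, 0.20)

def synthesize_artifacts(trial, rate, seed=None):
    """Return a copy of `trial` with a fraction `rate` of entries set to NaN.

    Also returns the boolean mask of removed entries, which serves as the
    ground-truth location of the synthesized artifacts during evaluation.
    """
    rng = np.random.default_rng(seed)
    trial = np.asarray(trial, dtype=float)
    n_remove = int(round(rate * trial.size))
    flat_idx = rng.choice(trial.size, size=n_remove, replace=False)
    mask = np.zeros(trial.size, dtype=bool)
    mask[flat_idx] = True
    mask = mask.reshape(trial.shape)
    corrupted = trial.copy()
    corrupted[mask] = np.nan
    return corrupted, mask
```

Keeping the mask alongside the corrupted trial makes it possible to score an imputation method only on the entries that were removed, because the original values at those positions are known.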

The motivation for using synthesized artifacts is to prevent the network from becoming specialized to certain types of artifacts, such as those caused by heartbeats. We achieve this by introducing randomness and increasing the complexity of the input data. Specifically, the synthesized artifacts may consist of clean EEG, real artifacts, or both. These random and complex artifacts prevent the model from simply “remembering” artifact patterns while failing to capture the temporal and spatial relationships between data points. By evaluating the imputation methods on the synthesized artifacts setup, we aim to test each algorithm's robustness and to extend EEG usage to scenarios that introduce different artifact types. The synthesized artifacts are obtained automatically, as opposed to the manual effort required to detect real artifacts using ICA. This setup is therefore a step toward automatic EEG artifact correction pipelines in which real artifacts can be detected without relying heavily on human effort. Following automatic artifact detection, our proposed imputation algorithm can be applied in the artifact correction process to generate a denoised EEG signal.

6. Results

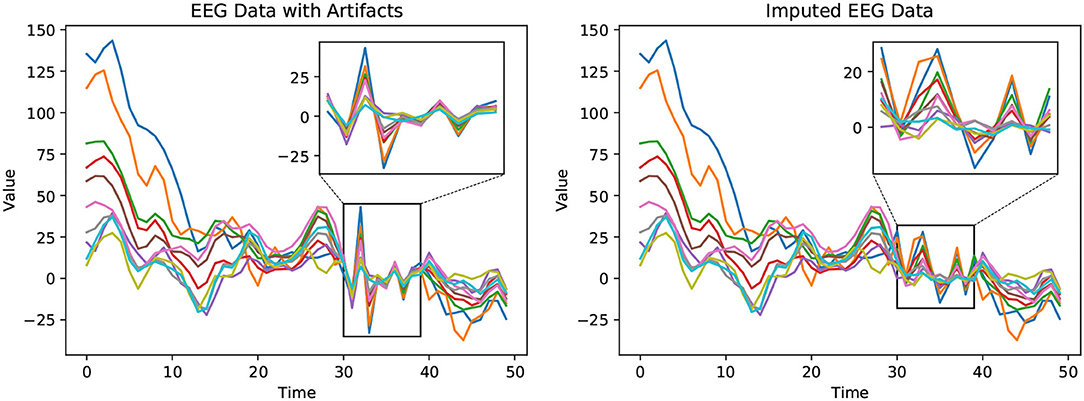

6.1. Detected Artifacts Imputation

We qualitatively compare the observed EEG data with the corresponding corrected EEG data output by our proposed imputation method, as shown in Figure 3. From a random trial in the Bike-Denoised_1 Hz dataset, we randomly select the EEG data collected by 10 of the 32 channels, which avoids crowding the plots while preserving generality. Each channel is plotted in a unique color. Marked by the boxes, signals from timestamps 30–40 are detected as contaminated data. The artifacts are visible mostly as extreme values in this time window. After applying our proposed imputation method, as shown in the right plot, the contaminated data has been corrected by taking into account values from neighboring channels and timestamps, and by considering the trial state feature. The imputed values replace the extremely large or small values while maintaining the overall waveform trend.

Figure 3. Observed EEG data with artifacts (left) and the corrected EEG data with imputed values (right). The plots depict EEG waveforms recorded from ten randomly selected channels (32 channels in total) in the Bike-Denoised_1Hz dataset. In the left figure, values in the rectangle are detected as signals with artifacts. The right figure shows the corrected EEG signal with imputed values in the box. The extreme values caused by artifacts have been corrected by our proposed method, SRI-EEG.

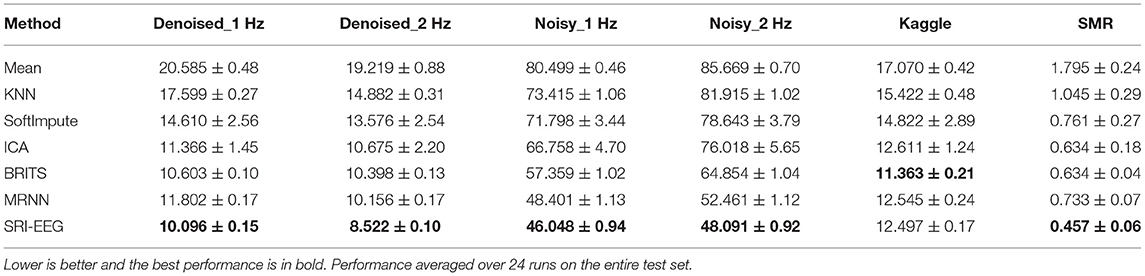

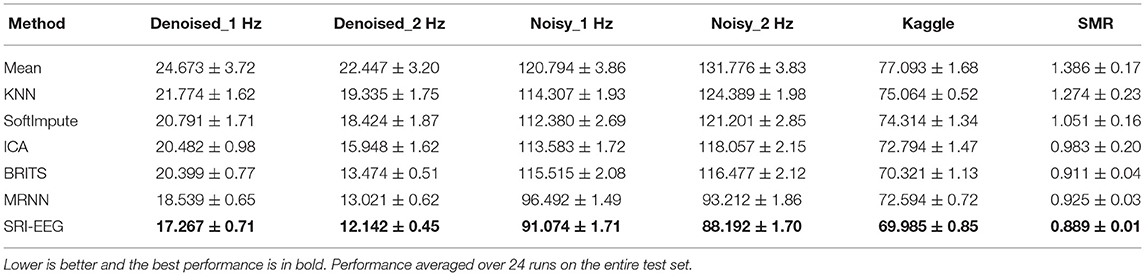

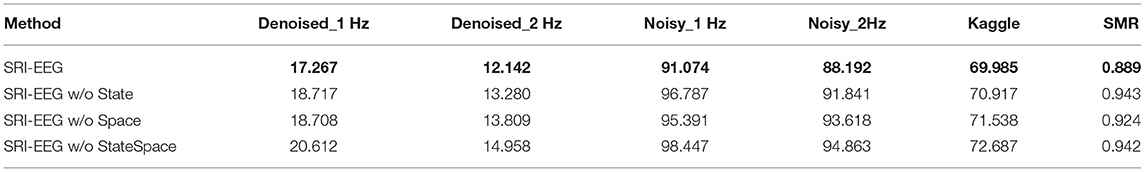

We also conduct a quantitative study; Tables 2 and 3 compare the imputation performance on the detected EEG artifacts. The results vary widely across datasets because the occurrence of detected artifacts differs considerably. The two denoised Bike datasets present lower MAE and RMSE than the corresponding noisy sets. For each dataset, imputation performance improves from the top row of the tables to the bottom. Our method performs well on all three datasets and in general achieves lower errors than the competing approaches. Overall, the results demonstrate our proposed method's ability to correct real EEG artifacts.

To assess the statistical significance of the imputation results reported in Table 2, we performed a Wilcoxon signed-rank test over all the imputed values, averaged over 10 runs, on the Denoised_1 Hz, Denoised_2 Hz, Noisy_1 Hz, Noisy_2 Hz, Kaggle, and SMR test sets. We report the full results in Supplementary Tables 1–6 and Supplementary Figures 1–6. Here we summarize the main findings.

Denoised_1 Hz dataset. Absolute error for the imputed values differed significantly among the compared imputation methods according to the Friedman test (p < 0.0001, n = 1,980). All pairwise differences between SRI-EEG and the other methods were significant at least at the p < 0.001 level. The exception is SRI-EEG vs. ICA, which was significant at the p < 0.05 level instead.

Denoised_2 Hz dataset. Absolute error for the imputed values differed significantly among the compared imputation methods according to the Friedman test (p < 0.0001, n = 1,980). All pairwise differences between SRI-EEG and the other methods were significant at least at the p < 0.001 level.

Noisy_1 Hz dataset. Absolute error for the imputed values differed significantly among the compared imputation methods according to the Friedman test (p < 0.0001, n = 1,980). All pairwise differences between SRI-EEG and the other methods were significant at least at the p < 0.001 level. The exceptions are SRI-EEG vs. MRNN and SRI-EEG vs. BRITS, both of which were non-significant (p > 0.05).

Noisy_2 Hz dataset. Absolute error for the imputed values differed significantly among the compared imputation methods according to the Friedman test (p < 0.0001, n = 1,980). All pairwise differences between SRI-EEG and the other methods were significant at least at the p < 0.001 level.

Kaggle dataset. Absolute error for the imputed values differed significantly among the compared imputation methods according to the Friedman test (p < 0.0001, n = 1,980).

SMR dataset. Absolute error for the imputed values differed significantly among the compared imputation methods according to the Friedman test (p < 0.0001, n = 1,980). All pairwise differences between SRI-EEG and the other methods were significant at least at the p < 0.001 level.
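The testing procedure summarized above (an omnibus Friedman test followed by pairwise Wilcoxon signed-rank tests against SRI-EEG) can be sketched with SciPy as follows. The sketch assumes that each method's absolute errors are stored as a 1D array over the same imputed entries; the function name `compare_methods` and the dictionary layout are hypothetical, and no multiple-comparison correction is applied because none is described in the text.

```python
import numpy as np
from scipy.stats import friedmanchisquare, wilcoxon

def compare_methods(abs_errors, reference="SRI-EEG"):
    """Friedman test across all methods, then Wilcoxon tests against `reference`.

    `abs_errors` maps a method name to a 1D array of absolute errors measured
    on the same imputed entries (paired samples); at least three methods are
    required by the Friedman test.
    """
    names = list(abs_errors)
    omnibus = friedmanchisquare(*(np.asarray(abs_errors[n]) for n in names))
    pairwise = {}
    for name in names:
        if name != reference:
            result = wilcoxon(abs_errors[reference], abs_errors[name])
            pairwise[name] = result.pvalue
    return omnibus.statistic, omnibus.pvalue, pairwise
```

Both tests are non-parametric and paired, which suits absolute errors that are measured on identical entries for every method and are unlikely to be normally distributed.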

6.2. Synthesized Artifacts Imputation

6.2.1. Imputation With the Same Synthesized Artifact Rate

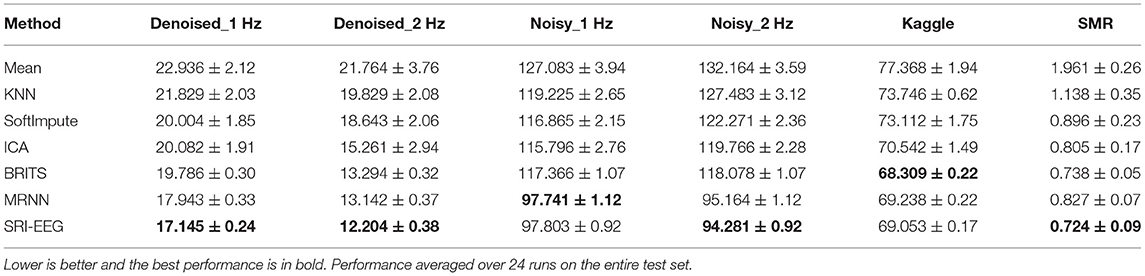

Tables 4 and 5 show the performance of the different imputation methods on all the datasets at a 10% artifact rate. Simply replacing artifacts with the mean of the observed data is highly inaccurate. KNN and SoftImpute perform much better than mean imputation, and using ICA to detect and remove artifacts outperforms all three. The two RNN-based methods, BRITS and MRNN, improve further on the non-deep-learning approaches. SRI-EEG shows comparable performance to BRITS and MRNN.

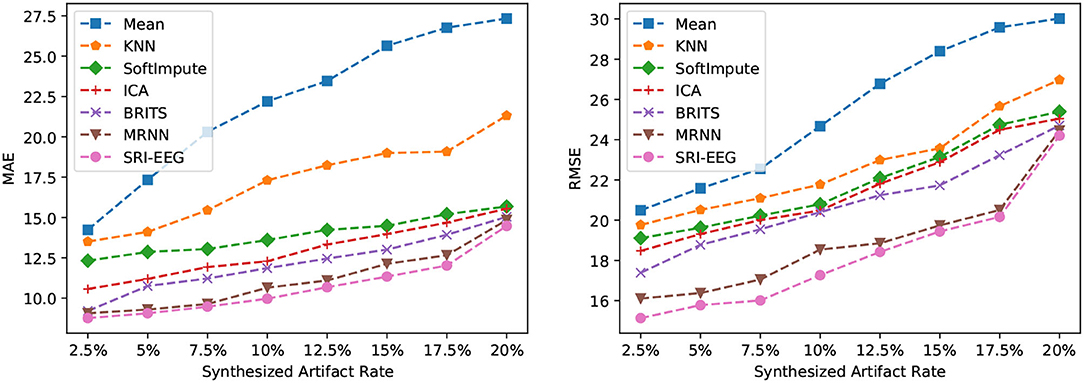

6.2.2. Imputation With Varying Synthesized Artifact Rates

On the Bike-Denoised_1 Hz dataset, we evaluate the imputation performance at various artifact rates. Figure 4 shows the MAE (left) and RMSE (right) of the different imputation methods at artifact rates from 2.5 to 20%. Both errors increase as the artifact rate increases. In particular, simple mean imputation is very sensitive to artifacts: its errors grow rapidly as the number of artifacts increases. KNN, SoftImpute, and ICA perform much better than mean imputation. The two RNN-based methods (BRITS and MRNN) and our method achieve even lower imputation errors than the non-RNN-based approaches at all artifact rates. This study demonstrates our model's robustness to varying proportions of artifacts in the signal, and the effectiveness of deep-learning-based methods in general for imputation at different artifact rates.

Figure 4. Imputation performance of the different synthesized artifact rates on the Bike-Denoised_1Hz dataset. (Left) Performance evaluated by MAE. (Right) Performance evaluated by RMSE. For both metrics, lower is better.

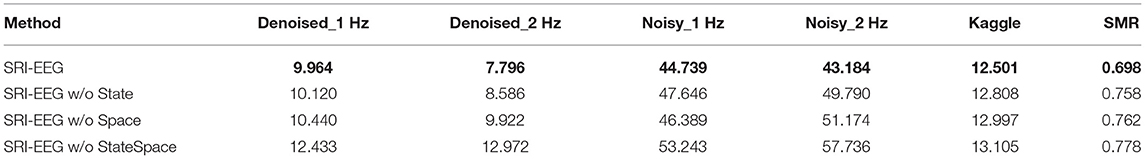

6.3. Ablation Study

6.3.1. Influence of EEG Channel Spatial Dependency and EEG Trial State

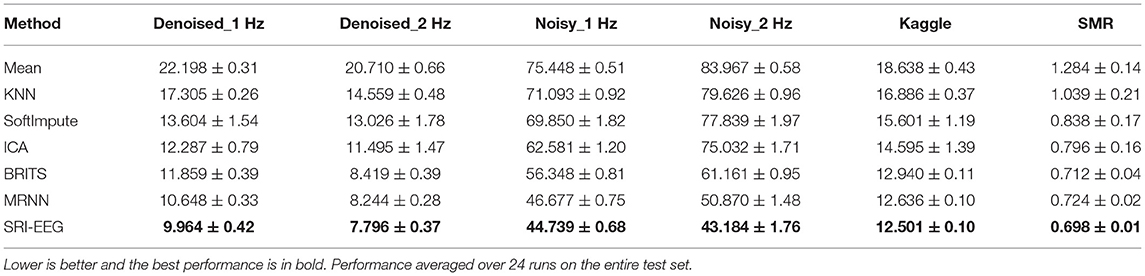

We perform an ablation study to evaluate the effectiveness of including EEG state and channel spatial correlation as network inputs. As shown in Tables 6 and 7, removing the trial state supervision, the spatial decay, or both from SRI-EEG degrades the imputation performance on all the datasets, as measured by MAE and RMSE. Based on these results, both factors appear to be important contributors to our algorithm's performance and to its effectiveness on the EEG imputation task compared to other methods.

Table 6. Ablation study of EEG state and channel spatial correlation in SRI-EEG on the three datasets with 10% artifact rate (MAE, lower is better and the best performance is in bold).

Table 7. Ablation study of EEG state and channel spatial correlation in SRI-EEG on the three datasets with 10% artifact rate (RMSE, lower is better and the best performance is in bold).

6.4. Post-imputation Analysis

6.4.1. Goal

To evaluate SRI-EEG on a practical task, we conducted post-imputation analysis in the form of an EEG classification problem. Our aim was to analyze if our model can correct artifacts in EEG and further boost the EEG classification performance. We designed this study as an indirect reflection of the imputation performance and a first step toward using imputation based EEG artifact correction to support EEG-based applications.

6.4.2. Dataset and Metrics

We performed analysis on the Bike dataset, i.e., Denoised_1 Hz, Denoised_2 Hz, Noisy_1 Hz, and Noisy_2 Hz. The Bike dataset consists of P300 ERPs from three-stimuli oddball tasks (introduced in Section 4.1). Therefore, we conduct the classification experiments to evaluate the three-class classification performance.

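We use precision, recall and F1 score as the classification metrics:

$$\text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Recall} = \frac{TP}{TP + FN}, \qquad \text{F1} = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}},$$

where TP, FP, and FN stand for “true positive,” “false positive,” and “false negative,” respectively.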
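For a multi-class problem such as the three-class task considered here, TP, FP, and FN are counted per class in a one-vs-rest fashion. The sketch below computes the three metrics for each class in plain Python; the function name is hypothetical, and how the per-class scores are aggregated into the reported numbers is not specified in the text, so no averaging is shown.

```python
def per_class_scores(y_true, y_pred, labels):
    """Return {label: (precision, recall, f1)} using one-vs-rest counts."""
    scores = {}
    pairs = list(zip(y_true, y_pred))
    for label in labels:
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        scores[label] = (precision, recall, f1)
    return scores
```

Reporting precision and recall separately, rather than accuracy alone, matters for oddball paradigms in which the target class is much rarer than the standard class.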

6.4.3. Setup

We take the classification performance without imputation as a baseline. Specifically, based on the recorded EEG data, i.e., no imputation applied, we perform baseline classification using xDAWN+RG, which is a multi-class EEG classifier (Barachant et al., 2011). Compared against this baseline, we classify the imputed EEG data using BRITS, MRNN, and SRI-EEG as the imputation methods, respectively.

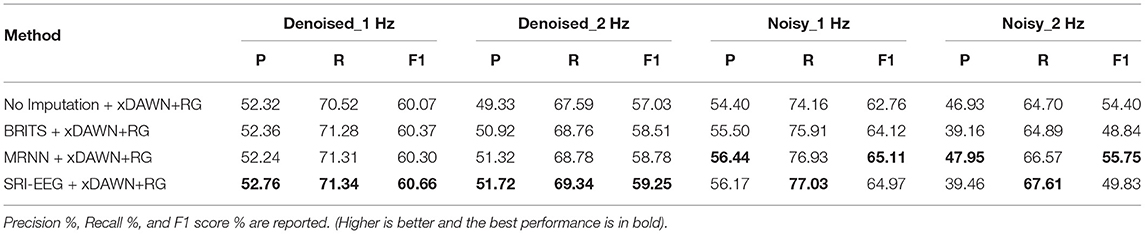

6.4.4. Results and Analysis

We present the classification comparisons in Table 8, with the best performance in bold. Higher precision and recall correspond to better performance. Classifying imputed EEG signals generally improves both precision and recall on the denoised and noisy Bike sets compared with directly classifying the raw data without artifact correction. Importantly, compared with the two RNN-based imputation methods, SRI-EEG achieves higher precision and recall on the Denoised_1 Hz and Denoised_2 Hz datasets. On the Noisy_1 Hz and Noisy_2 Hz datasets, precision is lower than with MRNN-based imputation, but recall is higher.

To assess the significance of the performance differences in Table 8, we performed McNemar's test over all the classified labels (1,200 labels) on the Denoised_1 Hz, Denoised_2 Hz, Noisy_1 Hz, and Noisy_2 Hz test sets. McNemar's test has been shown to be a suitable and effective way to compare machine learning classifiers (Dietterich, 1998). We report the full results in Supplementary Tables 7–10. Here we summarize the main findings.

(1) On the Denoised_1 Hz dataset, all pairwise differences between imputation with SRI-EEG and the other methods were significant at least at the p < 0.01 level. The exception is SRI-EEG vs. MRNN, which was significant at the p < 0.05 level instead. (2) On the Denoised_2 Hz dataset, all pairwise differences between imputation with SRI-EEG and the other methods were significant at least at the p < 0.05 level. (3) On the Noisy_1 Hz dataset, all pairwise differences between imputation with SRI-EEG and the other methods were significant at least at the p < 0.01 level. (4) On the Noisy_2 Hz dataset, all pairwise differences between imputation with SRI-EEG and the other methods were significant at least at the p < 0.01 level. The exception is SRI-EEG vs. MRNN, which was significant at the p < 0.05 level instead.
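McNemar's test compares two classifiers on the same test labels using only the cases on which they disagree. The following sketch implements the continuity-corrected form described by Dietterich (1998) with the Python standard library; the function name and argument layout are hypothetical, and the p-value uses the closed form of the chi-square survival function with one degree of freedom.

```python
import math

def mcnemar_test(y_true, pred_a, pred_b):
    """Continuity-corrected McNemar's test for two classifiers on the same labels.

    Returns (statistic, p_value). Only discordant cases contribute:
    b = A correct and B wrong, c = A wrong and B correct.
    """
    triples = list(zip(y_true, pred_a, pred_b))
    b = sum(a == t and p != t for t, a, p in triples)
    c = sum(a != t and p == t for t, a, p in triples)
    if b + c == 0:
        return 0.0, 1.0  # the classifiers never disagree
    statistic = (abs(b - c) - 1) ** 2 / (b + c)
    # Survival function of chi-square with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(statistic / 2.0))
    return statistic, p_value
```

In the post-imputation analysis, `pred_a` and `pred_b` would be the labels predicted by the same classifier after imputation with two different methods (for example, SRI-EEG and MRNN) on the 1,200 test labels.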

In summary, this study demonstrates that applying imputation for artifact correction can enable using EEG for practical scenarios such as classification. Our proposed EEG imputation technique can effectively recover EEG signals through data imputation and help further improve the post-imputation classification performance.

7. Limitations and Future Work

From the assessments presented in Section 6.1, our method achieves improved performance on imputing detected artifacts and thus demonstrates potential for use in real EEG artifact correction. However, when subjects perform long-term body motions during EEG data collection, the artifacts caused by muscle movements and cardiac activity can persist over a long time window or for the entire trial. These long-range artifacts significantly limit the amount of clean data available, which RNN-based imputation approaches require. We can observe this issue in the experiments on synthesized artifacts (Section 6.2.2): when the artifact rate reaches 17.5% and beyond, the imputation error increases rapidly. Thus, RNN-based imputation methods rely on having enough valid signal to predict/impute artifacts confidently. In future work, we plan to tackle this limitation inherent to RNNs by exploring reconstruction-based denoising approaches that can aid RNN imputation.

We model the spatial dependency of EEG signals as 3D distances between pairs of electrodes. This setup has proven effective for handling the linearly decaying influence across channels on the EEG cap. The linear decay dependency holds under the assumption that a single event/state is applied to the subject in each EEG trial and that the applied stimulus activates a specific region of the human brain. When multiple tasks are involved, e.g., clicking the mouse upon seeing a certain letter on the screen, multiple cortical regions (e.g., the motor cortex and the visual cortex) are activated. In such cases, a simple distance-based decay model may fail to capture the complex spatial relationships between electrodes placed above those cortical regions. Future research should address this limitation by using EEG state to support the modeling of EEG channel relationships, given that channel state and active brain regions are dependent. New work could also explore dynamic capture and computation of electrode relevance given different stimuli.
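To illustrate what a distance-based spatial dependency looks like, the sketch below computes pairwise 3D distances between electrodes and turns them into a weight that falls off linearly with distance. It is not the decay formulation used in SRI-EEG, which is defined in the method section and is not reproduced here: the normalization by the largest distance and the function names are assumptions made for this example.

```python
import numpy as np

def pairwise_distances(positions):
    """Euclidean distances between electrodes; positions has shape (channels, 3)."""
    positions = np.asarray(positions, dtype=float)
    diff = positions[:, None, :] - positions[None, :, :]
    return np.linalg.norm(diff, axis=-1)

def linear_spatial_weights(positions):
    """Weights in [0, 1] that decrease linearly with inter-electrode distance.

    A channel has weight 1 with itself and weight 0 with the farthest pair
    (an illustrative normalization, not the paper's).
    """
    dist = pairwise_distances(positions)
    return 1.0 - dist / dist.max()
```

Such a fixed matrix depends only on the cap geometry, which illustrates the limitation discussed above: it cannot change when a task activates several distant cortical regions at once.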

8. Conclusion

In this paper, we introduced an imputation-supported artifact removal algorithm to correct the irregular and non-uniformly distributed artifacts that are ubiquitous in EEG signal data. Our imputation approach enables automatic EEG artifact correction with significantly reduced human effort compared to other methods. Our method uses a bidirectional LSTM, which prior work has shown to be effective, as the backbone network to learn the temporal dependencies in the EEG signal. Into this backbone, we introduce EEG trial state data and spatial correlations between EEG electrodes as supervised information to update the bidirectional hidden states. We evaluated our proposed method via qualitative and quantitative analyses. From the evaluations, we demonstrated that SRI-EEG can greatly improve EEG imputation performance under multiple setups on all the tested datasets. Furthermore, we examined our network design with ablation studies and conducted post-imputation analysis to discuss potential applications that can be supported by imputation-based EEG artifact correction. We believe that imputation approaches can improve the effectiveness, robustness, and generalization of EEG-enabled BCI applications, and see our approach as an important step toward that goal.

Data Availability Statement

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author/s.

Author Contributions

The ideas presented in the paper were formulated through a series of meetings to which all authors contributed. All authors contributed to the conception and design of the evaluation. YL organized the database, performed the experiments and analyses, and wrote the first draft of the manuscript. All authors contributed to manuscript revision, read, and approved the submitted version.

Funding

This work was supported in part by NSF award IIS-1845587 as well as ONR awards N00014-19-1-2553 and N00174-19-1-0024.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

We would like to thank Barry Giesbrecht and Tom Bullock for providing and contextualizing the bike dataset and also Yi Ding for valuable discussions. We would also like to thank the reviewers for providing insightful and constructive feedback.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fncom.2022.803384/full#supplementary-material

Footnotes

1. ^Galea: https://openbci.com/community/introducing-galea-bci-hmd-biosensing/.

2. ^Emotiv: https://www.emotiv.com/.

3. ^Advanced Brain Monitoring: https://www.advancedbrainmonitoring.com/.

4. ^Muse: https://choosemuse.com/.

5. ^OpenBCI: https://openbci.com/.

6. ^Kokoon: https://kokoon.io/.

7. ^https://www.kaggle.com/c/inria-bci-challenge

8. ^http://bbci.de/competition/iv/

9. ^KNN and SoftImpute are implemented using the Fancy Impute package: https://github.com/iskandr/fancyimpute.

10. ^ICA is implemented using the Predictive Imputer package: https://github.com/log0ymxm/predictive_imputer, and sklearn FastICA: https://scikit-learn.org/stable/modules/generated/sklearn.decomposition.FastICA.html.

11. ^MRNN and BRITS are implemented based on the public BRITS repository: https://github.com/caow13/BRITS.

References

Anderer, P., Roberts, S., Schlögl, A., Gruber, G., Klösch, G., Herrmann, W., et al. (1999). Artifact processing in computerized analysis of sleep EEG-a review. Neuropsychobiology 40, 150–157. doi: 10.1159/000026613

Anindya, S. F., Rachmat, H. H., and Sutjiredjeki, E. (2016). “A prototype of SSVEP-based BCI for home appliances control,” in 2016 1st International Conference on Biomedical Engineering (IBIOMED) (Yogyakarta), 1–6. doi: 10.1109/IBIOMED.2016.7869810

Aricó, P., Borghini, G., Di Flumeri, G., Sciaraffa, N., and Babiloni, F. (2018). Passive BCI beyond the lab: current trends and future directions. Physiol. Meas. 39:08TR02. doi: 10.1088/1361-6579/aad57e

Arroyo, S., Lesser, R. P., Gordon, B., Uematsu, S., Jackson, D., and Webber, R. (1993). Functional significance of the mu rhythm of human cortex: an electrophysiologic study with subdural electrodes. Electroencephalogr. Clin. Neurophysiol. 87, 76–87. doi: 10.1016/0013-4694(93)90114-B

Aydemir, O., and Kayikcioglu, T. (2014). Decision tree structure based classification of EEG signals recorded during two dimensional cursor movement imagery. J. Neurosci. Methods 229, 68–75. doi: 10.1016/j.jneumeth.2014.04.007

Azur, M. J., Stuart, E. A., Frangakis, C., and Leaf, P. J. (2011). Multiple imputation by chained equations: what is it and how does it work? Int. J. Methods Psychiatr. Res. 20, 40–49. doi: 10.1002/mpr.329

Barachant, A., Bonnet, S., Congedo, M., and Jutten, C. (2011). Multiclass brain-computer interface classification by Riemannian geometry. IEEE Trans. Biomed. Eng. 59, 920–928. doi: 10.1109/TBME.2011.2172210

Berg, P., and Scherg, M. (1991). Dipole modelling of eye activity and its application to the removal of eye artefacts from the EEG and MEG. Clin. Phys. Physiol. Meas. 12:49. doi: 10.1088/0143-0815/12/A/010

Bian, N.-Y., Wang, B., Cao, Y., and Zhang, L. (2006). “Automatic removal of artifacts from EEG data using ICA and exponential analysis,” in International Symposium on Neural Networks (Berlin; Heidelberg: Springer), 719–726. doi: 10.1007/11760023_106

Bullock, T., Cecotti, H., and Giesbrecht, B. (2015). Multiple stages of information processing are modulated during acute bouts of exercise. Neuroscience 307, 138–150. doi: 10.1016/j.neuroscience.2015.08.046

Cao, W., Wang, D., Li, J., Zhou, H., Li, L., and Li, Y. (2018). “BRITS: bidirectional recurrent imputation for time series,” in Advances in Neural Information Processing Systems (Montréal, QC), 6775–6785.

Casarotto, S., Bianchi, A. M., Cerutti, S., and Chiarenza, G. A. (2004). Principal component analysis for reduction of ocular artefacts in event-related potentials of normal and dyslexic children. Clin. Neurophysiol. 115, 609–619. doi: 10.1016/j.clinph.2003.10.018

Che, Z., Purushotham, S., Cho, K., Sontag, D., and Liu, Y. (2018a). Recurrent neural networks for multivariate time series with missing values. Sci. Rep. 8:6085. doi: 10.1038/s41598-018-24271-9

Che, Z., Purushotham, S., Li, G., Jiang, B., and Liu, Y. (2018b). “Hierarchical deep generative models for multi-rate multivariate time series,” in International Conference on Machine Learning (Stockholm), 783–792.

Delorme, A., and Makeig, S. (2004). EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods 134, 9–21. doi: 10.1016/j.jneumeth.2003.10.009

Dietterich, T. G. (1998). Approximate statistical tests for comparing supervised classification learning algorithms. Neural Comput. 10, 1895–1923. doi: 10.1162/089976698300017197

Dimigen, O. (2020). Optimizing the ICA-based removal of ocular EEG artifacts from free viewing experiments. NeuroImage 207:116117. doi: 10.1016/j.neuroimage.2019.116117

Ding, Y., Huynh, B., Xu, A., Bullock, T., Cecotti, H., Turk, M., et al. (2019). “Multimodal classification of EEG during physical activity,” in 2019 International Conference on Multimodal Interaction (ICMI'19) (Suzhou). doi: 10.1145/3340555.3353759

Fazel-Rezai, R., Allison, B. Z., Guger, C., Sellers, E. W., Kleih, S. C., and Kübler, A. (2012). P300 brain computer interface: current challenges and emerging trends. Front. Neuroeng. 5:14. doi: 10.3389/fneng.2012.00014

Flexer, A., Bauer, H., Pripfl, J., and Dorffner, G. (2005). Using ICA for removal of ocular artifacts in EEG recorded from blind subjects. Neural Netw. 18, 998–1005. doi: 10.1016/j.neunet.2005.03.012

Friedman, J., Hastie, T., and Tibshirani, R. (2001). The Elements of Statistical Learning, Vol. 1. Springer Series in Statistics. New York, NY. doi: 10.1007/978-0-387-21606-5_1

Goldberger, A. L., Amaral, L. A., Glass, L., Hausdorff, J. M., Ivanov, P. C., Mark, R. G., et al. (2000). Physiobank, physiotoolkit, and physionet: components of a new research resource for complex physiologic signals. Circulation 101, e215–e220. doi: 10.1161/01.CIR.101.23.e215

Goverdovsky, V., Von Rosenberg, W., Nakamura, T., Looney, D., Sharp, D. J., Papavassiliou, C., et al. (2017). Hearables: multimodal physiological in-ear sensing. Sci. Rep. 7, 1–10. doi: 10.1038/s41598-017-06925-2

Henry, J. C. (2006). Electroencephalography: basic principles, clinical applications, and related fields. Neurology 67, 2092–2092. doi: 10.1212/01.wnl.0000243257.85592.9a

Jiang, X., Bian, G.-B., and Tian, Z. (2019). Removal of artifacts from EEG signals: a review. Sensors 19:987. doi: 10.3390/s19050987

Kingma, D. P., and Ba, J. (2015). “Adam: a method for stochastic optimization,” in International Conference on Learning Representations (ICLR) (San Diego, CA).

Klug, M., and Gramann, K. (2020). Identifying key factors for improving ICA-based decomposition of EEG data in mobile and stationary experiments. Eur. J. Neurosci. 54, 8406–8420. doi: 10.1101/2020.06.02.129213

Krusienski, D. J., Sellers, E. W., McFarland, D. J., Vaughan, T. M., and Wolpaw, J. R. (2008). Toward enhanced P300 speller performance. J. Neurosci. Methods 167, 15–21. doi: 10.1016/j.jneumeth.2007.07.017

Kumar, P., Saini, R., Sahu, P. K., Roy, P. P., Dogra, D. P., and Balasubramanian, R. (2017). “Neuro-phone: an assistive framework to operate smartphone using EEG signals,” in 2017 IEEE Region 10 Symposium (TENSYMP) (Cochin), 1–5. doi: 10.1109/TENCONSpring.2017.8070065

Latif, M. Y., Naeem, L., Hafeez, T., Raheel, A., Saeed, S. M. U., Awais, M., et al. (2017). “Brain computer interface based robotic arm control,” in 2017 International Smart Cities Conference (ISC2) (Wuxi), 1–5. doi: 10.1109/ISC2.2017.8090870

Lawhern, V. J., Solon, A. J., Waytowich, N. R., Gordon, S. M., Hung, C. P., and Lance, B. J. (2018). EEGNET: a compact convolutional neural network for EEG-based brain-computer interfaces. J. Neural Eng. 15:056013. doi: 10.1088/1741-2552/aace8c

Li, Y., Ma, Z., Lu, W., and Li, Y. (2006). Automatic removal of the eye blink artifact from EEG using an ica-based template matching approach. Physiol. Meas. 27:425. doi: 10.1088/0967-3334/27/4/008

Lotte, F. (2015). Signal processing approaches to minimize or suppress calibration time in oscillatory activity-based brain-computer interfaces. Proc. IEEE 103, 871–890. doi: 10.1109/JPROC.2015.2404941

Lotte, F., Congedo, M., Lécuyer, A., Lamarche, F., and Arnaldi, B. (2007). A review of classification algorithms for EEG-based brain-computer interfaces. J. Neural Eng. 4:R1. doi: 10.1088/1741-2560/4/2/R01

Luo, Y., Cai, X., Zhang, Y., Xu, J., and Yuan, X. (2018). “Multivariate time series imputation with generative adversarial networks,” in Proceedings of the 32nd International Conference on Neural Information Processing Systems (Montréal, QC), 1603–1614.

Margaux, P., Emmanuel, M., Sébastien, D., Olivier, B., and Jérémie, M. (2012). Objective and subjective evaluation of online error correction during p300-based spelling. Adv. Hum. Comput. Interact. 2012:578295. doi: 10.1155/2012/578295

Matthies, D. J. (2013). “InEar biofeedcontroller: a headset for hands-free and eyes-free interaction with mobile devices,” in CHI'13 Extended Abstracts on Human Factors in Computing Systems (Paris), 1293–1298. doi: 10.1145/2468356.2468587

Mazumder, R., Hastie, T., and Tibshirani, R. (2010). Spectral regularization algorithms for learning large incomplete matrices. J. Mach. Learn. Res. 11, 2287–2322.

Miao, X., Wu, Y., Wang, J., Gao, Y., Mao, X., and Yin, J. (2021). “Generative semi-supervised learning for multivariate time series imputation,” in Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 35 (Virtual), 8983–8991.

Miller, D., Ward, A., Bambos, N., Scheinker, D., and Shin, A. (2018). “Physiological waveform imputation of missing data using convolutional autoencoders,” in 2018 IEEE 20th International Conference on e-Health Networking, Applications and Services (Healthcom) (Ostrava), 1–6. doi: 10.1109/HealthCom.2018.8531094

Moritz, S., and Bartz-Beielstein, T. (2017). imputeTS: time series missing value imputation in R. R J. 9:207. doi: 10.32614/RJ-2017-009

Pfurtscheller, G., and Neuper, C. (2001). Motor imagery and direct brain-computer communication. Proc. IEEE 89, 1123–1134. doi: 10.1109/5.939829

Rashid, M., Sulaiman, N., Mustafa, M., Khatun, S., and Bari, B. S. (2018). “The classification of EEG signal using different machine learning techniques for BCI application,” in International Conference on Robot Intelligence Technology and Applications (Putrajaya: Springer), 207–221. doi: 10.1007/978-981-13-7780-8_17

Rejer, I., and Górski, P. (2015). “Benefits of ICA in the case of a few channel EEG,” in 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) (Milan), 7434–7437. doi: 10.1109/EMBC.2015.7320110

Rejer, I., and Górski, P. (2019). MAICA: an ICA-based method for source separation in a low-channel EEG recording. J. Neural Eng. 16:056025. doi: 10.1088/1741-2552/ab36db

Santamaría-Vázquez, E., Martínez-Cagigal, V., Gomez-Pilar, J., and Hornero, R. (2019). “Deep learning architecture based on the combination of convolutional and recurrent layers for ERP-based brain-computer interfaces,” in Mediterranean Conference on Medical and Biological Engineering and Computing (Coimbra: Springer), 1844–1852. doi: 10.1007/978-3-030-31635-8_224

Santamaría-Vázquez, E., Martínez-Cagigal, V., Vaquerizo-Villar, F., and Hornero, R. (2020). Eeg-inception: a novel deep convolutional neural network for assistive ERP-based brain-computer interfaces. IEEE Trans. Neural Syst. Rehabil. Eng. 28, 2773–2782. doi: 10.1109/TNSRE.2020.3048106

Schirrmeister, R. T., Springenberg, J. T., Fiederer, L. D. J., Glasstetter, M., Eggensperger, K., Tangermann, M., et al. (2017). Deep learning with convolutional neural networks for EEG decoding and visualization. Hum. Brain Mapp. 38, 5391–5420. doi: 10.1002/hbm.23730

Sejnowski, T. J. (1996). “Independent component analysis of electroencephalographic data,” in Advances in Neural Information Processing Systems 8: Proceedings of the 1995 Conference, Vol. 8 (Denver, CO: MIT Press), 145.

Singla, R., Khosla, A., and Jha, R. (2014). Influence of stimuli colour in SSVEP-based BCI wheelchair control using support vector machines. J. Med. Eng. Technol. 38, 125–134. doi: 10.3109/03091902.2014.884179

Somers, B., and Bertrand, A. (2016). Removal of eye blink artifacts in wireless EEG sensor networks using reduced-bandwidth canonical correlation analysis. J. Neural Eng. 13:066008. doi: 10.1088/1741-2560/13/6/066008

Suo, Q., Yao, L., Xun, G., Sun, J., and Zhang, A. (2019). “Recurrent imputation for multivariate time series with missing values,” in 2019 IEEE International Conference on Healthcare Informatics (ICHI) (Xi'an), 1–3. doi: 10.1109/ICHI.2019.8904638

Tang, X., Yao, H., Sun, Y., Aggarwal, C., Mitra, P., and Wang, S. (2020). “Joint modeling of local and global temporal dynamics for multivariate time series forecasting with missing values,” in Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 34 (New York, NY), 5956–5963. doi: 10.1609/aaai.v34i04.6056

Tangermann, M., Müller, K.-R., Aertsen, A., Birbaumer, N., Braun, C., Brunner, C., et al. (2012). Review of the BCI competition IV. Front. Neurosci. 6:55. doi: 10.3389/fnins.2012.00055

Ting, K., Fung, P., Chang, C., and Chan, F. (2006). Automatic correction of artifact from single-trial event-related potentials by blind source separation using second order statistics only. Med. Eng. Phys. 28, 780–794. doi: 10.1016/j.medengphy.2005.11.006

Tran, A., Mathews, A., Ong, C. S., and Xie, L. (2021). “Radflow: A recurrent, aggregated, and decomposable model for networks of time series,” in Proceedings of the Web Conference 2021 (Virtual), 730–742. doi: 10.1145/3442381.3449945

van de Laar, B., Gürkök, H., Bos, D. P.-O., Poel, M., and Nijholt, A. (2013). Experiencing BCI control in a popular computer game. IEEE Trans. Comput. Intell. AI Games 5, 176–184. doi: 10.1109/TCIAIG.2013.2253778

Wen, D., Wei, Z., Zhou, Y., Bian, Z., and Yin, S. (2019). “Classification of ERP signals from mild cognitive impairment patients with diabetes using dual input encoder convolutional neural network,” in 2019 IEEE International Conference on Computational Intelligence and Virtual Environments for Measurement Systems and Applications (CIVEMSA) (Tianjin), 1–4. doi: 10.1109/CIVEMSA45640.2019.9071592

Willett, F. R., Avansino, D. T., Hochberg, L. R., Henderson, J. M., and Shenoy, K. V. (2021). High-performance brain-to-text communication via handwriting. Nature 593, 249–254. doi: 10.1038/s41586-021-03506-2

Winkler, I., Haufe, S., and Tangermann, M. (2011). Automatic classification of artifactual ICA-components for artifact removal in EEG signals. Behav. Brain Funct. 7, 1–15. doi: 10.1186/1744-9081-7-30

Yoon, J., Jordon, J., and van der Schaar, M. (2018). “GAIN: missing data imputation using generative adversarial nets,” in International Conference on Machine Learning (Stockholm), 5689–5698.

Yoon, J., Zame, W. R., and van der Schaar, M. (2017). “Multi-directional recurrent neural networks: a novel method for estimating missing data,” in Time Series Workshop at the 34th International Conference on Machine Learning (Sydney), 1–5.

Yuan, H., Xu, G., Yao, Z., Jia, J., and Zhang, Y. (2018). “Imputation of missing data in time series for air pollutants using long short-term memory recurrent neural networks,” in Proceedings of the 2018 ACM International Joint Conference and 2018 International Symposium on Pervasive and Ubiquitous Computing and Wearable Computers (Singapore), 1293–1300. doi: 10.1145/3267305.3274648

Zang, B., Lin, Y., Liu, Z., and Gao, X. (2021). A deep learning method for single-trial EEG classification in RSVP task based on spatiotemporal features of ERPs. J. Neural Eng. 18:0460c8. doi: 10.1088/1741-2552/ac1610

Zerveas, G., Jayaraman, S., Patel, D., Bhamidipaty, A., and Eickhoff, C. (2021). “A transformer-based framework for multivariate time series representation learning,” in Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining (Virtual), 2114–2124. doi: 10.1145/3447548.3467401

Zhang, J., and Yin, P. (2019). “Multivariate time series missing data imputation using recurrent denoising autoencoder,” in 2019 IEEE International Conference on Bioinformatics and Biomedicine (BIBM) (San Diego, CA), 760–764. doi: 10.1109/BIBM47256.2019.8982996

Zhang, M.-L., and Zhou, Z.-H. (2007). ML-KNN: a lazy learning approach to multi-label learning. Pattern Recogn. 40, 2038–2048. doi: 10.1016/j.patcog.2006.12.019

Zhao, H., Yang, Y., Karlsson, P., and McEwan, A. (2020). Can recurrent neural network enhanced EEGNet improve the accuracy of ERP classification task? An exploration and a discussion. Health Technol. 10, 979–995. doi: 10.1007/s12553-020-00458-x

Keywords: EEG artifact correction, time series imputation, robust EEG sensing, brain computer interface, recurrent neural networks

Citation: Liu Y, Höllerer T and Sra M (2022) SRI-EEG: State-Based Recurrent Imputation for EEG Artifact Correction. Front. Comput. Neurosci. 16:803384. doi: 10.3389/fncom.2022.803384

Received: 27 October 2021; Accepted: 21 April 2022; Published: 20 May 2022.

Edited by:

Yuanyuan Mi, Chongqing University, China

Reviewed by:

Aleksandra Dagmara Kawala-Sterniuk, Opole University of Technology, Poland

Jason Scott Metcalfe, US Army Research Laboratory, United States

Copyright © 2022 Liu, Höllerer and Sra. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yimeng Liu, yimengliu@ucsb.edu
