Neural Code—Neural Self-information Theory on How Cell-Assembly Code Rises from Spike Time and Neuronal Variability

- ¹Brain and Behavior Discovery Institute, Medical College of Georgia, Augusta University, Augusta, GA, United States

- ²The Brain Decoding Center, BanNa Biomedical Research Institute, Yunnan Academy of Science and Technology, Yunnan Province, China

A major stumbling block to cracking the real-time neural code is neuronal variability: neurons discharge spikes with enormous variability not only across trials within the same experiment but also in resting states. Such variability is widely regarded as noise and is often deliberately averaged out during data analysis. In contrast to this dogma, we put forth the Neural Self-Information Theory, which proposes that neural coding operates on the self-information principle, under which variability in the durations of inter-spike intervals (ISIs), or neuronal silence durations, is self-tagged with discrete information. Under this principle, each ISI carries a certain amount of information based on its variability-probability distribution: higher-probability ISIs, which reflect the balanced excitation-inhibition ground state, convey minimal information, whereas lower-probability ISIs, which signify rare-occurrence surprisals in the form of extremely transient or prolonged silences, carry the most information. These variable silence durations are naturally coupled with intracellular biochemical cascades, energy equilibrium and dynamic regulation of protein and gene expression levels. As such, this silence variability-based self-information code is completely intrinsic to the neurons themselves, with no need for outside observers to set any reference point, as is typically required in the rate code, population code and temporal code models. Moreover, temporally coordinated ISI surprisals across a cell population can inherently give rise to robust real-time cell-assembly codes that can be readily sensed by downstream neural clique assemblies. One immediate utility of this self-information code is a general decoding strategy for uncovering, in an unbiased manner, a variety of cell-assembly patterns underlying external and internal categorical or continuous variables.

Two hard problems lie at the heart of brain decoding research: what is the basic wiring logic of the brain, and what is the basic operational rule for representing real-time information? With 86 billion neurons and 100 trillion synaptic connections in the human brain, it is conceivable that understanding the brain's basic wiring logic is the foundation upon which dynamic coding of cognitive information can be meaningfully executed (Hebb, 1949; Brenner and Sejnowski, 2011; Tsien, 2015a,b). In the absence of such an overarching framework describing how neurons connect or organize themselves, merely reading out neural signals that correspond to external stimulus identity is much like a fictional biologist who can discern a foreign message from a radio yet has no idea how radios work. We refer to this Connectivity Logic problem as “The Dead Brain's problem,” because the wiring logic of a live brain would remain the same even if the brain suddenly died (or were dropped into a liquid nitrogen tank). Emerging studies have revealed that neural clique assemblies, the brain's basic computational motif, are organized via the power-of-two-based permutation logic to generate not only specific perceptions and memories but also generalized knowledge and adaptive behaviors (Tsien, 2015a,b; Xie et al., 2016). Along this line of investigation, there are many fascinating questions as to how development and evolution might use various components to construct complex neural circuits and brains across a wide range of animal species to give rise to both specific and general functions (Rakic, 2009; Grillner et al., 2013; Tsien et al., 2013; Defelipe, 2015; Kiehn, 2016; Tsien, 2016).

The second hard problem relates to cracking the neural code, the rule under which information is signaled inside the brain in real time. We refer to it as “The Live Brain's problem”: how information is dynamically represented by patterns of action potentials, or spikes, generated by neurons in relevant brain regions corresponding to moment-to-moment perceptions, memories, creative thoughts and behaviors (Tulving, 1972; Squire and Zola, 1998; Brown et al., 2004; Tsien, 2007; Zhang et al., 2013; Kiehn, 2016). This effort to examine how spike patterns signal stimulus identity has often been dubbed “cracking the neural code.”

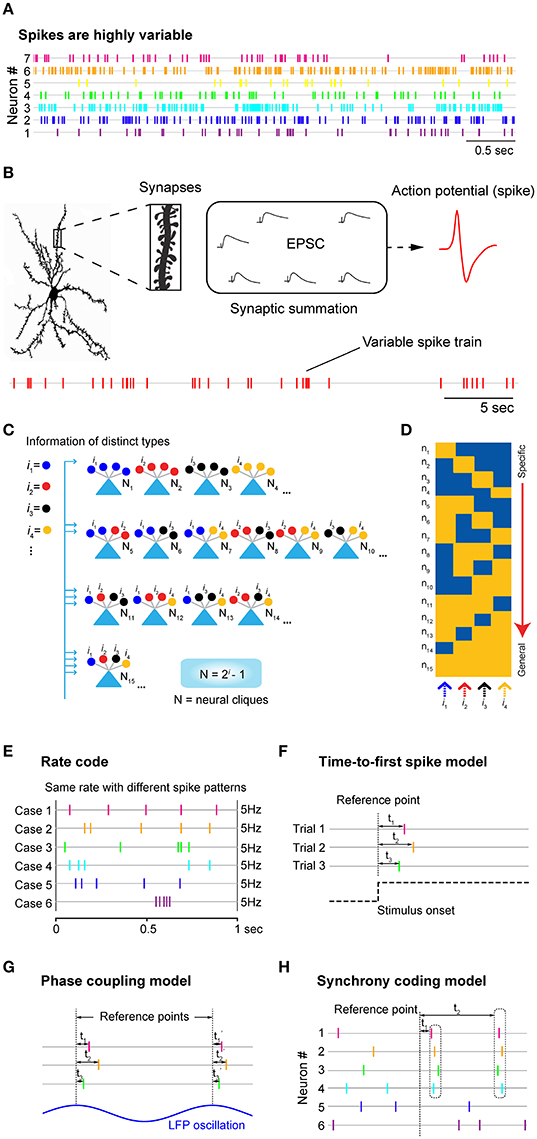

The search for the neural code has a long history, dating back to Edgar Adrian and Yngve Zotterman's original observation in the 1920s that sensory nerves innervating a muscle emitted more spikes in response to increasing amounts of weight hung from the muscle (Adrian and Zotterman, 1926). This landmark work established the central dogma that neurons encode information by changing their firing rates. Yet the stumbling block in cracking the real-time neural code is neuronal variability. Over the decades, researchers have realized that neurons in every type of neural circuit, whether engaged in processing touch, smell, vision, hearing, motor action, spatial navigation or associative memories, discharge spikes all the time with tremendous variability, both in “control” resting states (awake or asleep) and across trials within the same experiments (Figure 1A; Werner and Mountcastle, 1963; Poggio and Viernstein, 1964; Ratliff et al., 1968; Georgopoulos et al., 1986; Shadlen and Newsome, 1994; Fenton and Muller, 1998; Stein et al., 2005; Churchland et al., 2010). This notorious spike variability has made the rate code and temporal code models ill-suited to reliably predicting stimulus identity on a moment-to-moment basis (Eggermont, 1998; Fenton and Muller, 1998; Faisal et al., 2008). The current dogma is that firing variability reflects noise or is a nuisance to outside observers. This view is reflected in the popular practice of averaging spike trains over multiple trials, as in the peri-stimulus time histogram (PSTH). Although such an averaging approach is useful for characterizing the tuning properties of the recorded neurons, it is generally agreed that it bears no resemblance to how neurons would signal information in real time.

Figure 1. Neuronal variability, underlying logic at synaptic and cell-assembly levels, and the traditional neural coding models. (A) Neurons discharge spikes all the time with enormous variability. The spike trains shown here are from seven units simultaneously recorded with tetrodes from the mouse prefrontal cortex during the animal's quiet-awake period. (B) A cortical neuron may contain tens of thousands of synapses, which can contribute to changes in excitatory postsynaptic potential (EPSP), leading to the generation of an action potential, or spike, at the soma. The stochastic nature of synaptic patterns leads to highly variable spike trains in both the resting “control” condition and stimulus-presentation experiments. (C) Power-of-two-based Cell-Assembly Wiring Logic as the brain's basic functional computational motif (FCM). A schematic illustration of a power-of-two connectivity motif consisting of 15 distinct neural cliques (N1–15) based on all possible connectivity patterns for processing 4 distinct inputs (i = 4). (D) This motif gives rise to a specific-to-general feature extraction assembly. (E) The rate code model emphasizes the number of spikes within a defined time window while ignoring the temporal structure of spike patterns. Five examples with the same firing rate (5 Hz) but completely different spike patterns are shown for illustration. (F) The time-to-first-spike model of the temporal code emphasizes that key information is encoded in the relative arrival time of the first spike after stimulus onset. (G) The phase-coupling model focuses on the temporal relationship between spike changes and local field potential (LFP) oscillation phases. (H) The synchrony code proposes that information coding and binding are achieved by some “uniquely meaningful” spikes that are transiently synchronized among different cells. In all cases, the rate code, population code, and temporal code models require a reference point (e.g., the time zero of stimulation or an oscillation phase) for data analysis. As such, these approaches are generally known as biased methods. Panels (E–H) are artistic illustrations for better visualizing the four popular coding models.

Does Neuronal Variability Reflect Noise or Something Else?

Currently, there are two schools of thought on what neuronal variability stands for. The first is the widely held view that firing fluctuations in neurons reflect noise arising at the molecular, synaptic, and circuit levels (Eggermont, 1998; Ermentrout et al., 2008; Faisal et al., 2008; Masquelier, 2013). This view has led to intense studies of the sources and degree of noise in experiments and simulations (Shadlen and Newsome, 1994; Stein et al., 2005; Faisal et al., 2008; Rolls and Deco, 2010; Boerlin and Deneve, 2011; Hartmann et al., 2015).

The second view is that neuronal variability is not entirely noise; rather, it may also reflect uncontrolled internal variables influenced by attention or intent, because the observed noise appears to be correlated within the recorded population (measured as noise correlation; Lee et al., 1998; Churchland et al., 2010; Marcos et al., 2013; Lin et al., 2015). A series of studies has also suggested that neuronal variability can be beneficial for boosting weak signals (Stacey and Durand, 2001) or can serve as a modulatory signal (Lee et al., 1998; Boerlin and Deneve, 2011; Kohn et al., 2016; Saberi-Moghadam et al., 2016).

While the question of whether neuronal variability reflects noise or unidentified modulatory signals is still under debate, it is known that neurons are capable of generating precisely timed spikes in response to fluctuating currents injected at the soma (Mainen and Sejnowski, 1995; Abbott and Sejnowski, 1999; Toups et al., 2012). Thus, spike variability is not due to imprecision in spike generation at the soma per se. At the structural and conceptual levels, it is not difficult to appreciate why such variability should be fully expected. Neurons in the mammalian brain contain many thousands of synaptic connections, ranging from ~30,000 synapses per pyramidal cell in the neocortex up to 200,000 synapses per Purkinje cell in the cerebellum (Andersen, 1990; Megias et al., 2001; Guillery, 2005; Herculano-Houzel, 2009; Defelipe, 2015). Summation of these postsynaptic currents triggers action potentials at the soma of the postsynaptic cell (Figure 1B). With ongoing inputs arriving from tens of thousands of excitatory synapses, as well as from hundreds or thousands of highly localized inhibitory synapses (Klausberger and Somogyi, 2008), spike emission in any given neuron would be stochastic, with enormous variability. In addition, other mechanisms and factors (e.g., slow neurotransmitters, hormonal peptides, and activity-dependent modulation of non-receptor ion channels) can also exert great influence on synaptic and membrane properties (Hille, 2001; Brenner et al., 2005; Gu et al., 2007), further contributing to spike-train variability.
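To make this argument concrete, the Python sketch below simulates a leaky integrate-and-fire (LIF) neuron whose spike generation is perfectly deterministic (a fixed voltage threshold) but whose input is the summed activity of many excitatory and inhibitory synapses. It is a hypothetical illustration, not a model from the cited studies: the synapse counts, rates, jump sizes and membrane constants are assumptions, and the summed Poisson input is replaced by its Gaussian (diffusion) approximation so the block needs only the standard library. With excitation and inhibition nearly balanced, the fluctuating drive alone produces irregular ISIs, summarized by their coefficient of variation (CV).

```python
import math
import random


def simulate_lif(n_exc=10000, n_inh=2500, rate_hz=5.0, w_exc=0.1, w_inh=0.35,
                 tau_m=0.02, v_thresh=20.0, v_reset=10.0, dt=1e-4,
                 duration=20.0, seed=0):
    """Simulate a leaky integrate-and-fire neuron; voltages in mV relative to rest.

    Every synapse is assumed to fire as an independent Poisson process at
    `rate_hz`, with fixed jump sizes `w_exc` / `w_inh` (mV). The summed input in
    each time step is replaced by a Gaussian with the same mean and variance
    (diffusion approximation). All parameter values are hypothetical.
    """
    rng = random.Random(seed)
    mean_jump = (n_exc * w_exc - n_inh * w_inh) * rate_hz * dt
    sd_jump = math.sqrt((n_exc * w_exc ** 2 + n_inh * w_inh ** 2) * rate_hz * dt)
    v, spikes = 0.0, []
    for step in range(int(duration / dt)):
        # Leak toward rest plus the fluctuating net synaptic drive.
        v += -v * dt / tau_m + rng.gauss(mean_jump, sd_jump)
        if v >= v_thresh:            # deterministic spike generation at threshold
            spikes.append(step * dt)
            v = v_reset
    return spikes


def isi_cv(spike_times):
    """Coefficient of variation (SD / mean) of the inter-spike intervals."""
    isis = [b - a for a, b in zip(spike_times, spike_times[1:])]
    mean = sum(isis) / len(isis)
    sd = math.sqrt(sum((x - mean) ** 2 for x in isis) / (len(isis) - 1))
    return sd / mean


spikes = simulate_lif()
print(len(spikes), "spikes, ISI CV =", round(isi_cv(spikes), 2))
```

Because the mean drive alone settles below threshold, spikes are triggered by input fluctuations, which is why the ISIs come out irregular even though the threshold mechanism itself is noiseless.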

Why Does a Neuron Need Tens of Thousands of Synapses? Binding vs. Approximation?

Theoretically, an increased number of synapses would give neurons a greater information-binding capacity. This would make sense if synapse numbers were on a smaller scale, say, several dozen synapses each processing a distinct feature so that binding could be performed (to form complex “grandmother cells” encoding a face, famous people or a nest; Gross et al., 2002; Quiroga et al., 2005; Lin et al., 2007). A recent analysis of face patches in the monkey brain suggests that face cells may use only 50 or fewer dimensions of facial features (Chang and Tsao, 2017). Therefore, we would argue that with 30,000 or more synapses per pyramidal neuron, the underlying logic may go beyond mere feature integration and information binding.

What good are tens of thousands of synapses? We suggest that the major purpose of such an elaborate structural arrangement is to achieve maximal feature approximation and efficient utilization of the evolutionarily selected neural clique assemblies. This maximal-approximation strategy is necessary for the brain to make the best use of the developmentally pre-configured functional computational motifs (FCMs) described by the Theory of Connectivity (Tsien, 2015a,b). The theory posits that, as the basic computational unit of the brain, an FCM organizes its principal cell assemblies via power-of-two-based permutation logic to form a comprehensive set of specific-to-general neural-clique assemblies ($N = 2^{i} - 1$, where $N$ is the number of neural cliques and $i$ is the number of distinct inputs; Figure 1C; Tsien, 2015a,b; Li et al., 2016). The proposed power-of-two-based computational logic has been observed, in the form of cell-assembly activation patterns, in at least seven different brain regions, ranging from the amygdala to the hippocampus to the cortex, in mice and hamsters (Xie et al., 2016; Figure 1D). In contrast to Hebb's postulate that cell assemblies are formed by learning, this basic logic appears to be pre-configured by development, since it remains largely intact in NMDA receptor knockout mice (Xie et al., 2016). Because of the power-of-two-based logic, the number of principal cells required to construct an FCM grows exponentially as the number of distinct inputs increases. For example, at least 1,023 principal projection cells would be required if the functional connectivity motif were set to process 10 distinct features or events (assuming one neuron per clique, according to $N = 2^{i} - 1$). As the number of categorically distinct inputs increases to 15, the minimal cell number would be $2^{15} - 1 = 32{,}767$.
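The power-of-two rule amounts to counting the non-empty subsets of $i$ distinct inputs: each subset corresponds to one clique, from the most specific cliques (a single input) to the most general clique (all inputs). The Python sketch below checks the arithmetic under the same one-neuron-per-clique assumption; the function names and input labels are illustrative placeholders, not part of the Theory of Connectivity's published formalism.

```python
from itertools import combinations


def clique_count(i):
    """Number of neural cliques under the power-of-two rule, N = 2**i - 1."""
    return 2 ** i - 1


def enumerate_cliques(inputs):
    """List every non-empty combination of distinct inputs (one clique each).

    Singletons are the most specific cliques; the full set is the most general.
    """
    return [combo for size in range(1, len(inputs) + 1)
            for combo in combinations(inputs, size)]


cliques = enumerate_cliques(["A", "B", "C", "D"])
print(len(cliques))                          # 15, matching Figure 1C for i = 4
print(cliques[:4], cliques[-1])              # the 4 specific cliques and the general one
print(clique_count(10), clique_count(15))    # 1023 32767
```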

If each neuron had only several dozen synapses, used merely for information integration and binding, such a narrow bandwidth would mean that the entire FCM would be unlikely to be used in an animal's lifetime unless information from peripheral sensors matched these cells' tuning properties exactly. On the other hand, the utility of the power-of-two-based FCM logic can be best realized by dramatically increasing the number of synapses per neuron, so that the approximation capacity (the degree to which similar features or categorical variables can be detected) is dramatically expanded (Tsien, 2016). At the structural level, synapses processing similar variables can be localized on the same dendritic branches (Lai et al., 2012) or on different branches (Basu et al., 2016). In any case, with tens of thousands of synapses per neuron, the FCM can be structured not only for information binding (which can be largely handled by the FCM's specific-to-general neural cliques) but also for maximal approximation and utilization. It would be of considerable interest to examine how synapse numbers and spike variability correlate.

How Do Rate Code, Temporal Code and Population Code Deal with Neuronal Variability?

The rate code refers to the notion that information about the stimulus is encoded by the firing rate of the neuron (Figure 1E). In practice, the rate is measured by counting spikes per second, or per defined (often smaller) time bin, before and after stimulus presentation, and it is typically averaged over multiple stimulus trials. This averaging procedure inherently assumes that spike variability reflects noise and that most, if not all, information is conveyed by spike counts. Any information possibly encoded in the temporal structure of the spike train is deliberately ignored (Figure 1E). While the rate code model is convenient for researchers defining the tuning properties of neurons by averaging spike responses over multiple trials, the brain is unlikely to generate perception, memory or action in real time using this procedure.
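The blindness of the rate readout to temporal structure, illustrated in Figure 1E, can be shown in a few lines. In the Python sketch below, three hypothetical 1-s spike trains (the spike times are made up) each contain five spikes, so a count-based readout returns 5 Hz for all of them, while their ISIs range from evenly spaced to a tight burst.

```python
def firing_rate(spike_times, t_start, t_stop):
    """Rate-code readout: spike count in [t_start, t_stop) divided by window length (Hz)."""
    count = sum(t_start <= t < t_stop for t in spike_times)
    return count / (t_stop - t_start)


def isis(spike_times):
    """Inter-spike intervals (s) of a sorted spike train."""
    return [b - a for a, b in zip(spike_times, spike_times[1:])]


# Hypothetical 1-s spike trains, each containing five spikes (cf. Figure 1E).
trains = {
    "regular": [0.1, 0.3, 0.5, 0.7, 0.9],
    "burst":   [0.10, 0.11, 0.12, 0.13, 0.14],
    "paired":  [0.10, 0.12, 0.50, 0.52, 0.90],
}
for name, train in trains.items():
    gaps = isis(train)
    print(f"{name:8s} rate = {firing_rate(train, 0.0, 1.0)} Hz, "
          f"ISI range = {min(gaps):.3f}-{max(gaps):.3f} s")
```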

To overcome the variability of individual neurons, researchers have applied population-vector or dimensionality-reduction classification methods that analyze rate-code information in the population activity of many neurons (Georgopoulos et al., 1986; Wilson and Mcnaughton, 1993; Lin et al., 2005; Chen et al., 2009; Luczak et al., 2015). Conceptually, the activity of neurons with similar tuning properties, termed neural cliques, can be temporally averaged to reduce individual variability (Lin et al., 2005, 2006; Zhang et al., 2013; Luczak et al., 2015). It should be noted that although the population-code approach has allowed researchers to sidestep the problem of neuronal variability observed at the single-neuron level, its underlying assumption that neuronal variability is noise remains the same.
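As an illustration of the population-vector idea (Georgopoulos et al., 1986), the Python sketch below decodes a direction from cosine-tuned neurons whose rates include trial-to-trial variability. The number of neurons, their evenly spaced preferred directions, the tuning parameters and the Gaussian noise model are all assumptions chosen for the example. Note that, exactly as the paragraph says, the variability enters only as a nuisance term that the sum over neurons averages away.

```python
import math
import random


def population_vector(rates, preferred, baseline):
    """Decode a direction (radians in [0, 2*pi)) as the sum of each neuron's
    preferred-direction unit vector weighted by its rate above baseline."""
    x = sum((r - baseline) * math.cos(p) for r, p in zip(rates, preferred))
    y = sum((r - baseline) * math.sin(p) for r, p in zip(rates, preferred))
    return math.atan2(y, x) % (2 * math.pi)


rng = random.Random(1)
n = 60
preferred = [2 * math.pi * k / n for k in range(n)]   # evenly spaced (assumption)
baseline, depth = 10.0, 8.0                           # hypothetical cosine tuning, Hz
true_dir = math.radians(135)
# Cosine-tuned mean rates plus Gaussian trial-to-trial variability (assumed noise model).
rates = [baseline + depth * math.cos(true_dir - p) + rng.gauss(0, 4.0) for p in preferred]
print(round(math.degrees(population_vector(rates, preferred, baseline)), 1))
```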

The second major type of proposed neural coding model is the temporal code, which uses the timing of spike discharges to signal stimulus identity (for classic reviews, see Perkel and Bullock, 1968; Theunissen and Miller, 1995; Singer, 1999). There are at least three models in the literature that offer different ideas about how to use timing information (Figures 1F–H). The first classic temporal-code model is the “time-to-first-spike” model (Figure 1F). In sensory neurons, several laboratories reported that most of the information about a stimulus is conveyed during the first 20–50 ms after the onset of the cell response (Optican and Richmond, 1987; Tovee et al., 1993; Kjaer et al., 1994; Tovee and Rolls, 1995). This time-to-first-spike model requires experimental observers to set the time reference points (i.e., the time zero of stimulation) for data analysis. Because spike discharge is a continuous process, a neuron sitting inside the brain would have no way of knowing which spike is the first spike, and it is unclear who would do the counting of these special spikes.
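The dependence on an externally chosen reference can be made explicit. In the Python sketch below (the spike times, onsets and the 50-ms cutoff are hypothetical), the same spike train yields a different "first spike" and latency depending on where the experimenter places time zero; nothing in the train itself marks the onset.

```python
def first_spike_latency(spike_times, stimulus_onset, max_latency=0.05):
    """Latency (s) of the first spike at or after `stimulus_onset`.

    Returns None if no spike falls within `max_latency` of the onset. The onset
    itself has to be supplied by the experimenter; the spike train alone does
    not mark which spike is "first".
    """
    for t in sorted(spike_times):
        if t >= stimulus_onset:
            latency = t - stimulus_onset
            return latency if latency <= max_latency else None
    return None


train = [0.412, 0.957, 1.018, 1.031, 1.250]   # hypothetical spike times (s)
# The same train yields different "first spikes" for different chosen references.
print(round(first_spike_latency(train, stimulus_onset=1.00), 3))   # 0.018
print(round(first_spike_latency(train, stimulus_onset=0.95), 3))   # 0.007
```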

The second temporal coding model is the “spike-phase code,” which uses a periodic signal, such as a specific phase of local field potential (LFP) oscillations, as the reference signal (Figure 1G). For example, the reliability of decoding odor identity or an animal's spatial location can be increased by combining changes in spike rates with a selectively filtered LFP oscillation phase (O'keefe and Recce, 1993; Hopfield, 1995; Jensen and Lisman, 1996; Wehr and Laurent, 1996). In practice, the spike phase-coupling code often involves averaging stimulus-triggered spikes over trials, as in the rate-code model, and then aligning them with the phases of LFP oscillations. Thus, this temporal coding model still treats spike variability as noise that degrades decoding accuracy. Behaviorally, oscillations seem to reflect ongoing motor activity such as respiration (used for analyzing odor cell-phase coupling) or running (used for analyzing CA1 place cell-phase coupling). In addition, membrane oscillations are well known to be highly variable over the spatial domain (e.g., dendrites vs. soma) as well as the temporal domain. To reduce the variability that corrupts the tuning properties of cells, several artificial manipulations are often employed. For instance, place-cell analyses are usually limited to the locomotion state, during which animals must reach a certain running speed; any spike data occurring during a momentary pause, grooming, eating, or below the defined running speed are artificially excluded, a procedure the brain is unlikely to perform. Another unresolved issue is that LFPs are intermixed voltage signals that do not separate themselves neatly into different frequency bands. As such, it is unclear how neurons would filter out specific oscillations (e.g., theta or gamma frequencies) and then perform spike phase-coupling analysis as experimenters do with MATLAB software.
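To show what a phase-coupling analysis computes once a reference oscillation is given, the Python sketch below assigns each spike a phase relative to an idealized sinusoid of known frequency and summarizes phase locking with the vector strength (mean resultant length). Both the clean sinusoidal reference and the spike trains are assumptions; with real LFPs the band-pass filtering and phase-estimation step, which the paragraph questions, would come first and is deliberately omitted. The phase-locked train gives a vector strength of essentially 1, and the train whose spacing is an irrational fraction of the cycle gives a value near 0.

```python
import cmath
import math


def spike_phases(spike_times, freq_hz, phase0=0.0):
    """Phase (radians in [0, 2*pi)) of each spike relative to an idealized
    sinusoidal reference of known frequency. A real LFP would first have to be
    band-pass filtered and its phase estimated (e.g., via a Hilbert transform);
    that step, and its ambiguities, are deliberately left out here."""
    return [(2 * math.pi * freq_hz * t + phase0) % (2 * math.pi) for t in spike_times]


def vector_strength(phases):
    """Mean resultant length of the phases: 1 = perfect locking, near 0 = none."""
    return abs(sum(cmath.exp(1j * p) for p in phases)) / len(phases)


theta_hz = 8.0                                   # hypothetical theta rhythm
golden = (math.sqrt(5) - 1) / 2                  # irrational fraction of a cycle
locked = [k / theta_hz + 0.03 for k in range(40)]              # same phase every cycle
unlocked = [k * (1 + golden) / theta_hz for k in range(40)]    # phase drifts evenly
print(round(vector_strength(spike_phases(locked, theta_hz)), 3))
print(round(vector_strength(spike_phases(unlocked, theta_hz)), 3))
```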

The third type of temporal coding model is the spike-synchrony code (Figure 1H). It refers to the hypothesis that neurons encode information about stimulus identity not only by modulating the firing rates of individual neurons but also by synchronizing spikes across different neurons. Studies of the primary auditory cortex, retina and primary visual cortex found firing responses to auditory or visual stimuli that were precise to the millisecond from trial to trial (for a comprehensive review, see Singer, 1999). Highly synchronous oscillatory discharges of retinal responses, with oscillation frequencies of up to 100 Hz, are also transmitted reliably from the retina to the primary visual cortex (Neuenschwander and Singer, 1996; Castelo-Branco et al., 1998; Herculano-Houzel et al., 1999; Neuenschwander et al., 1999). In addition, spike synchrony has been proposed to support binding or grouping, giving coding its dynamics and flexibility. Still, analyzing these binding operations requires measuring temporal relations among distributed cell responses, which calls for more sophisticated statistical procedures than pairwise correlations. While correlation analysis can be useful for measuring the degree of spike synchrony, fluctuating spike-discharge patterns can easily overwhelm and corrupt a synchrony-based code (Softky and Koch, 1993; Stevens and Zador, 1998; Shadlen and Movshon, 1999).
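A minimal way to quantify pairwise synchrony is to count near-coincident spikes within a small window. The Python sketch below uses a ±5 ms window and hypothetical spike times to show how fragile such a measure is: shifting the partner train by 15–25 ms, a modest amount of timing variability, drops the coincidence count from 5 to 0. Published synchrony analyses typically also compare against surrogate (e.g., jittered) data to estimate chance coincidences; that step is omitted here.

```python
import bisect


def coincidences(train_a, train_b, window=0.005):
    """Count spikes in train_a with at least one train_b spike within +/- window (s)."""
    b = sorted(train_b)
    count = 0
    for t in train_a:
        i = bisect.bisect_left(b, t - window)   # first b spike >= t - window
        if i < len(b) and b[i] <= t + window:
            count += 1
    return count


a = [0.100, 0.250, 0.400, 0.550, 0.700]          # hypothetical spike times (s)
b_sync = [0.101, 0.249, 0.402, 0.551, 0.699]     # within 1-2 ms of train a
b_jitter = [0.120, 0.230, 0.425, 0.530, 0.715]   # same spikes shifted by 15-25 ms
print(coincidences(a, b_sync), coincidences(a, b_jitter))   # 5 0
```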

Self-Information Code: Variability is the Self-Information Expressor

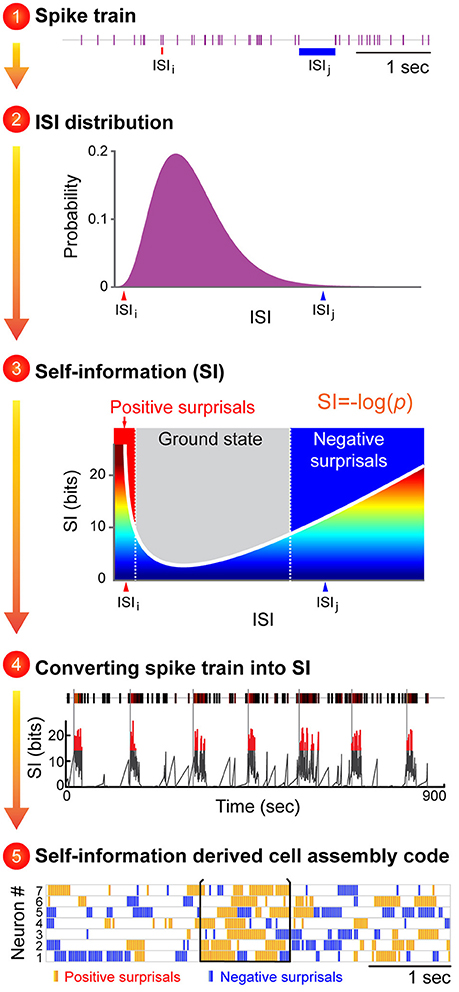

Here we present the Neural Self-Information Theory that neural coding is a self-information process based on inter-spike-interval (neuronal silence duration) variability and its variability history. Contrary to the prevalent view that spike variability reflects noise or is merely correlated with some unknown modulatory variables, we postulate that neuronal variability is what carries information by itself. Specifically, information is encoded by utilizing real-time inter-spike-interval (ISI) variability under the probability-based statistical self-information principles. According to this neural self-information theory, neuronal variability operates as the self-information generator and expressor; higher-probability ISIs, which reflect the balanced excitation-inhibition ground-state, convey less information, whereas lower-probability ISIs (the rare-occurrence of silence durations, such as unusually brief or prolonged ISIs), which signify statistical surprisals, convey more information (Figure 2A).

Figure 2. An illustration to describe how the proposed Neural Self-Information Theory can be used to decode cell-assembly patterns from neuronal spike trains. The Self-Information Code is proposed to explain how real-time neural code is generated via spike-timing variability, and how cell-assembly patterns can be identified in an unbiased manner. A general strategy to apply the neural self-information theory to uncover cell assemblies from spike-train datasets. Cell-assembly code is identified in four steps based on the conversion of an individual neuron's spike train into variability distribution of ISIs, followed by its conversion to a real-time self-information value. The temporally coordinated self-information surprisal patterns across cell population can be detected in an unbiased manner by pattern classification methods such as blind-source analyses. The unique feature of this self-information code is that this neural coding principle is completely intrinsic to the neurons themselves, with no need for any reference point to be set by outside observers.

In other words, under this Self-Information Code, any given ISI is self-tagged with a discrete amount of information based on its silence duration relative to the history of the silence-duration probability distribution (Figure 2). This probability distribution of ISI fluctuations, or neuronal silence durations, is intrinsically sensed by and coupled with ongoing biochemical reactions and energy equilibrium. For example, a prolonged neuronal silence requires less ATP consumption, whereas the production of multiple extremely short ISIs, which reflects strong spike bursting, requires more ATP. Thus, this silence-based self-information process is naturally unified with intracellular biochemical reaction cascades, including receptor turnover, protein endocytosis, new protein synthesis and gene expression.

At the physiological level, these self-information ISI surprisals can be either positive surprisals (when a neuron's ISI becomes much shorter than is typical, reflecting strong excitation) or negative surprisals (when the ISI becomes much longer than is typical, reflecting strong inhibition; Figure 2, see Step 3). In practice, the ISI probability distribution can be estimated easily. In contrast to the prevalent notion that spike trains follow a Poisson process (which would imply exponentially distributed ISIs), emerging studies have suggested that ISI variability in many neural circuits conforms to the gamma distribution (Li et al., 2017). As such, instead of measuring the mean information contained in a spike train (namely, the information entropy), the information content ($I$) of each individual ISI can be calculated with the simple self-information equation $I = -\log P$, where $P$ is the probability of that ISI (the time period between two successive spikes). In statistical terms, such low-probability events are called surprisals. These dynamic, transient surprisal ISI patterns would then act as the critical real-time information packets at the single-neuron level. When these surprisal ISIs are emitted across a cell population in a temporally coordinated manner, they can seamlessly give rise to a robust real-time cell-assembly code.
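A Python sketch of this per-ISI computation follows, under assumptions that the text leaves open: the ISIs follow a gamma distribution with already-known shape and scale, and "the probability of each ISI" is taken to be the two-sided tail probability $P = 2\min(F, 1 - F)$, where $F$ is the gamma CDF, so that the most typical (median) ISI carries about 0 bits. This choice of $P$, the log base (bits) and the parameter values are illustrative, not the authors' specification. The gamma CDF is computed from the standard power series of the regularized incomplete gamma function so the block needs only the standard library.

```python
import math


def gamma_cdf(x, shape, scale):
    """Gamma CDF, i.e. the regularized lower incomplete gamma P(shape, x / scale),
    from its power series. Adequate for the moderate shapes and ISI ranges of
    typical spike trains; scipy.stats.gamma.cdf would be the usual library choice."""
    if x <= 0:
        return 0.0
    z = x / scale
    # First series term z^a e^(-z) / Gamma(a + 1), computed in log space.
    term = math.exp(shape * math.log(z) - z - math.lgamma(shape + 1))
    total, n = term, 1
    while term > 1e-16 * total and n < 100000:
        term *= z / (shape + n)
        total += term
        n += 1
    return min(total, 1.0)


def isi_self_information(isi, shape, scale):
    """Return (bits, sign) for one ISI under a fitted gamma model.

    Assumption: P is the two-sided tail probability 2 * min(F, 1 - F), with F the
    gamma CDF, so the median ISI carries ~0 bits. sign = +1 for unusually short
    ISIs (left tail, positive surprisal), -1 for unusually long ISIs (right tail).
    """
    u = gamma_cdf(isi, shape, scale)
    p = max(2.0 * min(u, 1.0 - u), 1e-300)   # guard against log(0)
    return -math.log2(p), (+1 if u < 0.5 else -1)


shape, scale = 2.0, 0.05      # hypothetical fit: mean ISI = shape * scale = 100 ms
for isi in (0.004, 0.08, 0.45):
    bits, sign = isi_self_information(isi, shape, scale)
    print(f"ISI = {isi * 1000:.0f} ms: {bits:.2f} bits, sign {sign:+d}")
```

With these hypothetical parameters, the 80-ms ISI sits near the middle of the distribution and carries well under 1 bit, while the 4-ms and 450-ms ISIs fall in the left and right tails and carry several bits each, with opposite signs.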

The variability-surprisal-based neural self-information theory makes several testable predictions: if neuronal variability acts as a self-information generator, this variability should remain similar across various brain regions. On the other hand, if neuronal variability reflects system noise, one would expect that variability would grow larger as information is transmitted from low subcortical structures to the high-cognition cortices.

To differentiate these two scenarios, one can record large numbers of neurons from various cortical and sub-cortical regions in freely behaving animals. To facilitate systematic comparisons, one can initially focus on putatively classified principal cells after these units have been separated from fast-spiking putative interneurons and their variability distributions analyzed across these different regions. In addition, to examine the potential state-dependent influence on neuronal variability, one can assess the spike datasets collected from the quiet-awake state as animals rested in their home-cage environments, and compare them with those patterns obtained during various cognitive tasks.

One can characterize neuronal variability quantitatively using three well-defined statistics of a neuron's ISI distribution: the coefficient of variation (CV), skewness and kurtosis. In probability theory and statistics, the CV is a standardized measure of the dispersion of a probability distribution, skewness is a measure of its asymmetry, and kurtosis is a measure of its “tailedness.” We would predict that principal cells in various brain regions exhibit similar neuronal-variability distributions. To further test the idea that neuronal variability serves as a self-information carrier, we also predict that variability would diminish when both external and internal neural computations are artificially shut down (e.g., under anesthesia). Pharmacological intervention experiments could be used to test whether shutting down external and internal coding processes indeed greatly reduces neuronal variability. If so, the result would be consistent with the notion that spike variability reflects the ongoing cognitive processing of both external and internal information.
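The three statistics can be computed directly from a neuron's ISIs. The Python sketch below uses population (biased) moment estimators and reports kurtosis as excess kurtosis (0 for a Gaussian); both conventions are assumptions, since the text does not specify them. As a sanity check, a gamma distribution with shape $k$ has CV $= 1/\sqrt{k}$, skewness $= 2/\sqrt{k}$ and excess kurtosis $= 6/k$, so ISIs simulated with `random.gammavariate` should land near those values.

```python
import math
import random


def isi_shape_stats(isis):
    """CV, skewness and excess kurtosis of an ISI sample (population moments)."""
    n = len(isis)
    mean = sum(isis) / n
    m2 = sum((x - mean) ** 2 for x in isis) / n
    m3 = sum((x - mean) ** 3 for x in isis) / n
    m4 = sum((x - mean) ** 4 for x in isis) / n
    sd = math.sqrt(m2)
    return {"cv": sd / mean,
            "skewness": m3 / sd ** 3,
            "excess_kurtosis": m4 / m2 ** 2 - 3.0}


# Sanity check on simulated gamma ISIs with shape k = 4 (hypothetical values):
# theory gives CV = 0.5, skewness = 1.0, excess kurtosis = 1.5.
rng = random.Random(42)
sample = [rng.gammavariate(4.0, 0.025) for _ in range(100000)]
print({name: round(value, 2) for name, value in isi_shape_stats(sample).items()})
```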

A General Decoding Strategy to Uncover Various Cell Assemblies

One immediate application of this “self-information code” concept is that it should enable researchers to identify a variety of cell assemblies from large-scale recording datasets. Overall, this variability-surprisal-based, cell-assembly decoding (VCAD) strategy can consist of the following four major steps (Figure 2):

The first step is to convert each neuron's spike train into the probability distribution of its ISI variability. Since ISI patterns in various neural circuits seem to conform to the gamma distribution model (Li et al., 2017), this can be achieved by fitting each neuron's ISIs with a gamma distribution model, which assigns a probability to each ISI.
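Step 1 can be sketched as follows. For simplicity, this Python version fits the gamma model by the method of moments (shape $= \bar{x}^2/s^2$, scale $= s^2/\bar{x}$); that is an assumption made to stay within the standard library, and maximum-likelihood fitting (for example, `scipy.stats.gamma.fit`) is the more common choice. The simulated neuron and its parameters are hypothetical and serve only to check that the fit recovers known values.

```python
import random


def spike_times_to_isis(spike_times):
    """Inter-spike intervals of a spike train (sorted first, in case it is not)."""
    ts = sorted(spike_times)
    return [b - a for a, b in zip(ts, ts[1:])]


def fit_gamma_moments(isis):
    """Method-of-moments gamma fit: shape = mean^2 / var, scale = var / mean."""
    n = len(isis)
    mean = sum(isis) / n
    var = sum((x - mean) ** 2 for x in isis) / (n - 1)
    return mean ** 2 / var, var / mean


rng = random.Random(7)
# Hypothetical neuron whose ISIs are gamma(shape = 2.5, scale = 40 ms).
spikes, t = [], 0.0
for _ in range(20000):
    t += rng.gammavariate(2.5, 0.04)
    spikes.append(t)
shape, scale = fit_gamma_moments(spike_times_to_isis(spikes))
print(round(shape, 2), round(scale * 1000, 1))   # close to 2.5 and 40 ms
```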

The second step is to convert the probability distribution of ISI variability into a real-time self-information value for each ISI. Frequent ISIs with high probability represent the low-self-information ground state (Figure 2, subtitle #3, the gray zone in the mid-section of the Self-information plot). As a neuron increases its firing, it generates positive surprisals (the red curve inside the Self-information plot) as its ISIs enter the left tail of the probability distribution (a low-probability state). Conversely, if the neuron's firing is dramatically suppressed, negative surprisals are generated (the blue curve inside the Self-information plot) as its ISIs shift into the right tail (also a low-probability state).

In the third step, the spike train emitted by each neuron is transformed into a surprisal-based self-information code using the dynamic evolution of its ISI patterns (silence-time patterns). The time window for practically estimating the instantaneous ISI distribution of each neuron may depend on its average firing rate, with low-firing neurons requiring longer windows (e.g., 15 min) and high-firing neurons requiring shorter ones. Biologically speaking, the time durations for defining the probability distribution may be best determined by measuring molecular and synaptic turnover rates or other key biochemical processes that reflect the intracellular memory time scale of neuronal equilibrium.
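Step 3 can be sketched with a sliding history window. To keep the block short, this Python version estimates each ISI's probability from the empirical distribution of the neuron's own ISIs that ended during the preceding `history_s` seconds, rather than refitting a gamma model in every window; the two-sided tail probability, the +1 smoothing, the `min_history` cutoff, the binning function and the simulated train are all illustrative assumptions, not part of the published method. The output of `bin_series` for each neuron would form one row of the cells × time-bins matrix used in step 4.

```python
import bisect
import itertools
import math
import random


def signed_surprisal_series(spike_times, history_s, min_history=20):
    """Signed self-information (bits) of each ISI relative to the neuron's own
    recent past. Each ISI is compared with the ISIs that ended during the
    preceding `history_s` seconds (an empirical stand-in for a sliding gamma fit).
    Returns (ISI end time, value) pairs: positive = unusually short ISI,
    negative = unusually long ISI, ~0 = ground state."""
    ts = sorted(spike_times)
    ends = ts[1:]
    isis = [b - a for a, b in zip(ts, ends)]
    out = []
    for k, (t_end, isi) in enumerate(zip(ends, isis)):
        start = bisect.bisect_left(ends, t_end - history_s)
        history = isis[start:k]              # past ISIs only; current one excluded
        if len(history) < min_history:
            continue                         # not enough history yet
        n = len(history)
        below = sum(h < isi for h in history)
        above = sum(h > isi for h in history)
        # Two-sided empirical tail probability, +1 smoothed so it is never zero.
        p = min(1.0, 2 * (min(below, above) + 1) / (n + 1))
        sign = 1 if below < above else -1
        out.append((t_end, sign * -math.log2(p)))
    return out


def bin_series(series, t_start, t_stop, bin_s):
    """Sum signed surprisals into fixed time bins: one row of a cells x bins matrix."""
    n_bins = math.ceil((t_stop - t_start) / bin_s)
    row = [0.0] * n_bins
    for t, value in series:
        idx = int((t - t_start) // bin_s)
        if 0 <= idx < n_bins:
            row[idx] += value
    return row


rng = random.Random(5)
# Hypothetical train: gamma ISIs around 100 ms, a 2-ms burst ISI, then a 1-s pause.
isis_in = ([rng.gammavariate(8.0, 0.0125) for _ in range(300)] + [0.002]
           + [rng.gammavariate(8.0, 0.0125) for _ in range(30)] + [1.0]
           + [rng.gammavariate(8.0, 0.0125) for _ in range(5)])
spikes = list(itertools.accumulate([0.0] + isis_in))
values = dict(signed_surprisal_series(spikes, history_s=10.0))
print(round(values[spikes[301]], 2), round(values[spikes[332]], 2))  # burst ISI, pause
row = bin_series(values.items(), 0.0, spikes[-1], bin_s=1.0)
```

Here `history_s` plays the role of the firing-rate-dependent window described above (900 s would correspond to a 15-min window). Because this empirical version is rank-based, its value saturates at about log2((n + 1) / 2) bits for the rarest ISI in each window, so it flags tail events but cannot grade them further; a parametric (gamma) estimate avoids that ceiling.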

The fourth step is to uncover joint surprisal-spike patterns across simultaneously recorded cells on a moment-to-moment basis. Blind source separation (BSS) methods, such as independent component analysis (ICA), can recover a set of independent information sources (structured patterns or relationships) from simultaneously observed, mixed signals. Each independent signal source decoded by BSS would correspond to a distinct real-time activation pattern of a cell assembly.

To discover the functional meaning of each assembly, one can compare its real-time activation pattern with various other experimental parameters [such as the dynamic evolution of local field potential (LFP), the time points of stimulus presentations, videotapes of an animal's behavioral state, actions, and corresponding locations, etc.].

Moreover, the top-ranking members, those with the highest contribution weights in each cell assembly, can be directly identified from the demixing matrix W. This allows researchers to obtain quantitative membership information that other dimensionality-reduction-based pattern-classification methods (e.g., principal component analysis or multiple discriminant analysis) cannot provide. By further mapping cell-assembly activity patterns onto specific cell types and network states (Klausberger and Somogyi, 2008; Colgin, 2016; Van De Ven et al., 2016), we expect that researchers can gain greater insight into how the neural code is generated within and across the evolutionarily conserved computational motifs (Grillner, 2006; Brenner and Sejnowski, 2011; Marcus et al., 2014; Tsien, 2015a,b; Kiehn, 2016; Xie et al., 2016).
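Reading membership off one row of W can be sketched as ranking neurons by the magnitude of their weights and keeping those that stand out. In this Python sketch, the cutoff (mean plus `n_sd` standard deviations of the absolute weights) and the example weight row are hypothetical choices for illustration; magnitudes are used because ICA leaves the sign and scale of each component arbitrary.

```python
import statistics


def assembly_members(weights, n_sd=1.0):
    """Indices of neurons whose |weight| in one demixing-matrix row exceeds
    mean + n_sd * SD of all |weights|, strongest first. Magnitudes are used
    because ICA leaves the sign (and scale) of each component arbitrary."""
    mags = [abs(w) for w in weights]
    cutoff = statistics.mean(mags) + n_sd * statistics.pstdev(mags)
    ranked = sorted(range(len(mags)), key=lambda i: mags[i], reverse=True)
    return [i for i in ranked if mags[i] > cutoff]


w_row = [0.02, -0.05, 0.91, 0.03, 0.04, -0.01, 0.78, 0.02, -0.03, 0.05]  # hypothetical
print(assembly_members(w_row))   # [2, 6]
```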

In summary, we present a new hypothesis on how to crack the real-time neural code. Specifically, we put forth the Neural Self-Information Theory, which holds that neuronal variability operates as a self-information generator and expressor, conveying a variable amount of information in the form of silence-variability surprisals. Coordination of these surprisal ISIs in space (across cells) and time can seamlessly give rise to robust real-time cell-assembly codes. It should be noted that although ground-state ISIs, the most probable ISIs, carry little self-information (and yet may consume considerable energy during the non-coding state), they can be extremely important: they enable rapid responses to change and, once combined with positive and negative surprisals, form a ternary coding structure that provides enormous information capacity and flexibility at the cell-assembly level. The generality of this silence-based self-information code can be demonstrated by identifying real-time cell assemblies that process internal states, external experiences (including continuous variables), and categorical variables. Most importantly, this self-information code is completely intrinsic to the neurons themselves, with no need for outside observers to set any reference point, such as the time zero of stimulation or the phase of a filtered local field potential oscillation. Because the self-information code operates through the ISI variability-based probability distribution, downstream neurons can naturally sense these surprisal shifts in ISI variability as sudden deviations from the equilibrium (a form of intracellular memory or variability distribution) of the post-synaptic neurons' biochemical states (e.g., energy production, receptor activation, ion-channel open/close state distributions, protein phosphorylation/dephosphorylation rates, and receptor insertion/removal).
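The self-information and capacity claims above can be made concrete with standard information-theoretic arithmetic. The counting below is an idealized upper bound that assumes every cell can independently occupy any of its states in each time bin; it compares a two-state code (surprisal present or absent) with the signed ternary code.

```latex
% Self-information (surprisal) of an ISI with probability P
I(\mathrm{ISI}) = -\log_2 P(\mathrm{ISI}), \qquad
P = \tfrac{1}{2} \;\mapsto\; 1~\text{bit}, \qquad
P = \tfrac{1}{64} \;\mapsto\; 6~\text{bits}

% Distinct joint patterns of N cells in one time bin
\text{two states } \{0, 1\}: \; 2^{N} \text{ patterns} \;\Rightarrow\; N \text{ bits}
\text{three states } \{-1, 0, +1\}: \; 3^{N} \text{ patterns} \;\Rightarrow\; N \log_2 3 \approx 1.585\,N \text{ bits}

% Example with N = 10 cells
2^{10} = 1024, \qquad 3^{10} = 59049
```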

In light of these theoretical and practical implications, the proposed Neural Self-Information Theory can be tested through large-scale in vivo recording experiments. Moreover, this silence-duration variability-based surprisal coding concept could also be exploited to design novel neuromorphic chips for brain-inspired computing with robust resilience to interference. As Wolf Singer once noted, “We nonetheless shall have made a great step forward, because it is the unexpected result that contains maximal information.”

Ethics Statement

All animal work described in the study was carried out in accordance with the guidelines laid down by the National Institutes of Health in the United States, regarding the care and use of animals for experimental procedures, and was approved by the Institutional Animal Care and Use Committee of Augusta University (Approval AUP number: BR07-11-001).

Author Contributions

ML and JT conceived the idea. JT wrote the paper with ML.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The handling Editor currently co-hosts a Research Topic with one of the authors, JT, and confirms the absence of any other collaboration.

Acknowledgments

This work is supported by an NIH grant (R01NS079774), a GRA equipment grant to JT, the Brain Decoding Center grant from the Yunnan Science Commission (2014DG002) to ML and JT, an NSF grant (1564736) to JT, grants (2012BA004, 2014DG002) from the Yunnan Province Department of Science and Technology, and the International S&T Cooperation Program of China (2015DFA11470). We would like to express our gratitude for the funding support from the above agencies.

References

Abbott, L., and Sejnowski, T. J. (1999). Neural Codes and Distributed Representations: Foundations of Neural Computation. Cambridge, MA: MIT Press.

Adrian, E. D., and Zotterman, Y. (1926). The impulses produced by sensory nerve endings: part 3. Impulses set up by Touch and Pressure. J. Physiol. 61, 465–483. doi: 10.1113/jphysiol.1926.sp002308

Andersen, P. (1990). Synaptic integration in hippocampal CA1 pyramids. Prog. Brain Res. 83, 215–222. doi: 10.1016/S0079-6123(08)61251-0

Basu, J., Zaremba, J. D., Cheung, S. K., Hitti, F. L., Zemelman, B. V., Losonczy, A., et al. (2016). Gating of hippocampal activity, plasticity, and memory by entorhinal cortex long-range inhibition. Science 351:aaa5694. doi: 10.1126/science.aaa5694

Boerlin, M., and Deneve, S. (2011). Spike-based population coding and working memory. PLoS Comput. Biol. 7:e1001080. doi: 10.1371/journal.pcbi.1001080

Brenner, R., Chen, Q. H., Vilaythong, A., Toney, G. M., Noebels, J. L., and Aldrich, R. W. (2005). BK channel beta4 subunit reduces dentate gyrus excitability and protects against temporal lobe seizures. Nat. Neurosci. 8, 1752–1759. doi: 10.1038/nn1573

Brenner, S., and Sejnowski, T. J. (2011). Understanding the human brain. Science 334:567. doi: 10.1126/science.1215674

Brown, E. N., Kass, R. E., and Mitra, P. P. (2004). Multiple neural spike train data analysis: state-of-the-art and future challenges. Nat. Neurosci. 7, 456–461. doi: 10.1038/nn1228

Castelo-Branco, M., Neuenschwander, S., and Singer, W. (1998). Synchronization of visual responses between the cortex, lateral geniculate nucleus, and retina in the anesthetized cat. J. Neurosci. 18, 6395–6410.

Chang, L., and Tsao, D. Y. (2017). The code for facial identity in the primate brain. Cell 169, 1013–1028.e14. doi: 10.1016/j.cell.2017.05.011

Chen, G., Wang, L. P., and Tsien, J. Z. (2009). Neural population-level memory traces in the mouse hippocampus. PLoS ONE 4:e8256. doi: 10.1371/journal.pone.0008256

Churchland, M. M., Yu, B. M., Cunningham, J. P., Sugrue, L. P., Cohen, M. R., Corrado, G. S., et al. (2010). Stimulus onset quenches neural variability: a widespread cortical phenomenon. Nat. Neurosci. 13, 369–378. doi: 10.1038/nn.2501

Colgin, L. L. (2016). Rhythms of the hippocampal network. Nat. Rev. Neurosci. 17, 239–249. doi: 10.1038/nrn.2016.21

DeFelipe, J. (2015). The anatomical problem posed by brain complexity and size: a potential solution. Front. Neuroanat. 9:104. doi: 10.3389/fnana.2015.00104

Eggermont, J. J. (1998). Is there a neural code? Neurosci. Biobehav. Rev. 22, 355–370. doi: 10.1016/S0149-7634(97)00021-3

Ermentrout, G. B., Galan, R. F., and Urban, N. N. (2008). Reliability, synchrony and noise. Trends Neurosci. 31, 428–434. doi: 10.1016/j.tins.2008.06.002

Faisal, A. A., Selen, L. P., and Wolpert, D. M. (2008). Noise in the nervous system. Nat. Rev. Neurosci. 9, 292–303. doi: 10.1038/nrn2258

Fenton, A. A., and Muller, R. U. (1998). Place cell discharge is extremely variable during individual passes of the rat through the firing field. Proc. Natl. Acad. Sci. U.S.A. 95, 3182–3187. doi: 10.1073/pnas.95.6.3182

Georgopoulos, A. P., Schwartz, A. B., and Kettner, R. E. (1986). Neuronal population coding of movement direction. Science 233, 1416–1419. doi: 10.1126/science.3749885

Grillner, S. (2006). Biological pattern generation: the cellular and computational logic of networks in motion. Neuron 52, 751–766. doi: 10.1016/j.neuron.2006.11.008

Grillner, S., Robertson, B., and Stephenson-Jones, M. (2013). The evolutionary origin of the vertebrate basal ganglia and its role in action selection. J. Physiol. 591, 5425–5431. doi: 10.1113/jphysiol.2012.246660

Gross, C. G., Lettvin, J., and Konorski, J. (2002). Genealogy of the “grandmother cell.” Neuroscientist 8, 512–518. doi: 10.1177/107385802237175

Gu, N., Vervaeke, K., and Storm, J. F. (2007). BK potassium channels facilitate high-frequency firing and cause early spike frequency adaptation in rat CA1 hippocampal pyramidal cells. J. Physiol. 580, 859–882. doi: 10.1113/jphysiol.2006.126367

Guillery, R. W. (2005). Observations of synaptic structures: origins of the neuron doctrine and its current status. Philos. Trans. R. Soc. Lond. Ser. B. Biol. Sci. 360, 1281–1307. doi: 10.1098/rstb.2003.1459

Hartmann, C., Lazar, A., Nessler, B., and Triesch, J. (2015). Where's the noise? Key features of spontaneous activity and neural variability arise through learning in a deterministic network. PLoS Comput. Biol. 11:e1004640. doi: 10.1371/journal.pcbi.1004640

Hebb, D. O. (1949). The Organization of Behavior: A Neuropsychological Approach. New York, NY: John Wiley & Sons.

Herculano-Houzel, S. (2009). The human brain in numbers: a linearly scaled-up primate brain. Front. Hum. Neurosci. 3:31. doi: 10.3389/neuro.09.031.2009

Herculano-Houzel, S., Munk, M. H., Neuenschwander, S., and Singer, W. (1999). Precisely synchronized oscillatory firing patterns require electroencephalographic activation. J. Neurosci. 19, 3992–4010.

Hopfield, J. J. (1995). Pattern recognition computation using action potential timing for stimulus representation. Nature 376, 33–36. doi: 10.1038/376033a0

Jensen, O., and Lisman, J. E. (1996). Hippocampal CA3 region predicts memory sequences: accounting for the phase precession of place cells. Learn. Mem. 3, 279–287. doi: 10.1101/lm.3.2-3.279

Kiehn, O. (2016). Decoding the organization of spinal circuits that control locomotion. Nat. Rev. Neurosci. 17, 224–238. doi: 10.1038/nrn.2016.9

Kjaer, T. W., Hertz, J. A., and Richmond, B. J. (1994). Decoding cortical neuronal signals: network models, information estimation and spatial tuning. J. Comput. Neurosci. 1, 109–139. doi: 10.1007/BF00962721

Klausberger, T., and Somogyi, P. (2008). Neuronal diversity and temporal dynamics: the unity of hippocampal circuit operations. Science 321, 53–57. doi: 10.1126/science.1149381

Kohn, A., Coen-Cagli, R., Kanitscheider, I., and Pouget, A. (2016). Correlations and neuronal population information. Annu. Rev. Neurosci. 39, 237–256. doi: 10.1146/annurev-neuro-070815-013851

Lai, C. S. W., Franke, T. F., and Gan, W.-B. (2012). Opposite effects of fear conditioning and extinction on dendritic spine remodelling. Nature 483, 87–91. doi: 10.1038/nature10792

Lee, D., Port, N. L., Kruse, W., and Georgopoulos, A. P. (1998). Variability and correlated noise in the discharge of neurons in motor and parietal areas of the primate cortex. J. Neurosci. 18, 1161–1170.

Li, M., Liu, J., and Tsien, J. Z. (2016). Theory of connectivity: nature and nurture of cell assemblies and cognitive computation. Front. Neural Circuits 10:34. doi: 10.3389/fncir.2016.00034

Li, M., Xie, K., Kuang, H., Liu, J., Wang, D., Fox, G. E., et al. (2017). Spike-timing patterns conform to gamma distribution with regional and cell type-specific characteristics. bioRxiv 145813. doi: 10.1101/145813

Lin, I. C., Okun, M., Carandini, M., and Harris, K. D. (2015). The nature of shared cortical variability. Neuron 87, 644–656. doi: 10.1016/j.neuron.2015.06.035

Lin, L., Chen, G., Kuang, H., Wang, D., and Tsien, J. Z. (2007). Neural encoding of the concept of nest in the mouse brain. Proc. Natl. Acad. Sci. U.S.A. 104, 6066–6071. doi: 10.1073/pnas.0701106104

Lin, L., Chen, G., Xie, K., Zaia, K. A., Zhang, S., and Tsien, J. Z. (2006). Large-scale neural ensemble recording in the brains of freely behaving mice. J. Neurosci. Methods 155, 28–38. doi: 10.1016/j.jneumeth.2005.12.032

Lin, L., Osan, R., Shoham, S., Jin, W., Zuo, W., and Tsien, J. Z. (2005). Identification of network-level coding units for real-time representation of episodic experiences in the hippocampus. Proc. Natl. Acad. Sci. U.S.A. 102, 6125–6130. doi: 10.1073/pnas.0408233102

Luczak, A., McNaughton, B. L., and Harris, K. D. (2015). Packet-based communication in the cortex. Nat. Rev. Neurosci. 16, 745–755. doi: 10.1038/nrn4026

Mainen, Z. F., and Sejnowski, T. J. (1995). Reliability of spike timing in neocortical neurons. Science 268, 1503–1506. doi: 10.1126/science.7770778

Marcos, E., Pani, P., Brunamonti, E., Deco, G., Ferraina, S., and Verschure, P. (2013). Neural variability in premotor cortex is modulated by trial history and predicts behavioral performance. Neuron 78, 249–255. doi: 10.1016/j.neuron.2013.02.006

Marcus, G., Marblestone, A., and Dean, T. (2014). The atoms of neural computation. Science 346, 551–552. doi: 10.1126/science.1261661

Masquelier, T. (2013). Neural variability, or lack thereof. Front. Comput. Neurosci. 7:7. doi: 10.3389/fncom.2013.00007

Megias, M., Emri, Z., Freund, T. F., and Gulyas, A. I. (2001). Total number and distribution of inhibitory and excitatory synapses on hippocampal CA1 pyramidal cells. Neuroscience 102, 527–540. doi: 10.1016/S0306-4522(00)00496-6

Neuenschwander, S., and Singer, W. (1996). Long-range synchronization of oscillatory light responses in the cat retina and lateral geniculate nucleus. Nature 379, 728–732. doi: 10.1038/379728a0

Neuenschwander, S., Castelo-Branco, M., and Singer, W. (1999). Synchronous oscillations in the cat retina. Vision Res. 39, 2485–2497. doi: 10.1016/S0042-6989(99)00042-5

O'Keefe, J., and Recce, M. L. (1993). Phase relationship between hippocampal place units and the EEG theta rhythm. Hippocampus 3, 317–330. doi: 10.1002/hipo.450030307

Optican, L. M., and Richmond, B. J. (1987). Temporal encoding of two-dimensional patterns by single units in primate inferior temporal cortex. III. Information theoretic analysis. J. Neurophysiol. 57, 162–178.

Poggio, G. F., and Viernstein, L. J. (1964). Time series analysis of impulse sequences of thalamic somatic sensory neurons. J. Neurophysiol. 27, 517–545.

Quiroga, R. Q., Reddy, L., Kreiman, G., Koch, C., and Fried, I. (2005). Invariant visual representation by single neurons in the human brain. Nature 435, 1102–1107. doi: 10.1038/nature03687

Rakic, P. (2009). Evolution of the neocortex: a perspective from developmental biology. Nat. Rev. Neurosci. 10, 724–735. doi: 10.1038/nrn2719

Ratliff, F., Hartline, H. K., and Lange, D. (1968). Variability of interspike intervals in optic nerve fibers of Limulus: effect of light and dark adaptation. Proc. Natl. Acad. Sci. U.S.A. 60, 464–469. doi: 10.1073/pnas.60.2.464

Rolls, E., and Deco, G. (2010). The Noisy Brain: Stochastic Dynamics as a Principle of Brain Function. London: Oxford University Press.

Saberi-Moghadam, S., Ferrari-Toniolo, S., Ferraina, S., Caminiti, R., and Battaglia-Mayer, A. (2016). Modulation of neural variability in premotor, motor, and posterior parietal cortex during change of motor intention. J. Neurosci. 36, 4614–4623. doi: 10.1523/JNEUROSCI.3300-15.2016

Shadlen, M. N., and Movshon, J. A. (1999). Synchrony unbound: a critical evaluation of the temporal binding hypothesis. Neuron 24, 67–77, 111–125. doi: 10.1016/S0896-6273(00)80822-3

Shadlen, M. N., and Newsome, W. T. (1994). Noise, neural codes and cortical organization. Curr. Opin. Neurobiol. 4, 569–579. doi: 10.1016/0959-4388(94)90059-0

Singer, W. (1999). Neuronal synchrony: a versatile code for the definition of relations? Neuron 24, 49–65, 111–125. doi: 10.1016/S0896-6273(00)80821-1

Softky, W. R., and Koch, C. (1993). The highly irregular firing of cortical cells is inconsistent with temporal integration of random EPSPs. J. Neurosci. 13, 334–350.

Squire, L. R., and Zola, S. M. (1998). Episodic memory, semantic memory, and amnesia. Hippocampus 8, 205–211.

Stacey, W. C., and Durand, D. M. (2001). Synaptic noise improves detection of subthreshold signals in hippocampal CA1 neurons. J. Neurophysiol. 86, 1104–1112.

Stein, R. B., Gossen, E. R., and Jones, K. E. (2005). Neuronal variability: noise or part of the signal? Nat. Rev. Neurosci. 6, 389–397. doi: 10.1038/nrn1668

Stevens, C. F., and Zador, A. M. (1998). Input synchrony and the irregular firing of cortical neurons. Nat. Neurosci. 1, 210–217. doi: 10.1038/659

Theunissen, F., and Miller, J. P. (1995). Temporal encoding in nervous systems: a rigorous definition. J. Comput. Neurosci. 2, 149–162. doi: 10.1007/BF00961885

Toups, J. V., Fellous, J.-M., Thomas, P. J., Sejnowski, T. J., and Tiesinga, P. H. (2012). Multiple spike time patterns occur at bifurcation points of membrane potential dynamics. PLoS Comput. Biol. 8:e1002615. doi: 10.1371/journal.pcbi.1002615

Tovee, M. J., and Rolls, E. T. (1995). Information encoding in short firing rate epochs by single neurons in the primate temporal visual cortex. Visual Cogn. 2, 35–58. doi: 10.1080/13506289508401721

Tovee, M. J., Rolls, E. T., Treves, A., and Bellis, R. P. (1993). Information encoding and the responses of single neurons in the primate temporal visual cortex. J. Neurophysiol. 70, 640–654.

Tsien, J. Z. (2015a). A postulate on the brain's basic wiring logic. Trends Neurosci. 38, 669–671. doi: 10.1016/j.tins.2015.09.002

Tsien, J. Z. (2015b). Principles of intelligence: on evolutionary logic of the brain. Front. Syst. Neurosci. 9:186. doi: 10.3389/fnsys.2015.00186

Tsien, J. Z. (2016). Cre-Lox Neurogenetics: 20 years of versatile applications in brain research and counting. Front. Genet. 7:19. doi: 10.3389/fgene.2016.00019

Tsien, J. Z., Li, M., Osan, R., Chen, G., Lin, L., Wang, P. L., et al. (2013). On initial brain activity mapping of episodic and semantic memory code in the hippocampus. Neurobiol. Learn. Mem. 105, 200–210. doi: 10.1016/j.nlm.2013.06.019

Tulving, E. (1972). “Episodic and semantic memory,” in Organization of Memory, eds E. Tulving and W. Donaldson (New York, NY: Academic Press), 381–403.

Van De Ven, G. M., Trouche, S., McNamara, C. G., Allen, K., and Dupret, D. (2016). Hippocampal offline reactivation consolidates recently formed cell assembly patterns during sharp wave-ripples. Neuron 92, 968–974. doi: 10.1016/j.neuron.2016.10.020

Wehr, M., and Laurent, G. (1996). Odour encoding by temporal sequences of firing in oscillating neural assemblies. Nature 384, 162–166. doi: 10.1038/384162a0

Werner, G., and Mountcastle, V. B. (1963). The variability of central neural activity in a sensory system, and its implications for the central reflection of sensory events. J. Neurophysiol. 26, 958–977.

Wilson, M. A., and McNaughton, B. L. (1993). Dynamics of the hippocampal ensemble code for space. Science 261, 1055–1058. doi: 10.1126/science.8351520

Xie, K., Fox, G. E., Liu, J., Lyu, C., Lee, J. C., Kuang, H., et al. (2016). Brain computation is organized via power-of-two-based permutation logic. Front. Syst. Neurosci. 10:95. doi: 10.3389/fnsys.2016.00095

Keywords: neural code, self-information, neural computing, neural spike variability, variability-surprisal, surprisal code, cell assembly, code of silence

Citation: Li M and Tsien JZ (2017) Neural Code—Neural Self-information Theory on How Cell-Assembly Code Rises from Spike Time and Neuronal Variability. Front. Cell. Neurosci. 11:236. doi: 10.3389/fncel.2017.00236

Received: 04 April 2017; Accepted: 25 July 2017;

Published: 30 August 2017.

Edited by:

Egidio D'Angelo, University of Pavia, Italy

Reviewed by:

Bailu Si, University of Chinese Academy of Sciences (UCAS), China

Ju Lu, University of California, Santa Cruz, United States

Copyright © 2017 Li and Tsien. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Joe Z. Tsien, jtsien@augusta.edu
