Abstract

Efforts to identify meaningful functional imaging-based biomarkers are limited by the ability to reliably characterize inter-individual differences in human brain function. Although a growing number of connectomics-based measures are reported to have moderate to high test-retest reliability, the variability in data acquisition, experimental designs, and analytic methods precludes the ability to generalize results. The Consortium for Reliability and Reproducibility (CoRR) is working to address this challenge and establish test-retest reliability as a minimum standard for methods development in functional connectomics. Specifically, CoRR has aggregated 1,629 typical individuals’ resting state fMRI (rfMRI) data (5,093 rfMRI scans) from 18 international sites, and is openly sharing them via the International Neuroimaging Data-sharing Initiative (INDI). To allow researchers to generate various estimates of reliability and reproducibility, a variety of data acquisition procedures and experimental designs are included. Similarly, to enable users to assess the impact of commonly encountered artifacts (for example, motion) on characterizations of inter-individual variation, datasets of varying quality are included.

| Field | Value |
| --- | --- |
| Design Type(s) | Test-retest Reliability |
| Measurement Type(s) | nuclear magnetic resonance assay |
| Technology Type(s) | functional MRI scanner |
| Factor Type(s) | protocol |
| Sample Characteristic(s) | Homo sapiens • brain |

Machine-accessible metadata file describing the reported data (ISA-Tab format)

Background & Summary

Functional connectomics is a rapidly expanding area of human brain mapping1–4. Focused on the study of functional interactions among nodes in brain networks, functional connectomics is emerging as a mainstream tool to delineate variations in brain architecture among both individuals and populations5–8. Findings that established network features and well-known patterns of brain activity elicited via task performance are recapitulated in spontaneous brain activity patterns captured by resting-state fMRI (rfMRI)3–6,9–12 have been critical to the widespread acceptance of functional connectomics applications.

A growing literature has highlighted the possibility that functional network properties may explain individual differences in behavior and cognition4,7,8—the potential utility of which is supported by studies that suggest reliability for commonly used rfMRI measures13. Unfortunately, the field lacks a data platform by which researchers can rigorously explore the reliability of the many indices that continue to emerge. Such a platform is crucial for the refinement and evaluation of novel methods, as well as those that have gained widespread usage without sufficient consideration of reliability. Equally important is the notion that quantifying the reliability and reproducibility of the myriad connectomics-based measures can inform expectations regarding the potential of such approaches for biomarker identification13–16.

To address these challenges, the Consortium for Reliability and Reproducibility (CoRR) has aggregated previously collected test-retest imaging datasets from more than 36 laboratories around the world and shared them via the 1000 Functional Connectomes Project (FCP)5,17 and its International Neuroimaging Data-sharing Initiative (INDI)18. Although primarily focused on rfMRI, this initiative has worked to promote the sharing of diffusion imaging data as well. It is our hope that among its many possible uses, the CoRR repository will facilitate the: (1) Establishment of test-retest reliability and reproducibility for commonly used MR-based connectome metrics, (2) Determination of the range of variation in the reliability and reproducibility of these metrics across imaging sites and retest study designs, (3) Creation of a standard/benchmark test-retest dataset for the evaluation of novel metrics.

Here, we provide an overview of all the datasets currently aggregated by CoRR, and describe the standardized metadata and technical validation associated with these datasets, thereby facilitating immediate access to these data by the wider scientific community. Additional datasets, and richer descriptions of some of the studies producing these datasets, will be published separately (for example, A high resolution 7-Tesla rfMRI test-retest dataset with cognitive and physiological measures19). A list of all papers describing these individual studies will be maintained and periodically updated at the CoRR website (http://fcon_1000.projects.nitrc.org/indi/CoRR/html/data_citation.html).

Methods

Experimental design

At the time of submission, CoRR had received 40 distinct test-retest datasets that were independently collected by 36 imaging groups at 18 institutions. All CoRR contributions were based on studies approved by a local ethics committee; each contributor’s respective ethics committee approved submission of de-identified data. Data were fully de-identified prior to contribution by removing all 18 protected health information identifiers specified by the Health Insurance Portability and Accountability Act (HIPAA), as well as face information from structural images. All data distributed were visually inspected before release. While all samples include at least one baseline scan and one retest scan, the specific designs and target populations employed across samples vary given the aggregation strategy used to build the resource. Since many individual (uniformly collected) datasets have reasonably large sample sizes allowing stable test-retest estimates, this variability across datasets provides an opportunity to generalize reliability estimates across scanning platforms, acquisition approaches, and target populations. The range of designs included is captured by the following classifications:

- Within-Session Repeat.
  - Scan repeated on same day
  - Behavioral condition may or may not vary across scans depending on sample
- Between-Session Repeat.
  - Scan repeated one or more days later
  - In most cases less than one week
- Between-Session Repeat (Serial).
  - Scan is repeated for 3 or more sessions in a short time-frame that is believed to be developmentally stable
- Between-Session Repeat (Longitudinal developmental).
  - Scan repeated at a distant time-point not believed to be developmentally equivalent. There is no exact definition of the minimum time for detecting developmental effects across scans, though designs typically span at least 3–6 months
- Hybrid Design.
  - Scans repeated one or more times on same day, as well as across one or more sessions

Table 1 presents an overview of the specific samples included in CoRR (Data Citations 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31). The vast majority included a single retest scan (48% within-session, 52% between-session). Three samples employed serial scanning designs, and one sample had a longitudinal developmental component. Most samples included presumed neurotypical adults; exceptions include the pediatric samples from Institute of Psychology at Chinese Academy of Sciences (IPCAS 2/7), University of Pittsburgh School of Medicine (UPSM) and New York University (NYU) and the lifespan samples from Nathan Kline Institute (NKI 1).

Data Records

Data privacy

Prior to contribution, each investigator confirmed that the data in their contribution were collected with the approval of their local ethical committee or institutional review board, and that sharing via CoRR was in accord with their policies. In accord with prior FCP/INDI policies, face information was removed from anatomical images (FullAnonymize.sh V1.0b; http://www.nitrc.org/frs/shownotes.php?release_id=1902) and Neuroimaging Informatics Technology Initiative (NIFTI) headers were replaced prior to open sharing to minimize the risk of re-identification.

Distribution for use

CoRR data sets can be accessed through either the COllaborative Informatics and Neuroimaging Suite (COINS) Data Exchange (http://coins.mrn.org/dx)20, or the Neuroimaging Informatics Tools and Resources Clearinghouse (NITRC; http://fcon_1000.projects.nitrc.org/indi/CoRR/html/index.html). CoRR datasets at the NITRC site are stored in .tar files sorted by site, each containing the necessary imaging data and phenotypic information. The COINS Data Exchange offers an enhanced graphical query tool, which enables users to target and download files in accord with specific search criteria. For each sharing venue, a user login must be established prior to downloading files. There are several groups of samples which were not included in the data analysis as they were in the data contribution/upload, preparation or correction stage at the time of analysis: Intrinsic Brain Activity, Test-Retest Dataset (IBATRT), Dartmouth College (DC 1), IPCAS 4, Hangzhou Normal University (HNU 2), Fudan University (FU 1), FU 2, Chengdu Huaxi Hospital (CHH 1), Max Planck Institute (MPG 1)19, Brain Genomics Superstruct Project (GSP) and New Jersey Institute of Technology (NJIT 1) (see more details on these sites at the CoRR website). Table 1 provides a static representation of the samples included in CoRR at the time of submission.

Imaging data

Consistent with its popularity in the imaging community and prior usage in FCP/INDI efforts, the NIFTI file format was selected for storage of CoRR imaging datasets, independent of modality (rfMRI, structural MRI (sMRI) and diffusion MRI (dMRI)). Tables 2, 3, 4 (available online only) provide descriptions of the MRI sequences used for the various modalities for each of the imaging data file types.

Phenotypic information

All phenotypic data are stored in comma separated value (.csv) files. Basic information such as age and gender has been collected for each site to facilitate aggregation with minimal demographic variables. Table 5 (available online only) depicts the data legend provided to CoRR contributors.

Technical Validation

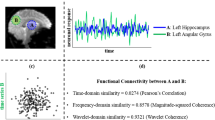

Consistent with the established FCP/INDI policy, all data contributed to CoRR were made available to users regardless of data quality. Justifications for this decision include the lack of consensus within the functional imaging community on criteria for quality assurance, and the utility of ‘lower quality’ datasets for facilitating the development of artifact correction techniques. For CoRR, the inclusion of datasets with significant artifacts related to factors such as motion is particularly valuable, as it enables the determination of the impact of such real-world confounds on reliability and reproducibility21,22. However, the absence of screening for data quality in the data release does not mean that the inclusion of poor quality datasets in imaging analyses is routine practice for the contributing sites. Figure 1 provides a summary map describing the anatomical coverage for rfMRI scans included in the CoRR dataset.

To facilitate quality assessment of the contributed samples and selection of datasets for analyses by individual users23, we made use of the Preprocessed Connectome Project quality assurance protocol (http://preprocessed-connectomes-project.github.io), which includes a broad range of quantitative metrics commonly used in the imaging literature for assessing data quality. They are itemized below:

- Spatial Metrics (sMRI, rfMRI)
  - Signal-to-Noise Ratio (SNR)24. The mean of the gray matter values divided by the standard deviation of the air values.
  - Foreground to Background Energy Ratio (FBER)
  - Entropy Focus Criteria (EFC)25. Shannon’s entropy is used to summarize the principal directions distribution.
  - Smoothness of Voxels26. The full-width half maximum (FWHM) of the spatial distribution of image intensity values.
  - Ghost to Signal Ratio (GSR) (only rfMRI)27. A measure of the mean signal in the ‘ghost’ image (signal present outside the brain due to acquisition in the phase encoding direction) relative to mean signal within the brain.
  - Artifact Detection (only sMRI)28. The proportion of voxels with intensity corrupted by artifacts normalized by the number of voxels in the background.
  - Contrast-to-Noise Ratio (CNR) (only sMRI)24. Calculated as the mean of the gray matter values minus the mean of the white matter values, divided by the standard deviation of the air values.
- Temporal Metrics (rfMRI)
  - Head Motion
    - Mean framewise displacement (FD)29. A measure of subject head motion, which compares the motion between the current and previous volumes. This is calculated by summing the absolute values of displacement changes in the x, y and z directions and rotational changes about those three axes. The rotational changes are given distance values based on the changes across the surface of a 50 mm radius sphere.
    - Percent of volumes with FD greater than 0.2 mm
    - Standardized DVARS. The spatial standard deviation of the temporal derivative of the data (D referring to temporal derivative of time series, VARS referring to root-mean-square variance over voxels)29, normalized by the temporal standard deviation and temporal autocorrelation (http://blogs.warwick.ac.uk/nichols/entry/standardizing_dvars).
  - General
    - Outlier Detection. The mean fraction of outliers found in each volume using the 3dToutcount command in the software package for Analysis of Functional NeuroImages (AFNI: http://afni.nimh.nih.gov/afni).
    - Median Distance Index. The mean distance (1 − Spearman’s rho) between each time-point’s volume and the median volume using AFNI’s 3dTqual command.
    - Global Correlation (GCOR)30. The average of the entire brain correlation matrix, which is computed as the brain-wide average time series correlation over all possible combinations of voxels.
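Several of the metrics itemized above reduce to a few array operations. The following is a minimal sketch of how they might be computed, not the Preprocessed Connectome Project implementation. The function names, the motion-parameter layout (three translations in mm followed by three rotations in radians), the use of boolean tissue masks, and the convention of assigning FD = 0 to the first volume are all assumptions made for illustration.

```python
import numpy as np


def framewise_displacement(motion, radius_mm=50.0):
    """Framewise displacement (FD) per volume.

    motion: (n_volumes, 6) array. Assumed layout (hypothetical): columns 0-2
    are x, y, z translations in mm; columns 3-5 are rotations in radians.
    """
    motion = np.asarray(motion, dtype=float)
    # Absolute change between each volume and the previous one.
    deltas = np.abs(np.diff(motion, axis=0))
    # Express rotations as arc length on the surface of a sphere.
    deltas[:, 3:] *= radius_mm
    # The first volume has no predecessor; its FD is set to 0 (assumed convention).
    return np.concatenate([[0.0], deltas.sum(axis=1)])


def percent_high_motion(fd, threshold_mm=0.2):
    """Percent of volumes whose FD exceeds the threshold."""
    return 100.0 * float(np.mean(np.asarray(fd) > threshold_mm))


def snr(image, gm_mask, air_mask):
    """Mean gray matter intensity divided by the SD of air intensities."""
    return float(image[gm_mask].mean() / image[air_mask].std())


def cnr(image, gm_mask, wm_mask, air_mask):
    """(Mean gray matter - mean white matter) divided by the SD of air."""
    contrast = image[gm_mask].mean() - image[wm_mask].mean()
    return float(contrast / image[air_mask].std())


def gcor(timeseries):
    """Average of the voxel-by-voxel correlation matrix.

    timeseries: (n_voxels, n_timepoints). The diagonal is included in the
    average here; this is a choice made for the sketch.
    """
    return float(np.corrcoef(np.asarray(timeseries, dtype=float)).mean())
```

For real data the full correlation matrix in `gcor` is too large to hold in memory, so a brain-wide implementation would need an equivalent formulation that avoids building it.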

Imaging data preprocessing was carried out with the Configurable Pipeline for the Analysis of Connectomes (C-PAC: http://www.nitrc.org/projects/cpac). Results for the sMRI images (spatial metrics) are depicted in Supplementary Figure 1, for the rfMRI scans in Supplementary Figure 2 (general spatial and temporal metrics) and Supplementary Figure 3 (head motion). For both sMRI and rfMRI, the battery of quality metrics revealed notable variations in image properties across sites. It is our hope that users will explore the impact of such variations in quality on the reliability of data derivatives, as well as potential relationships with acquisition parameters. Recent work examining the impact of head motion on reliability suggests the merits of such lines of questioning. Specifically, Yan and colleagues found that motion itself has moderate test-retest reliability, and appears to contribute to reliability when low, though it compromises reliability when high31–33. Although a comprehensive examination of this issue is beyond the scope of the present work, we did verify that motion does have moderate test-retest reliability in the CoRR datasets (see Figure 2) as previously suggested. Interestingly, this relationship appeared to be driven by the lower motion datasets (mean FD < 0.2 mm). Future work will undoubtedly benefit from further exploration of this phenomenon and its impact on findings.
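Test-retest reliability of a scalar measure such as mean FD is commonly summarized with an intraclass correlation coefficient (ICC). The sketch below computes a one-way random-effects ICC, often written ICC(1,1), from a participants-by-sessions matrix. The choice of this particular ICC form and the function name are assumptions for illustration; the text above does not state which reliability statistic underlies Figure 2.

```python
import numpy as np


def icc_oneway(scores):
    """One-way random-effects ICC, ICC(1,1).

    scores: (n_participants, k_sessions) array, for example mean FD per
    participant at test and at retest (k = 2).
    """
    scores = np.asarray(scores, dtype=float)
    n, k = scores.shape
    grand_mean = scores.mean()
    participant_means = scores.mean(axis=1)
    # Between-participant mean square.
    msb = k * ((participant_means - grand_mean) ** 2).sum() / (n - 1)
    # Within-participant mean square.
    msw = ((scores - participant_means[:, None]) ** 2).sum() / (n * (k - 1))
    return float((msb - msw) / (msb + (k - 1) * msw))
```

A value near 1 indicates that differences between participants dominate session-to-session fluctuation; values near or below 0 indicate that retest scores do not preserve participant ordering.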

Beyond the above quality control metrics, a minimal set of rfMRI derivatives was calculated for the datasets included in CoRR to further facilitate comparison of images across sites:

- Fractional Amplitude of Low Frequency Fluctuations (fALFF)34,35. The total power in the low frequency range (0.01–0.1 Hz) of an fMRI image, normalized by the total power across all frequencies measured in that same image.
- Voxel-Mirrored Homotopic Connectivity (VMHC)36,37. The functional connectivity between a pair of geometrically symmetric, inter-hemispheric voxels.
- Regional Homogeneity (ReHo)38–40. The synchronicity of a voxel’s time series and that of its nearest neighbors based on Kendall’s coefficient of concordance to measure the local brain functional homogeneity.
- Intrinsic Functional Connectivity (iFC) of Posterior Cingulate Cortex (PCC)41. Using the mean time series from a spherical region of interest (diameter=8 mm) centered in PCC (x=−8, y=−56, z=26)42, functional connectivity with PCC is calculated for each voxel in the brain using Pearson’s correlation (results are Fisher r-to-z transformed).
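Three of these derivatives can be illustrated on plain arrays. The sketch below is an approximation under stated assumptions, not the C-PAC implementation: `falff` sums spectral amplitudes (squaring them would give a power-based ratio), `kendalls_w` ignores tied values, and `seed_connectivity_z` assumes every time series has non-zero variance. All function names are hypothetical.

```python
import numpy as np


def falff(ts, tr, band=(0.01, 0.1)):
    """Low-frequency spectral amplitude divided by amplitude at all frequencies.

    ts: 1-D time series; tr: repetition time in seconds.
    """
    ts = np.asarray(ts, dtype=float)
    ts = ts - ts.mean()
    freqs = np.fft.rfftfreq(ts.size, d=tr)
    amplitude = np.abs(np.fft.rfft(ts))
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    total = amplitude[1:].sum()  # skip the zero-frequency term
    return float(amplitude[in_band].sum() / total) if total > 0 else 0.0


def kendalls_w(timeseries):
    """Kendall's coefficient of concordance across a set of time series.

    timeseries: (k_voxels, n_timepoints), for example a voxel and its
    nearest neighbors. Ties are not handled in this sketch.
    """
    ts = np.asarray(timeseries, dtype=float)
    k, n = ts.shape
    # Rank each time series over time (1 = smallest value).
    ranks = ts.argsort(axis=1).argsort(axis=1) + 1.0
    rank_sums = ranks.sum(axis=0)
    s = ((rank_sums - rank_sums.mean()) ** 2).sum()
    return float(12.0 * s / (k ** 2 * (n ** 3 - n)))


def seed_connectivity_z(seed_ts, voxel_ts):
    """Pearson correlation of each voxel with a seed, Fisher r-to-z transformed.

    seed_ts: (n_timepoints,); voxel_ts: (n_voxels, n_timepoints).
    """
    seed = np.asarray(seed_ts, dtype=float)
    vox = np.asarray(voxel_ts, dtype=float)
    seed = seed - seed.mean()
    vox = vox - vox.mean(axis=1, keepdims=True)
    r = (vox @ seed) / (np.linalg.norm(vox, axis=1) * np.linalg.norm(seed))
    # Clip so that arctanh stays finite when |r| reaches 1.
    return np.arctanh(np.clip(r, -0.999999, 0.999999))
```

In the seed-based case, `seed_ts` would be the mean time series within the PCC region of interest described above; extracting that region from an image is outside the scope of this sketch.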

To enable rapid comparison of derivatives, we: (1) calculated the 50th, 75th, and 90th percentile scores for each participant, and then (2) calculated site means and standard deviations for each of these scores (see Table 6 (available online only)). We opted to not use increasingly popular standardization approaches (for example, mean-regression, mean centering +/− variance normalization) in the calculation of derivative values, as the test-retest framework provides users a unique opportunity to consider the reliability of site-related differences. As can be seen in Supplementary Figure 4, for all the derivatives, the mean value or coefficient of variation obtained for a site was highly reliable. In the case of fALFF, site-specific differences can be directly related to the temporal sampling rate (that is, TR; see Figure 3), as lower TR datasets include a broader range of frequencies in the denominator—thereby reducing the resulting fALFF scores (differences in aliasing are likely to be present as well). This note of caution about fALFF raises the general issue that rfMRI estimates can be highly sensitive to acquisition parameters7,13. Specific factors contributing to differences in the other derivatives are less obvious (it is important to note that the correlation-based derivatives have some degree of standardization inherent to them). Interestingly, the coefficient of variation across participants also proved to be highly reliable for the various derivatives; while this may point to site-related differences in the ability to detect differences across participants, it may also be some reflection of the specific populations obtained at a site (or the sample size). Overall, these site-related differences highlight the potential value of post-hoc statistical standardization approaches, which can be used to handle unaccounted for sources of variation within-site as well43.
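The two-step summary described at the start of this paragraph (percentile scores per participant, then site-level means and standard deviations) can be sketched as follows. The input format, a list of 1-D arrays of derivative values per participant, and the use of the sample standard deviation (ddof=1) are assumptions; the text does not specify which voxels enter the percentile calculation.

```python
import numpy as np


def site_percentile_summary(participant_maps, percentiles=(50, 75, 90)):
    """Summarize one derivative for one site.

    participant_maps: iterable of 1-D arrays, one per participant, holding
    the derivative values (for example fALFF) for that participant.
    Returns (site_means, site_sds), each with one entry per percentile.
    """
    # Step 1: percentile scores for each participant.
    scores = np.array([np.percentile(np.asarray(m, dtype=float), percentiles)
                       for m in participant_maps])
    # Step 2: mean and standard deviation across participants at the site.
    return scores.mean(axis=0), scores.std(axis=0, ddof=1)
```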

Finally, in Figure 4, we demonstrate the ability of the CoRR datasets to: (1) replicate prior work showing regional differences in inter-individual variation for the various derivatives that occur at ‘transition zones’ or boundaries between functional areas (even after mean-centering and variance normalization), and (2) show these differences to be highly reproducible across imaging sessions in the same sample. It is our hope that this demonstration will spark future work examining inter-individual variation in these boundaries and their functional relevance. These surface renderings and visualizations were carried out with the Connectome Computation System (CCS) documented at http://lfcd.psych.ac.cn/ccs.html, which will be released to the public via GitHub soon (https://github.com/zuoxinian/CCS).

Detection of functional boundaries was achieved via examination of voxel-wise coefficients of variation (CV) for fALFF, PCC, ReHo and VMHC maps. For the purpose of visualization, coefficients of variation were rank-ordered, whereby the relative degree of variation across participants at a given voxel, rather than the actual value, was plotted to better contrast brain regions. Ranking coefficients of variation (R-CV) efficiently identified regions of greatest inter-individual variability, thus delineating putative functional boundaries.
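The rank-ordering step can be illustrated with a short sketch. It assumes the derivative maps are stacked into a participants-by-voxels array, takes the CV as the sample standard deviation divided by the absolute mean, and scales ranks to the interval (0, 1]; these choices and the function name are illustrative rather than a description of the CCS code.

```python
import numpy as np


def ranked_cv(maps):
    """Voxel-wise coefficient of variation and its rank across voxels.

    maps: (n_participants, n_voxels) array of one derivative.
    Returns (cv, ranks); voxels with a zero mean are set to NaN in both.
    """
    maps = np.asarray(maps, dtype=float)
    mean = maps.mean(axis=0)
    sd = maps.std(axis=0, ddof=1)
    valid = mean != 0
    cv = np.full(mean.shape, np.nan)
    cv[valid] = sd[valid] / np.abs(mean[valid])
    # Rank valid voxels from lowest to highest CV, scaled to (0, 1].
    order = np.argsort(cv[valid])
    scaled = np.empty(order.size)
    scaled[order] = np.arange(1, order.size + 1) / order.size
    ranks = np.full(mean.shape, np.nan)
    ranks[valid] = scaled
    return cv, ranks
```

Plotting `ranks` rather than `cv` compresses extreme values, which is the contrast-enhancing effect described above.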

To facilitate replication of our work, for each of Figures 1, 2, 3 and Supplementary Figures 1–4, we include a variable in the COINS phenotypic data that indicates whether or not each dataset was included in the analyses depicted. We also included this information in the phenotypic files on NITRC.

Usage Notes

While formal test-retest reliability or reproducibility analyses are beyond the scope of the present data description, we illustrate the broad range of potential questions that can be answered for rfMRI, dMRI and sMRI using the resource. These include the impact of:

- Image quality13
- Image processing decisions13,30,38,43,46–48 (for example, nuisance signal regression for rfMRI, spatial normalization algorithms, computational space)
- Standardization approaches43

Of note, at present, the vast majority of studies do not collect physiological data, and this is reflected in the CoRR initiative. With that said, recent advances in model-free correction (for example, ICA-FIX54,55, CORSICA56, PESTICA57, PHYCAA58,59) can be of particular value in the absence of physiological data.

Additional questions may include:

- How reliable are image quality metrics?
- How do reliability and reproducibility impact prediction accuracy?
- How do imaging modalities (for example, rfMRI, dMRI, sMRI) differ with respect to reproducibility and reliability? And within modality, are some derivatives more reliable than others?
- Can reliability and reproducibility be used to optimize imaging analyses? How can such optimizations avoid being driven by artifacts such as motion?
- How much information regarding inter-individual variation is shared and distinct among imaging metrics?
- Which features best differentiate one individual from another?

One example analytic framework that can be used with the CoRR test-retest datasets is Non-Parametric Activation and Influence Reproducibility reSampling (NPAIRS60). By combining prediction accuracy and reproducibility, this computational framework can be used to assess the relative merits of differing image modalities, image metrics, or processing pipelines, as well as the impact of artifacts61–63.
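The split-half logic underlying this kind of framework can be conveyed with a deliberately simplified sketch. It is not the published NPAIRS implementation: the two-group design, the mean-difference map used as a stand-in for a discriminant model, and all names are assumptions for illustration. For each random, group-stratified split, reproducibility is the correlation between the maps estimated in the two halves, and prediction accuracy is obtained by applying the map from one half to the participants in the other.

```python
import numpy as np


def split_half_metrics(features, labels, n_splits=20, seed=0):
    """Mean split-half reproducibility and prediction accuracy.

    features: (n_participants, n_features); labels: binary 0/1 array with
    at least two participants per group.
    """
    rng = np.random.default_rng(seed)
    x = np.asarray(features, dtype=float)
    y = np.asarray(labels)
    reproducibility, accuracy = [], []
    for _ in range(n_splits):
        # Stratified split: shuffle each group and cut it in half.
        idx0 = rng.permutation(np.flatnonzero(y == 0))
        idx1 = rng.permutation(np.flatnonzero(y == 1))
        halves = (
            np.concatenate([idx0[: idx0.size // 2], idx1[: idx1.size // 2]]),
            np.concatenate([idx0[idx0.size // 2:], idx1[idx1.size // 2:]]),
        )
        maps, midpoints = [], []
        for idx in halves:
            mean1 = x[idx][y[idx] == 1].mean(axis=0)
            mean0 = x[idx][y[idx] == 0].mean(axis=0)
            maps.append(mean1 - mean0)           # group-difference map
            midpoints.append((mean1 + mean0) / 2)
        reproducibility.append(np.corrcoef(maps[0], maps[1])[0, 1])
        # Train on one half, predict the other, in both directions.
        for train, test in ((0, 1), (1, 0)):
            scores = (x[halves[test]] - midpoints[train]) @ maps[train]
            predicted = (scores > 0).astype(int)
            accuracy.append(np.mean(predicted == y[halves[test]]))
    return float(np.mean(reproducibility)), float(np.mean(accuracy))
```

Comparing the two returned values across processing pipelines or image metrics gives a rough sense of the trade-off that such resampling frameworks are designed to expose.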

Open access connectivity analysis packages that may be useful (list adapted from http://RFMRI.org):

- Brain Connectivity Toolbox (BCT; MATLAB)64
- BrainNet Viewer (BNV; MATLAB)65
- Configurable Pipeline for the Analysis of Connectomes (C-PAC; PYTHON)66
- CONN: functional connectivity toolbox (CONN; MATLAB)67
- Dynamic Causal Model (DCM; MATLAB) as part of Statistical Parametric Mapping (SPM)68,69
- Data Processing Assistant for Resting-State FMRI (DPARSF; MATLAB)70
- Functional and Tractographic Connectivity Analysis Toolbox (FATCAT; C) as part of AFNI71,72
- Seed-based Functional Connectivity (FSFC; SHELL) as part of FreeSurfer73
- Graph Theory Toolkit for Network Analysis (GRETNA; MATLAB)74
- Group ICA of FMRI Toolbox (GIFT; MATLAB)75
- Multivariate Exploratory Linear Optimized Decomposition into Independent Components (MELODIC; C) as part of FMRIB Software Library (FSL)76,77
- Neuroimaging Analysis Kit (NIAK; MATLAB/OCTAVE)78
- Ranking and averaging independent component analysis by reproducibility (RAICAR; MATLAB)79,80
- Resting-State fMRI Data Analysis Toolkit (REST; MATLAB)81

References

Alivisatos, A. P. et al. The brain activity map project and the challenge of functional connectomics. Neuron 74, 970–974 (2012).

Seung, H. S. Neuroscience: Towards functional connectomics. Nature 471, 170–172 (2011).

Smith, S. M. et al. Functional connectomics from resting-state fMRI. Trends Cogn. Sci. 17, 666–682 (2013).

Buckner, R. L., Krienen, F. M. & Yeo, B. T. Opportunities and limitations of intrinsic functional connectivity MRI. Nat. Neurosci. 16, 832–837 (2013).

Sporns, O. Contributions and challenges for network models in cognitive neuroscience. Nat. Neurosci. 17, 652–660 (2014).

Biswal, B. B. et al. Toward discovery science of human brain function. Proc. Natl Acad. Sci. USA 107, 4734–4739 (2010).

Kelly, C., Biswal, B. B., Craddock, R. C., Castellanos, F. X. & Milham, M. P. Characterizing variation in the functional connectome: promise and pitfalls. Trends Cogn. Sci. 16, 181–188 (2012).

Van Dijk, K. R. et al. Intrinsic functional connectivity as a tool for human connectomics: theory, properties, and optimization. J. Neurophysiol. 103, 297–321 (2010).

Biswal, B., Yetkin, F. Z., Haughton, V. M. & Hyde, J. S. Functional connectivity in the motor cortex of resting human brain using echo-planar MRI. Magn. Reson. Med. 34, 537–541 (1995).

Zuo, X. N. et al. Network centrality in the human functional connectome. Cereb. Cortex 22, 1862–1875 (2012).

Smith, S. M. et al. Correspondence of the brain’s functional architecture during activation and rest. Proc. Natl Acad. Sci. USA 106, 13040–13045 (2009).

Yeo, B. T. et al. The organization of the human cerebral cortex estimated by intrinsic functional connectivity. J. Neurophysiol. 106, 1125–1165 (2011).

Zuo, X. N. & Xing, X. X. Test-retest reliabilities of resting-state FMRI measurements in human brain functional connectomics: A systems neuroscience perspective. Neurosci. Biobehav. Rev. 45, 100–118 (2014).

Castellanos, F. X., Di Martino, A., Craddock, R. C., Mehta, A. D. & Milham, M. P. Clinical applications of the functional connectome. Neuroimage 80, 527–540 (2013).

Kapur, S., Phillips, A. G. & Insel, T. R. Why has it taken so long for biological psychiatry to develop clinical tests and what to do about it? Mol. Psychiatry 17, 1174–1179 (2012).

Singh, I. & Rose, N. Biomarkers in psychiatry. Nature 460, 202–207 (2009).

Mennes, M., Biswal, B. B., Castellanos, F. X. & Milham, M. P. Making data sharing work: The FCP/INDI experience. Neuroimage 82, 683–691 (2013).

Milham, M. P. Open neuroscience solutions for the connectome-wide association era. Neuron 73, 214–218 (2012).

Gorgolewski, J. K. et al. A high resolution 7-Tesla resting-state fMRI test-retest dataset with cognitive and physiological measures. Sci. Data 1, 140053 (2014).

Scott, A. et al. COINS: An innovative informatics and neuroimaging tool suite built for large heterogeneous datasets. Front. Neuroinform 5, 33 (2011).

Power, J. D. et al. Methods to detect, characterize, and remove motion artifact in resting state fMRI. Neuroimage 84, 320–341 (2014).

Jo, H. J. et al. Effective preprocessing procedures virtually eliminate distance-dependent motion artifacts in resting state FMRI. J. Appl. Math 13, 935154 (2014).

Glover, G. H. et al. Function biomedical informatics research network recommendations for prospective multicenter functional MRI studies. J. Magn. Reson. Imaging 36, 39–54 (2012).

Magnotta, V. A. & Friedman, L. Measurement of signal-to-noise and contrast-to-noise in the fBIRN multicenter imaging study. J. Digit. Imaging 19, 140–147 (2006).

Farzinfar, M. et al. Entropy based DTI quality control via regional orientation distribution. Proc. IEEE Int. Symp. Biomed. Imaging 9, 22–26 (2012).

Friedman, L. et al. Test-retest and between-site reliability in a multicenter fMRI study. Hum. Brain Mapp. 29, 958–972 (2008).

Davids, M. et al. Fully-automated quality assurance in multi-center studies using MRI phantom measurements. Magn. Reson. Imaging 32, 771–780 (2014).

Mortamet, B. et al. Automatic quality assessment in structural brain magnetic resonance imaging. Magn. Reson. Med 62, 365–372 (2009).

Power, J. D., Barnes, K. A., Snyder, A. Z., Schlaggar, B. L. & Petersen, S. E. Spurious but systematic correlations in functional connectivity MRI networks arise from subject motion. Neuroimage 59, 2142–2154 (2012).

Saad, Z. S. et al. Correcting brain-wide correlation differences in resting-state FMRI. Brain Connect 3, 339–352 (2013).

Yan, C. G. et al. A comprehensive assessment of regional variation in the impact of head micromovements on functional connectomics. Neuroimage 76, 183–201 (2013).

Van Dijk, K. R., Sabuncu, M. R. & Buckner, R. L. The influence of head motion on intrinsic functional connectivity MRI. Neuroimage 59, 431–438 (2012).

Zeng, L. L. et al. Neurobiological basis of head motion in brain imaging. Proc. Natl Acad. Sci. USA 111, 6058–6062 (2014).

Zou, Q. H. et al. An improved approach to detection of amplitude of low-frequency fluctuation (ALFF) for resting-state fMRI: fractional ALFF. J. Neurosci. Methods 172, 137–141 (2008).

Zuo, X. N. et al. The oscillating brain: complex and reliable. Neuroimage 49, 1432–1445 (2010).

Zuo, X. N. et al. Growing together and growing apart: regional and sex differences in the lifespan developmental trajectories of functional homotopy. J. Neurosci. 30, 15034–15043 (2010).

Anderson, J. S. et al. Decreased interhemispheric functional connectivity in autism. Cereb. Cortex 21, 1134–1146 (2011).

Zang, Y., Jiang, T., Lu, Y., He, Y. & Tian, L. Regional homogeneity approach to fMRI data analysis. Neuroimage 22, 394–400 (2004).

Zuo, X. N. et al. Toward reliable characterization of functional homogeneity in the human brain: preprocessing, scan duration, imaging resolution and computational space. Neuroimage 65, 374–386 (2013).

Jiang, L. et al. Toward neurobiological characterization of functional homogeneity in the human cortex: Regional variation, morphological association and functional covariance network organization. Brain Struct. Funct. http://dx.doi.org/10.1007/s00429-014-0795-8 (2014).

Greicius, M. D., Krasnow, B., Reiss, A. L. & Menon, V. Functional connectivity in the resting brain: a network analysis of the default mode hypothesis. Proc. Natl Acad. Sci. USA 100, 253–258 (2003).

Andrews-Hanna, J. R., Reidler, J. S., Sepulcre, J., Poulin, R. & Buckner, R.L. Functional-anatomic fractionation of the brain’s default network. Neuron 65, 550–562 (2010).

Yan, C. G., Craddock, R. C., Zuo, X. N., Zang, Y. F. & Milham, M. P. Standardizing the intrinsic brain: towards robust measurement of inter-individual variation in 1000 functional connectomes. Neuroimage 80, 246–262 (2013).

Birn, R. M. et al. The effect of scan length on the reliability of resting-state fMRI connectivity estimates. Neuroimage 83C, 550–558 (2013).

Yan, C. G., Craddock, R. C., He, Y. & Milham, M. P. Addressing head motion dependencies for small-world topologies in functional connectomics. Front. Hum. Neurosci 7, 910 (2013).

Zuo, X. N. & Xing, X. X. Effects of non-local diffusion on structural MRI preprocessing and default network mapping: Statistical comparisons with isotropic/anisotropic diffusion. PLoS ONE 6, e26703 (2011).

Xing, X. X., Xu, T., Yang, Z. & Zuo, X. N. Non-local means smoothing: A demonstration on multiband resting state MRI. in 19th Annual Meeting of the Organization for Human Brain Mapping (2013).

Zhu, L. et al. Temporal reliability and lateralization of resting-state language network. PLoS ONE 9, e85880 (2014).

Liao, X. H. et al. Functional hubs and their test-retest reliability: a multiband resting-state functional MRI study. Neuroimage 83, 969–982 (2013).

Liang, X. et al. Effects of different correlation metrics and preprocessing factors on small-world brain functional networks: a resting-state functional MRI study. PLoS ONE 7, e32766 (2012).

Thomason, M. E. et al. Resting-state fMRI can reliably map neural networks in children. Neuroimage 55, 165–175 (2011).

Guo, C. C. et al. One-year test-retest reliability of intrinsic connectivity network fMRI in older adults. Neuroimage 61, 1471–1483 (2012).

Song, J. et al. Age-related differences in test-retest reliability in resting-state brain functional connectivity. PLoS ONE 7, e49847 (2012).

Salimi-Khorshidi, G. et al. Automatic denoising of functional MRI data: combining independent component analysis and hierarchical fusion of classifiers. Neuroimage 90, 449–468 (2014).

Griffanti, L. et al. ICA-based artefact removal and accelerated fMRI acquisition for improved resting state network imaging. Neuroimage 95, 232–247 (2014).

Perlbarg, V. et al. CORSICA: correction of structured noise in fMRI by automatic identification of ICA components. Magn. Reson. Imaging 25, 35–46 (2007).

Beall, E. B. & Lowe, M. J. Isolating physiologic noise sources with independently determined spatial measures. Neuroimage 37, 1286–12300 (2007).

Churchill, N. W. et al. PHYCAA: data-driven measurement and removal of physiological noise in BOLD fMRI. Neuroimage 59, 1299–12314 (2012).

Churchill, N. W. & Strother, S. C. PHYCAA+: an optimized, adaptive procedure for measuring and controlling physiological noise in BOLD fMRI. Neuroimage 82, 306–325 (2013).

Strother, S. C. et al. The quantitative evaluation of functional neuroimaging experiments: the NPAIRS data analysis framework. Neuroimage 15, 747–771 (2002).

LaConte, S. et al. The evaluation of preprocessing choices in single-subject BOLD fMRI using NPAIRS performance metrics. Neuroimage 18, 10–27 (2003).

Churchill, N. W. et al. Optimizing preprocessing and analysis pipelines for single-subject fMRI: I. Standard temporal motion and physiological noise correction methods. Hum. Brain Mapp. 33, 609–627 (2012).

Churchill, N. W. et al. Optimizing preprocessing and analysis pipelines for single-subject fMRI: 2. Interactions with ICA, PCA, task contrast and inter-subject heterogeneity. PLoS ONE 7, e31147 (2012).

Rubinov, M. & Sporns, O. Complex network measures of brain connectivity: uses and interpretations. Neuroimage 52, 1059–1069 (2010).

Xia, M., Wang, J. & He, Y. BrainNet Viewer: a network visualization tool for human brain connectomics. PLoS ONE 8, e68910 (2013).

Di Martino, A. et al. The autism brain imaging data exchange: towards a large-scale evaluation of the intrinsic brain architecture in autism. Mol. Psychiatry 19, 659–667 (2014).

Whitfield-Gabrieli, S. & Nieto-Castanon, A. Conn: a functional connectivity toolbox for correlated and anticorrelated brain networks. Brain Connect 2, 125–141 (2012).

Friston, K. J., Kahan, J., Biswal, B. & Razi, A. A DCM for resting state fMRI. Neuroimage 94, 396–407 (2014).

Ashburner, J. SPM: a history. Neuroimage 62, 791–800 (2012).

Yan, C. G. & Zang, Y. F. DPARSF: A MATLAB Toolbox for "Pipeline" Data Analysis of Resting-State fMRI. Front. Syst. Neurosci 4, 13 (2010).

Taylor, P. A. & Saad, Z. S. FATCAT: (an efficient) Functional and Tractographic Connectivity Analysis Toolbox. Brain Connect 3, 523–535 (2013).

Cox, R. W. AFNI: what a long strange trip it’s been. Neuroimage 62, 743–747 (2012).

Fischl, B. FreeSurfer. Neuroimage 62, 774–781 (2012).

Software registered at the Neuroimaging Informatics Tools and Resources Clearinghouse (NITRC) http://www.nitrc.org/projects/gretna/ (2012).

Calhoun, V. D., Adali, T., Pearlson, G. D. & Pekar, J. J. A method for making group inferences from functional MRI data using independent component analysis. Hum. Brain Mapp. 14, 140–151 (2001).

Beckmann, C. F., DeLuca, M., Devlin, J. T. & Smith, S. M. Investigations into resting-state connectivity using independent component analysis. Philos. Trans. R. Soc. Lond. B Biol. Sci. 360, 1001–1013 (2005).

Jenkinson, M., Beckmann, C. F., Behrens, T. E., Woolrich, M. W. & Smith, S. M. FSL. Neuroimage 62, 782–790 (2012).

Bellec, P. et al. The pipeline system for Octave and Matlab (PSOM): a lightweight scripting framework and execution engine for scientific workflows. Front. Neuroinform 6, 7 (2012).

Yang, Z., LaConte, S., Weng, X. & Hu, X. Ranking and averaging independent component analysis by reproducibility (RAICAR). Hum. Brain Mapp. 29, 711–725 (2008).

Yang, Z. et al. Generalized RAICAR: discover homogeneous subject (sub)groups by reproducibility of their intrinsic connectivity networks. Neuroimage 63, 403–414 (2012).

Song, X. W. et al. REST: a toolkit for resting-state functional magnetic resonance imaging data processing. PLoS ONE 6, e25031 (2011).

Data Citations

Liu, X., Nan, W. Z., & Wang, K. Functional Connectomes Project International Neuroimaging Data-Sharing Initiative http://dx.doi.org/10.15387/fcp_indi.corr.ipcas4 (2014)

Zuo, X. N. Functional Connectomes Project International Neuroimaging Data-Sharing Initiative http://dx.doi.org/10.15387/fcp_indi.corr.ipcas7 (2014)

Castellanos, F. X., Di Martino, A., & Kelly, C. Functional Connectomes Project International Neuroimaging Data-Sharing Initiative http://dx.doi.org/10.15387/fcp_indi.corr.nyu1 (2014)

Chen, A. T., & Lei, X. Functional Connectomes Project International Neuroimaging Data-Sharing Initiative http://dx.doi.org/10.15387/fcp_indi.corr.swu3 (2014)

Chen, A. T., & Chen, J. T. Functional Connectomes Project International Neuroimaging Data-Sharing Initiative http://dx.doi.org/10.15387/fcp_indi.corr.swu2 (2014)

Zang, Y. F. Functional Connectomes Project International Neuroimaging Data-Sharing Initiative http://dx.doi.org/10.15387/fcp_indi.corr.bnu3 (2014)

Margulies, D. S., & Villringer, A. Functional Connectomes Project International Neuroimaging Data-Sharing Initiative http://dx.doi.org/10.15387/fcp_indi.corr.bmb1 (2014)

Jiang, T. Functional Connectomes Project International Neuroimaging Data-Sharing Initiative http://dx.doi.org/10.15387/fcp_indi.corr.iacas1 (2014)

Blautzik, J., & Meindl, T. Functional Connectomes Project International Neuroimaging Data-Sharing Initiative http://dx.doi.org/10.15387/fcp_indi.corr.lmu3 (2014)

He, Y., Lin, Q. X., Gong, G., & Xia, M. Functional Connectomes Project International Neuroimaging Data-Sharing Initiative http://dx.doi.org/10.15387/fcp_indi.corr.bnu1 (2014)

Liu, J., & Zhen, Z. L. Functional Connectomes Project International Neuroimaging Data-Sharing Initiative http://dx.doi.org/10.15387/fcp_indi.corr.bnu2 (2014)

Wei, G. X., Xu, T., Luo, J., & Zuo, X. N. Functional Connectomes Project International Neuroimaging Data-Sharing Initiative http://dx.doi.org/10.15387/fcp_indi.corr.ipcas8 (2014)

Lu, G. M., & Zhang, Z. Q. Functional Connectomes Project International Neuroimaging Data-Sharing Initiative http://dx.doi.org/10.15387/fcp_indi.corr.jhnu1 (2014)

Qiu, J., Wei, D. T., & Zhang, Q. Functional Connectomes Project International Neuroimaging Data-Sharing Initiative http://dx.doi.org/10.15387/fcp_indi.corr.swu4 (2014)

Milham, M. P., & Colcombe, S. J. Functional Connectomes Project International Neuroimaging Data-Sharing Initiative http://dx.doi.org/10.15387/fcp_indi.corr.nki1 (2014)

Li, H. J., Hou, X. H., Xu, Y., Jiang, Y., & Zuo, X. N. Functional Connectomes Project International Neuroimaging Data-Sharing Initiative http://dx.doi.org/10.15387/fcp_indi.corr.ipcas2 (2014)

Chen, B., Ge, Q., Zuo, X. N., & Weng, X. C. Functional Connectomes Project International Neuroimaging Data-Sharing Initiative http://dx.doi.org/10.15387/fcp_indi.corr.hnu1 (2014)

Hallquist, M., Paulsen, D., & Luna, B. Functional Connectomes Project International Neuroimaging Data-Sharing Initiative http://dx.doi.org/10.15387/fcp_indi.corr.upsm1 (2014)

Blautzik, J., & Meindl, T. Functional Connectomes Project International Neuroimaging Data-Sharing Initiative http://dx.doi.org/10.15387/fcp_indi.corr.lmu1 (2014)

Blautzik, J., & Meindl, T. Functional Connectomes Project International Neuroimaging Data-Sharing Initiative http://dx.doi.org/10.15387/fcp_indi.corr.lmu2 (2014)

Yang, Z. Functional Connectomes Project International Neuroimaging Data-Sharing Initiative http://dx.doi.org/10.15387/fcp_indi.corr.ipcas6 (2014)

LaConte, S., & Craddock, C. Functional Connectomes Project International Neuroimaging Data-Sharing Initiative http://dx.doi.org/10.15387/fcp_indi.corr.ibatrt1 (2014)

Zhao, K., Qu, F., Chen, Y., & Fu, X. Functional Connectomes Project International Neuroimaging Data-Sharing Initiative http://dx.doi.org/10.15387/fcp_indi.corr.ipcas1 (2014)

Liu, X., & Wang, K. Functional Connectomes Project International Neuroimaging Data-Sharing Initiative http://dx.doi.org/10.15387/fcp_indi.corr.ipcas3 (2014)

Di Martino, A., Castellanos, F. X., & Kelly, C. Functional Connectomes Project International Neuroimaging Data-Sharing Initiative http://dx.doi.org/10.15387/fcp_indi.corr.nyu2 (2014)

Anderson, J. S. Functional Connectomes Project International Neuroimaging Data-Sharing Initiative http://dx.doi.org/10.15387/fcp_indi.corr.utah1 (2014)

Chen, A. T., & Liu, Y. J. Functional Connectomes Project International Neuroimaging Data-Sharing Initiative http://dx.doi.org/10.15387/fcp_indi.corr.swu1 (2014)

Bellec, P. Functional Connectomes Project International Neuroimaging Data-Sharing Initiative http://dx.doi.org/10.15387/fcp_indi.corr.um1 (2014)

Anderson, J. S., Nielsen, J. A., & Ferguson, M. A. Functional Connectomes Project International Neuroimaging Data-Sharing Initiative http://dx.doi.org/10.15387/fcp_indi.corr.utah2 (2014)

Birn, R. M., Prabhakaran, V., & Meyerand, M. E. Functional Connectomes Project International Neuroimaging Data-Sharing Initiative http://dx.doi.org/10.15387/fcp_indi.corr.uwm1 (2014)

Mayer, A. R., & Calhoun, V. D. Functional Connectomes Project International Neuroimaging Data-Sharing Initiative http://dx.doi.org/10.15387/fcp_indi.corr.mrn1 (2014)

Acknowledgements

This work is partially supported by the National Basic Research Program (973) of China (2015CB351702, 2011CB707800, 2011CB302201, 2010CB833903), the Major Joint Fund for International Cooperation and Exchange of the National Natural Science Foundation (81220108014, 81020108022) and others from Natural Science Foundation of China (11204369, 81270023, 81171409, 81271553, 81422022, 81271652, 91132301, 81030027, 81227002, 81220108013, 31070900, 81025013, 31070987, 31328013, 81030028, 81225012, 31100808, 30800295, 31230031, 91132703, 31221003, 30770594, 31070905, 31371134, 91132301, 61075042, 31200794, 91132728, 31271079, 31170980, 81271477, 31070900, 31170983, 31271087), the National Social Science Foundation of China (11AZD119), the National Key Technologies R&D Program of China (2012BAI36B01, 2012BAI01B03), the National High Technology Program (863) of China (2008AA02Z405, 2014BAI04B05), the Key Research Program (KSZD-EW-TZ-002) of the Chinese Academy of Sciences, the NIH grants (BRAINS R01MH094639, R01MH081218, R01MH083246, R21MH084126, R01MH081218, R01MH083246, R01MH080243, K08MH092697, R24-HD050836, R21-NS064464-01A1, 3R21NS064464-01S1), the Stavros Niarchos Foundation and the Phyllis Green Randolph Cowen Endowment. Dr Xi-Nian Zuo acknowledges the Hundred Talents Program of the Chinese Academy of Sciences. Dr Michael P. Milham acknowledges partial support for FCP/INDI from an R01 supplement by National Institute on Drug Abuse (NIDA; PAR-12-204), as well as gifts from Joseph P. Healey, Phyllis Green and Randolph Cowen to the Child Mind Institute. Dr Jiang Qiu acknowledges the Program for New Century Excellent Talents in University (2011) by the Ministry of Education. Dr Antao Chen acknowledges the support from the Foundation for the Author of National Excellent Doctoral Dissertation of PR China (201107) and the New Century Excellent Talents in University (NCET-11-0698). 
Dr Qiyong Gong would like to acknowledge the Program for Changjiang Scholars and Innovative Research Team in University of China (IRT1272) and his Visiting Professorship appointment in the Department of Psychiatry at the School of Medicine, Yale University. The Department of Energy (DE-FG02-99ER62764) supported the Mind Research Network. Drs Xi-Nian Zuo and Michael P. Milham had full access to all the data in the study and take responsibility for the integrity of the data and the accuracy of the data analysis.

Author information

Contributions

Consortium Leadership: Michael P. Milham, Xi-Nian Zuo. COINS Database Organization: Vince Calhoun, William Courtney, Margaret King. Data Organization: Hao-Ming Dong, Ye He, Erica Ho, Xiao-Hui Hou, Dan Lurie, David O’Connor, Ting Xu, Ning Yang, Lei Zhang, Zhe Zhang, Xing-Ting Zhu. Quality Control Protocol Development: R. Cameron Craddock, Zarrar Shehzad. Data Analysis: Ting Xu, Dan Lurie, Zarrar Shehzad, Michael P. Milham, Xi-Nian Zuo. Data Contribution: Jeffrey S. Anderson, Pierre Bellec, Rasmus M. Birn, Bharat Biswal, Janusch Blautzik, John C.S. Breitner, Randy L. Buckner, Vince D. Calhoun, F. Xavier Castellanos, Antao Chen, Bing Chen, Jiangtao Chen, Xu Chen, Stanley J. Colcombe, R. Cameron Craddock, Adriana Di Martino, Xiaolan Fu, Qiyong Gong, Krzysztof J. Gorgolewski, Ying Han, Yong He, Avram Holmes, Jeremy Huckins, Tianzi Jiang, Yi Jiang, William Kelley, Clare Kelly, Stephen M. LaConte, Janet E. Lainhart, Xu Lei, Hui-Jie Li, Kaiming Li, Kuncheng Li, Qixiang Lin, Dongqiang Liu, Jia Liu, Xun Liu, Yijun Liu, Guangming Lu, Jie Lu, Beatriz Luna, Jing Luo, Ying Mao, Daniel S. Margulies, Andrew R. Mayer, Thomas Meindl, Mary E. Meyerand, Michael P. Milham, Weizhi Nan, Jared A. Nielsen, David Paulsen, Vivek Prabhakaran, Zhigang Qi, Jiang Qiu, Chunhong Shao, Weijun Tang, Arno Villringer, Huiling Wang, Kai Wang, Dongtao Wei, Gao-Xia Wei, Xu-Chu Weng, Xuehai Wu, Zhi Yang, Yu-Feng Zang, Qinglin Zhang, Zhiqiang Zhang, Ke Zhao, Zonglei Zhen, Yuan Zhou, Xi-Nian Zuo.

Ethics declarations

Competing interests

The authors declare no competing financial interests.

Rights and permissions

This work is licensed under a Creative Commons Attribution 4.0 International License. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in the credit line; if the material is not included under the Creative Commons license, users will need to obtain permission from the license holder to reproduce the material. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0

Metadata associated with this Data Descriptor is available at http://www.nature.com/sdata/ and is released under the CC0 waiver to maximize reuse.

About this article

Cite this article

Zuo, XN., Anderson, J., Bellec, P. et al. An open science resource for establishing reliability and reproducibility in functional connectomics. Sci Data 1, 140049 (2014). https://doi.org/10.1038/sdata.2014.49

DOI: https://doi.org/10.1038/sdata.2014.49
