Abstract

We developed a behavioral task in rats to assess the influence of risk of punishment on decision making. Male Long–Evans rats were given choices between pressing a lever to obtain a small, ‘safe’ food reward and a large food reward associated with risk of punishment (footshock). Each test session consisted of 5 blocks of 10 choice trials, with punishment risk increasing with each consecutive block (0, 25, 50, 75, 100%). Preference for the large, ‘risky’ reward declined with both increased probability and increased magnitude of punishment, and reward choice was not affected by the level of satiation or the order of risk presentation. Performance in this risky decision-making task was correlated with the degree to which the rats discounted the value of probabilistic rewards, but not delayed rewards. Finally, the acute effects of different doses of amphetamine and cocaine on risky decision making were assessed. Systemic amphetamine administration caused a dose-dependent decrease in choice of the large risky reward (ie, it made rats more risk averse). Cocaine did not cause a shift in reward choice, but instead impaired the rats’ sensitivity to changes in punishment risk. These results should prove useful for investigating neuropsychiatric disorders in which risk taking is a prominent feature, such as attention deficit/hyperactivity disorder and addiction.


INTRODUCTION

Few decisions with which individuals are faced on a daily basis are entirely without risk of adverse consequences. Even everyday decisions such as ‘what to do when faced with a yellow traffic signal while driving’ (accelerate or slow down) involve consideration of both the risks and rewards associated with each option. A better understanding of this type of decision making under conditions in which highly rewarding choices are accompanied by risks of adverse consequences (punishment) may have considerable implications for understanding psychopathological conditions characterized by alterations in risk-based decision making, such as attention deficit/hyperactivity disorder, major depressive disorder, schizophrenia, and addiction (Bechara et al, 2001; Drechsler et al, 2008; Ernst et al, 2003; Heerey et al, 2008; Taylor Tavares et al, 2007). Such risky decision making is commonly studied in human subjects (Lejuez et al, 2002; Leland and Paulus, 2005); however, there are few animal models that systematically assess the degree to which risk of punishment (rather than reward omission) influences reward-based choice (see Negus, 2005).

There were three main goals of the experiments described below: the first was to establish the performance parameters of a discrete-trials risky decision-making choice task, in which rats chose between a small, ‘safe’ reward and a large reward associated with a risk of punishment that varied within each test session. The second goal was to determine how choice performance in the risky decision-making task was related to performance in other decision-making tasks that assess the degree to which choices are influenced by reward probability and delays to reward delivery (and which are also commonly altered in the same neuropsychiatric conditions in which altered risky decision making is observed). The third goal was to determine how risky decision making is affected by acute administration of psychostimulant drugs that have been shown previously to affect delay- and probability-based decision making in both human and animal subjects (Cardinal et al, 2000; De Wit et al, 2002; DeVito et al, 2008; Evenden and Ryan, 1996; St Onge and Floresco, 2009; Stanis et al, 2008; Winstanley et al, 2007).

METHODS

Subjects

Male Long–Evans rats (n=28, weighing 275–300 g on arrival; Charles River Laboratories, Raleigh, NC) were individually housed and kept on a 12 h light/dark cycle (lights on at 0800 hours) with free access to food and water except as noted. During testing, rats were maintained at 85% of their free-feeding weight, with allowances for growth. All procedures were conducted in accordance with the Texas A&M University Laboratory Animal Care and Use Committee and NIH guidelines.

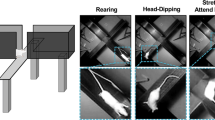

Apparatus

Testing took place in standard behavioral test chambers (Coulbourn Instruments, Whitehall, PA) housed within sound attenuating cubicles. Each chamber was equipped with a recessed food pellet delivery trough fitted with a photobeam to detect head entries and a 1.12 W lamp to illuminate the food trough. The trough, into which 45 mg grain-based food pellets (PJAI, Test Diet: Richmond, IN) were delivered, was located 2 cm above the floor in the center of the front wall. Two retractable levers were located to the left and right of the food delivery trough, 11 cm above the floor. A 1.12 W house light was mounted on the rear wall of the sound-attenuating cubicle. The floor of the test chamber was composed of steel rods connected to a shock generator (Coulbourn) that delivered scrambled footshocks. Locomotor activity was assessed throughout each session with an overhead infrared activity monitor. Test chambers were interfaced with a computer running Graphic State software (Coulbourn), which controlled task event delivery and data collection.

Behavioral Procedures

Shaping

Shaping procedures were identical to those used previously (Simon et al, 2007b). Following magazine training, rats were trained to press a single lever (either left or right, counterbalanced across groups; the other was retracted during this phase of training) to receive a single food pellet. After reaching a criterion of 50 lever presses in 30 min, rats were shaped to press the opposite lever under the same criterion. This was followed by further shaping sessions in which both levers were retracted and rats were shaped to nose poke into the food trough during simultaneous illumination of the trough and house lights. When a nose poke occurred, a single lever was extended (left or right), and a lever press resulted in immediate delivery of a single food pellet. Immediately following the lever press, the house and trough lights were extinguished and the lever was retracted. Rats were trained to a criterion of at least 30 presses of each lever in 60 min. This shaping procedure was conducted only once at the start of all behavioral testing.

Risky decision-making task

Test sessions were 60 min long and consisted of 5 blocks of 18 trials each. Each 40 s trial began with a 10 s illumination of the food trough and house lights. A nose poke into the food trough during this time extinguished the food trough light and triggered extension of either a single lever (forced choice trials) or of both levers simultaneously (choice trials). If the rats failed to nose poke within the 10 s time window, the lights were extinguished and the trial was scored as an omission.

A press on one lever (either left or right, balanced across animals) resulted in one food pellet (the small, safe reward) delivered immediately following the lever press. A press on the other lever resulted in immediate delivery of three food pellets (the large reward). However, selection of this lever was also accompanied immediately by a possible 1 s footshock contingent on a preset probability specific to each trial block. The large food pellet reward was delivered following every choice of the large reward lever, regardless of whether the footshock occurred. The intensity of the footshock varied by experiment (see below). With the exception of Experiment 1C, the probability of footshock accompanying the large reward was set at 0% during the first 18-trial block. In subsequent 18-trial blocks, the probability of footshock increased to 25, 50, 75, and 100%. Each 18-trial block began with 8 forced choice trials used to establish the punishment contingencies (4 for each lever), followed by 10 choice trials (Cardinal and Howes, 2005; Simon et al, 2007b; St Onge and Floresco, 2009). Once either lever was pressed, both levers were immediately retracted. Food delivery was accompanied by reillumination of both the food trough and house lights, which were extinguished on entry to the food trough to collect the food or after 10 s, whichever occurred sooner.
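The block structure described above can be sketched in code. This is a minimal illustration, not the Graphic State program used in the study: the names `build_session` and `outcome` are hypothetical, and the random ordering of forced-choice trials within a block is an assumption, since the text does not state their order.

```python
import random

# Task parameters taken from the description above.
SHOCK_PROBABILITIES = [0.0, 0.25, 0.50, 0.75, 1.0]  # one per block, ascending
FORCED_PER_LEVER = 4     # 8 forced-choice trials per block, 4 per lever
CHOICE_TRIALS = 10       # free-choice trials per block
TRIAL_DURATION_S = 40    # each trial lasts 40 s


def build_session(rng):
    """Return the list of trials for one ascending-risk session."""
    trials = []
    for block, p_shock in enumerate(SHOCK_PROBABILITIES, start=1):
        # Assumption: forced-choice trials are presented in random order.
        forced = ["small"] * FORCED_PER_LEVER + ["large"] * FORCED_PER_LEVER
        rng.shuffle(forced)
        for lever in forced:
            trials.append({"block": block, "type": "forced",
                           "lever": lever, "p_shock": p_shock})
        for _ in range(CHOICE_TRIALS):
            trials.append({"block": block, "type": "choice",
                           "lever": None, "p_shock": p_shock})
    return trials


def outcome(lever, p_shock, rng):
    """Return (pellets delivered, footshock delivered) for one lever press."""
    if lever == "small":
        return 1, False
    # The large reward is always delivered; only the shock is probabilistic.
    return 3, rng.random() < p_shock


session = build_session(random.Random(0))
total_minutes = len(session) * TRIAL_DURATION_S / 60
print(len(session), total_minutes)
```

The trial count and duration reproduce the stated session length: 5 blocks of 18 trials at 40 s each give 90 trials and 60 min.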

Delay-discounting task

A detailed description of this procedure is provided in Simon et al (2007b). Each 100 min session consisted of 5 blocks of 12 trials each. Each 100 s trial began with illumination of the food trough and house lights. A nose poke into the food trough during this time extinguished the food trough light and triggered extension of either a single lever (forced choice trials) or of both levers simultaneously (choice trials). Trials on which rats failed to nose poke during this window were scored as omissions.

Each block consisted of 2 forced choice trials followed by 10 choice trials. A press on one lever (either left or right, counterbalanced across subjects) resulted in one food pellet delivered immediately. A press on the other lever resulted in four food pellets delivered after a variable delay. Once either lever was pressed, both levers were retracted for the remainder of the trial. The delay duration increased with each block of trials (0 s, 10 s, 20 s, 40 s, 60 s; Cardinal et al, 2000; Evenden and Ryan, 1996; Simon et al, 2007b; Winstanley et al, 2003).

Probability-discounting task

The parameters of this task were identical to the risky decision-making task, with the only difference following selection of the large reward lever. During the first block of trials, the large reward was delivered with 100% probability. During each of the four subsequent blocks, the probability of large reward delivery was systematically decreased (75, 50, 25, 0%). The large reward was accompanied by neither punishment nor a delay period.
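One way to read the descending probabilities is in terms of expected pellets per choice. The sketch below is illustrative only: it assumes the large reward is three pellets and the small reward one pellet, as in the risky decision-making task, and the expected-value framing is not an analysis reported here.

```python
SMALL_PELLETS = 1   # assumed to match the risky decision-making task
LARGE_PELLETS = 3   # assumed to match the risky decision-making task
DELIVERY_PROBABILITIES = [1.0, 0.75, 0.50, 0.25, 0.0]  # blocks 1 to 5

# Expected pellets per choice of the large-reward lever in each block.
expected_large = [LARGE_PELLETS * p for p in DELIVERY_PROBABILITIES]

# Blocks in which the large lever yields more pellets on average.
large_is_better = [ev > SMALL_PELLETS for ev in expected_large]

for p, ev, better in zip(DELIVERY_PROBABILITIES, expected_large, large_is_better):
    print(f"p={p:.2f}  expected large={ev:.2f}  exceeds small: {better}")
```

Under these assumptions the large lever yields more food on average in the first three blocks, and the two options are equal at a delivery probability of one in three.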

Experiment 1: Establishing The Risky Decision-Making Task

Experiment 1A: Effects of shock intensity

To determine the optimal shock intensity for subsequent experiments, rats were divided into three groups and tested on the risky decision-making task for 20 sessions at shock intensities of 0.35 mA (n=12), 0.4 mA (n=10), or 0.45 mA (n=6). Percent choice of the large reward lever was averaged across the final five days of testing, during which performance was stable.
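The dependent measure described above (percent choice of the large reward lever, averaged over the final five sessions) can be computed as in the following sketch. The function name and the data are hypothetical. The sketch assumes that each session is already summarized as one percentage per trial block.

```python
def mean_over_final_sessions(sessions, n_final=5):
    """Average per-block percent choice over the last n_final sessions.

    sessions: list of sessions, each a list with one value per trial block
    (percent choice of the large-reward lever in that block).
    """
    final = sessions[-n_final:]
    n_blocks = len(final[0])
    return [sum(s[b] for s in final) / len(final) for b in range(n_blocks)]


# Hypothetical data for one rat: six sessions, five blocks each
# (0, 25, 50, 75, 100% risk of footshock).
sessions = [
    [100, 100, 100, 100, 100],
    [100, 90, 80, 70, 60],
    [100, 80, 60, 40, 20],
    [90, 80, 70, 60, 50],
    [100, 70, 60, 50, 40],
    [90, 80, 50, 40, 30],
]

print(mean_over_final_sessions(sessions))
```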

Experiment 1B: Effects of food satiation level

To assess the effects of alterations in food motivation on performance, rats trained at the 0.35 mA shock intensity were tested after being given access to freely available food in their home cage for either 1 or 24 h immediately before testing. This testing occurred over 4 days for each satiation level, with rats food restricted as normal (85% of free-feeding weight) on days 1 and 3, and with access to food on days 2 and 4. For each satiation level, the two satiation days and the two nonsatiation days were averaged together for analysis.

Experiment 1C: Reversal of punishment probabilities

To determine whether task performance was specific to ascending risks of punishment, rats trained with a shock intensity of 0.35 mA were tested for 10 sessions in a modified version of the task in which the order of risk presentations accompanying the large reward was reversed (100% in the first block of trials, followed by 75, 50, 25, and 0%). All other aspects of the task remained constant. Choice behavior was averaged across the final five sessions.

Experiment 2: Comparing Decision Making Across Tasks

Following testing in the risky decision-making task, rats tested with a shock intensity of 0.35 mA were tested in the delay-discounting task for 20 sessions, followed by testing in the probability-discounting task for 15 sessions. In each task, performance was assessed by averaging across the final five sessions (during which performance was stable).

Experiment 3: Acute Drug Treatments

The acute effects of d-amphetamine sulfate and cocaine hydrochloride on risky decision making were examined in rats tested at a shock intensity of 0.35 mA. Rats were tested following i.p. injections (1 ml/kg) of one of three doses of d-amphetamine sulfate (Sigma, St Louis, MO; 0.33, 1.0, 1.5 mg/kg) or 0.9% saline vehicle. Injections were administered before testing over a period of 6 days using the following schedule: saline, amphetamine dose 1, saline, amphetamine dose 2, saline, amphetamine dose 3. The order of drug doses was counterbalanced across subjects. This 6-day experimental procedure was later repeated (after stable performance was obtained) using cocaine hydrochloride (Drug Supply Program, NIDA; 5, 10, 15 mg/kg in 0.9% saline vehicle). All drug treatments were administered in the vivarium; between transportation to the test chambers and the initial 5 min of forced-choice trials, 10 min elapsed between drug administration and collection of choice preference data in the first block of trials.
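The 6-day injection schedule with counterbalanced dose order can be illustrated as follows. This is a sketch under stated assumptions: the text says only that dose order was counterbalanced, so cycling rats through all six possible orders, and the function names, are hypothetical.

```python
from collections import Counter
from itertools import permutations

AMPHETAMINE_DOSES_MG_KG = [0.33, 1.0, 1.5]


def six_day_schedule(dose_order):
    """Interleave a saline day before each drug day."""
    schedule = []
    for dose in dose_order:
        schedule.extend(["saline", dose])
    return schedule


# All six possible orders of the three doses.
orders = list(permutations(AMPHETAMINE_DOSES_MG_KG))


def assign_orders(n_rats):
    """Assumption: rats are cycled through the possible dose orders."""
    return [orders[i % len(orders)] for i in range(n_rats)]


assignments = assign_orders(12)
print(six_day_schedule(assignments[0]))
print(Counter(assignments))
```

With 12 rats and six possible orders, this assignment uses each order exactly twice.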

Timeline of Experiments

Rats in the 0.35 mA shock-intensity group from Experiment 1 were the subjects in Experiments 2 and 3. The progression of tasks and treatments was as follows: risky decision making (RDM)/amphetamine treatment/baseline RDM/delay-discounting/probability-discounting/baseline RDM/cocaine treatment/baseline RDM/RDM satiation tests/baseline RDM/RDM reversed probabilities.

Data Analysis

Raw data files were exported from Graphic State software and compiled using a custom macro written for Microsoft Excel (Dr. Jonathan Lifshitz, University of Kentucky). Statistical analyses were conducted in SPSS 16.0. Stable behavior was defined by the absence of either a main effect of session or an interaction between session and trial block in a repeated measures ANOVA over a five-session period (Cardinal et al, 2000; Simon et al, 2008b; Winstanley et al, 2006b). The effects of behavioral or pharmacological manipulations in all tasks were assessed using two-way ANOVAs, with trial block (ie, level of risk) as a repeated measures variable. Performance between tasks was compared using bivariate Pearson's correlations. In all cases, p values <0.05 were considered significant.
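The between-task comparison relies on Pearson's correlation coefficient. The analyses were run in SPSS; the sketch below only illustrates the computation, with hypothetical function names and hypothetical data. It also shows the standard conversion of r to a t statistic with n−2 degrees of freedom, which is how the significance of a correlation is usually evaluated.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length samples."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need two equal-length samples with at least 3 points")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_statistic(r, n):
    """t value for testing r against zero, with n - 2 degrees of freedom."""
    return r * math.sqrt((n - 2) / (1.0 - r * r))


# Hypothetical per-rat mean percent choice of the large reward in two tasks.
risky_task = [80.0, 35.0, 60.0, 90.0, 20.0, 55.0]
probability_task = [70.0, 40.0, 65.0, 85.0, 30.0, 45.0]

r = pearson_r(risky_task, probability_task)
t = t_statistic(r, len(risky_task))
print(round(r, 3), round(t, 3))
```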

RESULTS

Experiment 1: Establishing The Risky Decision-Making Task

Experiment 1A: Effects of shock intensity

Performance was stable for rats in all three shock-intensity groups in sessions 16–20. A two-way repeated measures ANOVA (shock intensity (0.35, 0.40, 0.45 mA) × risk (0, 25, 50, 75, 100%)) revealed main effects of both shock intensity (F(2,24)=12.31, p<0.001) and risk (F(4,96)=14.75, p<0.001), with choice of the large reward decreasing with both shock intensity and risk in a combined analysis of all three groups (Figure 1). There was no interaction between shock intensity and risk (F(8,96)=1.75, n.s.); however, LSD post hoc analyses revealed significant differences in reward choice between the three groups (p<0.01). Additional planned one-way repeated measures ANOVAs showed main effects of punishment risk in rats trained with the 0.35 mA shock (F(4,44)=7.20, p<0.001) and the 0.4 mA shock (F(4,32)=12.18, p<0.001), indicating that these groups discounted the large reward as a function of risk (ie, they were sensitive to risk of punishment). There was no effect of punishment risk for the 0.45 mA shock intensity (F(4,20)=1.00, n.s.), with this group demonstrating almost complete preference for the small reward across all blocks (Figure 1).

Additionally, there was no main effect of shock intensity on the number of choice trials completed during testing (mean % completed trials: 0.35 mA, 97.00; 0.40 mA, 96.62; 0.45 mA, 99.93; F(2,24)=1.52, n.s.), although there was a main effect of shock intensity on trials completed during forced choice trials with the large lever (with no option for the small, safe reward) when the punishment risk was 100% (mean % completed trials: 0.35 mA, 75.00; 0.40 mA, 53.89; 0.45 mA, 5.83; F(2,24)=14.54, p<0.001). LSD post hoc analyses conducted on these latter data revealed no difference in completed trials between the 0.35 and 0.40 mA groups (n.s.) but strong differences between each of these groups and the 0.45 mA group (p<0.001), indicating that rats would often forgo selection of the large reward altogether at the high shock intensity when there was no safe reward option.

There was considerable variance in reward preference within both the 0.35 and 0.4 mA shock groups, such that some rats demonstrated a strong preference for the large, risky reward whereas others preferred the small, safe reward (Figure 2). To analyze the source of this variance, linear regressions were used to assess the ability of initial reward preference (during the first, 0% risk block) to predict reward choice averaged across the other four blocks. Reward choice during the 0% block was correlated with choice during the next four blocks for both the 0.35 (r=0.86, p<0.001) and 0.40 mA (r=0.82, p<0.01) shock-intensity groups. For the 0.35 mA shock group, there were no correlations between reward preference (risk taking) and baseline body weight (r=0.33, n.s.) or shock reactivity as assessed by locomotion during the 1 s shock-delivery period (r=−0.15, n.s.). For the 0.4 mA shock group, there was a strong correlation between baseline body weight and reward preference (r=0.82, p<0.05) but not between reward preference and shock reactivity (r=−0.13, n.s.) or body weight and shock reactivity (r=−0.08, n.s.).

Based on the data from Experiment 1A, the 0.35 mA shock intensity was used for all subsequent experiments.

Experiment 1B: Effects of food satiation level

For the 1 h satiation condition (Figure 3a), a two-way repeated measures ANOVA showed a main effect of punishment risk (F(4,40)=7.72, p<0.001) but no main effect of satiation (F(1,40)=0.68, n.s.), indicating that 1 h of free feeding before testing did not influence choice behavior. There was also no effect of 1 h satiation on the number of trials omitted during testing (F(1,10)=1.61, n.s.).

The effects of 24 h of free feeding on choice performance were similar, in that there was a main effect of punishment risk (F(4,36)=5.82, p<0.01) but no main effect of satiation level (F(1,9)=3.19, n.s.), indicating that even substantial satiation caused no change in reward choice (Figure 3b). However, rats completed significantly fewer trials after 24 h of free feeding than under food-restricted conditions (mean percentage of completed trials; satiated, 67.80 vs restricted, 79.50; F(1,9)=9.48, p<0.05).

Experiment 1C: Reversal of punishment probabilities

After the order of presentation of punishment risks was reversed, there was still a main effect of risk (F(4,36)=7.59, p<0.001), with rats showing less preference for the large reward under conditions of greater risk. These data were consistent with data from Experiment 1A, as a within-subjects comparison of performance under ascending and descending orders of risk presentation revealed no main effects or interactions involving the order of risk presentation (F<1.45, n.s.) (Figure 4).

Figure 4. Effects of reversal of punishment risks. After the order of risk presentations was reversed, rats continued to demonstrate discounting of the large risky reward in a manner similar to that under ascending risk presentations. The ascending risk data are replotted from Figure 1 (0.35 mA shock intensity) for comparison.

Experiment 2: Comparing Decision Making Across Tasks

Risky decision-making task

Rats tested at 0.35 mA in Experiment 1 were retested in the risky decision-making task (using an ascending order of punishment risk) until behavior was stable. There was a main effect of risk (F(4,44)=4.72, p<0.01), indicating that rats discounted the value of the large reward as a function of risk of punishment (Figure 5a). Moreover, rats’ performance in this session was highly correlated with their previous performance in Experiment 1, indicating that risky decision making is stable across time (r=0.92, p<0.001).

Figure 5. Comparison of the risky decision-making task with other decision-making tasks. (a) Performance on the risky decision-making task with 0.35 mA shock intensity. (b) Performance on the delay-discounting task. (c) Performance on the probability-discounting task. Insets show scatter plots and regression lines for comparisons of performance on different tasks.

Delay-discounting task

Behavior was stable across sessions 16–20. There was a main effect of delay during these sessions (F(4,44)=32.36, p<0.001), indicating that rats discounted the large reward as a function of delay duration (Figure 5b).

Probability-discounting task

Behavior was stable across sessions 11–15. There was a main effect of reward probability during these sessions (F(4,44)=37.61, p<0.001), indicating that rats discounted the large reward as a function of the probability of its delivery (Figure 5c).

Relationships between tasks

A Pearson's correlation test revealed that choice of the large, risky reward in the risky decision-making task was not correlated with choice of the large, delayed reward in the delay-discounting task (r=0.23, n.s.). There was also no correlation between reward choice in the delay-discounting and probability-discounting tasks (r=0.23, n.s.). However, there was a significant correlation in reward choice between the risky decision-making and probability-discounting tasks (Figure 5, insets; r=0.59, p<0.05).

Experiment 3: Acute Drug Treatments

Amphetamine

There was no difference in performance across the three days of saline injections (F(2,22)=2.53, n.s.), so the mean of these days was used in the analysis. A two-way repeated measures ANOVA (risk × drug dose) revealed a main effect of punishment risk (F(4,44)=13.52, p<0.001), indicating that, across doses, rats decreased their choice of the large reward with increasing risk (Figure 6a). Most importantly, there was a main effect of drug dose (F(3,33)=3.87, p<0.05) such that rats became more risk averse with increasing doses of amphetamine (although the risk × drug interaction did not quite reach significance (F(12,132)=1.80, p=0.055)). Individual pairwise comparisons between saline and amphetamine conditions showed that the 1.5 mg/kg dose caused a significant decrease in preference for the large reward (p<0.05). In addition to its effects on reward choice, amphetamine also increased the number of omitted trials (F(3,33)=3.92, p<0.05), with omissions increasing as a function of dose (% completed choice trials: saline=98.66, 0.33 mg/kg=94.00, 1.0 mg/kg=94.34, 1.5 mg/kg=84.00). However, the effects of amphetamine on omissions appeared to be separate from its effects on reward choice, as there were no correlations between these two variables (r<0.35, n.s.). There was also no difference in shock reactivity (locomotion during the 1 s shock presentations) across drug doses (F(3,21)=0.12, n.s.), indicating that the observed behavioral changes were likely not a result of amphetamine-induced alterations in shock sensitivity.

Figure 6. Effects of pharmacological treatments on risky decision making. (a) Rats were tested under the influence of systemic 0.33, 1.0, and 1.5 mg/kg doses of amphetamine (AM). Amphetamine decreased preference for the large risky reward in a dose-dependent fashion, with the 1.5 mg/kg dose differing significantly from saline conditions (p<0.05). (b) Rats were tested under the influence of systemic 5, 10, and 15 mg/kg cocaine (CO). Rats exposed to cocaine at the 5 and 15 mg/kg doses failed to adjust reward choice as the risk of punishment increased.

Cocaine

There were no differences between the 3 days of saline injections (F(2,22)=2.94, n.s.), so these days were averaged for the analysis. A two-way repeated measures ANOVA revealed a main effect of punishment risk when all doses were combined into a single analysis (F(4,44)=4.00, p<0.05; Figure 6b). There was no main effect of drug treatment on reward choice (F(3,33)=0.39, n.s.); however, there was an interaction between drug dose and punishment risk (F(12,132)=2.26, p<0.05). The nature of this interaction was further investigated by performing one-way repeated measures ANOVAs assessing the effects of punishment risk on each drug dose individually. Simple main effects of punishment risk were evident under both saline and the 10 mg/kg dose of cocaine, such that the rats discounted the large reward as a function of risk (p<0.001). However, there was no main effect of risk when rats were given either the 5 mg/kg (F(4,44)=1.79, n.s.) or 15 mg/kg (F(4,44)=0.50, n.s.) doses of cocaine, indicating that risk of punishment failed to influence reward choice at these doses. As reward choice during the first block (0% footshock accompanying large reward) appeared to differ between groups, a repeated measures ANOVA was performed between doses for the first block only. There was no main effect of dose (F(3,33)=2.34, n.s.), and paired t-tests between saline and each dose individually revealed no differences in reward preference (n.s.), although the difference between saline and 5 mg/kg conditions approached significance (p=0.07). There was no main effect of drug dose on the number of trials omitted (F(3,33)=1.77, n.s.), and no main effect of drug dose on shock reactivity (F(3,9)=0.32, n.s.). There were also no correlations between reward choice and shock reactivity for any doses (r<0.61, n.s.).

DISCUSSION

We developed a task that assessed the degree to which risk of punishment (footshock) influenced reward choice. This risky decision-making task differs from previous conflict paradigms such as the Geller–Seifter conflict and the thirsty-rat conflict tasks (File et al, 2003) in that (1) rats are given a choice between the potentially punished response and a second, safe alternative response, and (2) the risky response was only accompanied by punishment according to a specific probability that shifted within each session. We found that rats reliably shifted preference from a large, risky reward to a small, safe reward as the risk of punishment accompanying the large reward increased. This preference was mediated by the magnitude of the punishment, as preference for the small safe reward increased with greater shock intensity. Importantly, rats were able to recognize changes in punishment risk within sessions, as reward preference shifted as risk was altered. During initial testing, the risky reward began with 0% probability of shock and systematically ascended to 100% probability. It may be argued that the performance curves observed were a result of a lack of motivation due to satiation or frustration as trial blocks progressed. However, reversing the order of risk presentations did not alter performance (ie, rats continued to show increased preference for the small safe reward with greater risks of punishment) and thus it is unlikely that the order of risk presentations was the cause of the observed pattern of reward preference.

Performance in the risky decision-making task showed large between-subjects variability (at least at lower shock intensities), but was stable across multiple test sessions over the course of several months and was not related to differences in body weight or shock reactivity in rats trained at the 0.35 mA shock intensity. In rats trained at the 0.4 mA shock intensity only, there was a correlation between body weight and risk-taking behavior such that heavier rats preferred the large, risky reward. This may have been due to the differences in body weight altering the experience of the footshock (eg, the shock was less aversive to heavier rats), although there was no correlation between weight and shock reactivity in the 0.4 mA group. It is also possible that rats with higher baseline weights were simply more highly motivated to obtain food rather than less influenced by the shock; however, this alternative explanation also seems unlikely, as rats showed no shift in reward preference after a 24-h satiation period (which caused an increase in body weight). This latter finding was somewhat surprising, as satiation can reduce food's motivational value, resulting in reduced choice of the devalued food (Johnson et al, 2009). Although we did observe an increase in overall omitted trials after satiation (indicating the effectiveness of this procedure), satiation did not significantly affect preference for the large, risky reward. This finding could indicate that choice behavior in this task (but not overall responding) is only minimally controlled by reinforcer value. Alternatively, it is possible that the long duration of testing experienced by the rats by that point in the experiment resulted in choice behavior being mediated by ‘stimulus-response’-type mechanisms (and thus less controlled by reinforcer value (Balleine and Dickinson, 1998)).

Further analysis of the individual variability demonstrated that risky decision-making performance was related to each subject's selection of the large reward during the initial block, even though the reward was accompanied by a 0% probability of footshock during this block. Despite this observation, the patterns of decision making observed here likely were not solely a function of response perseveration from baseline levels of responding. Rats were able to adjust their baseline responding throughout the 20 days of training (such that the relationship between baseline responding and overall responding shifted throughout training; NW Simon et al, unpublished observations). Additionally, a separate experiment showed that rats were able to adjust responding when the shock intensity was increased (Simon et al, 2008a). Although the fact that some rats failed to choose the large reward even under 0% risk conditions is somewhat surprising, it can likely be accounted for by ‘carryover’ effects from the previous day's training (ie, because in the final block of trials, they were always shocked when choosing the large reward, this experience likely biased their choices on the following day). In support of this possibility, choice of the large reward in the 0% risk block varied directly with shock intensity (Figure 1), even though no shocks were received in this block.

The individual differences in risky decision making observed in this task may mimic the diversity in propensity for risk taking observed in human subjects (DeVito et al, 2008; Gianotti et al, 2009; Lejuez et al, 2003; Reyna and Farley, 2006; Sobanski et al, 2008; Taylor Tavares et al, 2007; Weber et al, 2004) but, importantly, the use of an animal model allows a degree of experimental control that is not possible in human studies. Thus, any behavioral differences observed are more likely due to intrinsic rather than experiential factors. This variability should prove useful in future studies for identifying behavioral and neurobiological correlates of risky decision making.

Risky decision-making behavior was compared to behavior in two other reward-related decision-making tasks: delay discounting (commonly used to measure impulsive choice (Ainslie, 1975; Evenden and Ryan, 1996; Simon et al, 2007b; Winstanley et al, 2006a)) and probability discounting (characterized as an assessment of risky behavior (Cardinal and Howes, 2005; St Onge and Floresco, 2009)). Correlational evidence suggests that rats with a preference for the large, risky reward in the risky decision-making task also demonstrate preference for the large reward in the probability-discounting task (preference for the large, probabilistic reward over the small, certain reward). These data suggest that either the assessment of probabilities (of punishment and reward omission, respectively) or the integration of probabilistic information with reward value may be mediated by similar neurobiological mechanisms. Conversely, rats with a greater propensity for risky choice did not consistently demonstrate greater impulsive choice in the delay-discounting task (preference for the small, immediate reward over the large, delayed reward). Although this runs counter to some theoretical and experimental data suggesting similarities between the influence of delay and probability on reward value (see Hayden and Platt, 2007; Yi et al, 2006), other findings suggest that integration of delays with reward value requires a different set of neural substrates than probability assessment (Cardinal, 2006; Floresco et al, 2008; Kobayashi and Schultz, 2008; Mobini et al, 2000; Schultz et al, 2008). It could be argued that the correlation between performance in the risky decision-making and probability-discounting tasks was a result of response perseveration, as both tasks used the same levers to produce the small and large rewards. However, rats were tested in the delay-discounting task after the risky decision-making task, and demonstrated a sizable shift in behavior. Rats then shifted their behavior again when tested in the probability-discounting task. Were perseveration the only explanation for the similarity between the risky decision-making and probability-discounting tasks, the performance curves would be expected to follow similar trends for all three tasks.

Systemic amphetamine administration produced a dose-dependent increase in risk aversion, shifting rats’ preference toward the ‘safe’ reward. It is possible that this shift in behavior, which led to an overall reduction in food consumption, was a result of amphetamine-induced suppression of food intake (Wellman et al, 2009). However, neither 1- nor 24-h periods of free feeding before testing had an effect on reward choice, although 24-h free feeding did increase the number of trials omitted (an effect that was also observed under amphetamine). Additionally, when acute amphetamine was tested in the delay-discounting task, reward preference was shifted in the opposite direction, toward greater choice of the large, delayed reward, resulting in greater food consumption (Simon et al, 2007a). Thus, it seems unlikely that amphetamine altered reward choice simply by altering hunger levels or food motivation. Another possibility is that the increased preference for the small, safe reward induced by amphetamine was a result of hypersensitivity to footshock. This explanation seems unlikely for two reasons. First, amphetamine has been characterized as an analgesic agent (Connor et al, 2000; Drago et al, 1984). If pain sensitivity were indeed the critical mediator of reward selection in this task, rats given amphetamine would be expected to find the shock less aversive and shift their preference toward the large, risky reward as a result of a higher pain threshold. Second, amphetamine did not alter locomotion during the footshock, which can be used as a behavioral marker for pain/shock sensitivity (Chhatwal et al, 2004).

Interestingly, results similar to those found here with amphetamine have been obtained in human subjects with various psychopathological disorders. Children with ADHD and patients with frontotemporal dementia show reduced risky choices in the Cambridge gambling task when treated with methylphenidate, a monoamine reuptake inhibitor with effects similar to amphetamine (DeVito et al, 2008; Rahman et al, 2005). As methylphenidate is thought primarily to affect decision making through actions on prefrontal cortex (Berridge et al, 2006), a structure that has been implicated in risky decision making (Bechara et al, 2000; Clark et al, 2008; St Onge and Floresco, 2008), it is possible that alterations in prefrontal cortex activity are responsible for the changes in risk-taking behavior observed after administration of amphetamine (in rats) or methylphenidate (in humans).

The amphetamine-induced decrease in risk-taking behavior observed in this study contrasts with the amphetamine-induced increase in risky choice that St Onge and Floresco (2009) observed in rats tested in a probability-discounting task. Although both tasks involve assessment of probabilities (indeed, we observed that reward choice was correlated between these two tasks), it is possible that amphetamine affects these types of decision making in different ways. The risky decision-making task utilized in this study used probabilities of punishment rather than reward omission as the discounting factor associated with the large reward. The difference in amphetamine's effects may be a result of dopaminergic mediation of aversive states induced by expectation of footshock. The same mesolimbic dopaminergic structures implicated in reward (such as nucleus accumbens and ventral tegmental area) also appear to be involved in emotional reactions to aversive stimuli (Carlezon and Thomas, 2009; Liu et al, 2008; Setlow et al, 2003). Thus, it is possible that amphetamine-induced enhancements in dopamine transmission increase the ability of aversive stimuli to control behavior (rather than solely enhancing the influence of rewarding stimuli), which could explain the amphetamine-induced shift in reward choice away from the large, risky reward. This explanation is consistent with previous findings showing that acute amphetamine administration at doses similar to those used here increased the degree to which rats avoided making a response that produced an aversive conditioned stimulus previously associated with footshock (ie, amphetamine increased control over responding by the aversive conditioned stimulus (Killcross et al, 1997)).

Somewhat surprisingly, cocaine administration did not affect risky decision making in the same manner as amphetamine. Subjects given cocaine at relatively high doses, although not high enough to confound performance with excessive stereotypy (Wellman et al, 2002), no longer demonstrated a shift in reward choice with increasing risk of punishment. This may be a result of a cocaine-induced enhancement in response perseveration (ie, an inability to shift choice from the large reward to the smaller reward across the course of the session). Indeed, enhancements in perseverative behavior have been observed in human cocaine but not amphetamine abusers (Ersche et al, 2008). Interestingly, the dissociation between the effects of amphetamine and cocaine may be due in part to cocaine's relatively higher affinity for the serotonin (5-HT) transporter (White and Kalivas, 1998). It has been suggested that 5-HT signaling may be critically involved in prediction of punishment (Daw et al, 2002). As acute depletion of the 5-HT precursor tryptophan enhances predictions of punishment in human subjects (Cools et al, 2008), it is possible that enhancements in 5-HT neurotransmission by cocaine might impair such predictions, resulting in apparent insensitivity to risk of punishment.

Another possibility is that the previous exposure to amphetamine influenced the subjects’ response to subsequent acute cocaine administration. A previous regimen of chronic cocaine administration can produce tolerance to cocaine's acute effects on decision making (Winstanley et al, 2007), although chronic amphetamine fails to influence the acute effects of amphetamine in a similar manner (Stanis et al, 2008). Although this possibility cannot be entirely ruled out, it seems unlikely for behavioral tolerance to manifest itself given the short regimen of amphetamine administered to the subjects (three injections of ⩽1.5 mg/kg across 6 days), as tolerance to the effects of psychostimulants on cognition has only been demonstrated with considerably higher doses and much longer regimens (Dalley et al, 2005; Simon et al, 2007a; Winstanley et al, 2007).

An interesting aspect of performance during this experiment is the discrepancy in reward choice between cocaine- and saline-exposed trials during the first block (0% risk). Although there were no statistically significant differences between treatments, the lowest dose of cocaine was associated with a near-significant reduction in selection of the large reward during this block. If reliable, this maladaptive shift in decision making could be a result of an impaired ability to discriminate between the response levers, perhaps due to the anxiogenic properties of acute cocaine (Goeders, 1997).

Elevated risk taking is characteristic of many psychopathological disorders, and can lead to persisting financial, social, and medical problems. A better understanding of the behavioral and neural substrates underlying risky decision making will allow more efficacious treatment of patients affected adversely by excessive risk taking. The risky decision-making task described here offers a novel method of assessing the role of punishment risk in decision making. Given the large between-subjects variability and high test-retest reliability, this task may have great utility as a model of human risk-taking behavior, and for further investigation of its neurobiological substrates.

References

Ainslie G (1975). Specious reward: a behavioral theory of impulsiveness and impulse control. Psychol Bull 82: 463–496.

Balleine BW, Dickinson A (1998). Goal-directed instrumental action: contingency and incentive learning and their cortical substrates. Neuropharmacology 37: 407–419.

Bechara A, Dolan S, Denburg N, Hindes A, Anderson SW, Nathan PE (2001). Decision-making deficits, linked to dysfunctional ventromedial prefrontal cortex, revealed in alcohol and stimulant abusers. Neuropsychologia 39: 376–389.

Bechara A, Tranel D, Damasio H (2000). Characterization of the decision-making deficit of patients with ventromedial prefrontal cortex lesions. Brain 123: 2189–2202.

Berridge CW, Devilbiss DM, Andrzejewski ME, Arnsten AF, Kelley AE, Schmeichel B et al (2006). Methylphenidate preferentially increases catecholamine neurotransmission within the prefrontal cortex at low doses that enhance cognitive function. Biol Psychiatry 60: 1111–1120.

Cardinal R, Howes N (2005). Effects of lesions of the nucleus accumbens core on choice between small certain rewards and large uncertain rewards in rats. BMC Neurosci 6: 37.

Cardinal RN (2006). Neural systems implicated in delayed and probabilistic reinforcement. Neural Netw 19: 1277–1301.

Cardinal RN, Robbins TW, Everitt BJ (2000). The effects of d-amphetamine, chlordiazepoxide, α-flupenthixol and behavioural manipulations on choice of signalled and unsignalled delayed reinforcement in rats. Psychopharmacology (Berl) 152: 362–375.

Carlezon WA, Thomas MJ (2009). Biological substrates of reward and aversion: a nucleus accumbens activity hypothesis. Neuropharmacology 56: 122–132.

Chhatwal JP, Davis M, Maguschak KA, Ressler KJ (2004). Enhancing cannabinoid neurotransmission augments the extinction of conditioned fear. Neuropsychopharmacology 30: 516–524.

Clark L, Bechara A, Damasio H, Aitken MRF, Sahakian BJ, Robbins TW (2008). Differential effects of insular and ventromedial prefrontal cortex lesions on risky decision-making. Brain 131: 1311–1322.

Connor J, Makonnen E, Rostom A (2000). Comparison of analgesic effects of khat (Catha edulis Forsk) extract, d-amphetamine and ibuprofen in mice. J Pharm Pharmacol 52: 107–110.

Cools R, Robinson OJ, Sahakian B (2008). Acute tryptophan depletion in healthy volunteers enhances punishment prediction but does not affect reward prediction. Neuropsychopharmacology 33: 2291–2299.

Dalley JW, Laane K, Pena Y, Theobald DE, Everitt BJ, Robbins TW (2005). Attentional and motivational deficits in rats withdrawn from intravenous self-administration of cocaine or heroin. Psychopharmacology (Berl) 182: 579–587.

Daw ND, Kakade S, Dayan P (2002). Opponent interactions between serotonin and dopamine. Neural Netw 15: 603–616.

De Wit H, Enggasser JL, Richards JB (2002). Acute administration of d-amphetamine decreases impulsivity in healthy volunteers. Neuropsychopharmacology 27: 813–825.

DeVito EE, Blackwell AD, Kent L, Ersche KD, Clark L, Salmond CH et al (2008). The effects of methylphenidate on decision-making in attention-deficit/hyperactivity disorder. Biol Psychiatry 64: 636–639.

Drago F, Caccamo G, Continella G, Scapagnini U (1984). Amphetamine-induced analgesia does not involve brain opioids. Eur J Pharmacol 101: 267–269.

Drechsler R, Rizzo P, Steinhausen HC (2008). Decision-making on an explicit risk-taking task in preadolescents with attention-deficit/hyperactivity disorder. J Neural Transm 115: 201–209.

Ernst M, Kimes AS, London ED, Matochik JA, Eldreth D, Tata S et al (2003). Neural substrates of decision making in adults with attention deficit hyperactivity disorder. Am J Psychiatry 160: 1061–1070.

Ersche K, Roiser J, Robbins T, Sahakian B (2008). Chronic cocaine but not chronic amphetamine use is associated with perseverative responding in humans. Psychopharmacology (Berl) 197: 421–431.

Evenden JL, Ryan CN (1996). The pharmacology of impulsive behavior in rats: the effects of drugs on response choice with varying delays of reinforcement. Psychopharmacology (Berl) 128: 161–170.

File SE, Lippa AS, Beer B, Lippa MT (2003). Animal tests of anxiety. Curr Protoc Neurosci 8: 3.

Floresco SB, St Onge JR, Ghods-Sharifi S, Winstanley CA (2008). Cortico-limbic-striatal circuits subserving different forms of cost-benefit decision-making. Cogn Affect Behav Neurosci 8: 375–389.

Gianotti LRR, Knoch D, Faber PL, Lehmann D, Pascual-Marqui RD, Diezi C et al (2009). Tonic activity level in the right prefrontal cortex predicts individuals’ risk-taking. Psychol Sci 20: 33–38.

Goeders NE (1997). A neuroendocrine role in cocaine reinforcement. Psychoneuroendocrinology 22: 237–259.

Hayden BY, Platt ML (2007). Temporal discounting predicts risk sensitivity in Rhesus macaques. Curr Biol 17: 49–53.

Heerey EA, Bell-Warren KR, Gold JM (2008). Decision-making impairments in the context of intact reward sensitivity in schizophrenia. Biol Psychiatry 64: 62–69.

Johnson AW, Gallagher M, Holland PC (2009). The basolateral amygdala is critical to the expression of Pavlovian and instrumental outcome-specific reinforcer devaluation effects. J Neurosci 29: 696–704.

Killcross AS, Everitt BJ, Robbins TW (1997). Symmetrical effects of amphetamine and alpha-flupenthixol on conditioned punishment and conditioned reinforcement: contrasts with midazolam. Psychopharmacology (Berl) 129: 141–152.

Kobayashi S, Schultz W (2008). Influence of reward delays on responses of dopamine neurons. J Neurosci 28: 7837–7846.

Lejuez CW, Aklin WM, Zvolensky MJ, Pedulla CM (2003). Evaluation of the Balloon Analogue Risk Task (BART) as a predictor of adolescent real-world risk-taking behaviours. J Adolesc 26: 475–479.

Lejuez CW, Read JP, Kahler CW, Richards JB, Ramsey SE, Stuart GL et al (2002). Evaluation of risk taking: the Balloon Analogue Risk Task (BART). J Exp Psychol Appl 8: 75–84.

Leland DS, Paulus MP (2005). Increased risk-taking decision-making but not altered response to punishment in stimulant-using young adults. Drug Alcohol Depend 78: 83–90.

Liu Z-H, Shin R, Ikemoto S (2008). Dual role of medial A10 dopamine neurons in affective encoding. Neuropsychopharmacology 33: 3010–3020.

Mobini S, Chiang TJ, Ho MY, Bradshaw CM, Szabadi E (2000). Effects of central 5-hydroxytryptamine depletion on sensitivity to delayed and probabilistic reinforcement. Psychopharmacology (Berl) 152: 390–397.

Negus S (2005). Effects of punishment on choice between cocaine and food in rhesus monkeys. Psychopharmacology (Berl) 181: 244–252.

Rahman S, Robbins TW, Hodges JR, Mehta MA, Nestor PJ, Clark L et al (2005). Methylphenidate (‘Ritalin’) can ameliorate abnormal risk-taking behavior in the frontal variant of frontotemporal dementia. Neuropsychopharmacology 31: 651–658.

Reyna V, Farley F (2006). Risk and rationality in adolescent decision-making: implications for theory, practice, and public policy. Psychol Sci Public Interest 7: 1–44.

Schultz W, Preuschoff K, Camerer C, Hsu M, Fiorillo CD, Tobler PN et al (2008). Review. Explicit neural signals reflecting reward uncertainty. Philos Trans R Soc Lond B Biol Sci 363: 3801–3811.

Setlow B, Schoenbaum G, Gallagher M (2003). Neural encoding in ventral striatum during olfactory discrimination learning. Neuron 38: 625–636.

Simon NW, Busch TN, Richardson AJ, Walls KJ, Setlow B (2007a). Cocaine exposure and impulsive choice: a parametric analysis. Society for Neuroscience Abstracts 933: 4/WW24.

Simon NW, Gilbert RJ, Mayse JD, Setlow B (2008a). A rat model of risky decision-making: the role of dopamine in balancing risk and reward. Society for Neuroscience Abstracts 875: 1/RR63.

Simon NW, LaSarge CL, Montgomery KS, Williams MT, Mendez IA, Setlow B et al (2008b). Good things come to those who wait: attenuated discounting of delayed rewards in aged Fischer 344 rats. Neurobiol Aging e-pub ahead of print 25 July 2008.

Simon NW, Mendez IA, Setlow B (2007b). Cocaine exposure causes long term increases in impulsive choice. Behav Neurosci 121: 543–549.

Sobanski E, Sabljic D, Alm B, Skopp G, Kettler N, Mattern R et al (2008). Driving-related risks and impact of methylphenidate treatment on driving in adults with attention-deficit/hyperactivity disorder (ADHD). J Neural Transm 115: 347–356.

St Onge JR, Floresco SB (2008). Dissociable roles for different subregions of the rat prefrontal cortex in risk-based decision making. Society for Neuroscience Abstracts 88: 15/SS11.

St Onge JR, Floresco SB (2009). Dopaminergic modulation of risk-based decision making. Neuropsychopharmacology 34: 681–697.

Stanis J, Marquez Avila H, White M, Gulley J (2008). Dissociation between long-lasting behavioral sensitization to amphetamine and impulsive choice in rats performing a delay-discounting task. Psychopharmacology (Berl) 199: 539–548.

Taylor Tavares JV, Clark L, Cannon DM, Erickson K, Drevets WC, Sahakian BJ (2007). Distinct profiles of neurocognitive function in unmedicated unipolar depression and bipolar II depression. Biol Psychiatry 62: 917–924.

Weber EU, Shafir S, Blais AR (2004). Predicting risk sensitivity in humans and lower animals: risk as variance or coefficient of variation. Psychol Rev 111: 430–445.

Wellman PJ, Ho D, Cepeda-Benito A, Bellinger L, Nation JR (2002). Cocaine-induced hypophagia and hyperlocomotion in rats are attenuated by prazosin. Eur J Pharmacol 455: 117–126.

Wellman PJ, Davis KW, Clifford PS, Rothman RB, Blough BE (2009). Changes in feeding and locomotion induced by amphetamine analogs in rats. Drug Alcohol Depend 100: 234–239.

White FJ, Kalivas PW (1998). Neuroadaptations involved in amphetamine and cocaine addiction. Drug Alcohol Depend 51: 141–153.

Winstanley CA, Dalley JW, Theobald DE, Robbins TW (2003). Global 5-HT depletion attenuates the ability of amphetamine to decrease impulsive choice on a delay-discounting task in rats. Psychopharmacology (Berl) 170: 320–331.

Winstanley CA, Eagle DM, Robbins TW (2006a). Behavioral models of impulsivity in relation to ADHD: translation between clinical and preclinical studies. Clin Psychol Rev 26: 379–395.

Winstanley CA, LaPlant Q, Theobald DE, Green TA, Bachtell RK, Perrotti LI et al (2007). DeltaFosB induction in orbitofrontal cortex mediates tolerance to cocaine-induced cognitive dysfunction. J Neurosci 27: 10497–10507.

Winstanley CA, Theobald DEH, Dalley JW, Cardinal RN, Robbins TW (2006b). Double dissociation between serotonergic and dopaminergic modulation of medial prefrontal and orbitofrontal cortex during a test of impulsive choice. Cereb Cortex 16: 106–114.

Yi R, de la Piedad X, Bickel WK (2006). The combined effects of delay and probability in discounting. Behav Processes 73: 149–155.

Acknowledgements

We thank Bradley Crain for technical assistance and Dr Jim Grau, Dr Brian Doss, and Dr Aaron Taylor for statistical consulting and helpful comments on the article. This study was supported by the Department of Psychology at Texas A&M University and NIH DA018764 (BS), NIH AG029421 (JLB), and NIH DA023331 (NWS).

DISCLOSURES/CONFLICTS OF INTEREST

The authors declare that, except for income received from their primary employer, no financial support or compensation has been received from any individual or corporate entity over the past 3 years for research or professional service and there are no personal financial holdings that could be perceived as constituting a potential conflict of interest.

Cite this article

Simon, N., Gilbert, R., Mayse, J. et al. Balancing Risk and Reward: A Rat Model of Risky Decision Making. Neuropsychopharmacol 34, 2208–2217 (2009). https://doi.org/10.1038/npp.2009.48