Abstract

Sensorimotor synchronization (SMS) is the coordination of rhythmic movement with an external rhythm, ranging from finger tapping in time with a metronome to musical ensemble performance. An earlier review (Repp, 2005) covered tapping studies; two additional reviews (Repp, 2006a, b) focused on music performance and on rate limits of SMS, respectively. The present article supplements and extends these earlier reviews by surveying more recent research in what appears to be a burgeoning field. The article comprises four parts, dealing with (1) conventional tapping studies, (2) other forms of moving in synchrony with external rhythms (including dance and nonhuman animals’ synchronization abilities), (3) interpersonal synchronization (including musical ensemble performance), and (4) the neuroscience of SMS. It is evident that much new knowledge about SMS has been acquired in the last 7 years.

The purpose of the present article is to supplement and extend a previous review of sensorimotor synchronization (SMS) studies using finger-tapping paradigms (Repp, 2005; referred to hereafter as R05). Although R05 was reasonably comprehensive, it is rapidly becoming outdated as a flood of new research is pouring in. More than enough new material has accumulated now to warrant another review. However, this article does not replace R05; rather, it is intended as a continuation and expansion.

The research covered in the present review is diverse, reflecting a broadening of the field of investigation brought about by new ideas and technologies. However, all of the research concerns SMS, defined as the coordination of rhythmic movement with an external rhythm. For space reasons, the review cannot consider such closely related topics as self-paced rhythm production, synchronization of covert internal processes to external rhythms, perception of synchrony between external signals, intrapersonal synchronization of limb movements, temporal coordination of single or nonrhythmic actions with external events, or synchronization among nonanimate agents. An exception is made in Part 4, in which neuroscience studies of rhythm perception are reviewed briefly before the neuroscience of SMS is addressed. Because of the large number of studies reviewed here, references to relevant literature cited in R05 are kept to a minimum (see Footnote 1). However, some older studies not cited in R05 are included.

This review is divided into four parts. Part 1 is concerned with tapping studies, which continue to occupy an important place in SMS research, and in which the data are discrete time series. Part 2 reviews SMS studies in which various forms of continuous movement were carried out in synchrony with external rhythms. Part 3 covers the rapidly growing area of research on interpersonal synchronization. Part 4 surveys new findings in the neuroscience of SMS. Note that Parts 1–3 are concerned with behavioral studies only.

Tapping with an external rhythm

Finger tapping in synchrony with an external (usually computer-controlled) rhythm, often an isochronous metronome, remains a popular paradigm because of its simplicity and long history. An increasing number of researchers have equipment for conducting tapping studies, and some have written and made available special software for experimental control and data analysis (e.g., Elliott, Welchman, & Wing, 2009b; Finney, 2001a, b; Kim, Kaneshiro, & Berger, 2012). The basic mechanisms of SMS are still studied most conveniently with the finger-tapping paradigm, and the discrete nature of the taps makes the results particularly relevant to music performance. (Studies of the kinematics of rhythmic finger movement are reviewed later, in section 2.1.)

Asynchronies and their variability

Asynchronies (also called synchronization errors) are the basic data in any SMS study using tapping as the response. Asynchrony is defined as the difference between the time of a tap (the contact between the finger and a hard surface) and the time of the corresponding event onset in the external rhythm. The mean asynchrony is typically negative and, if so, is referred to as negative mean asynchrony (NMA). The standard deviation of asynchronies (SD asy) is an index of stability. Some studies instead employ circular statistics that yield a mean vector (angular deviation) and a circular variance (see Fisher, 1993); this approach is useful when synchronization is poor. The mean and the variability of intertap intervals (ITIs) are often reported as well, but they are of secondary importance in most SMS tasks. The tempo of the external rhythm, usually measured in terms of interonset interval (IOI) duration (or interbeat interval duration, in the case of nonisochronous rhythms or music), is an important independent variable.
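The measures defined above can be illustrated with a short computation. The following Python sketch is illustrative only: the tap times are hypothetical, the function names are assumptions of this example, and the circular summary uses the common convention of mapping one IOI onto a full circle (2π) and taking circular variance as 1 minus the mean resultant length.

```python
import math
import statistics

def asynchronies(tap_times, onset_times):
    """Asynchrony = tap time minus the corresponding stimulus onset time (ms)."""
    return [t - s for t, s in zip(tap_times, onset_times)]

def circular_summary(asyn, ioi):
    """Map asynchronies onto the unit circle (one IOI = 2*pi radians).

    Returns (mean angle in radians, mean resultant length R,
    circular variance defined here as 1 - R)."""
    angles = [2 * math.pi * a / ioi for a in asyn]
    c = sum(math.cos(x) for x in angles) / len(angles)
    s = sum(math.sin(x) for x in angles) / len(angles)
    r = math.hypot(c, s)
    return math.atan2(s, c), r, 1.0 - r

# Hypothetical data: isochronous metronome (IOI = 500 ms), taps slightly early.
ioi = 500
onsets = [i * ioi for i in range(8)]
taps = [-40, 470, 955, 1465, 1950, 2480, 2960, 3455]

asyn = asynchronies(taps, onsets)
mean_asy = statistics.mean(asyn)   # negative here, i.e., an NMA
sd_asy = statistics.stdev(asyn)    # SD asy, the index of stability
mean_angle, r, circ_var = circular_summary(asyn, ioi)

print(f"mean asynchrony = {mean_asy:.1f} ms, SD asy = {sd_asy:.1f} ms")
print(f"mean angle = {mean_angle:.3f} rad, circular variance = {circ_var:.4f}")
```

When synchronization is good, as in these invented data, the circular mean converted back to milliseconds is nearly identical to the linear mean; the two summaries diverge only when taps are scattered widely around the cycle, which is when the circular approach becomes useful.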

Development, enhancement, and impairment of SMS ability

SMS ability takes years to develop (see also section 2.5.1). A large study by McAuley, Jones, Holub, Johnston, and Miller (2006) was primarily concerned with changes in preferred (self-paced) tapping tempo across the life span (ages 4–95), but in an electronic appendix they reported median produced ITIs during synchronization with metronomes whose IOIs ranged from 150 to 1,709 ms. Although a match between the median ITI and IOI does not necessarily imply good synchronization, a mismatch does reflect poor synchronization. It is clear from these data that 4- and 5-year-olds did not synchronize well, if at all, whereas 6- and 7-year-olds performed much better, almost at the adult level. Elderly participants retained good synchronization ability. (See also the mention of Turgeon, Wing, & Taylor, 2011, in section 2.2.) In a study using visual metronomes with IOIs ranging from 500 to 2,000 ms, Kurgansky and Shupikova (2011) found children 7–8 years of age to be generally more variable than adults, and often unable to synchronize at the fastest tempo.

Van Noorden and De Bruyn (2009) reported an extensive developmental SMS study with 600 children ranging in age from 3 to 11 years. The children listened to familiar music played at five different tempi and, after watching an animated figure demonstrating the task of synchronizing with the musical beat by tapping with a stick on a drum, continued the task. The youngest children usually tapped at a rate of 2 Hz and did not adapt to the tempo of the music, but increasing adaptation was evident from 5 years and up. Synchronization performance, as measured by the circular variance of tapping, improved throughout the age range, especially between 3 and 7 years. The authors interpreted their results in terms of the resonance theory of van Noorden and Moelants (1999), suggesting that young children have a narrow resonance curve centered near 2 Hz, which enables them to synchronize only at their preferred tempo. The resonance curve broadens with increasing age, especially toward lower frequencies.

Among adults, variability (SD asy) is generally lower for highly trained musicians than for nonmusicians (Repp, 2010b; Repp & Doggett, 2007). Krause, Pollok, and Schnitzler (2010) reported variability of ITIs, which was lowest in drummers (about 2.5 % of the mean ITI of 800 ms), and lower in professional pianists than in amateur pianists, singers, and nonmusicians. For professional percussionists playing a rhythm on a drum kit in synchrony with a metronome, Fujii et al. (2011) found a mean SD asy of about 16 ms (1.6 %) when the metronome IOI was 1,000 ms, and an SD asy of about 10 ms (2 %) when the IOI was 500 ms. They found no difference in variability among the three limbs (two hands and one foot) involved, even though the limbs were required to move at different rates. When the IOI was 300 ms, the variability approached 4 %, probably due to the biomechanical difficulty of tapping the high-hat cymbal at twice that rate with the right hand.

Repp (2010b) found no difference in SD asy between musical amateurs and nonmusicians (who had no music training at all) who tapped in synchrony with short metronome sequences having IOIs of 500 ms. Likewise, Hove, Spivey, and Krumhansl (2010) did not find any effects of music training in a group of college students who synchronized with an auditory metronome and with various visual stimuli at several tempi (see section 1.4). However, Repp, London, and Keller (2013) did find lower ITI variability in percussionists than in other musicians synchronizing with nonisochronous rhythms. Thus, it seems that only a high level of rhythmic expertise reduces the variability of tapping in SMS. Bailey and Penhune (2010) found that early-trained musicians performed better than late-trained ones in a paced rhythm reproduction task, but this difference was significant in terms only of reproduction accuracy, not of synchronization. Pecenka and Keller (2009) measured participants’ auditory imagery ability in a pitch-matching task and found that it predicted mean absolute asynchrony and SD asy in tapping with a metronome that was either isochronous or gradually changed in tempo, even when musical experience was factored out.

Individuals with motor disorders have been found to be impaired in rhythm tasks, including SMS (see also section 2.4). For example, Whitall et al. (2008) found that 7-year-old children with developmental coordination disorder (DCD) performed more variably than a control group of normally developing children, who in turn were more variable than adults. The task was tapping with a metronome at four different tempi (IOI = 313–1,250 ms), using the two hands in alternation. The authors suggested that children with DCD are deficient in their auditory–motor coupling. A few seemingly quite different disorders also lead to impaired SMS performance. For example, 7- to 11-year-old children with speech/language impairments showed greater variability than did a control group in a SMS task, though only at a stimulus rate of 2 Hz (Corriveau & Goswami, 2009). Likewise, dyslexic undergraduates showed higher variability of ITIs than did controls when tapping in synchrony with a metronome at three tempi (Thomson, Fryer, Maltby, & Goswami, 2006). Impaired speech/language ability and impaired SMS might both be associated with cerebellar dysfunctions (Nicolson & Fawcett, 2011). In addition, individuals with bipolar disorder have also been found to show slightly more variable ITIs than do controls in a synchronization task (Bolbecker et al., 2011). Analysis of their continuation tapping according to the model of Wing and Kristofferson (1973) suggested a difference in internal timekeeper variability, which according to the authors might also originate from deficient cerebellar functions. These findings seem to indicate a common neural underpinning—cerebellar dysfunction—for the SMS impairment observed in various disorders, though deficiency in other relevant cortical–subcortical networks and/or in auditory–motor coupling within the brain cannot be excluded (see section 4.2).
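The decomposition of ITI variability into timekeeper and motor components that underlies such analyses can be sketched briefly. The Python example below is a minimal illustration under the standard assumptions of the two-level Wing and Kristofferson (1973) model (independent timekeeper intervals and motor delays), in which the lag-1 autocovariance of the ITIs equals the negative motor variance and the ITI variance equals the timekeeper variance plus twice the motor variance. The simulated data, parameter values, and function names are hypothetical and are not taken from any of the studies cited above.

```python
import random
import statistics

def autocovariance(x, lag):
    """Biased sample autocovariance of the sequence x at the given lag."""
    m = statistics.fmean(x)
    n = len(x)
    return sum((x[i] - m) * (x[i + lag] - m) for i in range(n - lag)) / n

def wing_kristofferson(itis):
    """Return (timekeeper variance, motor variance) estimated from ITIs.

    Model relations: gamma(1) = -motor_var,
                     gamma(0) = timekeeper_var + 2 * motor_var."""
    g0 = autocovariance(itis, 0)
    g1 = autocovariance(itis, 1)
    motor_var = -g1
    timekeeper_var = g0 + 2 * g1
    return timekeeper_var, motor_var

# Hypothetical continuation-tapping data generated from the model itself:
# ITI_n = T_n + M_(n+1) - M_n, with timekeeper SD = 20 ms, motor SD = 10 ms.
rng = random.Random(1)
n = 20000
motor = [rng.gauss(0, 10) for _ in range(n + 1)]
itis = [500 + rng.gauss(0, 20) + motor[i + 1] - motor[i] for i in range(n)]

tk_var, m_var = wing_kristofferson(itis)
print(f"timekeeper variance ~ {tk_var:.0f} ms^2, motor variance ~ {m_var:.0f} ms^2")
```

With real data the estimates can fall outside the range the model permits (e.g., a positive lag-1 autocovariance), which is usually taken as a sign that the model's assumptions do not hold for that series.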

The negative mean asynchrony

The NMA in tapping with simple metronomes is a ubiquitous but still not fully explained finding. A frequently encountered statement in recent articles is that the NMA proves that participants anticipate rather than react to pacing stimuli, but in fact any positive asynchrony shorter than the shortest possible reaction time (about 150 ms) is still evidence of anticipation; the tap need not literally precede the stimulus (a point already made by Mates, Radil, & Pöppel, 1992, p. 701). At the same time, anticipatory responses are reactions, but to preceding stimuli: They are reactions timed so as to coincide approximately with the next target stimulus.

Białuńska, Dalla Bella, and Jaśkowski (2011) addressed a prediction made by the sensory accumulation model (Aschersleben, 2002), according to which the NMA is due to faster accumulation of sensory evidence from auditory or visual pacing stimuli than from tactile and kinesthetic feedback from taps. Białuńska et al. argued that the rate of sensory accumulation should depend on stimulus intensity, and therefore expected the NMA to decrease as the intensity of the pacing stimuli was decreased. However, varying stimulus intensity had no effect on the NMA, while it did affect simple reaction times to unpredictable stimuli in a separate condition. The authors speculated that, in the SMS task, an effect of slower sensory accumulation was canceled by lowering of a sensory threshold, controlled by attentional processes.

An alternative hypothesis is that the NMA is due to perceptual underestimation of the metronome IOI (Wohlschläger & Koch, 2000). Extra tones or movements occurring in the IOIs between pacing stimuli reduce the NMA, and such subdivision presumably reduces the underestimation of the IOI. Flach (2005) found a positive correlation between the mean asynchrony during SMS and the mean ITI of continuation tapping after the metronome had stopped, suggesting that the degree of IOI underestimation was reflected in the tempo of continuation tapping. Zendel, Ross, and Fujioka (2011) also obtained results consistent with this hypothesis: When they varied metronome IOI duration while keeping the ITI constant (1:n tapping), the NMA of musicians decreased as the IOI decreased (i.e., the more subdivision tones occurred between taps), whereas varying the ITI while keeping the metronome IOI constant (n:1 tapping) had little effect on the NMA. Repp (2008a), however, found exactly the opposite in a group of highly trained musicians: The NMA decreased as the ITI decreased, but IOI duration had little or no effect on the NMA when the ITI was constant. The reason for this difference in results is not clear. Loehr and Palmer (2009) also reported relevant results that did not conform to the IOI underestimation hypothesis. Pianists had to play isochronous melodies on an electronic piano in synchrony with a metronome (IOI = 500 ms) while they (1) heard additional subdivision tones between the beats, (2) played additional notes without hearing them, or (3) played and heard additional notes. Contrary to expectations, the NMA increased following a passively heard subdivision tone and, to a lesser extent, following an actively played subdivision tone. These findings cast doubt on the hypothesis that subdivision reduces IOI underestimation.

The NMA tends to be smaller for musicians than for nonmusicians (see Footnote 2). Krause, Pollok, and Schnitzler (2010) compared the NMAs of drummers, professional pianists, amateur pianists, singers, and nonmusicians tapping with a metronome (IOI = 800 ms). Drummers showed the smallest NMA (about –20 ms), whereas others had NMAs in the vicinity of –50 ms. Fujii et al. (2011) reported that professional drummers playing three percussion instruments simultaneously in synchrony with a metronome had mean asynchronies ranging from –13 to 0 ms, depending on the instrument and tempo. A positive mean asynchrony was observed at a fast tempo close to the biomechanical limit of execution. Stoklasa, Liebermann, and Fischinger (2012) reported that musicians playing their own brass or string instrument in synchrony with a metronome showed a negligible NMA (–2 ms), unlike their tapping (–13 ms).

The NMA typically increases as the metronome IOI increases. For example, Fujii et al. (2011) found an increase in drummers’ NMAs as the metronome IOI increased from 300 to 1,000 ms. The NMA was significantly smaller for the right hand, which always moved at twice the rate of the metronome (2:1), than for the left hand and the right foot, each of which moved at half the metronome rate (1:2) in that study. Musicians performing a 1:1 tapping task also have been found to show an increase in their NMAs as IOI duration increased from 600 to 1,000 ms (Repp, 2008a) and from 260 to 1,560 ms (Zendel et al., 2011). However, according to other conditions in Repp’s (2008a) study, this increase was mainly due to the simultaneous increase in ITI duration, whereas Zendel et al. found little effect of the ITI variable. Thus, it remains unclear whether the NMA depends mainly on metronome tempo or on tapping tempo; both may play a role. Repp and Doggett (2007) examined 1:1 tapping at slow metronome tempi with IOIs ranging from 1,000 to 3,500 ms. Nonmusicians’ NMAs increased linearly as the IOI increased, whereas musicians’ NMAs were small and nearly constant (see also section 1.1.3 and note 2).

Boasson and Granot (2012) investigated the effect of pitch changes on SMS with isochronous melodies. Because performing musicians tend to accelerate when the pitch rises, it was predicted that tapping might accelerate as well. This was indeed found, with asynchronies becoming more negative during a pitch rise. Sugano, Keetels, and Vroomen (2012) used the NMA as an indicator of sensorimotor temporal recalibration. Participants tapped at a designated tempo while receiving auditory or visual feedback at one of two delays (see also section 1.3.1). Following exposure to the longer delay, participants showed an increased NMA when synchronizing with an auditory or visual pacing sequence. This adaptation effect was larger in the auditory than in the visual modality, and it transferred from the visual to the auditory modality, but not vice versa, possibly because rhythmic visual stimuli engage auditory processing, whereas the reverse may not occur.

When the task is to tap in synchrony with every tone of a nonisochronous cyclic rhythm, ITIs often exhibit characteristic distortions, similar to those observed in self-paced reproduction of the same rhythm. These distortions affect the asynchronies with individual tones in the rhythm cycle, such that a tap terminating an ITI that is too short will have a larger NMA than will a tap that terminates an ITI that is too long (Fraisse, 1966/2012). Recent examples can be found in Repp, London, and Keller (2005, 2008, 2011) for musicians tapping in synchrony with two- and three-interval rhythms. While rhythmic distortions affect local asynchronies, a global NMA tends to persist.

Variability

In 1:1 synchronization, the variability of asynchronies (SD asy) increases with both IOI and ITI duration (Repp, 2012; Zendel et al., 2011), but what kind of function describes this increase? Is it linear, reflecting some form of Weber’s law, or nonlinear? Linearity tends to hold over narrow ranges of IOIs (e.g., 500–950 ms; Lorås, Sigmundsson, Talcott, Öhberg, & Stensdotter, 2012), but wide ranges extending to long IOIs reveal nonlinearities. Repp and Doggett (2007) asked musicians and nonmusicians to tap with slow auditory metronomes whose IOIs ranged from 1,000 to 3,500 ms. For both groups, SD asy increased linearly up to 2,500 or 2,750 ms, and then increased more steeply, so that the complete functions had significant nonlinear (quadratic) trends, contrary to Weber’s law. The musicians in this study were also asked to tap in antiphase with the metronome, and their mean SD asy again increased nonlinearly with IOI duration, but with a shallower slope. This increasingly greater stability of antiphase than of in-phase tapping was attributed to subdivision of the metronome IOIs by the antiphase taps. In a follow-up study with musicians, Repp (2010a) added two further conditions, one requiring mental subdivision of IOIs while tapping in phase with the metronome, and the other requiring 2:1 tapping, which can be considered a conflation of in-phase and antiphase tapping. In all four conditions, SD asy increased smoothly but nonlinearly with IOI duration, and the differences among conditions were small initially but increased with IOI duration. Variability was highest for in-phase tapping (in which the instructions discouraged mental subdivision), slightly lower for in-phase tapping with mental subdivision, and clearly lower for 2:1 and antiphase tapping. When the 2:1 taps were separated into in-phase and antiphase taps, the antiphase taps were found to be less variable. 
This was attributed to anchoring of antiphase taps to the preceding tone, whereas in-phase taps seemed to be anchored more to the preceding antiphase tap than to the (more distant) preceding tone. This interpretation was supported by a constant high positive correlation between the asynchronies of successive antiphase and in-phase taps, whereas the correlation between the asynchronies of successive in-phase and antiphase taps was smaller and decreased as IOI duration increased.

A reduction in variability, previously termed the subdivision benefit (Repp, 2003), is also effected by computer-controlled physical subdivision of metronome IOIs. Unlike overt or covert subdivision by the participant, this kind of subdivision normally has no variability. Repp (2008a) confirmed that musicians’ mean SD asy decreases when the IOIs separating the metronome beats are subdivided into equal parts by one, two, or three additional tones, but only as long as those parts are at least 200–250 ms long. Zendel et al. (2011) likewise reported a subdivision benefit in musicians, and also noted a relative increase in variability for 1:3 as compared to 1:2 and 1:4 tapping (cf. Repp, 2003, 2007b).

On the basis of the distribution of asynchronies that underlies SD asy, it has been argued that a lower rate (upper IOI) limit exists for 1:1 SMS (see Repp, 2006b, for a review). The distribution has been observed to become increasingly bimodal when the IOI exceeds about 1,800 ms, due to the emergence of positive asynchronies that represent reactions to stimuli, rather than anticipations. However, when Repp and Doggett (2007) instructed participants always to predict the next stimulus and not to adopt a reactive strategy, positive asynchronies were only about as frequent as would be expected given a normal distribution with an increasingly large SD, up to IOIs of 3,500 ms. Despite greater variability, nonmusicians actually showed fewer positive asynchronies at long IOIs than did musicians, because their NMAs increased (as mentioned in section 1.1.1). No indication of an upper IOI limit for predictive SMS appeared in these data. However, it remains true that SMS becomes subjectively difficult when IOIs exceed about 1,800 ms (Bååth & Madison, 2012), and reacting to pacing tones is an effective strategy for reducing variability (while forsaking true SMS, which requires a strategy of prediction that may or may not lead to an NMA).
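The reasoning about how many positive asynchronies a normal distribution would produce can be made concrete. The Python sketch below assumes normally distributed asynchronies and uses hypothetical means and SDs (not values reported by Repp and Doggett, 2007); the function name is likewise an assumption of this example.

```python
from statistics import NormalDist

def expected_positive_proportion(mean_asy, sd_asy):
    """P(asynchrony > 0) if asynchronies are normal with the given mean and SD (ms)."""
    return 1.0 - NormalDist(mean_asy, sd_asy).cdf(0.0)

# Hypothetical values at a long IOI: a small NMA with moderate variability
# versus a large NMA with greater variability.
p_small_nma = expected_positive_proportion(mean_asy=-20.0, sd_asy=80.0)
p_large_nma = expected_positive_proportion(mean_asy=-120.0, sd_asy=100.0)

print(f"small NMA, SD 80 ms:  {p_small_nma:.3f}")
print(f"large NMA, SD 100 ms: {p_large_nma:.3f}")
```

The comparison shows why a group with higher variability can nevertheless produce fewer positive asynchronies: a sufficiently large NMA shifts the whole distribution away from zero. Positive asynchronies in excess of the proportion computed this way would be the signature of a reactive strategy.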

In a different vein, Keller, Ishihara, and Prinz (2011) asked whether the variability of tapping on one’s own body in synchrony with a metronome depends on the tactile sensitivity of the tapped-upon body part. Unexpectedly, SD asy, as well as movement amplitude and its variability, was larger for tapping on the (sensitive) left index fingertip than on the (less sensitive) left forearm. The authors attributed this to possible ambiguity about the source of sensory feedback, created by overlap of the neural representations of the two index fingers; an increase in the amplitude and timing variability of the tapping finger may facilitate disambiguation. Interestingly, SD asy was also higher when participants tapped on another person’s index finger than when they tapped on that person’s forearm. This may have been due to empathic experience of sensory feedback, as control conditions showed that the relative softness of the surface being tapped on was not responsible.

Error correction

Error correction is essential to SMS, even in tapping with an isochronous, unperturbed metronome. Two independent processes have been postulated: phase correction, a largely automatic process that does not affect the tempo of tapping, and period correction, which is usually intentional and changes the tempo. Phase correction may be based either on perceived asynchronies or on a mixture of phase resetting to the preceding stimulus and to the preceding tap, with much evidence favoring the second interpretation (see R05). Period correction may be based on a comparison of the perceived IOI duration with an internal timekeeper period (Mates, 1994) or on perceived asynchronies (Schulze, Cordes, & Vorberg, 2005).
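For orientation, the two processes can be written schematically. The notation below is an assumption of this sketch rather than a quotation from the cited models: A_n is the asynchrony of tap n, C_n the metronome IOI, T_n the timekeeper interval, M_n the motor delay, τ_n the timekeeper period, and α and β the phase and period correction parameters. The first line is the first-order linear phase correction recursion in the form commonly associated with Vorberg and Schulze (2002); the second and third lines are simplified renderings of the two period correction hypotheses described above (comparison of the timekeeper period with the IOI, and correction based on the asynchrony).

```latex
\begin{align}
A_{n+1} &= (1 - \alpha)\,A_n + T_n + M_{n+1} - M_n - C_n
  && \text{(phase correction)} \\
\tau_{n+1} &= \tau_n - \beta\,(\tau_n - C_n)
  && \text{(period correction, IOI-based)} \\
\tau_{n+1} &= \tau_n - \beta\,A_n
  && \text{(period correction, asynchrony-based)}
\end{align}
```

In the phase correction recursion, a fraction α of each asynchrony is removed on the next tap, so that without noise an initial asynchrony shrinks geometrically whenever 0 < α < 2; values above 1 correspond to overcorrection.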

Modeling and parameter estimation

Several two-parameter models of SMS have been proposed in the literature, with the parameters not necessarily representing phase and period correction directly. Jacoby and Repp (2012) analyzed the formal structure of four such models and showed that three (those of Hary & Moore, 1987; Michon, 1967; and Schulze et al., 2005) are mathematically equivalent instances of a general linear model, whereas one (Mates, 1994) is different and has a restricted parameter space because it contains a nonlinearity due to its assumption regarding period correction. Using newly collected data from musicians’ SMS with sequences containing tempo changes, Jacoby and Repp showed that the Mates model is contradicted by part of the data. The data, together with earlier results by Schulze et al. (2005), thus support the hypothesis that period correction is based on the most recent asynchrony. Jacoby and Repp also described and applied a new efficient method for estimating the model parameters, called bounded general least squares (bGLS), which relies on well-established matrix algebra formulations.

Using a more cumbersome iterative parameter estimation method for the Schulze et al. (2005) model, Repp and Keller (2008) simulated data obtained from musicians’ SMS with “adaptively timed” sequences. In this task, the computer controlling the metronome is programmed to carry out phase correction (and, if desired, period correction), which results in a pacing sequence whose timing is continuously modulated in response to the participant’s taps. Human and computer phase correction are additive (Vorberg, 2005). The computer’s phase correction parameter (α) can be set at positive or negative values, so that it either augments or cancels the human phase correction. Repp and Keller were able to show that the human α remained constant as the computer’s α varied between 0 and 1, even though this resulted in overcorrection (combined α > 1) when the computer’s α was large. (Repp, Keller, & Jacoby, 2012, replicated this interesting finding using the bGLS estimation method.) When the computer’s α was negative, however, SMS became rather unstable, and the simulations suggested that the human participants not only increased their α but also engaged period correction to counteract the computer’s uncooperative behavior. The adaptive-timing paradigm can be seen as a preliminary step toward an investigation of interpersonal synchronization (see section 3.1). Computer implementation of an elaborated adaptation and anticipation model (ADAM) has recently been reported by van der Steen and Keller (2012). A related study by Kelso, de Guzman, Reveley, and Tognoli (2009) is described in section 3.2.1.
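The additivity of human and computer phase correction in the adaptive-timing paradigm can be illustrated with a noise-free simulation. The Python sketch below is a deliberately simplified assumption: both agents apply purely linear phase correction to explicit event times, there is no timekeeper or motor noise and no period correction, and the function name and parameter values are hypothetical rather than those of Repp and Keller (2008).

```python
def simulate_adaptive_timing(alpha_human, alpha_computer, ioi=500.0,
                             initial_asynchrony=-50.0, n_events=20):
    """Noise-free sketch in which tapper and metronome both phase-correct.

    Returns the list of asynchronies (tap time minus tone time, in ms)."""
    tone, tap = 0.0, initial_asynchrony
    asyn = []
    for _ in range(n_events):
        a = tap - tone
        asyn.append(a)
        # The tapper shifts the next tap against the current asynchrony.
        tap = tap + ioi - alpha_human * a
        # The computer shifts the next tone toward the tap (for positive alpha).
        tone = tone + ioi + alpha_computer * a
    return asyn

# Computer passive: the asynchrony is halved on every tap.
passive = simulate_adaptive_timing(0.5, 0.0)
# Computer cooperative: combined alpha = 1.3, so the sign flips (overcorrection).
over = simulate_adaptive_timing(0.5, 0.8)
# Computer uncooperative: combined alpha = 0, so the initial error never shrinks.
cancel = simulate_adaptive_timing(0.5, -0.5)

print(passive[:4])
print(over[:4])
print(cancel[:4])
```

In this sketch each asynchrony equals the previous one multiplied by (1 − α_human − α_computer), which is the sense in which the two corrections add. A negative computer α that matches the human α leaves errors uncorrected, consistent with the instability reported for that condition.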

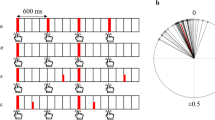

A simple way to estimate α is to introduce unpredictable local phase perturbations (phase shifts or event onset shifts; see Figs. 2 and 3 in R05) of different magnitudes in an isochronous metronome and to measure the participant’s phase correction response (PCR), which is the shift of the immediately following tap from its expected time point (see the present Fig. 1a). Linearly regressing the PCR on perturbation magnitude yields a PCR function whose slope is the estimate of α (see Fig. 1b). Note that this estimate is solely based on PCRs to perturbations, not on other intervening taps.
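The regression step just described can be sketched in a few lines of Python. The perturbation magnitudes and mean PCRs below are hypothetical numbers chosen for illustration (phase shifts of up to ±10 % of a 500-ms IOI), and `pcr_slope` is a name introduced here, not a function from any published analysis package.

```python
def pcr_slope(perturbations, pcrs):
    """Least-squares slope and intercept of the PCR regressed on perturbation size."""
    n = len(perturbations)
    mx = sum(perturbations) / n
    my = sum(pcrs) / n
    sxx = sum((x - mx) ** 2 for x in perturbations)
    sxy = sum((x - mx) * (y - my) for x, y in zip(perturbations, pcrs))
    slope = sxy / sxx
    return slope, my - slope * mx

# Hypothetical mean PCRs (ms) to phase shifts of -50 to +50 ms at IOI = 500 ms.
phase_shifts = [-50, -30, -10, 10, 30, 50]
mean_pcrs = [-31, -17, -7, 5, 19, 29]

alpha_hat, intercept = pcr_slope(phase_shifts, mean_pcrs)
print(f"estimated alpha = {alpha_hat:.2f}, intercept = {intercept:.2f} ms")
```

A slope of 1 would indicate complete correction on the tap following the perturbation, values between 0 and 1 partial correction, and values above 1 overcorrection.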

Fig. 1 (a) Schematic illustration of the phase correction response (PCR) to a negative phase shift (PS) in a tone sequence. S = stimulus, R = response. (b) The mean PCR as a linear function of PS magnitude, ranging here from −10 % to 10 % of the metronome baseline interonset interval (IOI), at four IOI durations. The slope of the PCR function is an estimate of α, and it increases with IOI. Small deviations from linearity are not significant in these data. (c) Alpha estimates as a function of IOI for one group of participants in three SMS conditions: metronome with phase shifts (PCR only), regular metronome (RM), and adaptively timed metronome (AT). The open circles represent the slopes from panel b. The filled circles are the corresponding bounded general least squares (bGLS; Jacoby & Repp, 2012) estimates; they are slightly smaller because bGLS regresses the PCR on the preceding (noisy) asynchrony, not on the PS. The α values for RM and AT are bGLS estimates, too. All α estimates increase rather linearly with IOI, but they differ significantly between conditions (PCR > RM > AT). Note the overcorrection (α > 1) in the PCR at IOI = 1,300 ms. Error bars represent ±1 standard error. The data in panels b and c are reproduced from “Quantifying Phase Correction in Sensorimotor Synchronization: Empirical Comparison of Different Paradigms and Estimation Methods,” by B. H. Repp, P. E. Keller, and N. Jacoby, 2012, Acta Psychologica, 139, p. 285. Copyright 2012 by Elsevier. Adapted with permission.

Repp, Keller, and Jacoby (2012) used the bGLS method as well as previously developed algorithms to estimate α for musically trained participants synchronizing with (1) an isochronous metronome, (2) a perturbed metronome containing local phase shifts, and (3) an adaptively timed metronome, each at four different base tempi (IOIs of 400–1,300 ms). The estimates obtained with the different algorithms showed reasonable agreement, and all of the α estimates increased with IOI duration (see also section 1.2.2). Remarkably, however, the PCR-based α estimates from Condition 2 above were significantly larger than the estimates from Condition 1, which in turn were larger than those from Condition 3 (see Fig. 1c). Application of the bGLS method to selected taps in Condition 2 revealed that α increased immediately following a phase shift (i.e., just for the PCR) and then dropped back to levels characteristic of unperturbed sequences. Moreover, the PCR-based α estimates were uncorrelated with estimates derived from Conditions 1 and 3, while those conditions were highly correlated. Interestingly, however, the lower α estimates derived from post-PCR taps in Condition 2 correlated with the PCR-based α estimates, not with the similarly low α estimates from the other two conditions. Although they are based on a small sample of participants, these recent results suggest that SMS with locally perturbed sequences engages a different phase correction process than does SMS with unperturbed or continuously perturbed sequences. The results also suggest that continuous timing modulations due to adaptive timing weaken sensorimotor coupling, as indexed by α. Similarly, Launay, Dean, and Bailes (2011) reported that phase correction is less vigorous in synchronization with continuously but randomly perturbed sequences than with isochronous sequences.

At a recent conference, Vorberg (2011) described a revised version of his linear phase correction model (Vorberg & Schulze, 2002) in which the internal timekeeper does not trigger responses (Wing & Kristofferson, 1973) but rather sets up temporal goal points for anticipated action effects (Drewing, Hennings, & Aschersleben, 2002). At the same meeting, Schulze, Schulte, and Vorberg (2011) reported applying a modified linear phase correction model to synchronization with nonisochronous rhythms (see also section 1.2.2). A new investigation of error correction in antiphase tapping was presented by Launay, Dean, and Bailes (2012).

A new model of SMS containing two linear and three nonlinear terms has been proposed by Laje, Bavassi, and Tagliazucchi (2013). These authors showed that their model predicts the behavioral response to all types of temporal perturbation (phase shift, event onset shift, and step change; see R05) reasonably well. However, their data were limited to a single tempo (IOI = 500 ms) and to small perturbations (<50 ms), knowledge of the type of perturbation was assumed, and the first tap following the perturbation (the PCR) was omitted from modeling. Therefore, the generality of this particular model and its superiority to linear models remain to be firmly established.

Although playing a melody on a piano is more complex than tapping, phase and period correction in synchronization with a metronome probably operate similarly. Loehr, Large, and Palmer (2011) compared linear and nonlinear models of SMS in piano playing: Pianists played a melody, composed of beat and subdivision levels, while being paced by a beat-level metronome that started out isochronously but then accelerated or decelerated, with linear changes in IOI duration. The pianists’ adaptation to the tempo changes was modeled using the linear model of Schulze et al. (2005) and a coupled nonlinear oscillator model (Large & Jones, 1999). The oscillator model was found to be superior.Footnote 3 The behavioral data showed better adaptation to decelerating than to accelerating metronomes, but in each case the asynchrony (expressed as relative phase) changed substantially, becoming negative during deceleration and positive during acceleration. Little evidence emerged of accurate prediction of tempo changes. Research by Pecenka and Keller (2011a) has indicated that prediction of gradual tempo changes is not automatic, as it is impaired in a dual-task situation that puts a load on working memory.

Taking a dynamic-systems approach, Stepp and Frank (2009) described a procedure for obtaining simultaneous estimates of coupling strength and of the amount of stochastic noise from asynchrony time series. They demonstrated the method in several simulations, but apparently it has not yet been applied in empirical studies.

All of the models mentioned so far assume that the noise in the data is white (serially uncorrelated). However, asynchrony time series often exhibit positive long-term serial dependencies, also known as fractal noise. Torre and Delignières (2008b) proposed a model of SMS in which an internal timekeeper generates fractal noise while phase correction remains linear (Vorberg & Schulze, 2002). Spectral power analyses of long series of taps synchronized with a metronome (IOI = 500 ms) confirmed the presence of fractal noise, and simulations using a fractal-noise algorithm adapted by Delignières, Torre, and Lemoine (2008) yielded a reasonable approximation to the statistical properties of the data. Delignières, Torre, and Lemoine (2009) extended the fractal-noise model to antiphase (“syncopated”) tapping by assuming that participants estimate the midpoints of IOIs and use them as SMS targets. This estimation process was modeled as another source of fractal noise, which further increased the serial dependencies of asynchronies. The statistical properties of long series of in-phase and antiphase taps collected with an IOI of 800 ms were approximated well by the new model. However, this fractal-noise modeling has not yet yielded a generally applicable algorithm for estimating α.

Methods that do not take fractal noise into account will underestimate α. However, due to participants’ fatigue and attentional fluctuations, fractal noise may be more evident in the long time series (typically more than 1,000 taps) that are required to carry out spectral power analyses. Fractal noise implies high positive autocorrelations of asynchronies that decrease gradually as the lag is increased. Autocorrelations can be assessed in short trials that are presented repeatedly. Repp (2011a, Fig. 8) displayed average autocorrelation functions for musicians tapping with a regular metronome having IOIs ranging from 400 to 1,300 ms, where each individual time series (trial) encompassed just 60 taps. The lag-1 autocorrelation was positive for short IOIs but decreased as IOI increased, and reached zero at the longest IOI, indicating that significant fractal noise was present only at relatively fast tempi.Footnote 4 Moreover, Lorås et al. (2012) found hardly any lag-1 autocorrelation for tapping in a triangular spatial pattern at IOIs ranging from 500 to 950 ms.
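Assessing autocorrelations in short, repeated trials amounts to computing the autocorrelation function separately for each trial and then averaging across trials. The following sketch shows one way this could be done. The function names and the toy input series are hypothetical and serve only to make the arithmetic checkable. No claim is made that this reproduces the exact procedure of the studies cited above.

```python
def autocorrelation(series, lag):
    """Sample autocorrelation of one trial at the given lag (lag >= 1)."""
    n = len(series)
    mean = sum(series) / n
    variance_sum = sum((value - mean) ** 2 for value in series)
    covariance_sum = sum(
        (series[i] - mean) * (series[i + lag] - mean) for i in range(n - lag)
    )
    return covariance_sum / variance_sum


def mean_autocorrelation_function(trials, max_lag):
    """Average the autocorrelation at lags 1..max_lag across short trials."""
    return [
        sum(autocorrelation(trial, lag) for trial in trials) / len(trials)
        for lag in range(1, max_lag + 1)
    ]


# Toy asynchrony series (ms): one drifting trial, one alternating trial.
toy_trials = [[1.0, 2.0, 3.0, 4.0, 5.0], [1.0, -1.0, 1.0, -1.0]]
toy_acf = mean_autocorrelation_function(toy_trials, max_lag=2)
print(toy_acf)
```

An autocorrelation function that stays positive and declines only gradually over lags would be the pattern associated with fractal noise, whereas values near zero at all lags would not.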

The phase correction response

A number of recent studies from author B.H.R.’s laboratory have focused on the PCR—the immediate, largely automatic response to a metronome perturbation, which occurs even when the perturbation is not perceived consciously (see R05). Repp (2010b) investigated effects of music training on the mean PCR, using short sequences with a base IOI of 500 ms and containing phase shifts within ±10 % of IOI.Footnote 5 Unexpectedly, the mean PCR of highly trained musicians was smaller than that of musical amateurs and nonmusicians, but this difference was found to be due to three musicians who had participated in many previous tapping experiments. When the musicians were retested later, after all had participated in various tapping experiments, the mean PCR of the previously inexperienced tappers had dropped significantly, whereas that of the experienced tappers was unchanged. These results suggest that sensorimotor coupling strength does not depend on music training, but rather decreases with task experience.Footnote 6 By reacting less vigorously to perturbations and thereby spreading out the phase correction over several taps, experienced tappers decrease the variability of their asynchronies and ITIs, thus achieving smoother performance. Unlike specific task experience, age (19–98 years) does not seem to affect the efficiency of phase correction in response to phase shifts (Turgeon et al., 2011).

Several studies—all with musicians as participants—have demonstrated that the mean PCR increases with the metronome IOI. Using phase perturbations whose magnitude increased proportionally with IOI, Repp (2008c) found the increase of the PCR to be linear between IOIs of 300 and 1,200 ms. Repp (2011c) replicated this result with phase shifts of fixed size that became imperceptible as IOI increased. In both studies, significant overcorrection (mean PCR > 100 %) was observed at the longest IOIs (see also Fig. 1c). In a separate experiment, Repp (2011c) found consistent overcorrection in the IOI range between 1 and 2 s, but the mean PCR increased only slightly with IOI duration, which suggests a nonlinear increase overall. It remains unclear why overcorrection occurs at slow tempi.Footnote 7 Overcorrection is problematic for the mixed-phase-resetting hypothesis (see R05 and beginning of section 1.2), as mere phase resetting should not result in overcorrection. Moreover, the fact that overcorrection occurs even in response to subliminal perturbations (Repp, 2011c), together with the suggestion of a special phase correction process for the PCR (Repp et al., 2012), raises the intriguing possibility that the PCR is driven nonlinearly by highly accurate subconscious registration of temporal expectancy violations.

When the task is to tap in synchrony with perturbed nonisochronous rhythms, a dependency of the mean PCR on the preceding IOI duration is less clear. Repp, London, and Keller (2008) used cyclic two- and three-interval rhythms with IOIs of 400 and 600 ms in all combinations and permutations, and introduced small event onset shifts at certain points. They found a significantly larger mean PCR near the end of the longer IOI in two-interval rhythms, but not in three-interval rhythms. Repp, London, and Keller (2011) used two-interval rhythms with various IOI durations (360–840 ms) but did not find a dependency of the mean PCR on preceding IOI duration, though the mean PCR was larger with some rhythms than with others. In both studies, the mean PCR was about as large as in SMS with isochronous rhythms. The PCR is clearly reduced, however, when the preceding IOI is shorter than 300 ms (Repp, 2011d). In Repp’s (2011d) study, musicians tapped in synchrony with cyclic two-interval rhythms in which the tone initiating the shorter IOI was shifted occasionally. As that IOI was increased from 100 to 300 ms, the mean PCR increased gradually from zero to full magnitude, reaching an asymptote already at 250 ms.Footnote 8 Additional experiments in which either one tap or one tone per rhythm cycle was omitted (1:2 or 2:1 tapping) demonstrated that, for this increase in the PCR to occur, it was necessary neither to tap with the shifted tone nor for the tap exhibiting the PCR to have a synchronization target (see also R05).

The PCR function, which relates PCR magnitude to perturbation magnitude, is usually strongly linear (with slope α) for perturbations within ±10 % of the IOI (see Fig. 1b).Footnote 9 When the range of perturbation magnitudes is extended up to ±50 % of the IOI, the PCR function is typically sigmoid-shaped, with a steeper slope in the center. In other words, participants immediately correct a smaller percentage of a large than of a small perturbation. Repp (2011c) investigated whether this sigmoid shape is governed by the absolute or the relative magnitude of the phase shifts. This required varying IOI duration while holding either absolute (ms) or relative (% of IOI) phase shift magnitude constant. The results did not provide a simple answer, even though the PCR functions were consistently sigmoid. However, he did find clear evidence of an asymmetry, with the mean PCR being smaller for negative (advances) than for positive (delays) phase shifts. An interesting secondary result was that large negative phase shifts, in which a metronome tone occurred much earlier than expected, elicited an “early PCR” of the tap that was intended to coincide with the unexpectedly shifted tone. The early PCR started to emerge when the phase shift exceeded –150 ms, and reached a magnitude of about –100 ms when the phase shift was as large as –400 ms (i.e., about 25 %). The PCR of the next tap was reduced accordingly.
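For small perturbations, estimating α from the PCR function reduces to fitting a straight line to mean PCRs plotted against phase shift magnitudes. The sketch below illustrates this with invented numbers. The phase shifts (within ±10 % of a 500-ms IOI) and the mean PCRs are hypothetical values chosen for clarity, not data from any of the studies reviewed here.

```python
def fit_line(x, y):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical data: phase shifts within +/-10 % of a 500-ms IOI (in ms)
# and the mean shift of the following tap, i.e., the mean PCR (in ms).
phase_shifts_ms = [-50, -30, -10, 10, 30, 50]
mean_pcr_ms = [-25, -15, -5, 5, 15, 25]

alpha_estimate, offset_ms = fit_line(phase_shifts_ms, mean_pcr_ms)
print(f"alpha = {alpha_estimate:.2f}, i.e., {100 * alpha_estimate:.0f} % corrected")
```

A linear fit of this kind is appropriate only in the central range. With perturbations approaching ±50 % of the IOI, the sigmoid shape described above would make a single slope a poor summary.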

Making use of the fact that a PCR can be elicited by a shift in intervening subdivision tones when taps are synchronized with “beat” tones (Repp, 2008b), Repp and Jendoubi (2009) demonstrated that the PCR is triggered by a violation of temporal expectations established by preceding context, not by the temporal location (relative phase) of the shifted event in the IOI. The relative phase of cyclically repeated subdivisions did affect the asynchronies of taps, but these adaptations to a particular rhythm were clearly distinct from (and sometimes contrary to) the PCR, which occurred as an immediate response to a deviation from the current rhythm, whatever it was. A novel finding was that omission of an expected subdivision tone occurring at 2/3 of the IOI elicited a large positive PCR (i.e., a delay of the subsequent tap). This was attributed to perceptual grouping of the subdivision tone with the following beat tone.

Repp (2009a, b) attempted to use the PCR as an indirect measure of auditory stream segregation (Bregman, 1990). Musicians tapped with every third tone (the “beat”) of an isochronous sequence, and perturbations were introduced either in the beats (Repp, 2009b) or in the intervening two subdivision tones (Repp, 2009a), which had a different pitch. The results of a perceptual perturbation detection task indicated that the beat and the subdivision tones were integrated into a single stream when their pitches were two semitones apart, but not when they were 48 or 46 semitones apart. Remarkably, however, pitch separation had no effect at all on the PCR to perturbed beats: Relative to a control condition consisting only of beat tones, intervening subdivision tones reduced the mean PCR (see Repp, 2008b) regardless of pitch separation. Similarly, at the slower of two beat tempi used (IOI = 600 ms), pitch separation had no effect on the mean PCR to shifted subdivision tones; only at the faster tempo (IOI = 450 ms) was the mean PCR reduced substantially when the pitch separation was large. The conclusion was that perceptually segregated streams often still function as integrated rhythms at a sensorimotor level.

The PCR has also been used to measure perceptual centers (P-centers; Morton, Marcus, & Frankish, 1976)—the time points at which auditory events are perceived to occur, especially in a rhythmic context. The traditional paradigm for measuring the relative P-centers of two sounds is to play them cyclically in alternation and to adjust the timing of one of them until the sequence sounds isochronous. Using a set of speech syllables, Villing, Repp, Ward, and Timoney (2011) compared results obtained with the traditional method to results from a new method based on the PCR. One syllable was played in an isochronous sequence, and another syllable was substituted from time to time, with a variety of timings (i.e., phase shifts). Participants tapped in synchrony with the sequence, and the PCR to the inserted syllable was measured. The relative P-center of the inserted syllable could be inferred from the x-axis intercept of the PCR function, where PCR = 0. The results were highly similar to those obtained with the traditional method, but the PCR method offers some advantages, such as requiring no perceptual judgments. One interesting secondary finding, seen most clearly in homogeneous control sequences, was that the mean PCR decreased as the acoustic complexity of the syllable increased, reflecting increased uncertainty about the temporal location of the P-center.
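The inference from the x-axis intercept can be made concrete with a small worked example. If the PCR function is approximated by a line, PCR = slope × shift + intercept, then the shift at which PCR = 0 is −intercept / slope. The sketch below applies this to invented values. The timings, the PCRs, and the sign convention (negative shifts meaning earlier presentation of the inserted syllable) are assumptions made for illustration only.

```python
def pcr_zero_crossing(shifts_ms, pcr_ms):
    """Fit a least-squares line to the PCR function; return (slope, x_intercept)."""
    n = len(shifts_ms)
    mean_x = sum(shifts_ms) / n
    mean_y = sum(pcr_ms) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(shifts_ms, pcr_ms))
    sxx = sum((a - mean_x) ** 2 for a in shifts_ms)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, -intercept / slope


# Hypothetical timings of the inserted syllable (ms) and mean PCRs (ms).
inserted_shifts_ms = [-60, -30, 0, 30, 60]
inserted_pcr_ms = [-24, -6, 12, 30, 48]

slope, relative_p_center_ms = pcr_zero_crossing(inserted_shifts_ms, inserted_pcr_ms)
print(f"slope = {slope:.2f}, PCR = 0 at a shift of {relative_p_center_ms:.1f} ms")
```

In this invented case, the inserted syllable would have to be presented about 20 ms early to elicit no PCR, which would be read as its P-center lying about 20 ms later than that of the context syllable.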

Anticipatory phase correction

Anticipatory phase correction (APC) requires that a participant have advance knowledge of an upcoming perturbation. Repp and Moseley (2012) showed that advance information about the position and direction of a phase shift in an isochronous sequence enables musicians to advance or delay the tap intended to coincide with the shifted tone, and thereby to reduce the asynchrony occurring at that point. The mean PCR to the residual asynchrony was enhanced slightly relative to the mean PCR to unexpected phase shifts. In a second experiment, Repp and Moseley varied the time available for APC by varying IOI duration (400–1,200 ms) and the time at which complete advance information was supplied (one or two beats before the phase shift). From the data, APC (analogous to PCR) functions could be derived whose slope was a measure of the effectiveness of APC, comparable to α. When the advance information was supplied late, the slope increased with IOI duration up to about 1 s, and only at that point matched the slope for APC following early information, which was much less affected by IOI duration. Thus, up to 1 s was required to prepare and execute an effective APC response. There was also a clear asymmetry, with APC being less effective for negative than for positive phase shifts. These data provided evidence that phase correction can be under intentional control, even though normally it is an automatic response. As there were no carryover effects onto the next tap, it seemed that APC did not engage period correction.

Some researchers committed to a dynamic-systems perspective have promoted the idea of “strong anticipation,”Footnote 10 which they tested in a task requiring tapping in synchrony with a chaotically timed visual sequence whose IOIs varied in the range between 1 and 1.5 s (Stephen, Stepp, Dixon, & Turvey, 2008).Footnote 11 The chaotic signal was assigned three different levels of fractal structure, which were found to be mirrored by the structure of the ITI time series. The authors interpreted this correlation as evidence for strong anticipation, described as a dynamic adaptation to the global statistical structure of the environment (see also Stepp & Turvey, 2010).Footnote 12 Stephen and Dixon (2011) further expanded this account by analyzing and describing it as an instance of multifractality, “a reactive, feedforward coordination of preexisting fluctuations of very many sizes across multiple time scales” (p. 167).

The movement trajectory of synchronized tapping

The tapping cycle consists of flexion and extension phases, typically with a motionless phase in between, occurring either at the contact point (dwell time) or at the maximal extension (hold time). Accordingly, two tapping styles, “legato” and “staccato,” can be distinguished, though this distinction is rarely made in the literature or considered in instructions to participants.

Krause, Pollok, and Schnitzler (2010) found that participants moved their finger faster when tapping with an auditory metronome than with a flashing circle, and that this difference occurred mainly in the flexion phase (downward movement) of the finger, presumably reflecting stronger sensorimotor coupling. Drummers moved their fingers significantly faster during both flexion and extension than did other musicians or nonmusicians.

Hove and Keller (2010) recorded participants’ finger motions as they tapped in synchrony with a flashing square or with a display of a finger exhibiting apparent up–down motion at one of two amplitudes. Tap amplitudes were higher with the high-amplitude than with the low-amplitude finger display, suggesting an involuntary influence of perception on action. Flexion times were shorter than extension times and depended less on tempo, indicating a ballistic movement toward the contact target. The lag-1 autocorrelation of the ITIs was zero in tapping with flashes but negative (though small) in tapping with finger displays, probably reflecting better phase correction in the latter case. Small negative correlations were also observed between the asynchrony of one tap and the amplitude, extension time, and dwell time of the following tap, but not with its flexion time or movement velocity. This suggested that phase correction was implemented through adjustments of tap amplitude, extension time, and dwell time. Torre and Balasubramaniam (2009) observed a similar negative correlation between asynchrony and the following extension phase when participants tapped without contact (“in the air”) in synchrony with an auditory metronome. While there was no dwell time here, a great slowing amounting to a hold phase occurred at the end of the extension phase, which resulted in a strong asymmetry of the extension and flexion phases. A strong negative correlation was observed between the degree of this asymmetry and the SD asy, indicating more stable synchronization when the movement was less sinusoidal.

Elliott, Welchman, and Wing (2009a) compared three forms of finger action in synchrony with an auditory metronome: standard tapping, isometric force pulses applied to a sensor while maintaining contact with it, and smooth quasi-sinusoidal pressure variation applied to the sensor. Phase correction in response to an unpredictable phase shift was significantly slower in the smooth movement than in the two more discrete movements, and SD asy was also greater. The authors concluded that discrete movements provide more salient sensory information on which phase correction is based.Footnote 13

Pseudo-synchronization, feedback, and feeling in control

Pseudo-synchronization and feedback

Pseudo-synchronization occurs when participants believe that they are synchronizing with an externally controlled rhythm, but actually they are controlling (“producing”) the tones with their own taps. In other words, the tones provide “auditory feedback” about the taps, in particular about their tempo and variability.Footnote 14 Fraisse and Voillaume (1971/2009) found that, when participants were switched suddenly from SMS to pseudo-SMS without being aware of it, they accelerated their tapping progressively in the belief that it was the metronome that was accelerating. Because asynchronies during pseudo-SMS are normally close to zero, it seems that participants tried to recuperate their typical NMA by vainly trying to get ahead of the metronome. Participants who were informed about the switch showed smaller but still substantial acceleration.

Flach (2005) did not replicate these dramatic findings, perhaps because he used only a single metronome tempo (IOI = 800 ms). He found only a small and abrupt acceleration of tapping immediately after the transition from SMS to pseudo-SMS, regardless of whether or not participants were informed about the transition. The magnitude of this change in the ITI was positively correlated with the mean asynchrony preceding the transition. Thus it can be interpreted as a PCR to the sudden change in IOI (equivalent to a negative phase shift) and asynchrony (from negative to zero), and the maintenance of a slightly faster tapping tempo during pseudo-SMS can be attributed to repeated PCRs to deviations from the expected NMA. Importantly, Flach also manipulated the feedback delay during pseudo-SMS. The change in ITI after the transition depended strongly and positively on the feedback delay: When the negative asynchrony caused by delayed feedback exceeded the pretransition NMA, participants slowed down rather than sped up after the transition.
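The interpretation in terms of repeated PCRs can be spelled out numerically. The sketch below is a simplified reading of that argument, not Flach's own analysis. It assumes that, during pseudo-SMS, the asynchrony is fixed at minus the feedback delay, and that each ITI is shortened by α times the deviation of that asynchrony from the participant's typical (negative) asynchrony. All parameter values are hypothetical.

```python
def pseudo_sms_iti(period_ms, alpha, typical_asynchrony_ms, feedback_delay_ms):
    """Predicted steady ITI during pseudo-SMS under simple linear phase correction.

    Assumptions: the tone follows each tap by feedback_delay_ms, so the
    asynchrony (tap minus tone) equals -feedback_delay_ms; the tapper
    corrects a proportion alpha of its deviation from the typical asynchrony.
    """
    observed_asynchrony = -feedback_delay_ms
    deviation = observed_asynchrony - typical_asynchrony_ms
    return period_ms - alpha * deviation


# Hypothetical values: 800-ms period, alpha = 0.5, typical asynchrony of -40 ms.
for delay in (0, 40, 80):
    iti = pseudo_sms_iti(800, 0.5, -40, delay)
    print(f"feedback delay {delay:2d} ms -> predicted ITI {iti:.0f} ms")
```

Under these assumptions, tapping speeds up when the feedback delay is smaller than the typical negative asynchrony, stays at the original period when the two are equal, and slows down when the delay is larger, in line with the pattern described above. The sketch does not address the progressive acceleration reported by Fraisse and Voillaume.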

In a similar study, Takano and Miyake (2007) also varied feedback delay, but in addition varied sequence IOIs over a wide range (450–1,800 ms). Furthermore, they introduced a secondary task, silent reading, that diverted attention from the tapping task. When the tempo was slow and the feedback delay was small or zero, some participants accelerated much more than others. This tendency was absent, however, when participants were engaged in the secondary task. The authors therefore considered the acceleration a cognitively controlled form of phase correction.

In another clever experiment, Flach (2005) attempted to dissociate participants’ knowledge of (not) being in control of the tones from their behavioral responses to the transition and to event onset shifts in its vicinity. He gave participants a pitch cue to the transition from SMS to pseudo-SMS, but the actual transition occurred two tones earlier or later. He also shifted the onset of one tone before or after the transition, and that tone either did or did not coincide with the actual transition. The results, while somewhat complex, essentially indicated that knowledge of control has no influence on behavior. This is an important finding, as it suggests that SMS (and phase correction in particular) is independent of whether the timing of an external rhythm is externally or self-controlled, and also independent of participants’ belief about the locus of control.Footnote 15

Flach’s (2005) findings are relevant to a recent study by Drewing (2013) in which participants tapped in a self-paced manner while hearing feedback tones contingent on the taps. Every other feedback tone was delayed by a fixed amount, so that isochronous tapping resulted in nonisochronous feedback. Drewing found that participants tapped nonisochronously, partially compensating for the feedback delay, which resulted in more nearly (but not perfectly) isochronous feedback tones. He interpreted these results as support for the hypothesis that self-paced tapping involves the timing of integrated sensory (including auditory) consequences of movements (Drewing et al., 2002). However, if feedback tones function like pacing tones and automatically engage phase correction, a nonisochronous tapping pattern just like the one found would be predicted, with the ITIs echoing the IOIs at a lag of 1. Therefore, even though Drewing’s hypothesis is plausible, his findings do not seem to provide unambiguous support for it.
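The alternative account can be checked with a small simulation. The sketch below assumes that every other feedback tone is delayed by a fixed amount, that each feedback tone is treated like a pacing tone, and that a proportion α of the resulting tap-tone asynchrony is corrected on the next tap. The noise-free setup, the function name, and all parameter values are hypothetical simplifications, not a reconstruction of Drewing's design or analysis.

```python
def simulate_delayed_feedback(period_ms, alpha, delay_ms, n_taps):
    """Self-paced tapping with every other feedback tone delayed.

    Assumption: linear phase correction is applied to the asynchrony between
    each tap and its own feedback tone (tap time minus tone time).
    """
    tap_times = [0.0]
    tone_times = []
    for n in range(n_taps):
        delay = delay_ms if n % 2 == 1 else 0.0
        tone_times.append(tap_times[n] + delay)
        asynchrony = tap_times[n] - tone_times[n]  # equals -delay
        tap_times.append(tap_times[n] + period_ms - alpha * asynchrony)
    itis = [later - earlier for earlier, later in zip(tap_times, tap_times[1:])]
    iois = [later - earlier for earlier, later in zip(tone_times, tone_times[1:])]
    return itis, iois


itis, iois = simulate_delayed_feedback(period_ms=500, alpha=0.5, delay_ms=60, n_taps=6)
print("ITIs:", itis)
print("IOIs:", iois)
```

With these values, the feedback IOIs alternate between 560 and 470 ms instead of the 560 and 440 ms that strictly isochronous tapping would produce, and each long IOI is followed by a long ITI. Phase correction alone thus yields partial compensation and a lag-1 echo of the IOIs in the ITIs, which is why the observed pattern does not discriminate between the two accounts.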

Feeling in control

Repp and Knoblich (2007) studied participants’ ability to discern whether or not they were in control of tones that they heard. Musicians tapped in synchrony with an isochronous metronome that at some point switched to feedback mode (pseudo-SMS), or the reverse. The participants knew how each trial started and had to report when the mode of control changed. The probability of detecting the change increased steeply over about six serial positions following the transition and then continued to increase more gradually until the end of the trial, where it was still well below 1. Sensorimotor cues—that is, the presence of variable asynchronies during SMS or their absence during pseudo-SMS—were important, for participants performed more poorly in a condition in which they listened passively to identical tone sequences without tapping, so that only perceptual cues of variability were available. It emerged that the transition from variability to constancy was much more difficult to detect than the opposite. This asymmetry was shown even more clearly by a group of nonmusicians (Knoblich & Repp, 2009). Participants also exhibited a bias to judge themselves as being in control. Knoblich and Repp then devised a simpler paradigm, in which nonmusicians listened to and then tried to reproduce a brief isochronous sound sequence. The reproduction taps were accompanied by sounds, which the participants had to judge as being externally controlled or self-controlled. In passive-listening conditions, the same sound sequences were played back and the same judgments had to be made. The participants were able to discriminate the two types of sequences better in the active than in the passive condition, and again showed a bias toward self-attribution of control. 
The difference in performance between the active and passive conditions was even more pronounced when the tempo of the externally controlled tones was varied somewhat during the reproduction phase; this increased sensorimotor cues to control (asynchronies) but decreased the salience of perceptual cues (IOI variability). Hauser et al. (2011) subsequently used this paradigm in a study of prodromal and diagnosed schizophrenics, who were expected, and indeed were found, to have an even stronger self-attribution bias than normal controls. The paradigm thus is potentially suitable for clinical assessments of the feeling of control, but it requires both an ability and a willingness to follow instructions precisely.Footnote 16

Many studies have been conducted to determine the effects of delayed or altered auditory feedback on speech production and music performance. However, Couchman, Beasley, and Pfordresher (2012) were the first to ask whether manipulated feedback affects participants’ feeling of being in control of their actions, and whether that feeling may in part be responsible for any impairment in performance. Using altered auditory feedback during performance of simple melodies on an electronic piano, they found that altered feedback did affect judgments of control as well as performance, and that disruption of performance was greatest when the feeling of control was ambiguous. However, on the basis of correlational analyses, the authors concluded that participants’ feelings of control did not affect their performance directly.

Tapping with composite auditory, visual, tactile, and multimodal rhythms

A composite auditory rhythm consists of several superimposed auditory sequences differing in pitch or some other acoustic attribute. In multimodal rhythms, sequences from different modalities are combined. In either case, the task may be to synchronize taps with all sequences simultaneously or to single out a target sequence and regard the other sequences as distractors.

Composite auditory rhythms

Keller and Repp (2008) investigated the effect of melodic pitch feedback on performance of a difficult SMS task. Musicians were required to tap in antiphase with a metronome using the two hands in alternation. The pitch of feedback tones controlled by the taps of each hand was manipulated to be the same as, close to, or far from the metronome pitch, with the higher feedback pitch being assigned to either the right or the left hand. The task was easiest when the feedback pitches were different from but close to the metronome pitch, which made the tones easier to integrate with the metronome into a composite melody/rhythm while maintaining a distinction between pacing and feedback tones. Performance was also better when the right hand generated a higher pitch than did the left hand, consistent with the “piano in the head” effect described by Lidji, Kolinsky, Lochy, Karnas, and Morais (2007).

Asynchronies occur naturally in the performance of musical chords. Intending to investigate whether such asynchronies facilitate synchronization, Hove, Keller, and Krumhansl (2007) required participants with or without musical training to tap in synchrony with isochronous sequences of two-tone complexes in which tones of different pitches were either synchronous or asynchronous by a small fixed amount (25–50 ms). The NMA (measured relative to the onset of the leading tone) was indeed smaller when there was an asynchrony. However, this can be interpreted as an attraction of taps toward the lagging tones, which functioned like distractors (see R05) if the leading tones are regarded as targets (though no targets had been designated by the instructions). A tendency to tap closer to the lower of the two tones was also observed. A perceptual task that estimated the P-centers of the chords (see section 1.2.2) yielded differences paralleling the changes in NMA, so that the results also could be described as participants synchronizing with P-centers. Participants with music training showed lower variability when tapping with asynchronous than with synchronous chords, whereas nonmusicians showed the opposite result.

Visual versus auditory rhythms

The variability of taps is typically greater when synchronizing with visual than with auditory metronomes, regardless of the musical training of participants (see R05). Although musical training is most relevant to SMS with auditory stimuli, Krause, Pollok, and Schnitzler (2010) recently found drummers and professional pianists to be significantly less variable than nonmusicians in tapping with visual stimuli (a flashing circle). Kurgansky (2008) studied SMS with similar visual metronomes covering a wide range of IOIs (500–2,200 ms), paying special attention to the initial “tuning-in” phase after the metronome started, for which he observed several different strategies. He also demonstrated a decrease in the lag-1 autocorrelation of asynchronies and a corresponding increase in α with increasing IOIs during steady-state SMS. Furthermore, he found an increase in positive asynchronies at long IOIs, though they remained shorter than reaction times to unpredictable visual stimuli. In a follow-up study, Kurgansky and Shupikova (2011) observed higher variability and less effective phase correction in 7- to 8-year-old children than in adults, while general performance characteristics were similar.

Lorås et al. (2012) compared tapping with auditory and visual (flashing) metronomes at IOIs ranging from 500 to 950 ms. Participants tapped here in a spatial pattern, moving around the corners of a virtual triangle. SD asy was much smaller with auditory than with visual pacing stimuli and, in each case, increased linearly with IOI. Surprisingly, the NMA for auditory stimuli was very small and independent of IOI, whereas the mean asynchrony for visual stimuli was positive and increased with IOI. By contrast, Sugano et al. (2012) found a substantial NMA with both types of pacing stimuli (IOI = 750 ms), though it was larger with auditory stimuli, whereas the variability was only slightly larger with visual than with auditory stimuli.

Static visual metronomes are difficult to synchronize with when their IOIs get shorter than 500 ms (Repp, 2003). It was long suspected that the critical IOI duration (and variability) might be lower for moving visual stimuli. This was first demonstrated by Hove and Keller (2010), who compared SMS with a flashing square to SMS with alternating images of a finger in raised and lowered positions, exhibiting apparent movement. Subsequently, Hove et al. (2010) and Ruspantini, D’Ausilio, Mäki, and Ilmoniemi (2011) reported results implying lower critical IOI durations for a bar or a finger exhibiting real up–down movement than for flashes. Moreover, Hove et al. (2010) found that an upright finger whose motion was compatible with a participant’s finger motion yielded better performance than an inverted finger that moved up when the participant’s finger moved down. However, none of these moving visual stimuli yielded SMS performance approaching that with auditory stimuli. Iversen, Patel, Nicodemus, and Emmorey (unpublished) reported that a video of a bouncing ball yielded SMS variability (SD asy) close to that observed with an auditory metronome, but Hove, Iversen, Zhang, and Repp (2013) still found a significant difference in favor of the latter. Even more effective visual stimuli for SMS than a bouncing ball may yet be found.

When participants synchronize with a target sequence of visual flashes that is accompanied by an auditory distractor sequence, their taps veer in the direction of the distractor tones and react to perturbations in them (see R05). By contrast, visual distractors have hardly any effect on synchronization with auditory targets. Because some of these earlier results could have been due to misperception of the timing of visual stimuli when they occurred in the vicinity of auditory stimuli (“temporal ventriloquism”), Kato and Konishi (2006) presented target and distractor sequences in antiphase, roughly 500 ms apart, which made perceptual interactions unlikely. Nevertheless, temporal jittering of the auditory distractor sequence greatly increased SD asy for the visual target, whereas jittering of the visual distractor sequence barely affected SD asy for the auditory target, thus replicating the earlier results.

Hove et al. (2013) pitted a bouncing ball against an auditory metronome in a target–distractor paradigm, varying the phase difference between the two sequences. For a group of musicians, auditory distractors tended to attract taps more than did visual distractors. A group of visual experts (video gamers and ball players), however, showed the opposite pattern, even though they synchronized better with unimodal auditory than with visual sequences, as did the musicians. Overall, the bouncing ball proved to be an effective competitor for an auditory metronome.

Multimodal rhythms

To compare SMS with unimodal and bimodal stimuli, Wing, Doumas, and Welchman (2010) presented tones and haptic stimuli (passive movements of a nontapping finger) individually or simultaneously. As would be predicted by a model of optimal multisensory integration (Ernst & Bülthoff, 2004), SD asy was lower in the bimodal condition than in either of the unimodal conditions, which exhibited similar variability. Adding temporal jitter to the auditory metronome increased variability much more in the unimodal auditory than in the bimodal condition, because in the latter condition participants relied in part on the unperturbed tactile stimuli. Elliott, Wing, and Welchman (2011) tested elderly participants (63–80 years) in a similar paradigm in which the phase offset between the modalities was also varied. While these participants showed greater variability than a younger comparison group, they reacted similarly to bimodal stimuli, suggesting intact multisensory integration. Elliott, Wing, and Welchman (2010) extended the paradigm to three modalities, using auditory, tactile, and visual (flashing) metronomes. All three pairwise bimodal combinations were presented, as well as unimodal conditions. In the bimodal conditions, two degrees of jitter were applied to the modality with the lower unimodal variability (auditory < tactile < visual). An optimal-integration model predicted the results for isochronous and lightly jittered conditions well, but when jitter was high, variability was larger than predicted in two of the three bimodal conditions, albeit still lower than in the relevant unimodal jittered condition. Thus, participants were able to avoid some of the effect of the jitter by relying more on the isochronous stimuli in the other modality, but not as effectively as would be predicted on the basis of optimal integration. This deviation from predictions was attributed to jitter-generated asynchronies between bimodal stimuli, whose magnitude may often have exceeded the optimal sensory integration window.
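The logic of an optimal-integration prediction can be illustrated with the textbook maximum-likelihood rule for combining independent Gaussian cues, in which each cue is weighted by its reliability (the inverse of its variance). The following is a minimal sketch of that general rule only. It is not the specific model fitted in the studies above, it ignores motor and timekeeper variance, and the function name and SD values are hypothetical.

```python
import math

def integrate(sds):
    """Reliability-weighted combination of independent Gaussian cues.

    sds: standard deviations (ms) of the unimodal timing estimates.
    Returns (weights, combined_sd).
    """
    reliabilities = [1.0 / sd ** 2 for sd in sds]
    total = sum(reliabilities)
    weights = [r / total for r in reliabilities]
    combined_sd = math.sqrt(1.0 / total)
    return weights, combined_sd

# Hypothetical case 1: two modalities with equal unimodal variability.
weights_equal, sd_equal = integrate([20.0, 20.0])

# Hypothetical case 2: jitter doubles the SD of the first modality,
# so the rule shifts weight toward the unperturbed second modality.
weights_jitter, sd_jitter = integrate([40.0, 20.0])

print(weights_equal, round(sd_equal, 2))
print(weights_jitter, round(sd_jitter, 2))
```

Under this rule the combined SD is never larger than the smaller unimodal SD, which is why variability above the prediction in the high-jitter conditions counts as a departure from optimal integration.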

Tapping with a metrical beat

In this section, we review studies in which tapping was used primarily to indicate the most salient beat (tactus) of a rhythm. This task can be seen as a mixture of synchronized and self-paced tapping. In a nonisochronous rhythm, common in music, the beat may not always be marked explicitly by event onsets in the stimulus; such a rhythm is called syncopated. In general, the beat of a rhythm is never represented unambiguously in the sound pattern, but has to be determined by the participant, by the experimenter’s instructions, by preceding context, or by music notation (time signature). While taps must always be temporally coordinated with the external rhythm, they are synchronized with an internal periodic process that marks the beat. Consequently, taps are also synchronized with external events that happen to coincide with the beat.

Tapping with induced or imposed beats

Snyder, Hannon, Large, and Christiansen (2006) presented isochronous melodies with pitch patterns that favored either a 2–2–3 or a 3–2–2 grouping of notes—hence, a nonisochronous beat. Participants were required to tap in synchrony with a 2–2–3 or 3–2–2 drumbeat that initially accompanied the melodies, and then to continue tapping the same beat pattern, in synchrony with the melody if it continued. Participants systematically distorted the 2:3 interval ratio in the direction of 1:2, which affected the asynchronies (see section 1.1.1). Ratio production was more accurate, but SD asy was larger, in the 3–2–2 than in the 2–2–3 tapping pattern. A mismatch of melodic and drumbeat patterns increased variability, but only when 3–2–2 was the pattern being tapped. After the drumbeat stopped, the variability of ITIs was greater when the melody continued than when it did not, probably due to the phase correction required to stay in synchrony.

Fitch and Rosenfeld (2007) used nonisochronous rhythms varying in degree of syncopation. Participants had to tap along with an isochronous induction beat and then to maintain the beat in coordination with the rhythm after the induction beat stopped. As expected, measures of performance accuracy decreased as the degree of syncopation increased, regardless of tempo. Highly syncopated rhythms often made participants shift the phase of their beat, because this made the rhythms less syncopated. Using a similar synchronization–continuation paradigm, Repp, Iversen, and Patel (2008) presented highly trained musicians with rhythms adapted from Povel and Essens (1985), some of which strongly induced the feeling of a beat with an 800-ms period. An 800-ms induction beat was initially superimposed in one of four possible phases relative to the rhythm. Surprisingly, SD asy (relative to the imposed beat) tended to be lowest when the imposed beat was in antiphase with the favored beat, perhaps because the tones marking the favored beat served as effective subdivisions. However, when participants were instructed to tap in antiphase with the imposed beat, variability tended to be lowest when the imposed beat was in phase with the favored beat.

Rankin, Large, and Fink (2009) asked participants with limited music training to tap with the beat of piano music by Bach and Chopin at two designated metrical levels. The music was played either metronomically or with expressive timing. A cross-correlation analysis of IOIs and ITIs suggested that the participants were actively predicting the expressive timing in at least one of the two pieces. The expressive timing (IOI) patterns were found to exhibit fractal properties that were also reflected in the ITIs.Footnote 17
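One common way of reading such a cross-correlation analysis is to compare the correlation between ITIs and the concurrent IOIs (lag 0) with the correlation between ITIs and the preceding IOIs (lag 1): a higher lag-0 value suggests prediction, whereas a higher lag-1 value suggests reactive tracking. The sketch below illustrates this reasoning under that assumption. The helper names and the interval data are hypothetical, and the actual analysis in the study cited may have differed in detail.

```python
from statistics import mean

def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5

def lagged_correlation(iois, itis, lag):
    """Correlate ITI[n] with IOI[n - lag], for lag >= 0."""
    if lag == 0:
        return pearson(iois, itis)
    return pearson(iois[:-lag], itis[lag:])

# Hypothetical expressive timing pattern (IOIs in ms).
iois = [500, 520, 560, 610, 540, 500, 510, 550, 600, 530]

# Idealized "predictor": each ITI equals the concurrent IOI.
predictor_itis = list(iois)
# Idealized "tracker": each ITI copies the previous IOI.
tracker_itis = [iois[0]] + iois[:-1]

for label, itis in (("predictor", predictor_itis), ("tracker", tracker_itis)):
    r0 = lagged_correlation(iois, itis, 0)
    r1 = lagged_correlation(iois, itis, 1)
    print(label, round(r0, 2), round(r1, 2))
```

Real tapping data fall between these two idealized cases, so the comparison of lag-0 and lag-1 values is a matter of degree.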

Finding the beat of music

The study by Repp, Iversen, and Patel (2008) included a beat-finding task in which participants listened to the rhythms and started tapping with their preferred beat as soon as they had decided on it. While the beat favored by the temporal structure was often chosen in strongly beat-inducing rhythms, there was also a bias to select a beat that started in phase with the first tone of the rhythm. Su and Pöppel (2012) investigated whether moving along with a rhythm facilitates the discovery of its beat. Musicians and nonmusicians were presented with nonisochronous rhythms, with the task being to discover their beat. Half of the participants were told to sit still while listening, whereas the other half was instructed to move along in any way that they liked as soon as the sequence started. When participants felt that they had found the beat, they were to start tapping it in synchrony with the continuing rhythm. While musicians performed equally well in both conditions, nonmusicians who moved were able to find a stable beat on 80 % of trials, whereas those who sat still found a beat on only 30 % of trials. Movement also reduced the time that it took the nonmusicians to find a beat.Footnote 18 The authors concluded that nonmusicians “seemed to be lacking an effective internal motor simulation that entrained to the pulse when it was not regularly present at the rhythmic surface” (p. 379).

Choosing a preferred beat (tactus) can be considered tantamount to judging the tempo of a musical rhythm. McKinney and Moelants (2006) asked participants with varying degrees of music training to tap with the beat of musical excerpts drawn from ten different genres. Martens (2011) conducted a similar study with musical excerpts taken from classical music recordings. In both studies, a resonance model (van Noorden & Moelants, 1999) did not account well for the distribution of chosen beat tempi. McKinney and Moelants found that tempo choices varied over a wide range, even for the same excerpt, and were genre-dependent: Classical music was more often associated with slow beats, whereas metal/punk music elicited fast beats. Acoustic analysis of the materials indicated that periodic dynamic accents were often responsible for a choice of beat outside the resonance curve, especially if it was a slow beat. Martens found little relation between spontaneous tapping tempo and the chosen tactus. He distinguished three groups of participants on the basis of their preferred beat level(s): “surface tappers” (often nonmusicians), who generally tap with the fastest pulse in the music and sometimes fail to synchronize; “variable tappers,” who choose beats of various rates; and “deep tappers,” who most often tap with a slow metrical level. Participants’ exposure to different musical styles may have been responsible for these different preferences. Moelants (2010) also used a tapping task to investigate the metrical ambiguity of musical excerpts (binary vs. ternary meter).

Madison and Paulin (2010) and London (2011) pursued the alternative idea that music has a subjective speed that is not necessarily identical with the tempo of the chosen tactus. Madison and Paulin asked listeners to rate the perceived speed of musical excerpts after “measuring” the excerpts’ tempo by having two individuals tap with the perceived beat.Footnote 19 The speed ratings indeed often deviated from the measured tempo. In one of several experiments, London asked participants to tap along with the perceived beat of artificially constructed simple rhythms, and found this not to have any effect on subjective speed ratings. In a commentary on London’s study, Repp (2011b) suggested that participants probably tapped with the beat that constituted the basis for their speed ratings; he argued against the idea that music has a speed independent of metrical structure, and in favor of a particular metrical level serving as the indicator of tempo, more in accord with McKinney and Moelants (2006) and Martens (2011). Because multiple metrical levels could serve as the tactus, musical tempo may often be ambiguous, and this ambiguity may be reflected in speed ratings.

The “groove” of music

Two studies not involving movement have been concerned with the properties of music that make listeners want to move in synchrony with it. Madison (2006) asked nonmusicians to rate a large number of musical excerpts from different genres on 14 scales, one of which was labeled “groove,” defined as “inducing movement.” A factor analysis yielded four factors, on one of which the “groove” and “driving” scales loaded highly. In a further attempt to determine what musical characteristics might predict nonmusicians’ subjective ratings of groove, Madison, Gouyon, Ullén, and Hörnström (2012) conducted detailed acoustic analyses of a large number of musical excerpts from five different genres. The predictors varied with genre, but beat salience and event density were the best (positive) predictors overall. Interestingly, deviations from temporal regularity (expressive timing) had no impact on groove ratings, nor did beat tempo (as determined by two of the authors tapping along). For jazz, no significant predictors of groove emerged.

Janata, Tomic, and Haberman (2012) conducted an extensive study of groove that included SMS. Groove ratings of musical excerpts were consistent across participants, varied across genres, were higher for fast than for slow music (tempo being here determined by an automatic algorithm; Tomic & Janata, 2008), and were highly correlated with enjoyment ratings. When participants tapped along, they reported feeling more “in the groove” and found tapping easier with high- than with low-groove excerpts, chosen according to the earlier ratings. When participants were instructed to sit still during the music, they exhibited more spontaneous body movement (especially of feet and head) when listening to high-groove music. Application of the resonator model of Tomic and Janata indicated that sensorimotor coupling strength was higher with high- than with mid- or low-groove music.

Finding a conductor’s beat

A series of studies involving SMS has investigated where in the trajectory of a conductor’s movement the beat is located. Luck and Toiviainen (2006) recorded the gestures of a student conducting an ensemble with a baton, and found that the ensemble tended to synchronize with points of maximal deceleration, and also with points of high vertical velocity, both of which precede the lowest point of the trajectory. However, the ensemble lagged behind those points, which means that it came closer to actually synchronizing with the lowest point.