- 1 Laboratory for Cognitive and Affective Neuroscience, Tilburg University, Tilburg, Netherlands

- 2 Brain and Emotion Laboratory Leuven, Department of Neurosciences, Katholieke Universiteit Leuven, Leuven, Belgium

- 3 Martinos Center for Biomedical Imaging, Massachusetts General Hospital and Harvard Medical School, Boston, MA, USA

Whole body expressions are among the main visual stimulus categories that are naturally associated with faces, and the neuroscientific investigation of how body expressions are processed has entered the research agenda over the last decade. Here we describe the stimulus set of whole body expressions termed the bodily expressive action stimulus test (BEAST), and we provide validation data for use of these materials by the community of emotion researchers. The database consists of 254 whole body expressions from 46 actors expressing 4 emotions (anger, fear, happiness, and sadness). In all pictures the face of the actor was blurred, and participants were asked to categorize the emotions expressed in the stimuli in a four-alternative forced-choice task. The results show that all emotions are well recognized, with sadness being the easiest, followed by fear, whereas happiness was the most difficult. The BEAST appears to be a valuable addition to currently available tools for assessing recognition of affective signals. It can be used in explicit recognition tasks as well as in matching tasks and in implicit tasks, either combined with facial expressions or affective prosody or presented with affective pictures as context, in healthy subjects as well as in clinical populations.

Introduction

Humans are considered to be among the most social species, and there are only limited moments during which we are not interacting with conspecifics, either face to face or more indirectly, for instance on the telephone. Because we routinely take part in a wide range of heterogeneous social interactions every day, interpreting the intentions and emotions of others has significant adaptive value. In the most influential emotion models, emotions comprise more than emotional feelings and additionally include action components (Frijda, 1986; Damasio, 2000). One of the leading emotion theories was proposed by Frijda (1986), and it emphasizes the function of emotions as action-related mechanisms. Frijda suggests that emotions follow an “appraisal” that is not necessarily consciously experienced (Frijda, 2007). Emotions are reactive to ongoing events (Frijda, 1986), depending on whether these events match or mismatch our aims and goals, and they can lead to both intentional and unintentional (impulsive) actions (Frijda, 2010a,b), thereby tuning our interaction with either the social or the non-social environment (Frijda, 1953, 1969, 1982). Although emotion perception research in affective neuroscience has so far focused primarily on how we perceive, process, and recognize facial expressions, the theories and concepts proposed by Frijda call for a more ambitious set of research tools and make it necessary to move beyond investigating the perception of facial expressions. Indeed, in our natural environment, faces are not perceived in isolation; they usually co-occur with a wide variety of visual, auditory, olfactory, somatosensory, and gustatory stimuli.

Whole body expressions are among the main visual stimulus categories that are naturally associated with faces, and the neuroscientific investigation of how body expressions are processed has entered the research agenda over the last decade (e.g., Hadjikhani and de Gelder, 2003; de Gelder et al., 2004), in line with the emotion theories proposed by Frijda (1986, 2007). The study of whole body expressions has significant added value over that of facial expressions (de Gelder, 2006; Peelen and Downing, 2007; de Gelder et al., 2010). We have developed a database of whole body expressions that has served as stimulus material in several experiments investigating saccades to emotional bodies (Bannerman et al., 2009, 2010), the neural basis of emotional whole body perception (Hadjikhani and de Gelder, 2003; de Gelder et al., 2004; van de Riet et al., 2009), contextual effects of whole body expressions (Van den Stock et al., 2007; Kret and de Gelder, 2010), and emotional body perception in patient populations including autism (Hadjikhani et al., 2009), prosopagnosia (Righart and de Gelder, 2007; Van den Stock et al., 2008b), neglect (Tamietto et al., 2007), and blindsight (Tamietto et al., 2009). Despite a number of previous reports on whole body expressions, dating back to the early work of Darwin (Darwin, 1872; James, 1932; Carmichael et al., 1937; Bull and Gidro-Frank, 1950; Gidro-Frank and Bull, 1950; McClenney and Neiss, 1989; Dittrich et al., 1996; Wallbott, 1998; Sprengelmeyer et al., 1999; Pollick et al., 2001; Heberlein et al., 2004), only one other validation study of a whole body expression stimulus set has been described in detail (Atkinson et al., 2004) and used in several other experiments (Atkinson et al., 2007; Peelen et al., 2007, 2009). That stimulus set consists of 10 identities (5 women) displaying whole body expressions (anger, disgust, fear, happiness, and sadness) in full-light and point-light displays.

In that set, the bodily postures were generated by actors who had been instructed to express each emotion with their whole body. In developing the present stimulus set, our goal was instead to emphasize the action dimension of whole body expressions rather than the pure expression of inner feelings, and to relate it to specific contexts in which the emotion-expressing actions are appropriate.

Here we describe the stimulus set of whole body expressions termed the bodily expressive action stimulus test (BEAST), and we provide validation data for use of these materials by the community of emotion researchers.

Materials and Methods

Stimulus Construction

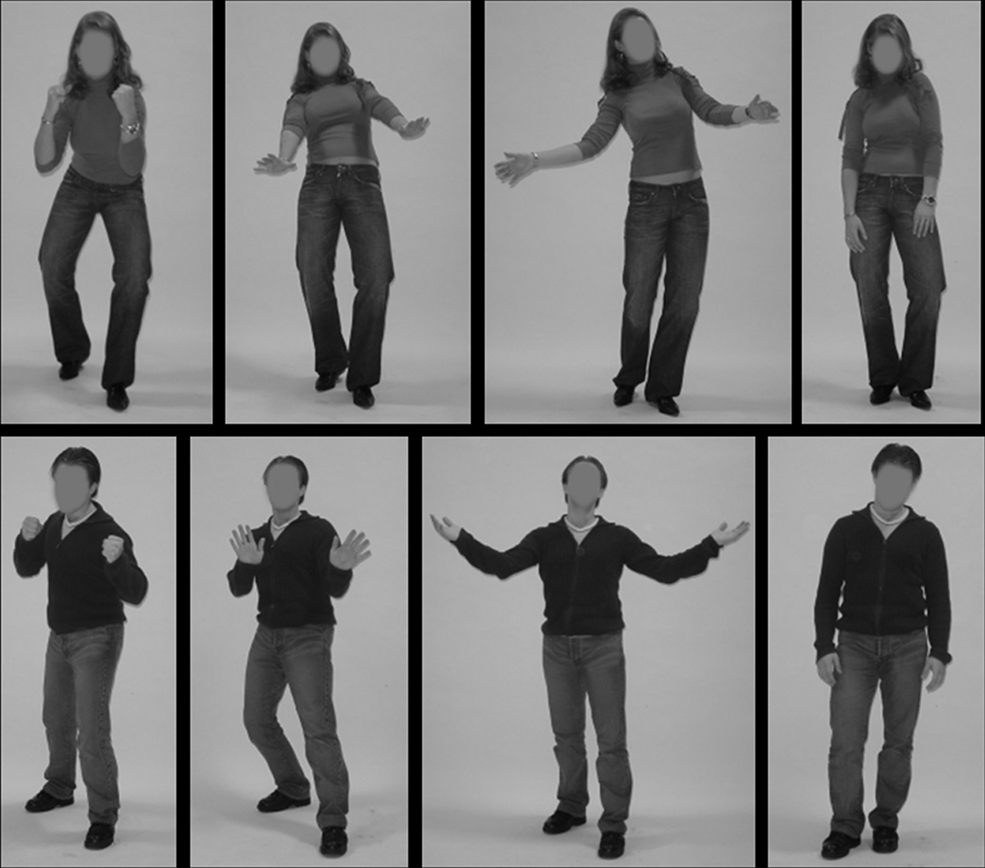

Forty-six non-professional actors (31 female) were recruited through announcements at Tilburg University. Actors participated in exchange for course credits or a small payment. Additionally, to enhance motivation, actors competed for providing the best-recognized expressions, and the winner received an iPod. Pictures were taken against a white background under controlled lighting conditions in a professional photo studio, with a digital camera mounted on a static tripod. Actors were individually instructed in a standardized procedure to display four expressions (anger, fear, happiness, and sadness) with the whole body, and the instructions provided a few specific and representative everyday scenarios typically associated with each emotion. For example, anger was associated with being in a quarrel and threatening to fight back; fear with being pursued by an attacker; happiness with encountering an old friend not seen in years and being very pleased to see him or her; and sadness with learning from a friend that a very dear friend has passed away. The order of the scenarios was randomized across actors. We did not include other emotions such as disgust at this stage, because a pilot study indicated that these were hard to recognize without information conveyed by the face. Based on the photographer’s impression of the expressiveness of the posture, in a few instances more than one picture of the same actor and expression was taken, in order to enrich the total set and allow for small variations in expressiveness that may prove useful for particular designs. The total number of pictures was 254 (64 anger, 67 fear, 61 happiness, and 62 sadness). After the photo shoot, the pictures were desaturated and the facial area was blurred. See Figure 1 for examples.
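The two editing steps named above, desaturation and blurring of the facial area, can be illustrated with a small sketch. The paper does not say which software or filter was used: the ITU-R BT.601 luma weights, the box blur, the rectangular face region, and the function names below are all assumptions. Images are represented as nested lists so that only the standard library is needed; a real pipeline would more likely use an image-editing tool, with the region drawn by hand around each face.

```python
"""Hypothetical sketch of desaturating a picture and blurring a face region.

Assumptions (not from the paper): BT.601 luma weights for desaturation and a
simple box blur over a hand-specified rectangle for the face.
"""

RGB = tuple[int, int, int]


def desaturate(image: list[list[RGB]]) -> list[list[int]]:
    """Convert RGB pixels to gray levels using ITU-R BT.601 luma weights."""
    return [
        [round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
        for row in image
    ]


def blur_region(gray: list[list[int]], top: int, left: int,
                bottom: int, right: int, radius: int = 1) -> list[list[int]]:
    """Box-blur the rectangle rows [top, bottom) x columns [left, right).

    Each pixel inside the rectangle becomes the mean of its
    (2 * radius + 1)^2 neighbourhood in the original image, clipped at the
    image border; pixels outside the rectangle are copied unchanged.
    """
    height, width = len(gray), len(gray[0])
    out = [row[:] for row in gray]  # copy so the input image is not modified
    for y in range(top, bottom):
        for x in range(left, right):
            ys = range(max(0, y - radius), min(height, y + radius + 1))
            xs = range(max(0, x - radius), min(width, x + radius + 1))
            values = [gray[yy][xx] for yy in ys for xx in xs]
            out[y][x] = round(sum(values) / len(values))
    return out
```

In practice the blur radius would have to be large relative to the face so that facial expression cues are no longer recoverable; the radius of 1 used as default here only keeps the example small.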

Figure 1. Examples of edited stimuli showing a female (top row) and male (bottom row) actor. The expressions display (from left to right): anger, fear, happiness, sadness.

Validation Procedure

Nineteen participants [11 female; mean (SD) age: 22.5 (2.4) years] took part in the validation experiment in exchange for course credits. All 254 stimuli were presented one by one in random order, each for 4000 ms, with a 4000-ms inter-stimulus interval during which a blank screen was shown. Participants were instructed to categorize the emotion expressed in each whole body stimulus on an answer sheet in a four-alternative forced-choice task (anger, fear, happiness, sadness). The validation procedure consisted of three blocks. The first and second blocks each contained 100 pictures and lasted about 30 min; the remaining 54 pictures were presented in the third block, which lasted about 20 min. Fifteen-minute breaks were inserted between blocks, giving a total duration of about 120 min for the whole validation experiment.
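The presentation schedule can be sketched as one random order of all 254 stimuli cut into consecutive blocks of 100, 100, and 54. This is a minimal sketch under stated assumptions: the paper does not say whether a new random order was drawn for each participant, and the stimulus identifiers and the function name `make_blocks` are hypothetical.

```python
import random


def make_blocks(stimuli, block_sizes=(100, 100, 54), seed=None):
    """Shuffle all stimuli once and split the random order into blocks.

    Assumption: a single random order per participant, cut into the
    block sizes reported in the text (100 + 100 + 54 = 254).
    """
    if sum(block_sizes) != len(stimuli):
        raise ValueError("block sizes must add up to the number of stimuli")
    order = list(stimuli)
    random.Random(seed).shuffle(order)  # seeded for a reproducible order
    blocks, start = [], 0
    for size in block_sizes:
        blocks.append(order[start:start + size])
        start += size
    return blocks
```

Passing a different `seed` for each participant would give each one a different order; every trial within a block would then consist of the 4000-ms stimulus followed by the 4000-ms blank screen.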

Results

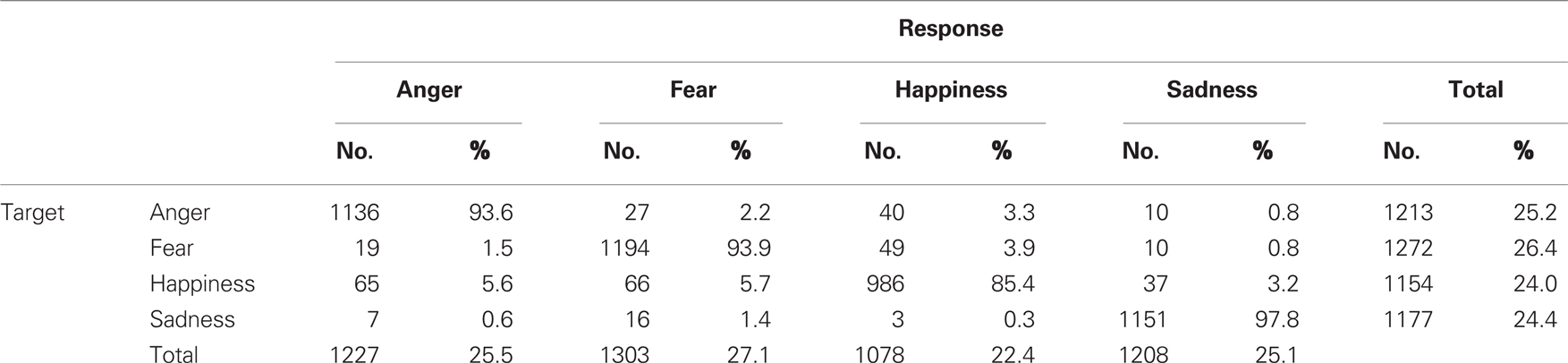

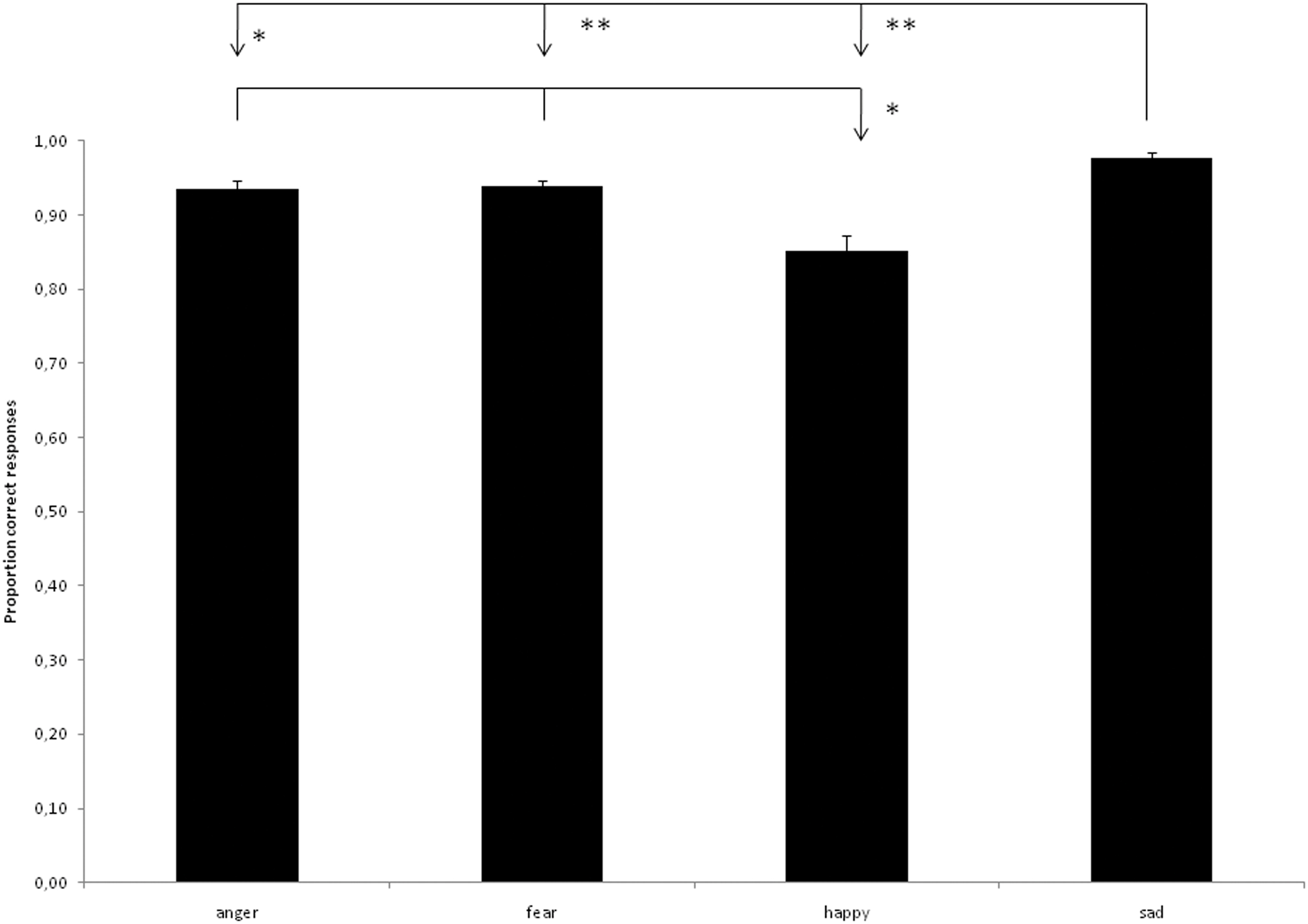

The stimuli and validation data can be downloaded at www.beatricedegelder.com/beast.html. The total number of data points was 4826 (19 participants each rating 254 stimuli), of which 10 were missing. No participant had more than 2 missing responses, and no stimulus had more than 3. We calculated the frequency of every response alternative for every stimulus category and constructed a confusion matrix from these frequencies (see Table 1). Overall inter-rater agreement, calculated with Fleiss’ generalized kappa, was 0.839. Mean categorization accuracy across all stimuli was 92.5%. Bonferroni-corrected paired-sample t-tests showed that sad expressions were recognized better than angry [t(18) = 2.960, p < 0.0083], fearful [t(18) = 4.332, p < 0.0004], and happy expressions [t(18) = 6.054, p < 0.0001], and that angry [t(18) = 3.670, p < 0.0017] and fearful [t(18) = 3.601, p < 0.0020] expressions were recognized better than happy expressions (see Figure 2).
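The confusion matrix, overall accuracy, and Fleiss’ kappa described above can be sketched as follows. This is a minimal sketch, assuming each trial is stored as an (intended emotion, response) pair with `None` for a missing answer; the data layout and function names are hypothetical. For kappa, each stimulus is an item and each row of the table counts how many participants chose each label. How the paper handled the 10 missing values is not stated; here we assume a simple extension in which each item's agreement uses its own number of ratings, which reduces to the standard Fleiss formula when every item has the same number of ratings.

```python
from collections import Counter

EMOTIONS = ("anger", "fear", "happiness", "sadness")


def confusion_matrix(trials):
    """Count responses for each intended emotion (rows) by label (columns).

    `trials` is an iterable of (target, response) pairs; a response of
    None marks a missing value and is left out of the counts.
    """
    counts = {target: Counter() for target in EMOTIONS}
    for target, response in trials:
        if response is not None:
            counts[target][response] += 1
    return {t: [counts[t][r] for r in EMOTIONS] for t in EMOTIONS}


def accuracy(trials):
    """Proportion of non-missing responses that match the intended emotion."""
    answered = [(t, r) for t, r in trials if r is not None]
    return sum(t == r for t, r in answered) / len(answered)


def fleiss_kappa(table):
    """Fleiss' kappa from an items x categories table of response counts."""
    n_categories = len(table[0])
    grand_total = sum(sum(row) for row in table)
    # Observed agreement per item: proportion of agreeing rater pairs.
    p_items = []
    for row in table:
        n = sum(row)
        p_items.append((sum(c * c for c in row) - n) / (n * (n - 1)))
    p_bar = sum(p_items) / len(p_items)
    # Chance agreement from the overall proportion of each category.
    p_cat = [sum(row[j] for row in table) / grand_total
             for j in range(n_categories)]
    p_e = sum(p * p for p in p_cat)
    return (p_bar - p_e) / (1 - p_e)
```

For the BEAST data, the kappa table would have 254 rows and 4 columns, with rows summing to 19, or fewer for stimuli with missing responses.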

Table 1. Number and percentage of responses according to emotion expressed in the stimulus category.

Figure 2. Proportion of correct categorizations according to bodily expression. *p < 0.01, **p < 0.001.

Bonferroni-corrected paired-sample t-tests were performed on the number of incorrect responses to investigate whether the intended target emotion was systematically confused with one specific non-target response alternative. For example, for all (intended target) angry expressions we tested whether the number of (incorrect) “fear” responses differed from the number of (incorrect) “happy” or “sad” responses. This revealed that target angry expressions were categorized significantly more often as “happy” than as “sad” [t(18) = 3.174, p < 0.0053], and that target fearful expressions were categorized significantly more often as “angry” [t(18) = 3.426, p < 0.003] and as “happy” [t(18) = 4.025, p < 0.001] than as “sad.”
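The paired comparisons reported in the Results can be sketched as below. With four emotion categories there are six pairwise comparisons, so a Bonferroni-corrected threshold is 0.05/6 ≈ 0.0083, which is presumably the bound reported for the sad versus angry accuracy comparison. The data layout (a dict mapping a participant id to that participant's (target, response) pairs) and the helper names are hypothetical. The code returns only the t statistic and its degrees of freedom (n − 1, i.e. 18 for 19 participants); a p value additionally requires the t distribution, which a statistics library such as SciPy (`scipy.stats.ttest_rel`) provides.

```python
import math
from statistics import mean, stdev


def paired_t(x, y):
    """Paired-sample t statistic and degrees of freedom for matched samples."""
    if len(x) != len(y):
        raise ValueError("paired samples must have the same length")
    diffs = [a - b for a, b in zip(x, y)]
    # t = mean difference / standard error of the differences
    # (undefined when all differences are identical, since their SD is 0).
    t = mean(diffs) / (stdev(diffs) / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


def bonferroni_alpha(alpha, n_comparisons):
    """Per-comparison significance threshold under a Bonferroni correction."""
    return alpha / n_comparisons


def error_counts(trials, target, label):
    """For each participant, count how often `target` stimuli got `label`.

    `trials` maps participant id -> list of (target, response) pairs;
    participants are returned in sorted-id order so that two lists built
    this way stay paired by participant.
    """
    return [
        sum(1 for t, r in trials[pid] if t == target and r == label)
        for pid in sorted(trials)
    ]


# Hypothetical usage: were angry bodies labeled "happiness" more often than "sadness"?
# t, df = paired_t(error_counts(trials, "anger", "happiness"),
#                  error_counts(trials, "anger", "sadness"))
```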

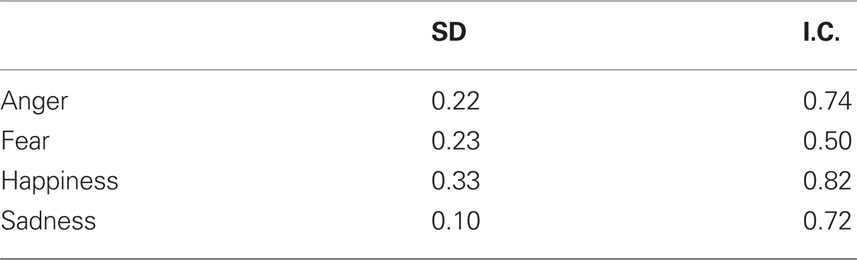

To investigate whether the variability of judgments differed between emotions, we transformed the stimulus categorizations into accuracies and calculated the SD for every emotion. The results are displayed in Table 2 and show that variability is highest for happiness, followed by fear, anger, and sadness. Bonferroni-corrected paired-sample t-tests comparing the SDs showed that variability for happiness was significantly higher than for all other emotions [t(18) > 3.358, p < 0.002] and that variability for sadness was significantly lower than for all other emotions [t(18) > 3.580, p < 0.002].
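The text does not spell out at which level the SD was computed. Because the comparisons have 18 degrees of freedom, this sketch assumes one SD per participant and emotion, taken over that emotion's stimuli, using the sample SD; the function name and data layout are hypothetical. Note that for 0/1 accuracy scores the sample SD depends only on the proportion correct p and the number of trials n, since the variance equals n·p(1 − p)/(n − 1).

```python
from statistics import stdev


def accuracy_sd(responses, emotion):
    """Sample SD of trial-wise accuracy for one participant and one emotion.

    `responses` is that participant's list of (target, response) pairs.
    Each trial with the given target scores 1 if correct and 0 otherwise;
    missing responses (None) are skipped.
    """
    scores = [
        1 if response == target else 0
        for target, response in responses
        if target == emotion and response is not None
    ]
    return stdev(scores)
```

Computing this for each of the 19 participants yields 19 values per emotion, which can then be compared across emotions with paired-sample t-tests.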

To investigate the extent to which the different stimuli expressing the same emotion inter-correlated, we computed Cronbach’s alpha for every emotion as a measure of internal consistency. The results are displayed in Table 2 and show acceptable values for all emotions except fear.
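Cronbach’s alpha for one emotion can be computed from a participants × stimuli matrix of 0/1 accuracy scores with the standard formula α = k/(k − 1) · (1 − Σ item variances / variance of the total scores), where k is the number of stimuli. This is a minimal sketch, assuming complete data (how the missing responses entered this analysis is not stated) and a hypothetical function name.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a participants x items matrix of scores.

    Each item is one stimulus of a given emotion, and each score is 1 if
    the participant categorized it correctly, else 0. Undefined when the
    participants' total scores do not vary (division by zero).
    """
    k = len(scores[0])
    item_vars = [variance([row[j] for row in scores]) for j in range(k)]
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)
```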

To investigate inter-individual differences in emotion recognition, we correlated recognition performance for the four emotion categories across participants using bivariate correlation tests. None of the pairwise correlations was significant (all absolute correlations were below 0.40, and all p values were above 0.088).
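The across-participant analysis amounts to six Pearson correlations, one for each pair of emotion categories, computed on per-participant accuracies. In this sketch the dictionary layout and function names are hypothetical; `r_to_t` gives the usual t statistic with n − 2 degrees of freedom for testing a correlation against zero.

```python
import math
from itertools import combinations


def pearson_r(x, y):
    """Pearson correlation between two equally long lists of numbers."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def r_to_t(r, n):
    """t statistic (df = n - 2) for testing whether a Pearson r differs from 0."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r * r)


def pairwise_correlations(acc_by_emotion):
    """Correlate per-participant accuracies for every pair of emotions.

    `acc_by_emotion` maps an emotion to a list of accuracies, one per
    participant, with participants in the same order in every list.
    """
    return {
        (a, b): pearson_r(acc_by_emotion[a], acc_by_emotion[b])
        for a, b in combinations(sorted(acc_by_emotion), 2)
    }
```

As a check on the reported figures: with 19 participants, r = 0.40 gives t(17) ≈ 1.80, which corresponds to a two-tailed p of roughly 0.09, in line with all absolute correlations below 0.40 and all p values above 0.088.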

Discussion

We constructed a database of 254 face-blurred whole body expressions, consisting of 46 actors expressing 4 basic emotions that can be recognized without information conveyed by the facial expression (anger, fear, happiness, and sadness), and asked participants to categorize the emotions expressed in the stimuli in a four-alternative forced-choice task. The results show that all emotions are well recognized, with sadness being the easiest, followed by fear, whereas happiness was the most difficult. The same pattern is reported for (non-exaggerated) static body expressions by Atkinson et al. (2004), who used a similar validation instruction, although their procedure for constructing the stimuli differed considerably from the one followed in the present study. The convergence between the results of both studies may point to a priori differences in the recognizability of bodily emotions, although the findings are also influenced by task variables. In a recent study we avoided the use of verbal labels by presenting stimuli from the same database in a simultaneous match-to-sample paradigm and found that fearful expressions were the most difficult to match (Van den Stock et al., 2007). This discrepancy suggests that different processes underlie verbal labeling and matching of whole body expressions. A possible explanation is that, in addition to the emotional processing involved in both tasks, verbal labeling relies more on language processes and idiosyncratic semantic knowledge about features of whole body expressions (for instance, that anger is associated with clenched fists), whereas matching relies more on the correspondence of features between stimuli. The finding that fearful body expressions are hardest to match then suggests that this expression is more ambiguous (Van den Stock et al., 2007). This is also reflected in the internal consistency measure, which was not acceptable for fear only (Table 2).

Analysis of the incorrect categorizations reveals that anger is more often confused with happiness than with sadness, and that fear is more often confused with anger and happiness than with sadness. It is remarkable that bodily expressions with opposite valence are confused more often than expressions with similar valence. The relatively poor recognition of happy body expressions also contrasts with findings from facial expression studies, where recognition of happiness in faces is usually superior to recognition of negative emotions (e.g., Milders et al., 2003; Goeleven et al., 2008). It was also our experience during the construction of the body expression stimuli that happiness was the most difficult expression to instruct and for the actors to perform, whereas facial expressions of happiness are very easy to instruct and perform.

An important issue is that all the emotions were expressed voluntarily. Studies of facial expressions have shown differences between spontaneous and voluntary emotional expressions (Zuckerman et al., 1976). Furthermore, the actors were instructed and motivated to express highly recognizable emotions. This does not necessarily correspond to how we express emotions in daily life and might have induced exaggerated emotional expressions. However, by presenting the actors with real-life scenarios, we attempted to increase the ecological validity of the expressions.

Finally, we found no significant correlations across subjects between the categorization accuracies for the different emotions, indicating that there are no systematic inter-individual differences in a general ability to recognize whole body expressions. In other words, how well an individual subject categorizes, for example, angry whole body expressions relative to the other subjects is not systematically associated with how well he or she categorizes fearful, happy, or sad whole body expressions. This suggests that subjects differ in which emotion category they recognize best.

Explicit recognition with these stimuli has been tested in normal populations, in clinical populations with autism or schizophrenia, and in individuals with prosopagnosia. The stimulus set has also proven useful for investigating highly anxious subjects (Kret and de Gelder, under review) and violent offenders (Kret and de Gelder, under review). Besides behavioral measures, EEG, MEG, and fMRI have been used to investigate the neurofunctional basis of bodily expression perception in normal and clinical populations. Useful information was also collected from EMG measurements and from eye movement recordings.

Another area we have begun to explore concerns cultural determinants of perception. So far, one study has compared Dutch and Chinese observers (Sinke et al., under review).

The role of attention and of awareness has also been investigated. Implicit recognition of these bodily expressions of emotion was tested in a few patients with V1 lesions leading to clinical blindness and in a few patients with hemispatial neglect, which is associated with inattention to contralesional stimuli. Implicit recognition was also investigated in normal subjects using a masking paradigm (Stienen and de Gelder, 2011).

Furthermore, the stimuli have been used in experiments using composite stimuli or combinations of a bodily expression with another source of affective information, either visual or auditory. Visual contexts that have been explored include facial expressions and environmental or social scenes (Van den Stock et al., under review). As auditory contexts we have used music fragments, spoken sentence fragments, and environmental sounds in normal (Van den Stock et al., 2008a, 2009) and clinical (Van den Stock et al., 2011) populations. Finally, a computational model for body expression recognition was developed (Schindler et al., 2008).

Conclusion

The BEAST is a valuable addition to currently available tools for assessing recognition of affective information. As the studies reviewed above illustrate, it can be used in explicit as well as implicit recognition tasks, on its own or combined with facial expressions or affective prosody, in healthy subjects as well as in neurological and psychiatric populations.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

Jan Van den Stock is a post-doctoral researcher for the Fonds voor Wetenschappelijk Onderzoek - Vlaanderen.

References

Atkinson, A. P., Dittrich, W. H., Gemmell, A. J., and Young, A. W. (2004). Emotion perception from dynamic and static body expressions in point-light and full-light displays. Perception 33, 717–746.

Atkinson, A. P., Heberlein, A. S., and Adolphs, R. (2007). Spared ability to recognise fear from static and moving whole-body cues following bilateral amygdala damage. Neuropsychologia 45, 2772–2782.

Bannerman, R. L., Milders, M., de Gelder, B., and Sahraie, A. (2009). Orienting to threat: faster localization of fearful facial expressions and body postures revealed by saccadic eye movements. Proc. R. Soc. Lond. B Biol. Sci. 276, 1635–1641.

Bannerman, R. L., Milders, M., and Sahraie, A. (2010). Attentional cueing: fearful body postures capture attention with saccades. J. Vis. 10, 23.

Bull, N., and Gidro-Frank, L. (1950). Emotions induced and studied in hypnotic subjects. Part II: the findings. J. Nerv. Ment. Dis. 112, 97–120.

Carmichael, L., Roberts, S. O., and Wessell, N. Y. (1937). A study of the judgment of manual expression as presented in still and motion pictures. J. Soc. Psychol. 8, 115–142.

Damasio, A. (2000). The Feeling of What Happens: Body, Emotion, and Consciousness. London: Random House.

de Gelder, B. (2006). Towards the neurobiology of emotional body language. Nat. Rev. Neurosci. 7, 242–249.

de Gelder, B., Snyder, J., Greve, D., Gerard, G., and Hadjikhani, N. (2004). Fear fosters flight: a mechanism for fear contagion when perceiving emotion expressed by a whole body. Proc. Natl. Acad. Sci. U.S.A. 101, 16701–16706.

de Gelder, B., Van den Stock, J., Meeren, H. K., Sinke, C. B., Kret, M. E., and Tamietto, M. (2010). Standing up for the body. Recent progress in uncovering the networks involved in the perception of bodies and bodily expressions. Neurosci. Biobehav. Rev. 34, 513–527.

Dittrich, W. H., Troscianko, T., Lea, S. E., and Morgan, D. (1996). Perception of emotion from dynamic point-light displays represented in dance. Perception 25, 727–738.

Frijda, N. H. (1953). The understanding of facial expression of emotion. Acta Psychol. (Amst.) 9, 294–362.

Frijda, N. H. (1969). “Recognition of emotion,” in Advances in Experimental Social Psychology, Vol. 4, ed. L. Berkowitz (New York: Academic Press), 167–223.

Frijda, N. H. (1982). “The meanings of facial expression,” in Nonverbal Communication Today: Current Research, ed. M. Ritchie-Key (The Hague: Mouton), 103–120.

Gidro-Frank, L., and Bull, N. (1950). Emotions induced and studied in hypnotic subjects. J. Nerv. Ment. Dis. 111, 91–100.

Goeleven, E., De Raedt, R., Leyman, L., and Verschuere, B. (2008). The Karolinska directed emotional faces: a validation study. Cogn. Emot. 22, 1094–1118.

Hadjikhani, N., and de Gelder, B. (2003). Seeing fearful body expressions activates the fusiform cortex and amygdala. Curr. Biol. 13, 2201–2205.

Hadjikhani, N., Joseph, R. M., Manoach, D. S., Naik, P., Snyder, J., Dominick, K., Hoge, R., Van den Stock, J., Flusberg, H. T., and de Gelder, B. (2009). Body expressions of emotion do not trigger fear contagion in autism spectrum disorder. Soc. Cogn. Affect. Neurosci. 4, 70–78.

Heberlein, A. S., Adolphs, R., Tranel, D., and Damasio, H. (2004). Cortical regions for judgments of emotions and personality traits from point-light walkers. J. Cogn. Neurosci. 16, 1143–1158.

Kret, M. E., and de Gelder, B. (2010). Social context influences recognition of bodily expressions. Exp. Brain Res. 203, 169–180.

McClenney, L., and Neiss, R. (1989). Posthypnotic suggestion: a method for the study of nonverbal communication. J. Nonverbal Behav. 13, 37–45.

Milders, M., Crawford, J. R., Lamb, A., and Simpson, S. A. (2003). Differential deficits in expression recognition in gene-carriers and patients with Huntington’s disease. Neuropsychologia 41, 1484–1492.

Peelen, M. V., Atkinson, A. P., Andersson, F., and Vuilleumier, P. (2007). Emotional modulation of body-selective visual areas. Soc. Cogn. Affect. Neurosci. 2, 274–283.

Peelen, M. V., and Downing, P. E. (2007). The neural basis of visual body perception. Nat. Rev. Neurosci. 8, 636–648.

Peelen, M. V., Lucas, N., Mayer, E., and Vuilleumier, P. (2009). Emotional attention in acquired prosopagnosia. Soc. Cogn. Affect. Neurosci. 4, 268–277.

Pollick, F. E., Paterson, H. M., Bruderlin, A., and Sanford, A. J. (2001). Perceiving affect from arm movement. Cognition 82, B51–B61.

Righart, R., and de Gelder, B. (2007). Impaired face and body perception in developmental prosopagnosia. Proc. Natl. Acad. Sci. U.S.A. 104, 17234–17238.

Schindler, K., Van Gool, L., and de Gelder, B. (2008). Recognizing emotions expressed by body pose: a biologically inspired neural model. Neural Netw. 21, 1238–1246.

Sprengelmeyer, R., Young, A. W., Schroeder, U., Grossenbacher, P. G., Federlein, J., Buttner, T., and Przuntek, H. (1999). Knowing no fear. Proc. Biol. Sci. 266, 2451–2456.

Stienen, B. M., and de Gelder, B. (2011). Fear detection and visual awareness in perceiving bodily expressions. Emotion. doi: 10.1037/a0024032. [Epub ahead of print].

Tamietto, M., Castelli, L., Vighetti, S., Perozzo, P., Geminiani, G., Weiskrantz, L., and de Gelder, B. (2009). Unseen facial and bodily expressions trigger fast emotional reactions. Proc. Natl. Acad. Sci. U.S.A. 106, 17661–17666.

Tamietto, M., Geminiani, G., Genero, R., and de Gelder, B. (2007). Seeing fearful body language overcomes attentional deficits in patients with neglect. J. Cogn. Neurosci. 19, 445–454.

van de Riet, W. A. C., Grezes, J., and de Gelder, B. (2009). Specific and common brain regions involved in the perception of faces and bodies and the representation of their emotional expressions. Soc. Neurosci. 4, 101–120.

Van den Stock, J., de Jong, J., Hodiamont, P. P. G., and de Gelder, B. (2011). Perceiving emotions from bodily expressions and multisensory integration of emotion cues in schizophrenia. Soc. Neurosci. doi: 10.1080/17470919.2011.568790. [Epub ahead of print].

Van den Stock, J., Grezes, J., and de Gelder, B. (2008a). Human and animal sounds influence recognition of body language. Brain Res. 1242, 185–190.

Van den Stock, J., van de Riet, W. A., Righart, R., and de Gelder, B. (2008b). Neural correlates of perceiving emotional faces and bodies in developmental prosopagnosia: an event-related fMRI-study. PLoS ONE 3, e3195. doi: 10.1371/journal.pone.0003195.

Van den Stock, J., Peretz, I., Grezes, J., and de Gelder, B. (2009). Instrumental music influences recognition of emotional body language. Brain Topogr. 21, 216–220.

Van den Stock, J., Righart, R., and de Gelder, B. (2007). Body expressions influence recognition of emotions in the face and voice. Emotion 7, 487–494.

Keywords: body expression, emotion, action, test validation

Citation: de Gelder B and Van den Stock J (2011) The bodily expressive action stimulus test (BEAST). Construction and validation of a stimulus basis for measuring perception of whole body expression of emotions. Front. Psychology 2:181. doi: 10.3389/fpsyg.2011.00181

Received: 28 January 2011; Accepted: 19 July 2011;

Published online: 09 August 2011.

Edited by:

Marco Tamietto, Tilburg University, Netherlands

Reviewed by:

Alan J. Pegna, Geneva University Hospitals, Switzerland

Nico Frijda, Amsterdam University, Netherlands

Cosimo Urgesi, University of Udine, Italy

Copyright: © 2011 de Gelder and Van den Stock. This is an open-access article subject to a non-exclusive license between the authors and Frontiers Media SA, which permits use, distribution and reproduction in other forums, provided the original authors and source are credited and other Frontiers conditions are complied with.

*Correspondence: Beatrice de Gelder, Martinos Center for Biomedical Imaging, Massachusetts General Hospital, Room 417, Building 36, First Street, Charlestown, Boston, MA 02129, USA. e-mail: degelder@nmr.mgh.harvard.edu