- 1Centre National de la Recherche Scientifique, UMR 5549, Faculté de Médecine Purpan, Toulouse, France

- 2Université de Toulouse, Centre de Recherche Cerveau et Cognition, Université Paul Sabatier, Toulouse, France

A growing number of studies endeavor to reveal periodicities in sensory and cognitive functions, by comparing the distribution of ongoing (pre-stimulus) oscillatory phases between two (or more) trial groups reflecting distinct experimental outcomes. A systematic relation between the phase of spontaneous electrophysiological signals, before a stimulus is even presented, and the eventual result of sensory or cognitive processing for that stimulus, would be indicative of an intrinsic periodicity in the underlying neural process. Prior studies of phase-dependent perception have used a variety of analytical methods to measure and evaluate phase differences, and there is currently no established standard practice in this field. The present report intends to remediate this need, by systematically comparing the statistical power of various measures of “phase opposition” between two trial groups, in a number of real and simulated experimental situations. Seven measures were evaluated: one parametric test (circular Watson-Williams test), and three distinct measures of phase opposition (phase bifurcation index, phase opposition sum, and phase opposition product) combined with two procedures for non-parametric statistical testing (permutation, or a combination of z-score and permutation). While these are obviously not the only existing or conceivable measures, they have all been used in recent studies. All tested methods performed adequately on a previously published dataset (Busch et al., 2009). On a variety of artificially constructed datasets, no single measure was found to surpass all others, but instead the suitability of each measure was contingent on several experimental factors: the time, frequency, and depth of oscillatory phase modulation; the absolute and relative amplitudes of post-stimulus event-related potentials for the two trial groups; the absolute and relative trial numbers for the two groups; and the number of permutations used for non-parametric testing. The concurrent use of two phase opposition measures, the parametric Watson-Williams test and a non-parametric test based on summing inter-trial coherence values for the two trial groups, appears to provide the most satisfactory outcome in all situations tested. Matlab code is provided to automatically compute these phase opposition measures.

Introduction

Science has long sought to determine whether mental processes unfold continuously—like the flow of a river—or discretely over time—like the successive frames of a movie sequence (Stroud, 1956; VanRullen and Koch, 2003; VanRullen, 2016a,b). The existence of oscillatory brain rhythms at different spatial and temporal scales, and their demonstrated involvement in numerous sensory and cognitive functions (Buzsaki, 2006), could indeed imply that certain mental processes operate rhythmically, rather than strictly continuously. One convincing way to demonstrate such a rhythmic operation is by showing that the result of a given neural process varies, depending on the exact rhythmic phase at which this process is engaged1. Although this procedure has a history dating at least half a century (Callaway and Yeager, 1960; Dustman and Beck, 1965), in recent years there has been a surge of reports of such phase-dependent perception (VanRullen et al., 2011). The phase of brain oscillations at various frequencies from 2 to 20 Hz has been related to trial-by-trial fluctuations in threshold-level perception in the visual (Nunn and Osselton, 1974; Busch et al., 2009; Mathewson et al., 2009; Busch and VanRullen, 2010; Dugue et al., 2011; Fiebelkorn et al., 2013; Hanslmayr et al., 2013), auditory (Rice and Hagstrom, 1989; Ng et al., 2012; Strauss et al., 2015), and somatosensory domains (Ai and Ro, 2014); in supra-threshold perception as measured by reaction times (Callaway and Yeager, 1960; Dustman and Beck, 1965; Drewes and VanRullen, 2011), in oculomotor functions such as saccadic execution (Drewes and VanRullen, 2011; Hamm et al., 2012) and saccadic remapping (McLelland et al., 2016), in attention and visual search (Buschman and Miller, 2009; Busch and VanRullen, 2010; Dugue et al., 2015; Landau et al., 2015; Voloh et al., 2015), in temporal parsing of visual (Varela et al., 1981; Chakravarthi and VanRullen, 2012; Cravo et al., 2015; Inyutina et al., 2015) or somatosensory information (Baumgarten et al., 2015), in decision-making (Wyart et al., 2012), in the top-down influence of predictions and expectations (Arnal et al., 2015; Samaha et al., 2015; Ten Oever et al., 2015; Han and VanRullen, 2016; Sherman et al., 2016), in cross-modal integration (van Erp et al., 2014) and in short-term memory (Siegel et al., 2009; Bonnefond and Jensen, 2012; Myers et al., 2014; Leszczynski et al., 2015). Not surprisingly therefore, large-scale physiological markers of perceptual processing such as ERPs (Dustman and Beck, 1965; Jansen and Brandt, 1991; Haig and Gordon, 1998; Barry et al., 2004; Gruber et al., 2014), TMS-evoked motor potentials (Keil et al., 2014b), stimulus-evoked BOLD responses (Scheeringa et al., 2011), and fMRI network connectivity between areas (Hanslmayr et al., 2013) have also been shown to depend on oscillatory phase at (or just before) the time of stimulus onset.

To quantify the relation between oscillatory phase and a particular cognitive (or physiological) variable, a typical experimental procedure consists in repeating several instances of the same trial, yet leading to different behavioral (or physiological) responses. For example, using a threshold-stimulation procedure, successive presentations of the exact same luminous flash may give rise to a conscious detection of this stimulus in only half of the trials (Busch et al., 2009). If the cognitive function under study (here, visual detection) involves a rhythmic process, then the two trial groups (detected vs. undetected) might be found to differ in the distribution of oscillatory phases at the critical frequency, around the time of stimulus onset. Statistically evaluating this difference in oscillatory phase angle can be (and has been) done in various ways. For example, parametric tests of differences in circular distributions are available (e.g., circular Watson-Williams test), that are equivalent to a t-test for linear data. Such tests are relatively easy to perform (Baumgarten et al., 2015; Samaha et al., 2015), but require the data to verify specific constraints (e.g., normality). It is also possible to construct ad hoc measures of phase opposition between the two trial groups, and evaluate their significance using non-parametric statistics. For this, it is helpful to recognize that, if phase influences the trial outcome, then the inter-trial phase coherence (ITC) of each trial group should exceed the overall ITC (Figure 1). Thus, phase opposition measures generally involve a combination (sum or product) of ITC for each trial group, appropriately corrected (by subtraction or division) to remove the overall ITC. For example, the “phase bifurcation index” (PBI) introduced by Busch et al. (2009), and employed several times since (Hamm et al., 2012; Ng et al., 2012; Auksztulewicz and Blankenburg, 2013; Hanslmayr et al., 2013; Manasseh et al., 2013; Rana et al., 2013; Diederich et al., 2014; Park et al., 2014; Li et al., 2015; Shou and Ding, 2015; Strauss et al., 2015; van Diepen et al., 2015; Batterink et al., 2016), was based on this principle. Other analogous procedures have been described, however (Drewes and VanRullen, 2011; Dugue et al., 2011, 2015; VanRullen et al., 2011; Han and VanRullen, 2016), and there exists no systematic comparison between these various measures and, consequently, no accepted practice in this field.

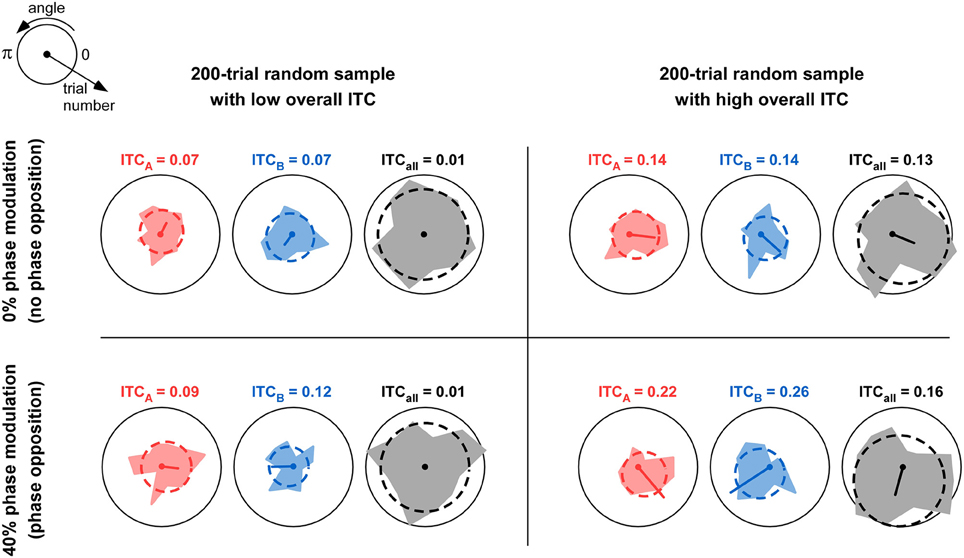

Figure 1. Comparison of overall ITC with ITC of individual trial groups. The table depicts four situations in which 200 trials were simulated. For each trial, a phase value was randomly assigned from a uniform distribution between 0 and 2π. Thus, the overall ITC is expected to be near-zero; but due to the relatively small sample size (n = 200), the observed ITCall can in fact vary between near-zero (left column) and somewhat higher values above 0.1 (right column). In each simulation, the 200 trials were divided into two groups A and B with equal probability (50%). In the top row, this probability was independent of trial phase; this would correspond to the null hypothesis of “no phase opposition.” In the bottom row, the probability of assigning each trial to group A was a cosine function of the trial phase, with 40% modulation depth (parameter mod = 0.4 in Figure 3 and Equation 9). Thus, trial groups A and B were in “phase opposition.” For each simulation, the phase distributions for trial group A, trial group B, and all trials, are shown respectively in red, blue, and gray, together with their best-fitting von Mises distributions (dashed lines). The mean resulting vector is also shown (arbitrary unit, identical in all panels); its length is the ITC value for the corresponding trial group (also indicated above each plot). For a fixed level of overall ITC (i.e., within each column), introducing phase opposition resulted in increased ITC-values for the two trial groups ITCA and ITCB (compare bottom values to top values). However, the absolute level of ITCA and ITCB is not by itself a reliable indicator of phase opposition: when (by chance) overall ITC is high (right column), the null hypothesis can result in ITCA and ITCB-values (Top right panel) that can exceed those obtained with actual phase opposition (Bottom-left panel). This explains why the overall amount of ITCall needs to be taken into account when calculating phase opposition (e.g., as a baseline against which to evaluate the ITC of individual groups ITCA and ITCB).

The present study aims to compare seven variants of phase opposition measures that have been used in previous published studies. By applying all measures to the same experimental datasets, and by systematically varying key experimental parameters in artificially constructed datasets, we can gain insight about the relative merit of each phase opposition measure, and the conditions under which it should (or should not) be applied. We hasten to note that a number of other measures might already exist and that many more could also be conceived for similar purposes—in other words, this comparison is not intended to be exhaustive, but it should at least contribute to organizing a significant portion of the existing literature.

Methods

Experimental Assumptions

In all following analyses, we shall work on (real or artificial) experimental datasets for which two possible outcomes A and B have been recorded in otherwise identical trials, resulting in two trial groups A and B. (It is worth noting, however, that both parametric and non-parametric measures of phase opposition can readily be extended to situations where the number of possible outcomes is larger than 2.)2 Each trial is also associated with a time-varying electrophysiological signal, which may represent EEG or MEG from a specific sensor, an intracranial electrode, etc.

We further assume that stimulus onset (t = 0) for every trial occurs in a temporally unpredictable manner (e.g., because of randomized inter-trial intervals). This assumption ensures that we consider truly ongoing brain activity: even if certain brain states fluctuate rhythmically, each phase of this fluctuation would be equally likely to be sampled at stimulus onset. In other words, for every frequency, the pre-stimulus phases across trials can be assumed to be sampled from a uniform distribution3. Note that this is not necessarily the case concerning the post-stimulus period, as stimulus-evoked activity (event-related potential or “ERP”) may affect the observed distribution of phases. Further, the temporal smearing of oscillatory signals caused by window-based time-frequency analysis methods (e.g., wavelet transform) can potentially result in this influence of stimulus-evoked activity being already visible in the pre-stimulus period, a phenomenon that will be explored in various upcoming simulations.

A major assumption for our analyses is that the phase of oscillatory activity at one particular time and frequency point has a significant influence on experimental outcome (i.e., trial assignment to group A or B). Consequently, the purpose of our phase opposition analyses is to reveal the oscillatory frequency involved. That is, we place ourselves in the situation of an experimenter trying to determine whether task outcome is rhythmically modulated by any oscillatory signal, and if yes, at what frequency. Phase opposition must therefore be evaluated for all time and frequency points (“time-frequency” analysis). Although we do not explore or simulate “control” datasets in which no phase modulation is present, the likelihood of detecting phase opposition at an incorrect time and/or frequency can be taken as a measure of false alarm rate or baseline (chance-level) performance for our procedures. (Note finally that, in specific cases, experimenters may have strong a priori hypothesis about the exact rhythmic frequency involved; in such cases, which we do not address here, other analyses procedures may be warranted).

When multiple datasets are recorded for a given experimental situation (e.g., multiple subjects in a given experiment), we assume that the rhythmic modulation that we aim to reveal occurs around the same time and frequency for all datasets. However, the exact phase value favoring outcome A vs. B may or may not be the same across datasets/subjects. (Different reasons may justify this assumption: various conduction delays resulting in shifts of optimal phases; differences in cortical folding resulting in signal polarity reversals, etc. For all these reasons, while the absolute phase of local cortical oscillations at the level of a given neuronal ensemble is obviously important for its operation, we generally consider the absolute phase recorded at the scalp to be irrelevant, and focus instead on the relative phase between the two trial outcomes). Consequently, our analyses shall evaluate phase opposition between trial groups (“single-trial” analysis), independently for each dataset (whose results can later be combined), rather than measuring phase opposition across datasets/subjects (between the mean phase angles of each trial group, i.e., a “group-level” analysis).

Phase Opposition Measures

Having fixed the experimental conditions common to all analyses, we now introduce the different tests of phase opposition that will be compared. All of these tests are based on a comparison of ITC measured over all trials (serving as a baseline) vs. ITC measured separately for each trial group A and B (see Figure 1). The null hypothesis tested is, therefore, that the ITC of each trial group exceeds the overall ITC. That is, if ωi is a complex number representing the oscillatory signal (at a given time and frequency) for trial i (with |ωi| and angle(ωi), respectively, representing oscillatory amplitude and phase), then ITC4 (Tallon-Baudry et al., 1996; Lachaux et al., 1999) is defined as:

with nA and nB corresponding to the numbers of trials in groups A and B, respectively, and n = nA + nB.

The various tests simply differ in the ways they combine the above three ITC-values, as well as their derivation of statistical significance.

Circular Watson-Williams test

The circular Watson-Williams test is a two-sample test for equal means, equivalent to a two-sample t-test for linear data. It assumes that each set of phases to be compared follows a von Mises circular distribution, and that the two distributions share a common concentration parameter κ. For our purposes, we adapted the implementation provided in the Matlab “circStat” (circular statistics) toolbox (Berens, 2009), in order to process multi-dimensional datasets (time-frequency matrices). This function implements the procedure described by Zar (1999), where the test statistic F defined as:

follows a F distribution with (1, n − 2) degrees of freedom. Since this parametric test statistic can be directly related to the corresponding p-value (by means of the F cumulative distribution function), no further statistical analysis was required for this test.

Phase Bifurcation Index (PBI)

We measured the phase bifurcation index as described in Busch et al. (2009):

The PBI is bounded between −1 and 1. It takes positive values when the ITC of each trial group exceeds the overall ITC, our main situation of interest. However, PBI can also become negative if the ITC of one trial group happens to fall below the overall ITC (a situation that may occur due to measurement noise, but also due to differences in ERPs shape or amplitude across the trial groups). Finally, PBI can also turn positive in rare cases where (e.g., due to measurement noise) both trial groups ITC-values are smaller than the overall ITC. It is easy to understand, therefore, that PBI can prove a rather volatile measure. Nonetheless, this volatility should also be present in surrogate distributions of PBI calculated under the null hypothesis (See Statistical Analysis), and thus it need not thwart the statistical power of the PBI measure.

Phase Opposition Product (POP)

A simple modification of the phase bifurcation index can presumably help remove some of its volatility, by subtracting the baseline quantity ITCall outside, rather than inside the product operation. Indeed, in this case a small change in either ITCA or ITCB is less likely to produce a sign reversal of the phase opposition measure. This was the logic employed in a recent study (Han and VanRullen, 2016). That is:

It is worth noting also that the baseline term— in Equation (6) will be identical in any permutation of the trial assignments (since the overall ITC does not change). Thus, this correction term, which is helpful to display and interpret raw phase opposition measures, can be simply discarded in the statistical analysis when surrogate distributions of POP are calculated under the null hypothesis (See Section Statistical Analysis). This would not be the case for the PBI measure, because the correction in that case is not a mere subtraction.

Phase Opposition Sum (POS)

Following a number of recent studies (Drewes and VanRullen, 2011; Dugue et al., 2011, 2015; Bompas et al., 2015; Inyutina et al., 2015; McLelland et al., 2016; Sherman et al., 2016), we also computed the simple sum of ITCA and ITCB (again corrected by subtracting the baseline ITCall). That is:

Just like the previous two measures, POS will be positive when the ITC of each trial group exceeds the overall ITC. However, it can be argued that using a sum instead of a product renders this measure more stable than the other two. As previously, the subtractive correction term, important for display and interpretation, can be omitted during the permutation procedure (See Section Statistical Analysis).

Statistical Analysis

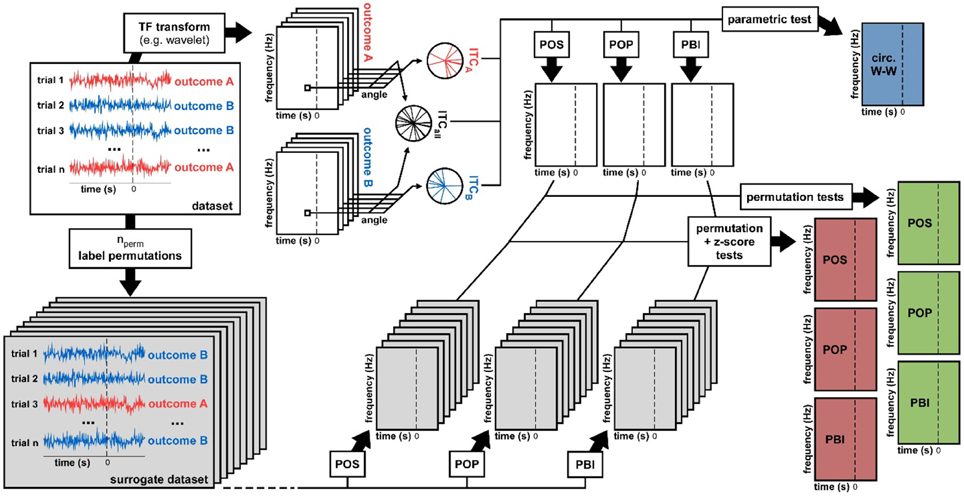

From a given dataset, we computed seven time-frequency maps of p-values, corresponding to seven distinct ways of evaluating phase opposition, as illustrated in Figure 2. The electrophysiological signal recorded on every trial was subjected to a time-frequency transform (“timefreq” function from the Matlab EEGlab toolbox, using the “wavelet” option, and frequencies increasing logarithmically from 2 to 50 Hz while the number of cycles in each wavelet increases linearly from 2 to 15 cycles). At each time and frequency point, the values ITCA, ITCB, and ITCall were computed, and from these values, four distinct time-frequency maps were obtained. One map directly contained the p-values resulting from the circular Watson-Williams test (Equation 4); this corresponds to the procedure employed for example by Baumgarten et al. (2015). The remaining three maps stored the phase opposition measures PBI, POP, and POS (Equations 5–7). In order to assess the statistical significance of these measures, two non-parametric permutation procedures were employed.

Figure 2. Data analysis methods. From a dataset we derived seven statistical measures of phase opposition. A time-frequency transform was used to extract oscillatory phase for each trial, time point, and frequency. Inter-trial coherence values were then computed for each trial group (outcome A vs. B) as well as for the entire dataset (both outcomes pooled). Using these three ITC-values, the circular Watson-Williams parametric test directly yielded a time-frequency map of p-values (top-right, blue time-frequency map). The same three ITC-values were used to calculate time-frequency maps of the phase opposition measures POS, POP, and PBI. To determine the statistical significance of these measures, two distinct non-parametric procedures were applied. The first consisted in randomly permuting the trial labels (assignment of outcome A vs. B), and for each permutation recalculating POS, POP, and PBI. The ranking (percentile) of the original dataset values against these null-hypothesis distributions could be used as a p-value (right hand-side, green time-frequency maps). Alternately, the null-hypothesis distributions could be summarized by their mean and standard deviation (across permutations), the values of the original dataset could then be expressed as a standardized z-score against the null distribution, and a p-value assigned using the normal cumulative distribution function (right hand-side, red time-frequency maps).

Permutation Test

For a given dataset, the trial assignment to group A or B was randomly permuted a number of times (nperm; here nperm = 1000, except when stated otherwise). The phase opposition measures PBI, POP, and POS were recomputed after every permutation. For each of these three measures, at each time-frequency point, the final p-value assigned was the proportion of permutations that yielded a higher measure than in the original dataset, or 1/(2nperm), whichever was highest. (As in other statistical inference tests, the p-value therefore reflects the likelihood that an experiment—in this case, a permutation—would provide equal or higher phase opposition if the null hypothesis—no phase opposition—was true). Note that low numbers of permutations limit the range of p-values that can be obtained, which can prove problematic, for example when a correction for multiple comparisons across time and frequency points is needed (e.g., with nperm = 1000, no result can survive a Bonferroni correction when the number of time-frequency points is larger than 100). On the other hand, permutations of time-frequency data are computationally intensive, and it is thus not always possible to reach adequate values of nperm.

Permutation + z-score Test

To circumvent this problem, we have suggested a streamlined procedure (Drewes and VanRullen, 2011; Dugue et al., 2011, 2015; Inyutina et al., 2015; McLelland et al., 2016; Sherman et al., 2016), in which a relatively low number of permutations is used to characterize the mean and standard deviation of the null-hypothesis distribution. The true value from the original dataset is then compared against the null-hypothesis distribution by means of a z-score. In other words, for each of the three phase opposition measures (PBI, POP, POS), for each time-frequency point, the difference between the original dataset and the mean of all permutations was expressed in units of standard deviation (across all permutations). The resulting time-frequency map of p-values was obtained by means of the normal cumulative distribution function.

Test Performance on Real Experimental Dataset

The above statistical analysis procedure describes how the seven p-value maps of phase opposition are derived from a given dataset (Figure 2). It remains to be seen how each of these seven measures will perform in situations where the “ground truth” is known (or assumed to be known). Is a significant phase opposition detected at the proper time and frequency point? Are there other, spuriously significant phase opposition values detected at erroneous time-frequency points? We first addressed these questions using a previously published experimental dataset (Busch et al., 2009) for which the existence of phase opposition around 7 Hz and 120 ms pre-stimulus could be assumed as “ground truth” (insofar as previous publication can be considered a mark of reliability).

p-value Combination

As the experiment had been performed for multiple observers (N = 12), each of whom contributed one dataset for the statistical analysis described in Section Statistical Analysis and in Figure 2, it was necessary to combine the results (time-frequency maps of p-values) across observers. For this purpose, we used the method described by (Stouffer et al., 1949), whereby each p-value is turned into an equivalent z-score (using the inverse normal cumulative distribution function Φ−1), the z-scores are combined across observers and finally turned back into probabilities (using the normal cumulative distribution function Φ), that is:

AUC

Given the conclusions of the previously published experiment (Busch et al., 2009), we considered that a phase opposition method was efficient if it detected significant pre-stimulus phase opposition within the expected time-frequency region (here, defined as a rectangular region of extent 140 ms and 4 Hz, centered on the expected phase opposition peak, at 120 ms pre-stimulus and 7 Hz) but not outside of this expected region. Since the answer can strongly depend on the choice of a significance threshold (too conservative and no phase opposition can be detected at all; too liberal and phase opposition materializes at all times and frequencies), we used an ROC procedure (Receiver-Operator Characteristic) to measure efficiency in an unbiased manner. Each possible significance threshold was used alternately, and each time the proportions of “hits” (significant p-values inside the target time-frequency region) and “false alarms” (significant p-values before stimulus onset but outside the target region) were recorded. Plotting hit rate against false alarm rate produces the ROC curve, and the area under this curve (AUC for “Area Under the ROC Curve”) can serve as a measure of sensitivity, with chance level at 0.5 and maximal performance at 1. This AUC was calculated for each of the seven phase opposition measures.

Artificial Datasets Creation

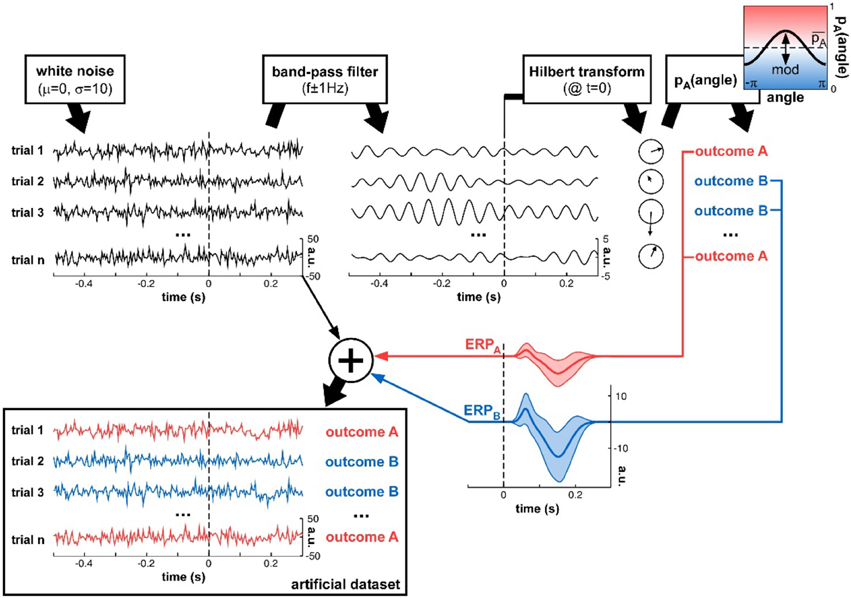

To determine and compare the sensitivity of each phase opposition measure in a variety of well-controlled situations, we also created artificial datasets, for which the parameters of oscillatory modulation could be precisely ascertained (Figure 3). Just like with real experimental data, an artificial dataset was made of n trials, each of which had a (simulated) electrophysiological signal associated with an experimental outcome A or B. The electrophysiological data of each trial was randomly initialized with white noise5. This signal was band-pass filtered around a specific frequency f (different for each simulation), and a Hilbert transform converted the resulting oscillatory waveform into complex values. The phase angle was extracted at a critical time point t (different for each simulation), and the trial outcome was decided on the basis of this phase value. The probability of outcome A was a cosine function of this phase angle6:

where is the overall probability of outcome A, and mod is the depth of the phase modulation (both variable parameters). The probability of outcome B was, therefore, pB = 1 − pA.

Figure 3. Artificial dataset creation. n trials were simulated, each with a (simulated) electrophysiological signal and an associated experimental outcome A or B. The signals were initialized with white noise, then band-pass filtered at a critical frequency f. The Hilbert transform extracted the oscillatory phase value at a critical time t. This phase was used to determine the experimental outcome for that trial: outcome A was randomly assigned with a mean probability that was modulated as a cosine function of the phase angle. An ERP (with slightly randomized latency, duration and amplitudes for both P1 and N1 components, as illustrated by the shaded areas representing standard deviation across trials), whose overall amplitude depended on the trial outcome A or B, was added to the original white noise signal, to produce the final artificial dataset.

Once the trial outcome was decided, a noisy ERP-like signal was added to each trial, with (potentially) different amplitudes for trial groups A and B. The shape of the ERP was bimodal, with a first positive peak (a “P1” component) and a second negative one (a “N1” component). The standard P1 was a Gaussian waveform, slightly different on each trial, with peak latency μ drawn from a normal distribution (mean = 65 ms, standard deviation = 10 ms) and temporal extent σ drawn from a normal distribution (mean = 8.33 ms, standard deviation = 1.66 ms; the waveform was zero-padded outside a window duration of 6σ); the maximal amplitude of the P1 was drawn from a normal distribution (mean = 1, standard deviation = 0.5, arbitrary units). Similarly, the standard N1 was a negative Gaussian waveform, slightly different on each trial, with peak latency μ drawn from a normal distribution (mean = 155 ms, standard deviation = 25 ms) and temporal extent σ drawn from a normal distribution (mean = 21.66 ms, standard deviation = 4.17 ms; the waveform was zero-padded outside a window duration of 6σ); the maximal amplitude of the N1 was drawn from a normal distribution (mean = 2, standard deviation = 1, arbitrary units). The randomly drawn P1 and N1 waveforms for each trial were summed, and then scaled by a multiplicative factor ERPA or ERPB dependent on the trial outcome A or B. Finally, this ERP was added to the white noise signal initially generated for that trial, to produce the final simulated electrophysiological signal.

In different simulations, the parameters n, f, t, mod, , ERPA, and ERPB were varied (sometimes jointly) to assess the robustness of the various phase opposition measures.

Test Performance on Artificial Datasets: Statistical Power

The statistical power of a test is the proportion of experiments that would return a positive test result, given that an effect was indeed present. For a given set of parameters, we created 100 distinct artificial datasets, and then for each phase opposition measure, we evaluated the proportion of datasets yielding a successful statistical result. Based on the time t and frequency f of phase modulation implanted into the artificial datasets (see Figure 3), we defined a target time-frequency region [centered on point (t, f), with a tolerance of ±400 ms and ±2 logarithmic frequency steps]. An experiment was classified as a “hit” if a statistically significant pre-stimulus phase opposition was detected inside the target region; a “false alarm” occurred if significant pre-stimulus phase opposition was found outside of this region. The hit rate was thus defined as the proportion of datasets with a hit (out of 100 datasets), and the false alarm rate as the proportion of datasets with a false alarm. As previously (Section AUC), the hit and false alarm rates were contingent on the choice of statistical threshold. Thus, we varied the threshold systematically, and constructed the entire ROC curve; the statistical power was defined as the area under this ROC curve. Statistical power varies between 0 and 1. A statistical power value of 0.8, for example, would indicate that 80% of experiments could detect a significant phase opposition around the correct time and frequency (without also detecting it at incorrect times and/or frequencies); this value (or a higher one) is typically considered acceptable. Note also that chance level (the statistical power of a test with zero sensitivity) is not 0.5, but rather closer to 0.2 for most of our simulations. Indeed, since an ineffective test is equally likely to produce a significant result at any time-frequency point (inside or outside the target time-frequency region), the chance level can be simply evaluated as the ratio between the numbers of pre-stimulus time-frequency points lying inside vs. outside the target time-frequency region.

Results

Real Experimental Dataset (Busch et al., 2009)

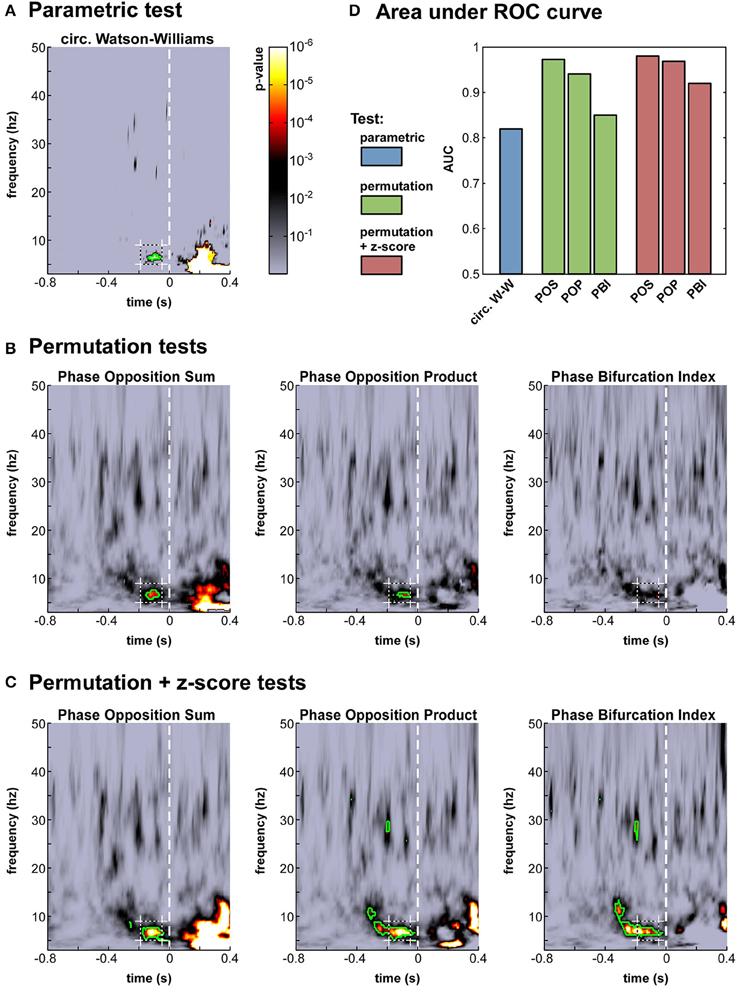

First, it appears important to verify that all the statistical measures compared here share, at least, the ability to identify phase opposition in a real experimental situation, where the perceptual outcome is known to be modulated by ongoing oscillatory phase. To this end, we re-analyzed a previously published dataset (Busch et al., 2009). In that experiment, a brief peripheral target at detection threshold was presented on every trial, after a randomized inter-trial interval, such that observers reported only about half of the targets (hits) but missed the other half (misses). The phase bifurcation index (PBI) applied to this dataset (electrode Fz) had revealed significant phase opposition between these two trial groups at 7 Hz, peaking 120 ms before stimulus onset. We then explored the performance of other phase opposition measures on this same dataset (Figure 4).

Figure 4. Phase opposition in a real-life experimental dataset (Busch et al., 2009). (A-C) Time-frequency maps of p-values on electrode Fz [colorbar in panel (A), logarithmic scale] for the parametric test (A), the three permutation tests (B) and the hybrid tests combining permutation and z-score (C). The target time-frequency region (defined based on the results from the earlier study) is indicated by a dashed box. Time-frequency points with p-values below the threshold of FDR correction for multiple comparisons are outlined in green (D). Results of an AUC procedure contrasting the proportions of significant time-frequency points inside vs. outside the target time-frequency region. The bar colors correspond to those defined in Figure 2.

The seven time-frequency maps of p-values shown in Figure 4A–C all demonstrated significant (p < 0.0002) phase opposition around the same time (−120 ms) and frequency (7 Hz) as in the original study (Busch et al., 2009). For six of these seven measures, the resulting p-values were robust to an FDR correction for multiple comparisons using alpha = 0.05 (Benjamini and Hochberg, 1995). It is worth noting that the last remaining measure was the PBI coupled with a standard permutation test (Figure 4B), precisely the one employed in the original paper. This indicates that our comparison did not unwittingly favor the initially applied measure. (In the original study, the PBI-values were first combined across observers before applying the permutation test, resulting in slightly different p-values, which were in fact robust to FDR-correction; here, p-values were first obtained for each observer and subsequently combined across observers, for consistency with the procedure applied to the circular Watson-Williams test).

The different tests should not simply be compared in terms of their best p-values around the initially expected time-frequency point; indeed, a test's efficiency also depends on its propensity to produce false positives, i.e., apparently significant phase opposition at a priori unexpected time-frequency points. To provide a comprehensive assessment of each test's efficiency, we thus performed an ROC analysis, as follows. A target time-frequency region was defined based on the findings of the original study, centered on 7 Hz and 120 ms pre-stimulus, with a tolerance of ±2 Hz and ±70 ms. For a given statistical threshold, the “hit rate” was defined as the proportion of time-frequency points inside the target time-frequency region with significant p-values (i.e., p-values below the statistical threshold), and the “false alarm rate” as the proportion of pre-stimulus time-frequency points outside this region with significant p-values. After measuring hit rates and false alarm rates for all possible statistical thresholds, an ROC curve was obtained, expressing hit rate as a function of false alarm rate. The area under this ROC curve (AUC) can be used as a threshold-independent measure of a tests' sensitivity.

We computed this AUC for all seven tests (Figure 4D). As could be expected from the p-value maps, all tests performed reliably, with AUC-values above 0.8. The most sensitive test appeared to be POS, followed by POP, and then PBI. This ranking was the same, whether a standard permutation test or a hybrid permutation + z-score test was applied, though the latter performed consistently (albeit marginally) better than the former. Finally, the circular Watson-Williams test yielded the lowest AUC value (0.82)7. Nonetheless, as will be seen later (Section Artificial datasets), the circular Watson-Williams test is often among the most sensitive measures of phase opposition.

Artificial Datasets

The confirmation of the adequacy of all seven tests on a given “reference” dataset was an essential step, but leaves open a number of questions. Would all tests prove equally robust to variations in specific properties of the dataset, such as the frequency of the rhythmic modulation, its magnitude, the number of trials collected, the presence and amplitude of post-stimulus ERPs, and so on? Answering these questions with real experimental datasets could easily require several hundred experiments, and a lifetime of data collection. To address these issues, we created instead a large number of artificial datasets, for which various parameters could be precisely controlled (Figure 3).

Each dataset included n trials with simulated electrophysiological signals (initialized as white noise) and an associated trial outcome A or B. The trial outcome was decided with a probability that was a sinusoidal function of the signal phase angle at a specific time and frequency. Finally, noisy ERP waveforms, of possibly different amplitudes for the two trial types A or B, were added to the original electrophysiological signal. The number of trials (n), the average likelihood of outcome A (), the depth (mod), frequency (f), and time (t) of phase modulation, as well as the average ERP amplitudes (ERPA and ERPB) were parameters that could be varied in distinct simulations.

For each fixed set of parameters, we generated 100 random artificial datasets and applied the seven phase opposition measures. The performance of each measure was quantified by their statistical power: the proportion of datasets (out of 100) yielding a successful statistical outcome, with significant phase opposition detected inside but not outside a target time-frequency region. This statistical power was computed using a threshold-independent AUC procedure8. Statistical power values above 0.8 are conventionally considered appropriate. Therefore, a single value describing statistical power over the 100 simulated datasets can serve as a main criterion to assess the reliability of each measure (value > 0.8). Nonetheless, for the additional purpose of comparing measures against each other, it is useful to keep in mind that differences of statistical power equal to or larger than 0.06 (e.g., 0.87 vs. 0.93) would normally be statistically significant at the p < 0.05 level using a χ2-test.

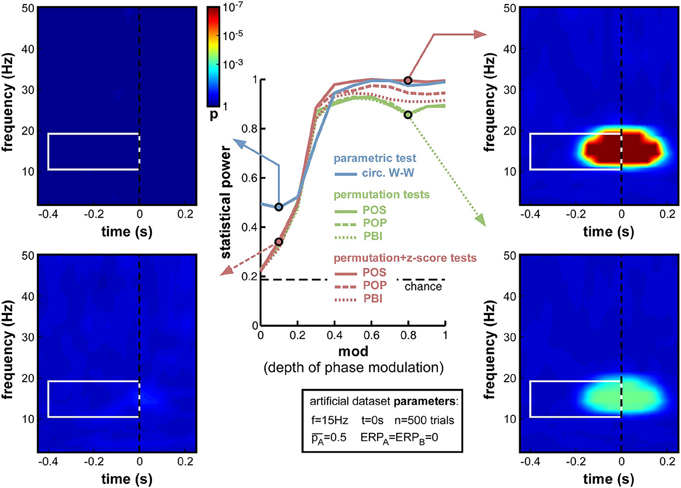

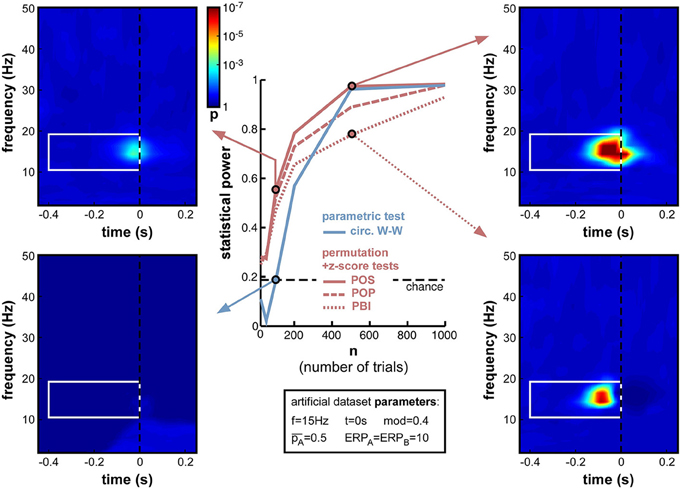

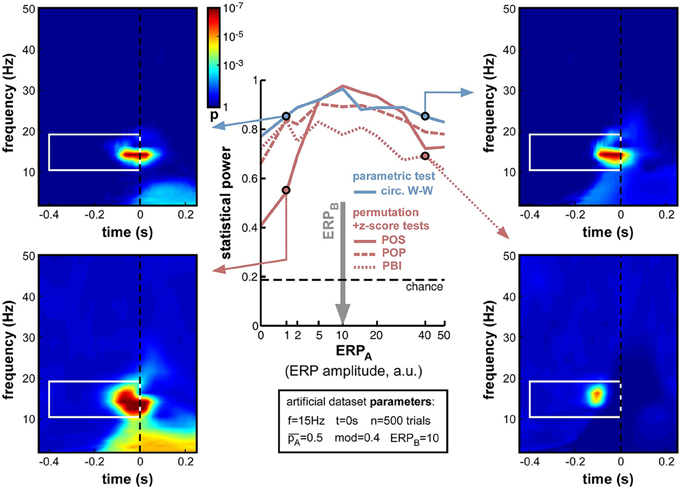

Depth of Oscillatory Phase Modulation

The first set of simulations investigated the effect of the depth of oscillatory phase modulation (Figure 5). Indeed, this manipulation is directly linked to the detectability of phase opposition: with a phase modulation of 1 the outcome likelihood changes from 100% A to 100% B between one oscillatory phase and its opposite, and thus phase opposition should reach its maximal value; whereas with 0 phase modulation the likelihood of outcome A would be constant, and there would simply be no phase opposition to detect. For this first simulation, we arbitrarily fixed the other parameters as follows: number of trials n = 500, frequency of phasic modulation f = 15 Hz, time of phasic modulation t = 0 (i.e., the 15 Hz phase value measured at stimulus onset influenced the trial outcome), equal likelihood of outcomes A and B ( = 0.5), and no ERP produced after stimulus onset (while this last assumption is unrealistic, it will allow us to independently explore the influence of ERPs in later simulations).

Figure 5. Effect of varying depth of oscillatory phase modulation. The central plot illustrates the changes in statistical power caused by the parameter variations. The line colors correspond to those used in Figures 2, 4. The solid blue line plots the statistical power of the circ. W-W test. Solid, dashed and dotted lines correspond respectively to POS, POP, and PBI, in green for the standard permutation tests, and red for the hybrid permutation + z-score tests. The fixed parameters for these simulations are listed in the box below the main plot. In this and the following figures, four representative time-frequency maps of p-values (obtained by log-averaging the p-value maps from the 100 simulated datasets) are displayed for illustrative purposes. The arrow pointing to each map identifies the measure and the parameter value being illustrated. All maps share the same color scale (see colorbar next to the top-left map). The target time-frequency region is highlighted using a white box. Significant pre-stimulus time-frequency points inside vs. outside this box count as hits and false alarms (respectively) for the calculation of statistical power.

As expected, upon decreasing the depth of modulation from 1 to 0, the statistical power of all seven measures went down (Figure 5), from high values (0.8 and above) to chance level (~0.2, defined by the ratio of time-frequency points inside vs. outside the target region9). For all measures, the tipping point was around modulation depths of 0.3–0.4: above this point, all measures performed consistently well; below this point, the decrease in statistical power accelerated. This prompted us, in all subsequent simulations, to use a value of 0.4 for the “depth of modulation” (mod) parameter, i.e., just above the “tipping point”: this still produced a satisfactory level of performance, yet also made this performance very susceptible to any deterioration induced by manipulations of the other parameters.

All seven measures behaved comparably in this first set of simulations, with minor differences in performance. At phase modulation values of 0.4 and above, both circ. W-W and POS (using the hybrid permutation + z-score test) virtually reached maximal statistical power (1), while POP and PBI (with the same hybrid test) reached, respectively, 0.97 and 0.94. The last three measures involving standard permutation tests (POS, POP, PBI) were systematically below the others, but still reached 0.92 performance. One reason why these tests might perform sub-optimally here could be an insufficient number of permutations, leading to insufficient precision of p-values. Indeed, only 1000 permutations had been used in these simulations. As can be seen in the time-frequency map on the bottom right of Figure 5, this implies that p-values from the permutation tests could not improve beyond 0.0005, even when the phasic modulation was maximal. Other tests, however, were not limited in this way, and could attain much more significant p-values (p < 10−7, see top-right time-frequency map in Figure 5). Before continuing with other parameter manipulations, therefore, we decided to assess the importance of the number of permutations used in the comparison between tests.

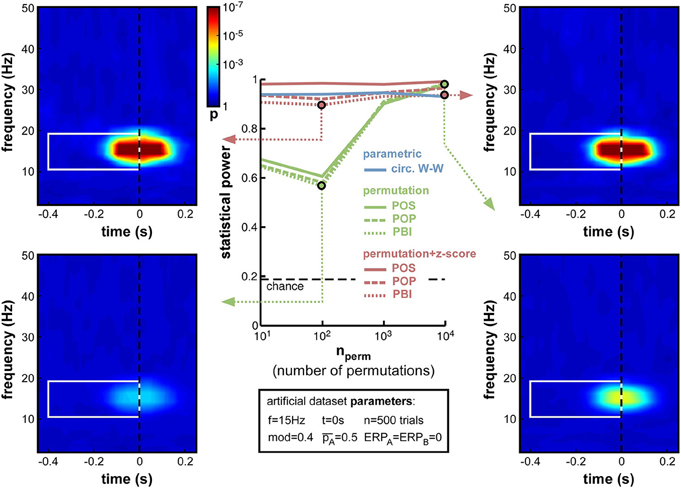

Number of Permutations

While other parameters remained fixed as before, and the modulation depth parameter was set at mod = 0.4, we varied the number of permutations from 10 to 10,000. Logically, this manipulation should only alter the non-parametric tests that rely on such permutations to estimate their null distribution. As expected, therefore, the parametric circ. W–W test was wholly unaffected by the variations (Figure 6). Although both types of non-parametric tests (permutation, or permutation + z-score) were expected to depend on permutation number, the hybrid permutation + z-score test appeared remarkably robust at low permutation numbers, with statistical power remaining above 0.9 even down to only 10 permutations (Figure 6). This suggests that the mean and standard deviation computed across only 10 surrogate (permuted) datasets are sufficiently precise estimates of the shape of the null distribution. In contrast, for the conventional permutation tests, statistical power was poor (around 0.6) with only 10 or 100 permutations, and kept improving from 1000 to 10,000 permutations (Figure 6). With that number, the permutation tests finally attained comparable statistical power to the hybrid tests.

Figure 6. Effect of varying permutation number. Artificial dataset creation parameters are identical to those used in Figure 5, with the depth of oscillatory modulation set at mod = 0.4. All four time-frequency maps illustrate the results of the PBI measure. Using a hybrid permutation + z-score test, PBI produces comparable results with 100 or 10,000 permutations (top-left and top-right). Using a standard permutation test, however, with 100 permutations the target p-values are hardly distinguishable from the background noise (bottom-left), whereas statistical power can be restored with 10,000 permutations (bottom-right).

The conclusions that can be drawn from this set of simulations are, therefore, that conventional permutation tests can be equivalent to the hybrid permutation + z-score tests, but large numbers of permutations (on the order of 10,000) are required to achieve this equivalence. Conversely, the hybrid tests can provide reliable estimates of the statistical power of a phase opposition measure, even when lower numbers of permutations are used. Consequently, both to simplify computations and to improve readability, in the following simulations we used only 1000 permutations, and shall not display anymore the results of the 3 conventional permutation tests10.

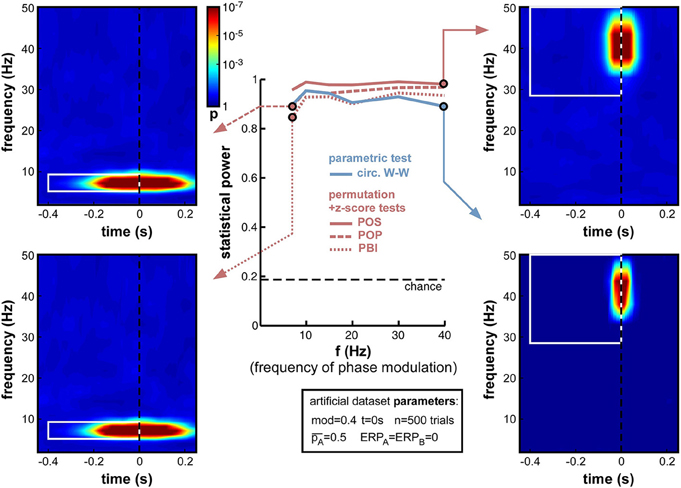

Frequency of Oscillatory Phase Modulation

A natural concern when measuring phase opposition is that the likelihood of detecting phasic modulations may depend on the frequency of the underlying oscillation. Intuitively, slower oscillations may be less prone to errors in phase measurement, simply because any fixed temporal uncertainty (say 10 ms) in electrophysiological signals would translate into smaller phase variance at slower frequencies (only 1/10th of a cycle at 10 Hz, but 1/2 of a cycle at 50 Hz). The results in Figure 7, however, demonstrate that oscillatory frequency has little influence on the statistical power of phase opposition measures under the conditions of these simulations. All measures remained over 0.8 as frequency varied from 7 to 40 Hz. Across frequencies, the highest statistical power was given by POS, followed by POP, PBI, and circ. W-W. Higher frequencies did not reduce statistical power, except for a marginal decrease at 40 Hz concerning the circ. W-W test. This robustness is likely to be specific to the conditions of these simulations: ongoing oscillations were given equal power at all frequencies (i.e., created from white noise; see Footnote 5), and no ERP was included here that could have confounded phase measurements in specific frequency bands. (A later set of simulations, in Section Frequency of oscillatory phase modulation (with ERP), will explore the effect of oscillatory frequency in the presence of sizeable ERPs.) Finally, the time-frequency maps in Figure 7 also underline that the temporal resolution of phase opposition measures, evident in the temporal spread of significant activations, is directly related to oscillatory frequency, with increased temporal precision at higher frequencies (reflecting, in part, the temporal extent of the wavelets used in the time-frequency transform).

Figure 7. Effect of varying frequency of oscillatory phase modulation (in the absence of an ERP). For improved readability, only the parametric circ. W-W test and the hybrid permutation + z-score tests are presented in this and all subsequent figures. Each conventional permutation test (not shown) consistently mirrors the patterns of the corresponding hybrid test, only with marginally lower statistical power. All tests appear relatively robust to changes in oscillatory frequency.

ERP Amplitude

The onset of a sensory stimulus at time zero is normally followed by an event-related potential (ERP in EEG, also called event-related field or ERF in MEG), reflecting the activation of the sensory and perceptual apparatus of the corresponding modality, and potentially also a number of subsequent attentional and cognitive operations (Luck, 2014). ERPs have relatively high amplitude compared to that of ongoing brain oscillations. And, most importantly for our purposes, ERPs strongly affect measurements of oscillatory phase. It is still fiercely debated whether ERPs denote a phase reset of ongoing oscillations, or are merely superimposed on them (Makeig et al., 2002; Fell et al., 2004; Mazaheri and Jensen, 2006; Min et al., 2007; Sauseng et al., 2007); but in either case, phase measurements in time-frequency windows that overlap with ERPs cannot provide an independent estimate of ongoing oscillatory phase, and in some cases (depending on the existence of phase reset, and on the relative amplitude of ongoing and evoked signals) could be entirely dominated by the phase of ERP signals.

It appears necessary, therefore, to evaluate the statistical power of phase opposition measures in the presence of ERPs. Importantly, for these simulations, we assumed that the phase of background ongoing oscillatory signals, free of any ERP, was the key element in determining perceptual outcome (we had direct access to these background oscillatory signals since they had been artificially created; see Figure 3, top right). Subsequently, these background signals were summed with ERP waveforms, and the phase opposition measures were only given to operate on the summed signals (just as would happen in a genuine experimental situation). We could thus evaluate any disruption caused by ERPs masking the relevant phase of the background signal. In this set of simulations, we systematically varied the average ERP amplitude, but maintained it equal for the two perceptual outcomes, that is, ERPA = ERPB (a later set of simulations in Section ERP amplitude difference, will explore situations where the two ERPs are unequal).

As expected, increasing ERP amplitude had a detrimental effect on all phase opposition measures (Figure 8). POS and circ. W-W were the most robust measures, retaining statistical power above 0.8 for all ERP amplitudes tested, followed by POP and PBI, which decreased to 0.67 and 0.44 statistical power, respectively. The detrimental effect of ERPs on phase opposition is particularly visible in the four time-frequency maps of Figure 8 (compared, for example, to the upper two time-frequency maps of Figure 6). The region of significant phase opposition (when present) is smaller, and its center shifted to the left, i.e., 50–100 ms earlier than the actual time t = 0 at which phase modulation was applied. This shift can be understood as ERPs (whose phase is sensibly similar across all trials) “masking” the phase opposition between trial groups. The spectral signature of ERPs, and hence the time-frequency region where ERPs can potentially affect phase opposition, is clearly visible as a lighter blue “hill” in the bottom-right time-frequency map.

Figure 8. Effect of varying ERP amplitude. Parameters ERPA and ERPB were varied together from 0 to 50 (arbitrary units, the relevant point of comparison being the standard deviation of the background noise whose oscillatory phase was used to determine perceptual outcome: this standard deviation was fixed at 10 units in all simulations). As expected, the statistical power of all measures decreased with increasing ERP amplitude. Both POS and circ. W-W retained satisfactory power (above 0.8) at all amplitudes tested, while POP and PBI fell down to 0.67 and 0.44, respectively. Time-frequency maps illustrate distortions in the phase opposition landscape caused by ERPs, in particular a leftward shift of latency (compare, for example, to upper time-frequency maps in Figure 6). The time-frequency spectral signature of ERPs is visible as a lighter blue “hill” in the bottom-right map.

A logical (albeit indirect) consequence of these simulations is that phase opposition may be less easily detected in experiments where stimulus onset is temporally predictable, because of e.g., a fixed inter-trial interval, or a preparatory cue (see also Footnote 3). Indeed, any pre-stimulus event bearing a fixed temporal relation to stimulus onset (such as the end of the preceding trial, or the onset of the preparatory cue) will evoke its own response activity pattern, affecting the phase distribution of “ongoing oscillations” in all trials, independently of the eventual trial outcome. This is more or less equivalent to the present simulations, in which a common “evoked” signal (an ERP) is affecting the phase of both trial groups in a similar manner, i.e., ERPA = ERPB (with a major difference, of course, in the time at which this influence is exerted—early vs. late in the pre-stimulus interval).

For all subsequent simulations (except the further investigations of ERPs in Section ERP amplitude difference), we shall fix the ERP amplitude parameters to ERPA = ERPB = 10, an intermediate value equivalent to the amplitude of the background noise (whose oscillatory phase serves to determine the trial outcome, see Figure 3, top-right).

Trial Number

The reliability of inter-trial coherence estimates is notoriously dependent on trial number (Moratti et al., 2007; Vinck et al., 2010). Phase opposition measures directly inherit this dependence (Equations 4–7). As trial number is a key element of any experimental design, which can limit or even forbid addressing certain experimental questions, the exact influence of this parameter (n) is important to assess.

When decreasing trial number n from 1000 down to 10 (Figure 9), the anticipated decrease of statistical power occurred for all measures, yet at slightly different rates. Non-parametric measures decreased slowly down to 200 trials, and faster afterwards (reaching levels close to chance at 50 trials and below). POS, for example, which showed the highest statistical power (0.985) at 1000 trials, remained above 0.8 down to nearly 200 trials. In contrast, the parametric measure (circ. W-W) decreased much faster. Even though it was on par with POS down to 500 trials, at 200 trials and below it had much lower statistical power than all other measures. At 100 trials it showed no significant phase opposition (chance level = 0.19; bottom-left time-frequency map), while POS could still detect phase opposition in more than half of the simulations (0.56; top-left time-frequency map).

Figure 9. Effect of varying trial number. Parameter values used for n were 10, 50, 100, 200, 500, and 1000. While all measures were comparably efficient using 1000 trials, their statistical power systematically decreased when less trials were simulated. Below 500 trials, circ. W-W decreased much faster than other measures, reaching chance performance at 100 trials (bottom-left time-frequency map), while other measures still showed above-chance performance (top-left time-frequency map).

Another lesson that can be learned from this set of simulations is that, whatever the measure used (even PBI, which so far systematically displayed the worst statistical power), a reliable estimate of phase opposition can always be obtained given enough trials (on the order of 1000 trials in these simulations; Figure 9).

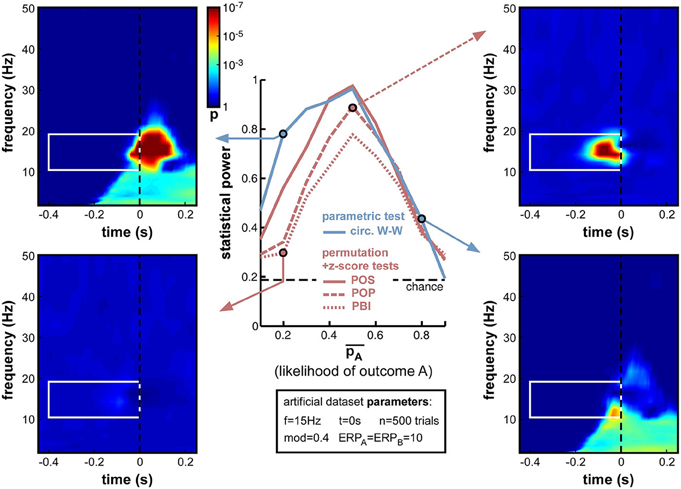

Relative Trial Number

Not only the total number of trials n, but also the relative number of trials in each group can influence phase opposition measures. In the previous simulations the experimental outcomes A and B were always equiprobable ( = 0.5). But experimental designs can often be biased (voluntarily or involuntarily) such that one outcome is more likely than the other. For example, the proportion of correct trials in a two-alternative forced-choice discrimination task should always be well above 50% (chance level); any phase opposition between correct and incorrect perceptual outcomes in such a task would thus be plagued by different trial numbers for the two groups. In turn, this difference may influence measures of phase opposition, either because one of the two groups would count an insufficient number of trials (as explored in the previous simulations, Section Trial number), and/or because the reference quantity ITCall would be biased toward ITCA or ITCB (which would consequently affect all phase opposition measures as described in Equations (4–7).

Indeed, this new set of simulations revealed that all phase opposition measures were only truly efficient when outcomes were equiprobable, i.e., = 0.5 (Figure 10). Any departure from this equilibrium was sanctioned by a loss of statistical power, most measures falling below 0.8 if one of the outcomes was twice as likely as the other (i.e., < 0.33 or > 0.66). The most robust measure against this parameter manipulation was circ. W-W, followed by POS, then by POP and PBI.

Figure 10. Effect of varying relative trial number (likelihood of outcome A). Parameter was varied from 0.1 to 0.9 in steps of 0.1. Optimal performance was obtained in a situation with equiprobable outcomes ( = 0.5), while unbalanced situations strongly impaired statistical power. Circ. W-W and POS were the most successful tests, followed by POP and PBI. The asymmetry that is particularly visible for the circ. W-W test (compare top-left to bottom-right time-frequency maps) is caused by an interaction between the phase of ERPs and the ongoing oscillatory phase that favors outcome A vs. B.

Interestingly, a strong asymmetry was apparent in the statistical power curves of the circ. W-W and POS measures (Figure 10), with lower performance when A was more likely than B (i.e., in the second half of the curves). Circ. W-W retained 0.78 statistical power with = 0.2 (see top-left time-frequency map), but fell down to 0.43 with = 0.8 (see bottom-right time-frequency map). This puzzling result (considering that A and B were arbitrarily assigned labels) can in fact be explained by Equation (9): due to the cosine function, outcome A was always maximal around phase 0, and outcome B at the opposite phase. This arbitrary choice effectively broke the symmetry of the dataset when in the presence of ERPs: the phase of the ERP signal around the critical time, similar for the two trial groups, could help (top-left map) or impair phase opposition detection (bottom-right map), depending on which of the two trial groups had a phase closest to that of the ERP11.

Overall, the strong decrease of statistical power obtained with unbalanced datasets (Figure 10) has important implications for experimental design and data analyses practices. Equiprobable outcomes should be sought whenever possible. Of course, a dataset resulting from an unbalanced experimental design can always be subsampled to restore equal numbers of trials in the two groups (e.g., by randomly rejecting trials from the group having higher likelihood), but this will necessarily come at the cost of decreasing the overall trial number, which, as demonstrated above (Figure 9), can also have potentially drastic consequences.

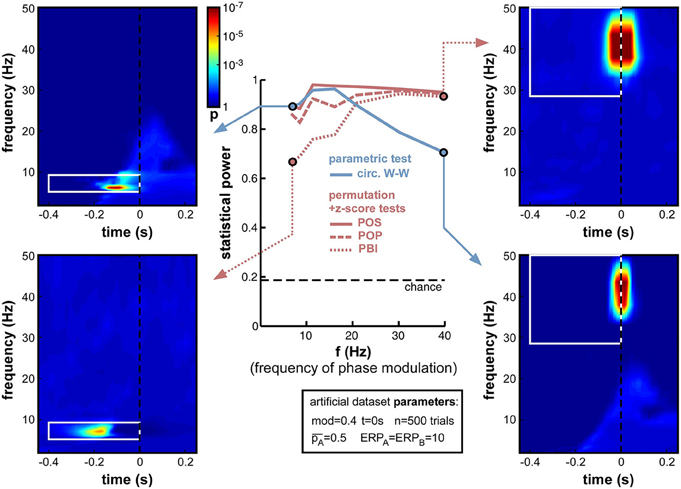

Frequency of Oscillatory Phase Modulation (with ERP)

The presence of an ERP, contaminating time-frequency estimates around stimulus onset and from roughly 0 to 30 Hz (in our simulations), impedes the statistical power of phase opposition measures when the phase modulation is applied at t = 0 and for 15 Hz ongoing oscillations (Figure 8). It is likely, however, that this contamination might differ were phase opposition applied for a different frequency or at a different time. This set of simulations and the next were designed to address these two questions.

First, we varied the oscillatory frequency f at which the phase modulation was applied, exactly as in Figure 7, but this time in the presence of a sizeable ERP (ERPA = ERPB = 10). The results revealed the same pattern as in Figure 7, only exacerbated (Figure 11). POS was the most successful measure at nearly all frequencies. Circ. W-W performed well at low frequencies (below 15 Hz, see top-left time-frequency map), but was the least successful above 20 Hz (down to 0.7 statistical power at 40 Hz, see bottom-right time-frequency map). POP and especially PBI displayed the opposite behavior, strongly affected by ERPs at low frequencies (PBI reaching down to 0.69 statistical power at 7 Hz, see bottom-left time-frequency map12), yet equivalent to the best measure (POS) at frequencies above 30 Hz (see top-right time-frequency map).

Figure 11. Effect of varying frequency of oscillatory phase modulation in the presence of an ERP. The pattern of frequency-dependence for the different phase opposition measures is similar to that observed in Figure 7, only exacerbated by the presence of an ERP. POS was consistently the highest-performing measure. Circ. W-W performed best at low frequencies (top-left time-frequency map) and worst at high frequencies (bottom-right map). PBI (and, to a lesser extent, POP) showed the opposite pattern, with maximal performance at high frequencies (top-right map) and minimal performance toward low frequencies (bottom-left map).

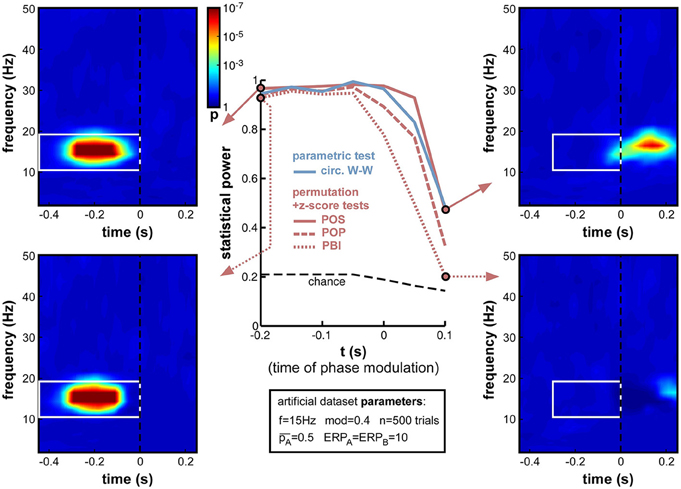

Time of Oscillatory Phase Modulation

Next, we varied the time t at which phase modulation was applied, from −200 to +100 ms relative to stimulus onset (the frequency f was maintained at 15 Hz for this set of simulations). If a sensory or cognitive function operates periodically under the effect of an ongoing brain oscillation, the moment at which oscillatory phase should maximally influence the outcome of this function (and thus, phase opposition should be maximal) is the precise moment at which this function comes into play. In all logic (and given our experimental assumption of a randomized, unpredictable stimulus onset; See Experimental assumptions), this should happen after stimulus onset, around the activation latency of the corresponding brain region or network. Unfortunately, this moment should also coincide with the emergence of ERPs, which have the power to mask phase opposition (Figure 8).

Indeed, the simulations (Figure 12) confirmed that, while all measures performed at ceiling (statistical power > 0.9) to detect phase opposition that was introduced at early latencies (at or before −50 ms pre-stimulus), their statistical power dropped to inadequate values (<0.5) by 100 ms post-stimulus, i.e., right in the middle of the ERPs. PBI plunged first, followed by POP, circ. W-W and finally POS. These last two measures were still remarkably efficient (power > 0.95) at the exact time of stimulus onset (t = 0).

Figure 12. Effect of varying time of oscillatory phase modulation. The phase that determined the trial outcome A vs. B was extracted from background electrophysiological signals (by filtering and Hilbert transform, see Figure 3) at different moments around stimulus onset. The presence of an ERP with strong phase-locking across (all) trials may be expected to obscure the underlying phase opposition at specific time-frequency points. For latencies between −200 and −50 ms, all phase opposition measures performed optimally (statistical power > 0.9). With increasing latencies, all measures deteriorated, in the following order: PBI first, followed by POP, circ. W-W and finally, POS.

It is worth insisting that our calculation of statistical power only took into account pre-stimulus time-frequency points, even in simulations where the phase opposition was actually applied at post-stimulus latencies. This choice is justified by two reasons. First, the stationarity of oscillatory signals and the window length of the filtering operations required to extract phase values should imply that information about the critical oscillatory phase is already available in the pre-stimulus time window (even when the critical time t is itself in the post-stimulus period). Second, post-stimulus “phase opposition” may be spuriously introduced by the ERPs, as illustrated for example in the bottom-right time-frequency map of Figure 10 concerning circ. W-W, or in the bottom-left map of Figure 13 concerning POS. In these maps, large time-frequency regions of (spurious) “significant phase opposition” can be observed in the post-stimulus region and at low frequencies (below 10 Hz), even though no phase modulation actually occurred in this time-frequency range. We took the point of view of an experimenter who does not have access to the ground truth, and must therefore beware that any post-stimulus effect of phase could be the product of ERP-induced artifacts; and we thus restricted our search for phase opposition to the pre-stimulus time window13.

Figure 13. Effect of varying ERP amplitude difference. ERP amplitude for one group of trials (group B) was fixed at ERPB = 10 arbitrary units (indicated by the vertical gray arrow; note the non-linear x-axis scale). For all phase opposition measures, statistical power was best when ERPA was approximately equal to ERPB, and declined both when ERPA was higher (right time-frequency maps) and when it was lower (left maps; even though the phase of group A trials could be more accurately estimated in this latter case). When ERPA and ERPB were approximately equal, POS was the best performing measure. However, the POS measure became less reliable than others when the amplitude ratio was higher than 2 or lower than 0.5 (bottom-left map). In that case, the circ. W-W test provided the highest statistical power (top time-frequency maps).

In sum, this set of simulations indicated that phase opposition becomes increasingly difficult to detect if it occurs in a time window coinciding with strong ERPs, and contrarily, increasingly easy to detect when it occurs at early latencies. Of course, this latency is not under the experimenter's control, but depends on the sensory or cognitive function under study. In practice, this implies that phase opposition experiments may be more likely to succeed when they investigate the periodicity of a “peripheral” brain function (with short activation latency, e.g., simple stimulus detection) rather than a more “central” one (with later activation latency, e.g., object recognition or categorization). In any case, POS and circ. W-W tests provide the best outcome in such situations.

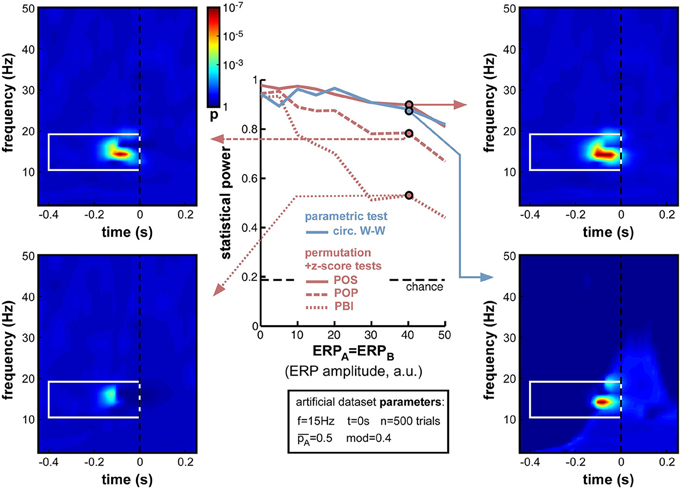

ERP Amplitude Difference

So far, the influence of ERPs on phase opposition has only been investigated in situations where both task outcomes A and B produced ERPs of similar amplitude (ERPA = ERPB). This influence was found to be already fairly detrimental in such situations (see, in particular, Figures 8, 12). But many experimental tasks can also be expected to produce different ERPs for different task outcomes. For example, the amplitude of certain ERP components is commonly found to vary, depending on the subject's behavior. In one extreme situation, with brief visual stimuli at detection threshold, Busch et al. (2009) found a strong ERP for hits but virtually no ERP amplitude for misses. As the potential masking of ongoing oscillatory signals by ERPs is directly related to ERP amplitude (whether this amplitude is the reflection of a phase reset process and/or of an additive signal), an asymmetry in ERP amplitudes for the two trial groups A and B could translate into an imbalance between the quantities ITCA and ITCB (relative to their baseline ITCall), and thus affect the calculation of phase opposition (through Equations 4–7).

To explore the effects of such an ERP imbalance14, we fixed the ERP amplitude of trial group B to ERPB = 10 (arbitrary units), and systematically varied the amplitude ERPA of the other trial group from 0 to 50. The resulting statistical power curves (Figure 13) revealed that ERP imbalance could indeed impair phase opposition: all curves peaked around ERPA = 10, that is, when the two ERPs were approximately equal. In fact, the performance decrease appeared nearly proportional to the ratio between highest and lowest ERP amplitude, regardless of whether ERPA was above or below ERPB. This means that the impairment due to ERP amplitude imbalance cannot be compensated for by one of the two trial groups having low (or even null) ERP amplitude, and thus low contamination of phase calculations. PBI was overall the least successful measure, followed by POP. While POS performed above circ. W-W (and all other measures) around the amplitude equilibrium point (ERPA between 5 and 20), this relation reversed and circ. W-W became the most successful measure when imbalance increased. In fact, POS performed worse than any other measure with ERPA ≤ 2, and worse than circ. W-W and POP (but not PBI) with ERPA ≥ 40.

To summarize this set of simulations, while it is best not to have any ERP contamination (see Figure 8), whenever ERPs are present (i.e., in most situations) it is better to have them as equal as possible for the two trial groups. Around the equilibrium point, POS has the highest statistical power, but when the ERP amplitude ratio is above 2 (or below 0.5), circ. W-W becomes preferable.

Discussion

Seven measures of phase opposition were compared in a variety of real and artificial experimental situations. All measures proved their value on a previously published dataset (Busch et al., 2009; Figure 4). On a series of artificial datasets with systematic manipulations of experimental parameters, we were able to provide a detailed assessment of all methods, and understand their common or individual reactions to changes in certain key parameters. These results informed us about the conditions in which phase opposition experiments are most likely to succeed, and about the right measure(s) to use for every situation.

Limitations

The present explorations, while hopefully useful, are necessarily incomplete. Other parameters or parameter combinations would certainly have been interesting to test. For example, the influence of ERPs (Figure 8) or of the timing of phasic modulation (Figure 12) are likely to be frequency-dependent, that is, different patterns of results might have been obtained if a slower (say, 7 Hz) or a faster (say, 30 Hz) phasic modulation frequency had been employed. In addition, changes in the shape and/or latency of ERPs between trial groups (which were not included here) could be expected to influence the statistical power of our different measures by introducing spurious phase opposition. Changes in the noise structure (e.g., from white noise to pink or brown noise; see Footnote 5) are also likely to affect the frequency-dependence of phase opposition (Figures 7, 11). The range of possible factors to consider is virtually limitless. Nonetheless, we can hope that the key conclusions obtained in the various simulations performed here could generalize to a larger range of investigations.

Similarly, the choice of seven particular phase opposition measures necessarily leaves aside a number of other alternatives. Circular-to-linear correlation can be employed, for example, in experiments with a graded task outcome (e.g., reaction time), which would have been grouped here into two outcomes A and B (e.g., faster vs. slower reaction times). Studies that are motivated by a strong a priori hypothesis about the relevant oscillatory rhythm (e.g., occipital alpha) could dispense with time-frequency phase opposition analyses altogether (comparing phase distributions across task outcomes), and instead plot task outcome as a function of phase (similar to the top-right plot in Figure 3) in order to evaluate the depth of any sinusoidal modulation. Besides, standard inter-trial coherence in Equations (4–7) could be replaced by an equivalent but unbiased measure of phase-locking such as the pairwise phase consistency or “PPC” (Vinck et al., 2010). Causal filters (Zoefel and Heil, 2013) or ERP removal and interpolation procedures (Lakatos et al., 2013; Henry et al., 2014) could be employed to avoid post-stimulus contamination of phase measurements. By no means do we wish to imply that the measures tested here are the only alternatives; however, all these measures have been employed recently by our group and others worldwide to explore phase opposition in time-frequency electrophysiological data. We thus anticipate that the comparison of these procedures should prove helpful to at least these experimenters, and hopefully also to others that may follow the same inspiration.

Key Messages

For all three ad-hoc phase opposition measures introduced here (POS, POP, PBI), a streamlined non-parametric statistical procedure using a relatively small number of surrogates (permutations) to characterize the mean and standard deviation of the null hypothesis distribution, and subsequently deriving p-values from a z-score, was found to be preferable to the standard non-parametric permutation procedure. With large numbers of permutations, the two statistical procedures were equally efficient (Figure 6), but contrary to the standard procedure, the hybrid permutation + z-score tests did not suffer noticeably when the permutation number was decreased. With 1000 permutations, as used in most of our simulations, this hybrid permutation + z-score test always outperformed the conventional permutation procedure. We thus recommend using the hybrid technique, or else using the conventional permutation method with sufficiently large numbers of permutations (at least 10,000).

No single measure was systematically better than all the other ones; rather, the optimal measure was strongly tied to the exact parameter values of the artificial dataset generation process. Across all simulations (Figures 5–13), however, the winning measure was always either POS or circ. W-W, and never POP or PBI. We thus recommend abandoning these two measures, especially PBI which we had introduced in 2009 (Busch et al., 2009) and which has been independently employed in (at least) 13 publications since (Hamm et al., 2012; Ng et al., 2012; Auksztulewicz and Blankenburg, 2013; Hanslmayr et al., 2013; Manasseh et al., 2013; Rana et al., 2013; Diederich et al., 2014; Park et al., 2014; Li et al., 2015; Shou and Ding, 2015; Strauss et al., 2015; van Diepen et al., 2015; Batterink et al., 2016). Although there is no indication that PBI would have led authors to erroneous conclusions in any of these studies, it is also clear that more accurate results (or higher statistical power) would have been achieved using other measures.

Under what conditions should the non-parametric POS measure be used, and under what conditions the parametric circ. W-W test? In many of the situations tested, both of these did provide equivalent results (and it might then be preferable to use the parametric method, if only because it does not require computationally intensive permutations). In specific cases, however, their performance was found to differ. POS was reliably better with low trial numbers (200 or less; Figure 9), for higher frequencies of phase modulation (20 Hz and above; Figures 7, 11), and for phasic modulations occurring just after stimulus onset (around 50 ms; Figure 12). In turn, circ. W-W was better in asymmetric situations where either the relative trial number (Figure 10) or the ERP amplitude (Figure 13) differed markedly between the two trial groups. As many of the relevant parameter values cannot be known in advance and are not under the experimenter's control (e.g., frequency or timing of phasic modulation), we recommend systematically using both methods. In order to make these analysis methods more easily accessible to all experimenters, and to make the results more easily comparable between studies, we provide associated Matlab code (http://www.cerco.ups-tlse.fr/~rufin/PhaseOppositionCode/) to automatically compute p-values derived from circ. W-W and POS procedures (with both standard permutation and hybrid permutation + z-score tests implemented for the latter).

Conclusion

Independent of the exact analysis procedure employed, our explorations have revealed that the likelihood for an experimenter to detect phase opposition in an electrophysiological dataset is affected by several factors. This was true, even using a constant magnitude of phasic modulation (for example, in most of our simulations a reversal of rhythmic phase produced a 40% modulation of the probability of task outcome A vs. B; Figures 6–13). Among the most important factors to consider for phase opposition analyses are the absolute and relative trial numbers (Figures 9, 10), the overall ERP amplitude (Figure 8) and any potential ERP amplitude difference between the trial groups (Figure 13), as well as the frequency (Figure 11) and exact time (Figure 12) of the phasic modulation. Throughout all these simulations, the phase bifurcation (PBI) measure performed systematically worse than others. The optimal statistical power was shared (alternately) by one parametric measure, the circular Watson-Williams test (circ. W-W) and a non-parametric one, the phase opposition sum (POS). Our conclusion is, therefore, to recommend using these two tests in any future exploration of phase opposition. The analysis code that we provide should hopefully facilitate that objective.

Author Contributions

The author confirms being the sole contributor of this work and approved it for publication.

Conflict of Interest Statement

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This work was supported by an ERC Consolidator grant P-CYCLES number 614244. The author wishes to thank all past and present members of his lab for useful discussions on phase opposition, and in particular D. Lozano-Soldevilla, S. Crouzet, and B. Han for further providing helpful comments on the manuscript.

Footnotes

1. ^Other demonstrations of rhythmic operation can link pre-stimulus oscillatory power or phase synchrony to neural or perceptual output (Ergenoglu et al., 2004; Hanslmayr et al., 2005; Thut et al., 2006; Hanslmayr et al., 2007; van Dijk et al., 2008; Keil et al., 2012, 2014a).

2. ^The Watson-Williams test included in the Matlab Circular Statistics toolbox (Berens, 2009) can already handle more than 2 trial groups. The PBI, POP, and POS measures can be extended to n trial groups (n > 2) by inserting additional terms in the corresponding Equations (5–7). A detailed analysis of these measures' behavior in experiments producing three or more trial outcomes is unfortunately beyond the scope of the present study.