The nucleus accumbens as a nexus between values and goals in goal-directed behavior: a review and a new hypothesis

- 1Laboratory of Computational Embodied Neuroscience, Institute of Cognitive Sciences and Technologies, National Research Council, Rome, Italy

- 2Department of Psychology, The University of Sheffield, Sheffield, UK

Goal-directed behavior is a fundamental means by which animals can flexibly solve the challenges posed by variable external and internal conditions. Recently, the processes and brain mechanisms underlying such behavior have been extensively studied from behavioral, neuroscientific and computational perspectives. This research has highlighted the processes underlying goal-directed behavior and associated brain systems including prefrontal cortex, basal ganglia and, in particular therein, the nucleus accumbens (NAcc). This paper focusses on one particular process at the core of goal-directed behavior: how motivational value is assigned to goals on the basis of internal states and environmental stimuli, and how this supports goal selection processes. Various biological and computational accounts have been given of this problem and of multiple related neural and behavioral phenomena, but we still lack an integrated hypothesis on the generation and use of value for goal selection. This paper proposes a hypothesis that aims to solve this problem and is based on these key elements: (a) amygdala and hippocampus establish the motivational value of stimuli and goals; (b) prefrontal cortex encodes various types of action outcomes; (c) NAcc integrates different sources of value, representing them in terms of a common currency with the aid of dopamine, and thereby plays a major role in selecting action outcomes within prefrontal cortex. The “goals” pursued by the organism are the outcomes selected by these processes. The hypothesis is developed in the context of a critical review of relevant biological and computational literature, which offers it support. The paper shows how the hypothesis has the potential to integrate existing interpretations of motivational value and goal selection.

1. Introduction

Instrumental learning, the process of acquiring the capacity to select actions based on the utility of their outcomes, is a fundamental means through which animals adapt to changes in their environment. These changes may be profound, as the ecological niches occupied by animals can vary substantially during the life of a single individual. For example, the superficially straightforward behaviors of foraging, escaping predators, and searching for con-specifics must be flexible and dynamically adjust to continuously changing environmental conditions. Moreover, action selection processes have to flexibly adjust on the basis of internal states and needs of the animal, as these continuously change in the course of the day. Only when there are strong invariances in the contingencies between action and valuable outcomes, based on reliable environmental and internal processes, can behavior become more regular or habitual. When this is not possible, the selection of instrumental actions is based on the current value of action outcomes, or goals (Balleine and Dickinson, 1998). Here we use the term goal to indicate the internal representation of an action outcome currently chosen as the target of the animal's behavior because of the incentive salience, or motivational value, associated with the outcome. Incentive salience has been defined as a motivational attribute that the brain assigns to stimuli if these are related to the possible satisfaction of some of the animal's homeostatic drives (Berridge, 2004; we shall see how our hypothesis expands the concept of motivational value to include value related to the novelty of outcomes). The theory proposed here is hence relevant for decisions that involve the selection of action goals on the basis of their current value for the animal, in particular “ultimate goals” consisting in the achievement of, or interaction with, items having an intrinsic biological salience (e.g., food, water, and novel objects). When behavior is sensitive to the value assigned to goals and to the contingencies between goals and actions that can accomplish them, it is referred to as goal-directed behavior (Dickinson and Balleine, 1994; Balleine and Dickinson, 1998).

The processes and neural mechanisms through which the brain generates and assigns motivational incentive salience to goals are an important open problem for current neuroscientific research: this paper addresses this issue by first offering a critical review of the relevant literature, and then by proposing a novel hypothesis to solve it. The biological literature indicates that goal-directed behavior rests on the acquisition of two key types of associations: first, the associations between actions and their outcomes, which have to be learned so that the animal can choose actions when their outcomes become desirable (Balleine and Dickinson, 1998; Yin and Knowlton, 2006); second, the associations between the outcomes and their current motivational value (Balleine and Dickinson, 1998; Balleine and Killcross, 2006; Yin and Knowlton, 2006; Balleine and Ostlund, 2007). Instrumental behaviors that do not rely on these two classes of associations are deemed habitual, being solely based on associations between stimuli and responses (S-R; Balleine and Dickinson, 1998; Yin and Knowlton, 2006).

The neural substrates underlying goal-directed and habitual behavior have been extensively investigated, and several key neural systems have been shown to be involved. These include the basal ganglia, a set of subcortical nuclei which form looped circuits with cortex and thalamus. The main input nucleus to the basal ganglia is the striatum, which may be partitioned, on an anatomical basis, into dorsolateral, dorsomedial and ventral territories. It is the ventral striatum, otherwise known as nucleus accumbens (NAcc), which forms a focus of the paper. The accumbens is further divided into two major sub-components: the “core” and the “shell.” Other key areas for goal-directed behavior include the limbic structures such as the amygdala and hippocampus. The amygdala is a brain system that, along with others (e.g., hypothalamus), helps homeostatic regulation of internal bodily organs (e.g., heart rate and blood pressure) and of the neuromodulators in the brain, and affects the triggering of innate behaviors such as orienting and approach. Hippocampus is a highly-associative multimodal part of the “paleocortex” (phylogenetically older than neocortex) and is strongly connected with all associative cortical areas; it plays an important role in episodic memory, consolidation of long-term memory, and higher-level cognition (e.g., planning).

While the anatomical identity of these key structures has been established, there is, as yet, no complete picture of their operation in behavioral expression, although some broad functional separation can be made. Thus, it appears that habitual behavior, and related learning processes, are rooted in the circuits involving the dorsolateral striatum and motor cortex (Packard and Knowlton, 2002; Yin et al., 2004). In contrast, goal-directed behavior relies on the networks including prefrontal cortex, dorsomedial striatum, and NAcc portions of basal ganglia, and limbic neural structures such as amygdala and hippocampus (Corbit et al., 2001; Yin et al., 2005).

Recently, the theoretical work on these issues has been corroborated by studies based on computational models and formal analyses. In particular, several concepts from reinforcement learning (Sutton and Barto, 1998) and optimal control theory have been exploited to formally capture various features and differences of goal-directed and habitual instrumental behavior. For example, in a seminal paper, Daw et al. (2005) proposed that habitual behavior and its learning can be captured on the basis of model-free reinforcement learning, whereas the functionalities involved in goal-directed behavior can be represented through model-based reinforcement learning.

Within this framework, a key role has been ascribed to the NAcc in terms of processing of current values and reward predictions (Humphries and Prescott, 2010; Bornstein and Daw, 2011; Penner and Mizumori, 2011; Pennartz et al., 2011; Khamassi and Humphries, 2012). However, the role played by the NAcc in the interaction between values and outcomes has not been fully clarified. The NAcc, in synergy with amygdala, has also been shown to play an important role in Pavlovian (classical conditioning) processes, responsible for assigning value to previously neutral stimuli (Corbit et al., 2001; Cardinal et al., 2002b; Day et al., 2006; Day and Carelli, 2007; Yin et al., 2008; Lex and Hauber, 2010; Mannella et al., 2010). These processes have also been shown to produce “energizing” effects on instrumental behavior, e.g., causing lever pressing with higher strength and frequency, based on the value assigned to the stimulus (this phenomenon is known as “Pavlovian to Instrumental Transfer,” or PIT; Corbit et al., 2001; Hall et al., 2001; Corbit and Balleine, 2011). Notwithstanding this evidence, the key contribution of NAcc in assigning value to goals in goal-directed processes has not been fully spelled out. Furthermore, while the contribution of the amygdala-accumbens system is known to be important when appetitive and aversive motivational values are involved, a possible role of the hippocampal projection to accumbens in supplying goal-value has still not been clarified. It is known that the hippocampus plays a key role in goal processing (Pennartz et al., 2011) and also in the detection of the salience of stimuli based on their novelty (Lisman and Grace, 2005).

Our account seeks to unify these observations under the idea that NAcc serves to integrate different types of value sources used to select goals. This perspective will specify and articulate in a new way the classic idea that the NAcc acts as an interface between “the limbic system and the motor system” of the brain (Mogenson et al., 1980). In particular, in this paper we propose an integrated system-level hypothesis to explain how various types of motivational values are transferred to goals via the NAcc, and how goals, in turn, control instrumental behavior. The hypothesis also explains the role played by the projections of amygdala and hippocampus to NAcc in defining different types of value, in particular values related to appetitive, aversive, and novel stimuli (although we will deal with aversive stimuli only marginally).

The basic idea is that Pavlovian processes in amygdala, and novelty-detection processes in hippocampus, are capable of assigning motivational value to biologically relevant stimuli and events. The NAcc collects information on motivational value from disparate sources and encodes it in an integrated way in the “common currency” of its activity. This information is then used, via ventral basal ganglia connectivity to prefrontal cortex, to select among possible future goals encoded there. Further, we propose two mechanisms for this. The first involves accumbens core which contributes to goal selection with the same mechanisms used by other striatal territories to make selections: competition between alternative options and disinhibition of thalamic targets (in this case representing goals), by basal ganglia output nuclei. In contrast, a second mechanism involving accumbens shell exploits its strong connections with the dopaminergic system to make goal selection “promiscuous.” That is, increased dopamine (DA) in accumbens shell makes selection possible with smaller input salience. This “softer” selection scheme may have two possible functions. First, it could foster goal exploration during learning phases and the parallel selection of multiple related goals and sub-goals during exploitation. Second, it could support a more effective summation of the value of outcomes, and hence a better comparison of them, when multiple sources of such value are available at the same time.
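
The contrast between the two proposed mechanisms can be illustrated with a toy sketch. It is not a model from the literature reviewed here: the function names, the threshold, the dopamine gain, and the salience values are all hypothetical, and dopamine is assumed to lower a selection threshold linearly. The sketch only shows how a “hard” winner-take-all scheme differs from a “softer” scheme in which higher DA lets goals with smaller input salience be selected.

```python
def core_selection(saliences, threshold=0.6):
    """'Hard' selection (core-like): at most one goal wins the competition."""
    if not saliences:
        return []
    winner = max(range(len(saliences)), key=lambda i: saliences[i])
    return [winner] if saliences[winner] > threshold else []


def shell_selection(saliences, dopamine, threshold=0.6, da_gain=0.4):
    """'Softer' selection (shell-like): dopamine in [0, 1] lowers the
    effective threshold, so weaker goals can also be selected.
    The linear rule is an assumption made for illustration only."""
    effective = threshold - da_gain * dopamine
    return [i for i, s in enumerate(saliences) if s > effective]


goal_saliences = [0.7, 0.5, 0.3]  # arbitrary illustrative values
print(core_selection(goal_saliences))                 # [0]
print(shell_selection(goal_saliences, dopamine=0.0))  # [0]
print(shell_selection(goal_saliences, dopamine=0.5))  # [0, 1]
print(shell_selection(goal_saliences, dopamine=1.0))  # [0, 1, 2]
```

With low dopamine the two schemes agree; as dopamine rises, the shell-like scheme admits several goals in parallel, which is the “promiscuous” behavior described above.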

Cortex also encodes actions required to bring about the selected goals, and the associations between outcomes and actions that allow the selected goals to activate the representations of specific actions. These are internal models, specifically inverse models (outcome-to-action), usable for action deployment (Gurney et al., 2012). The action representations excited by goals through inverse models are subject to selection via basal ganglia, so allowing their behavioral expression and hence the achievement of the goals that activated them.

The rest of the paper expands the hypothesis as follows. Section 2 presents a focussed review of some main bio-behavioral neuroscientific proposals and computational models aiming to explain the learning and expression of goal-directed behavior. Based on this, section 3 first presents an evolutionary interpretation of the functions and neural structures underlying goal-directed behavior: this is a framework within which we develop the core hypothesis proposed here. The section continues by explaining the neural basis of the hypothesis. In particular it describes the three main components of the hypothesis: (1) the amygdala and hippocampus as the sources of motivational value, (2) the ventral basal ganglia (including NAcc) as the sub-system integrating motivational-value information and selecting goals on this basis, (3) the prefrontal cortex as the main component representing and predicting outcomes, and triggering the execution of actions that lead to these outcomes, based on action-outcome contingency representations. Finally, section 4 draws conclusions, in particular highlighting how the proposed hypothesis reconciles most functions attributed in the literature to NAcc. The acronyms used in the paper are listed in Table A1.

2. Goal-Directed Behavior: Current Biological and Computational Frameworks

2.1. Goal-Directed Behavior: Key Functional Processes

The definition of goal-directed behavior is based on two behavioral effects and the experimental paradigms to investigate them, namely contingency degradation and instrumental devaluation. In a typical contingency degradation experiment (e.g., Balleine and Dickinson, 1998) an animal first learns to produce an instrumental action (e.g., a lever press) to obtain a reward (e.g., a food pellet). After this training the same reward is presented to the animal independently of the production of the action, so degrading the correlation (“contingency”) between the performance of the action and the experience of its outcome. After the contingency degradation, the animal exhibits a lower probability of performing the instrumental action. These results indicate that, throughout the instrumental training, the animal learns and continuously updates the association between action and outcome. This association is then used to select the current action based on the chosen outcome. In a typical instrumental devaluation experiment (e.g., Balleine and Dickinson, 1998) an animal first learns to obtain two rewards (e.g., a food pellet and a sucrose solution) via two instrumental actions (e.g., pressing a lever and pulling a chain). Then one of the rewards is devalued, for example by letting the animal freely access it until satiation. In a subsequent test where both manipulanda (lever and chain) are presented together “in extinction”—that is without rewards—the animal tends to perform with lower probability the instrumental action corresponding to the devalued outcome.
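
The notion of contingency used in degradation experiments can be made concrete with a small sketch. The measure below, the difference between the probability of the outcome given the action and given no action (often written ΔP), is one common way of quantifying contingency; it is an assumption introduced here for illustration and is not defined in the text above. The function name and the trial counts are likewise hypothetical.

```python
def delta_p(bins):
    """Contingency as P(outcome | action) - P(outcome | no action).

    bins: list of (action_performed, outcome_delivered) booleans,
    one pair per time bin of the session.
    """
    with_action = [outcome for acted, outcome in bins if acted]
    without_action = [outcome for acted, outcome in bins if not acted]
    if not with_action or not without_action:
        raise ValueError("need bins both with and without the action")
    p_outcome_given_action = sum(with_action) / len(with_action)
    p_outcome_given_no_action = sum(without_action) / len(without_action)
    return p_outcome_given_action - p_outcome_given_no_action


# Training: the pellet arrives only when the lever is pressed.
training = [(True, True)] * 10 + [(False, False)] * 10
# Degradation: free pellets are also delivered in half of the no-press bins.
degraded = [(True, True)] * 10 + [(False, True)] * 5 + [(False, False)] * 5

print(delta_p(training))  # 1.0
print(delta_p(degraded))  # 0.5
```

Delivering the reward independently of the action lowers the measure even though every press is still rewarded, which mirrors the logic of the degradation manipulation.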

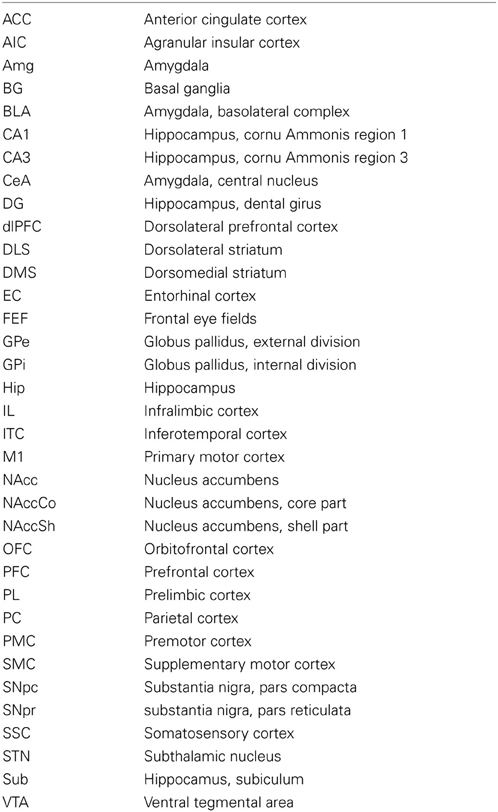

Together, the experiments of contingency degradation and devaluation capture the core functional processes behind goal-directed behavior. Figure 1 summarizes the main ideas in the literature related to the interpretation of the mechanisms underlying these processes and their relation to S-R/habitual behaviors (e.g., see Dickinson and Balleine, 1994; Balleine and Dickinson, 1998; Cardinal et al., 2002a). We now illustrate these processes in detail.

Figure 1. The major associations and processes behind instrumental habitual behavior, goal-directed behavior, Pavlovian processes, and their relations (here we describe only those relevant for this work). At the top, the diagram shows the systems processing the hedonic impact and the incentive value of stimuli, the latter important for the assignment of value to appetitive/aversive stimuli involved in goal-directed processes. The middle of the diagram shows the loop of processes involving goal-directed behavior; here the action representations are associated with outcome representations (instrumental contingency) and then these outcomes are attributed incentive value. In this way, outcomes can trigger the execution of motor responses that lead to them. The bottom of the diagram refers to Pavlovian processes, with the core association between conditioned stimuli (CS) and unconditioned stimuli (US). These have a certain value depending on the animal's internal states. Pavlovian processes can directly trigger unlearned behaviors (e.g., as in conditioned approach experiments) or influence the performance of instrumental behaviors (Pavlovian-Instrumental Transfer, PIT). The diagram also represents the formation of habits (S-R behaviors) as direct associations between stimuli (CS) and motor responses. Reprinted from Cardinal et al. (2002a), Copyright 2002, with permission from Elsevier.

The first set of processes involves the attribution of value to stimuli during consummatory behaviors (related to “liking”; Berridge, 2004). These processes have overt behavioral manifestations and, according to the literature, might be related to mechanisms that are responsible for the attribution of value to outcomes in goal-directed behavior. The second class of phenomena involves the complex processes behind goal-directed behavior (Dickinson and Balleine, 1994; Balleine and Dickinson, 1998). These processes are related to the representation of the associations between action representations and outcome representations (instrumental contingency) investigated in contingency degradation experiments. These mechanisms are also related to the attribution of incentive value to outcomes and the consequent recall of suitable motor responses, the processes investigated in devaluation experiments. The third class of phenomena is related to Pavlovian processes, involving the core associations between conditioned stimuli (CS) and unconditioned stimuli (US; Cardinal et al., 2002a). The US have a value depending on the animal's internal states. Pavlovian processes can directly trigger unlearned behaviors (e.g., as in conditioned approach experiments) or influence the performance of instrumental behaviors (Pavlovian-Instrumental Transfer, PIT; Corbit et al., 2007).

If instrumental actions are repeated a great number of times in constant conditions (“overtraining”) the behavior tends to become insensitive to the value of goals (McDonald and White, 1993; Yin and Knowlton, 2006). In this case, the associations between the perceived stimulus/overall context and the produced responses (S-R) are so strongly encoded that behavior becomes habitual, i.e., mainly guided by external stimuli alone.
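
The difference between value-sensitive and habitual control in a devaluation test can be sketched as follows. This is only an illustration under stated assumptions: the dictionaries, the numeric values, and the function names are hypothetical, goal-directed choice is reduced to looking up the current value of each action's outcome, and habitual choice to reading out fixed S-R strengths that carry no outcome information.

```python
# Learned action-outcome contingencies from the instrumental training phase.
action_outcome = {"press_lever": "pellet", "pull_chain": "sucrose"}


def goal_directed_choice(action_outcome, outcome_value):
    """Pick the action whose outcome currently has the highest value."""
    return max(action_outcome, key=lambda a: outcome_value[action_outcome[a]])


def habitual_choice(sr_strength):
    """Pick the action with the strongest cached S-R association."""
    return max(sr_strength, key=sr_strength.get)


# Hypothetical values before and after satiation on pellets.
value_before = {"pellet": 1.0, "sucrose": 0.8}
value_after = {"pellet": 0.1, "sucrose": 0.8}
# Cached S-R strengths are not updated by devaluation.
sr_strength = {"press_lever": 0.9, "pull_chain": 0.6}

print(goal_directed_choice(action_outcome, value_before))  # press_lever
print(goal_directed_choice(action_outcome, value_after))   # pull_chain
print(habitual_choice(sr_strength))                        # press_lever
```

Only the goal-directed chooser shifts away from the devalued outcome; the habitual chooser keeps responding as before, which is the insensitivity to goal value that overtraining is reported to produce.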

We will show that, with respect to the views described above, our hypothesis presents three important new ideas. First, it proposes that aside from appetitive value, driving both instrumental and Pavlovian processes (and originating mainly from the amygdala, Amg), a second important source of value is used to select goals, namely, “intrinsic value” related to the novelty of stimuli, and originating from the hippocampus (Hip). Second, it specifies the mechanisms of attribution of value to goals, in particular, spelling out the mechanisms through which value is generated and contributes to goal selection. Third, it highlights the importance of the representation of “inverse models” (where the activation of goals/outcomes triggers the recall of actions) instead of the more usually emphasized “forward” or “prediction models” (where the information flow goes from “instrumental action representations” to “instrumental outcome,” see Figure 1); see Gurney et al. (2012), on the distinction between the two types of models.

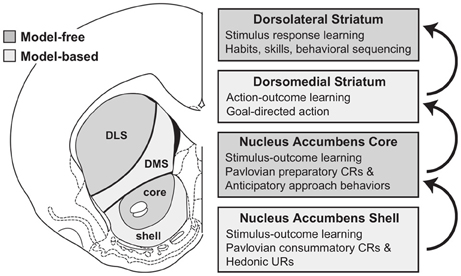

Recently, Gruber and McDonald (2012) have proposed an integrated theory on the brain system underlying goal-directed behavior dealing with some of the ideas described above. This theory has some similarities with, but also important differences from, our proposal (Figure 2). As we do here, Gruber and McDonald (2012) relate the dorsolateral striatum (DLS, in rats; “putamen” in primates) to habits and sensorimotor behavior. However, in contrast to our theory, the main processes involved in goal selection are ascribed to the dorsomedial striatum (DMS, in rats; “caudatum” in primates) and not to the NAcc. The latter is, instead, supposed to implement supportive functions such as the regulation of “energization” or “vigor” of the performed behaviors, and the triggering of behaviors which are ancillary to the main instrumental behavior (for example orienting and approaching). This proposal is part of a literature that tends to closely associate goal-directed behavior to DMS and to ascribe a motivational/supportive role to NAcc (Yin and Knowlton, 2006; Balleine et al., 2007, 2008). Although very relevant, these proposals do not fully explain, as our model does, how information on the ultimate cause of goal selection, namely value, is transmitted to goals. Equally important, the proposal does not fully explain where value originates. For example, the proposal does not explain why, in instrumental devaluation experiments, NAcc is necessary to allow a rat to decide which lever to press, given two levers instrumentally associated with two different foods, on the basis of the value currently assigned to such foods. Moreover, the proposal does not fully articulate how such value, both appetitive and related to novelty, is generated. Thus it would have difficulty in explaining why the basolateral amygdala (BLA) is necessary for the production of the devaluation effects. 
Our proposal explains these results and also reconciles them with the functions that Gruber and McDonald (2012) and similar proposals ascribe to NAcc. In particular, our proposal claims that: (a) In early stages of evolution, NAcc came to play an important role in Pavlovian processes triggering a number of innate behaviors such as those related to orienting, approaching, and avoidance (see section 3.1 for details). (b) The NAcc encodes outcome value originating from different sources with a unique “currency” (namely, the activation of the representations of the possible outcomes themselves). This value representation is intimately related to DA, as NAcc is one of the main regulators of, and targets for, DA production. Such DA production is one of the main physiological correlates of vigor transferred by NAcc to lower-level striatal regions implementing actions (see section 3.5). (c) With the later evolutionary expansion of prefrontal cortex (PFC) in mammals and, hence, the potentiation of their capacity to form, represent, and manage goals, the NAcc has acquired a prominent role in the assignment of value to such goals; this is the main function of NAcc expanded in this paper (see sections 3.1 and 3.5). (d) The goals selected on the basis of these mechanisms then contribute to selecting the actions to be performed via the top-down control exerted by the NAcc-PFC system on the lower-level associative and sensorimotor striato-cortical systems via cortical and sub-cortical inverse models (see section 3.6). The DMS plays a key role in the latter process and in the specification of sub-goals, hence its importance for learning and expressing goal-directed behavior.

Figure 2. Diagram of the rat brain illustrating a proposal on the role of DLS to select habits, of DMS to drive goal-directed behavior, and of NAcc to assign vigor to the performance of selected behaviors and to trigger ancillary behaviors such as orienting and approaching. Reprinted with permission (Gruber and McDonald, 2012).

2.2. Goal-Directed Behavior: Neural Correlates

Instrumental goal-directed behavior is supported by both the activity of cortical regions and the activity of various subcortical neural components. Balleine et al. (2009) summarize the most important neural regions needed for the acquisition and/or expression of instrumental devaluation and contingency degradation in rats based on the effect of lesions on the two behavioral effects. Among these, lesions of the prelimbic cortex (PL; Corbit and Balleine, 2003), the DMS (Yin et al., 2005), or the mediodorsal thalamus (Corbit et al., 2003; Ostlund and Balleine, 2008) result in a lack of expression of both effects. In contrast, lesions of the orbitofrontal cortex (OFC; Ostlund and Balleine, 2007a,b) or the entorhinal cortex (EC) result in a lack of expression of contingency degradation alone (but not so in primates, where OFC is important for instrumental devaluation; Euston et al., 2012; Roberts, 2006). Finally, lesions of the NAcc, in particular of its core sub-component (NAccCo; Corbit and Balleine, 2003), result in a lack of expression of instrumental devaluation alone. Importantly, the acquisition and expression of instrumental devaluation are also disrupted by damage to the Amg, in particular the BLA (Balleine et al., 2003; Mannella et al., 2010).

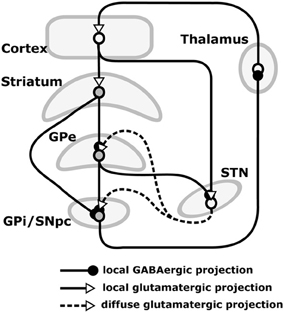

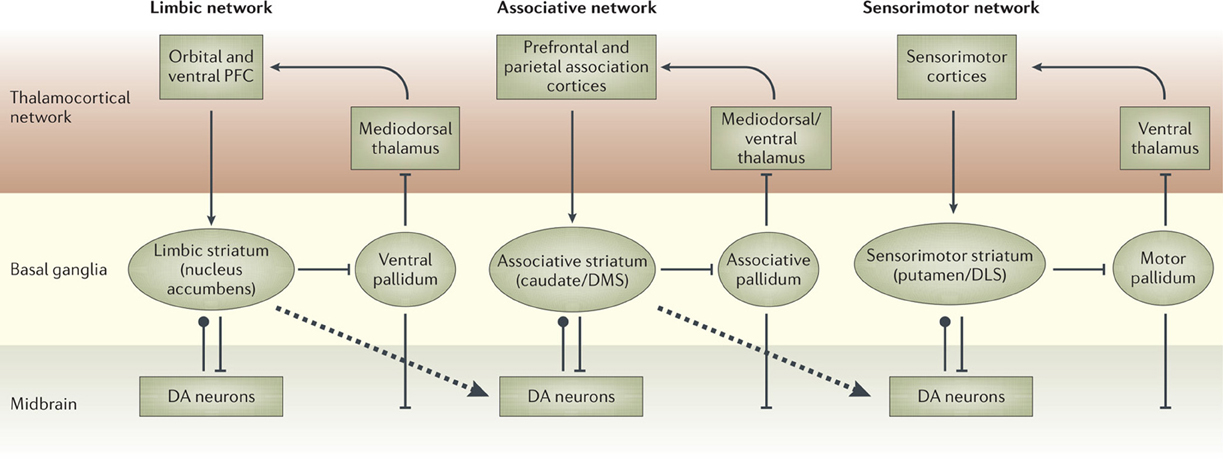

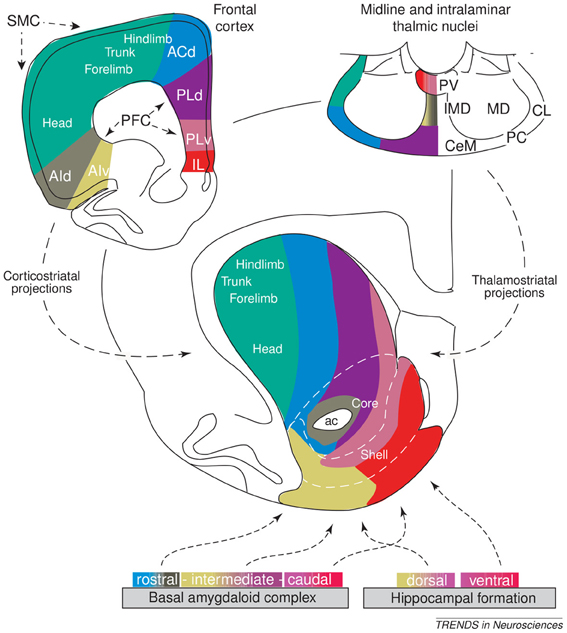

Our hypothesis must be consistent with the functional implications of these lesions, and so we now review the empirical evidence needed to make sense of them. The basal ganglia (BG) play a variety of roles in the acquisition and expression of goal-directed behavior, with different territories of BG supporting this diversity of functions. There is wide agreement that one major function of BG is selection (Alexander et al., 1986; Redgrave et al., 1999). The functional anatomy of BG reveals an organization supporting parallel, segregated loops through cortex whose internal structure is substantially invariant (Figure 3; this pattern is, however, different for the NAcc shell, NAccSh; see below). Each loop receives the greatest part of its input from a specific cortical region and projects to the same cortical region via the thalamus. Within each loop, a cortical cell assembly associated with a particular action or another cortical content excites a focussed part of striatum. This causes inhibition of a corresponding part of the output nuclei of BG (globus pallidus pars interna, GPi, and substantia nigra pars reticulata, SNpr) which, in turn, disinhibits a restricted portion of the thalamus and the related cortex (Chevalier and Deniau, 1990). Thus, within each loop, multiple functional channels can select different cortical neural assemblies associated with action representations or other cortical contents (Mink, 1996; Redgrave et al., 1999).
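
The disinhibition scheme just described can be sketched as a chain of simple rate units, one per channel. This is a deliberately minimal illustration, not a published BG model: it omits GPe, STN, and dopamine, and the function name, weights, tonic level, and gating threshold are all hypothetical.

```python
def bg_loop(cortex, tonic_gpi=1.0, w_str_gpi=1.0, gate_threshold=0.5):
    """One pass through a cortex -> striatum -> GPi/SNpr -> thalamus chain.

    cortex: list of activations, one per channel (e.g., per action or goal).
    Returns (gpi, thalamus, selected_channels).
    """
    # Cortex excites a focussed part of striatum (one unit per channel).
    striatum = [max(0.0, c) for c in cortex]
    # Striatum inhibits the tonically active output nuclei.
    gpi = [max(0.0, tonic_gpi - w_str_gpi * s) for s in striatum]
    # Reduced output activity releases (disinhibits) the thalamic target.
    thalamus = [max(0.0, 1.0 - g) for g in gpi]
    selected = [i for i, t in enumerate(thalamus) if t > gate_threshold]
    return gpi, thalamus, selected


gpi, thalamus, selected = bg_loop([0.9, 0.2, 0.1])
print(selected)  # [0]
```

With no cortical drive every output unit stays at its tonic level and nothing is released; a strongly driven channel silences its output unit and opens its thalamic gate.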

Figure 3. Internal anatomy of a circuit loop through basal ganglia and cortex. GPe: globus-pallidus, external compartment; GPi: globus-pallidus, internal compartment; SNpr: substantia nigra pars reticulata; STN: sub-thalamic nucleus.

There is now wide agreement that the functional role of the different BG loops is determined by the contents of the cortical regions they target (Alexander et al., 1986; Romanelli et al., 2005; Yin and Knowlton, 2006). In this respect, the literature often focusses on three main striato-cortical loops also relevant for our hypothesis (Figure 4); note that throughout the paper we use “striato-cortical loop” as short form for the more complete “striato-pallidal/nigral-thalamo-cortical-striatal loop.” The first, sensorimotor loop, involving DLS, premotor cortex (PMC), and primary motor cortex (M1), is involved in the selection of motor actions based on sensory and motor information. Functionally, this loop plays a key role in the acquisition and expression of habitual instrumental behavior (i.e., the S-R association of Figure 1; Packard and Knowlton, 2002; Featherstone and McDonald, 2004; Yin et al., 2004).

Figure 4. The three main striato-cortical loops involving different territories of BG and cortical areas. Reprinted by permission from Macmillan Publishers Ltd: Nature Reviews Neuroscience (Yin and Knowlton, 2006), copyright 2006.

The second, associative loop involves the DMS (in rats; homologous to caudate in primates) and various associative cortical areas, e.g., inferotemporal cortex (ITC; Middleton and Strick, 1996), parietal cortex (PC; Cheatwood et al., 2003), and also some regions of PFC such as PL and the frontal eye fields (FEF; Room et al., 1985; Alexander et al., 1986). Functionally, this loop is involved in orientation, attention, affordance processing, and working memory, all functions related to the cortical regions involved in this loop (Burnod et al., 1999; Hikosaka et al., 2000; O'Reilly and Frank, 2006; Cisek, 2007). Given this role, the loop has been implicated in learning and storing the relations between actions and outcomes (Cheatwood et al., 2003; Yin et al., 2005; Yin and Knowlton, 2006).

In contrast to the other two loops, the function of the limbic loop through BG is less clear. In rats, this loop involves the NAcc (ventral striatum) and various associative multimodal cortices, in particular the agranular insular cortex (AIC), PL, and infralimbic cortex (IL). In primates the loop also involves OFC and anterior cingulate cortex (ACC). The loop is involved in the highest cognitive processes related to goal-directed behavior and general executive function (Dalley et al., 2004; Hok et al., 2005; Ragozzino, 2007).

Biobehavioral research has produced a wide range of data based on lesion experiments, reversible inactivations, or dopaminergic depletions targeting the NAcc in behaving animals. These data indicate that NAcc is first implicated in the triggering of several low-level, fixed motor behaviors and Pavlovian conditioned responses. In particular the NAccCo is part of a network encompassing the central nucleus of Amg (CeA) and the ventral tegmental area (VTA). The VTA is one of the two major sources of DA, the other being the substantia nigra pars compacta (SNpc). SNpc sends DA mainly to DMS and DLS, while VTA sends DA mainly to NAcc, PFC, Amg, and Hip. The Amg-NAccCo-VTA network is the neural substrate of autoshaping, the process through which animals can be conditioned to perform various innate behaviors, such as approaching, directed to stimuli predicting rewards (Parkinson et al., 2000a,b; Cardinal et al., 2002b; see also Mannella et al., 2009, for a computational model). NAcc has also been shown to be the root of two effects of Pavlovian associations on instrumental behaviors, namely instrumental devaluation, described in section 2.1, and Pavlovian-Instrumental Transfer (PIT). In the latter, a conditioned stimulus, which has been previously associated with a reward through a Pavlovian procedure, can facilitate an increase in the execution of a previously acquired instrumental action directed to the same or to a different reward (“specific” or “general” PIT, respectively; Corbit and Balleine, 2005, 2011). The NAcc also underlies the role of DA in incentive salience; that is, the motivation to pursue rewards and sustain efforts to accomplish them (Salamone et al., 2003; Niv et al., 2007), processes related to “wanting” (Wyvell and Berridge, 2000; Peciña et al., 2006a). NAcc also plays a role in the hedonic perception of taste/rewarding stimuli (“liking”) measured in terms of specific overt behavioral manifestations (Peciña et al., 2006b).

Overall, this evidence suggests that the limbic loop plays a key role in the Pavlovian prediction of, and attribution of value to, environmental outcomes. Notwithstanding the large amount of evidence available on these processes, we still lack a specific proposal on how different types of values are processed and transmitted to goals to support their selection. Our hypothesis helps to clarify these aspects.

2.3. Computational Approaches to Goal-Directed Behaviors

In the last decade, the theoretical understanding of habitual and goal-directed behavior has received a tremendous impetus from machine learning theories on reinforcement learning (RL), founded on dynamic programming and optimal control approaches (Bertsekas, 1987; Sutton and Barto, 1998). Since their inception (e.g., Sutton and Barto, 1987), reinforcement learning methods have strongly cross-fertilized with the bio-behavioral research on instrumental and Pavlovian learning (Houk et al., 1995b), and recently they have become the main theoretical framework to investigate decision making processes (e.g., see Montague et al., 2004; Glimcher et al., 2010). Moreover, reinforcement learning algorithms, in particular those going under the banner of temporal difference (TD) learning (Sutton and Barto, 1998), have become the main modeling tool to understand the dynamics of activation of dopaminergic neurons during conditioning experiments (Schultz et al., 1997). These models have also led to an intense effort to identify the specific neural correspondents of the various components of reinforcement learning algorithms (Houk et al., 1995a; Joel et al., 2002; Botvinick et al., 2008). These computational accounts have given key insights into goal-directed learning and behavior and represent touchstones against which we should compare the implications of our hypothesis, so we now review these models in some detail. On this basis, we will argue that our hypothesis has ramifications which go beyond the understanding given by current theoretical models.

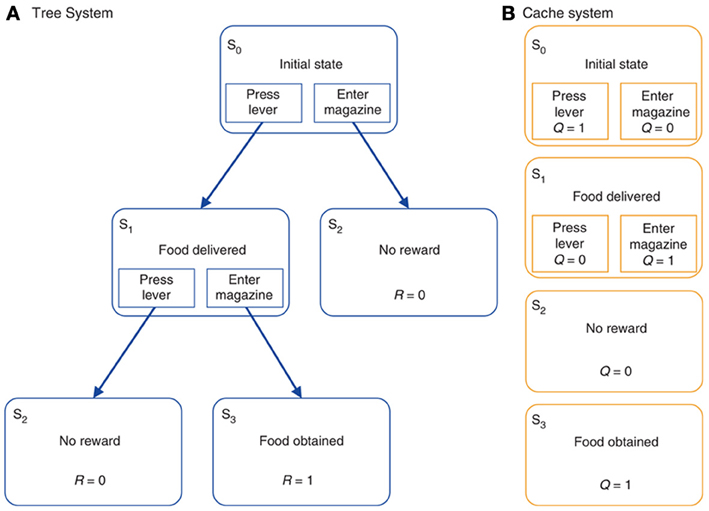

The model of Daw et al. (2005) (Figure 5) is a useful vehicle to illustrate the links between RL and biology as it also supplies formal definitions of goal-directed and habitual behavior, and thereby explains the relative strengths of these two modes of behavior in different conditions. The starting point of their analysis is the important distinction between model free reinforcement learning (MFRL) and model-based reinforcement learning (MBRL). MFRL methods, such as the actor-critic model (Sutton and Barto, 1987, 1998), are based on the storing of state values, V(s), the value assigned to state s, and the policy π(a, s) encoding the probability of executing action a given the state s. Other MFRL methods, such as Q-learning (Watkins and Dayan, 1992), use the value of state-action pairs, Q(s, a), rather than simply states alone. In both cases, “value” is formally defined as the expected sum of future discounted rewards. Value then informs how to generate the action policy either directly, as in the case of Q-learning, or indirectly, as in actor-critic methods. Estimates of the values (V or Q) are updated by the agent acting in the environment on the basis of the experienced reinforcement. MFRL models have been extensively used to capture the processes of acquisition and expression of habit-based behavior (e.g., see Joel et al., 2002; Botvinick et al., 2008).
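As a concrete illustration of the cached values used by MFRL, the following minimal sketch implements tabular Q-learning on a hypothetical three-state chain. The environment, the function names, and the parameter values (`alpha`, `gamma`, `epsilon`) are assumptions chosen for illustration; they are not taken from Daw et al. (2005) or from any specific model of habit learning.

```python
import random

# Hypothetical deterministic chain: states 0, 1, 2; state 2 is terminal.
# Action 1 ("advance") moves one step to the right; action 0 ("stay") does not move.
# Entering the terminal state yields reward 1.0; every other step yields 0.
N_STATES, N_ACTIONS, TERMINAL = 3, 2, 2


def step(state, action):
    """Return (next_state, reward) for the toy chain (called on non-terminal states)."""
    next_state = min(state + action, TERMINAL)
    reward = 1.0 if next_state == TERMINAL else 0.0
    return next_state, reward


def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.2, seed=0):
    """Learn cached state-action values Q(s, a) from experienced rewards only."""
    rng = random.Random(seed)
    q = [[0.0] * N_ACTIONS for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(50):  # cap on episode length
            if state == TERMINAL:
                break
            # Epsilon-greedy policy derived directly from the cached values.
            if rng.random() < epsilon:
                action = rng.randrange(N_ACTIONS)
            else:
                action = max(range(N_ACTIONS), key=lambda a: q[state][a])
            next_state, reward = step(state, action)
            # Temporal-difference update: move Q(s, a) toward r + gamma * max_a' Q(s', a').
            target = reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


q_table = q_learning()
```

Note that the agent never represents where an action leads: the table stores only how good each action has turned out to be, which is why such cached values cannot be revised without new experience.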

Figure 5. A typical reinforcement learning task under either (A) goal-directed behavior (“model-based” reinforcement learning) or (B) habitual behavior (“model-free” or “cached” reinforcement learning). Reprinted by permission from Macmillan Publishers Ltd: Nature Neuroscience (Daw et al., 2005), copyright 2005.

In MBRL, the agent learns a forward model of the dynamics of the environment, formally captured by a transition function T(s', a, s) encoding the probability of visiting a new state s' when the action a is performed in state s. The system may additionally model the state-reward contingencies captured by a reward function R(s) encoding the reward obtained in a given state s. In contrast, no such explicit knowledge of the world is available to the agent in MFRL models. The internal representation of a model of the environment allows MBRL agents to perform more powerful computations than their MFRL counterparts. First, using the model, the agent can to some degree evaluate actions internally, thereby making learning more efficient. Second, the transition function T is task independent, as it describes the general dynamics of the environment, and stable if the environment is “stationary” (does not change). It may therefore be used to solve multiple tasks captured by different reward functions. For example, if the agent is informed of a change in the structure of rewards, it can recompute the values and the policy “on the fly” without needing to re-sample the environment.
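The re-planning property just described can be sketched with value iteration over an explicit model. The two-outcome world below, the state and action names, and the reward values are hypothetical; the point is only that changing the reward function R changes the policy without any further interaction with the environment, because the transition function T is reused as is.

```python
# Transition function, stored as T[s][a] -> {s': probability}.
# "food" and "water" are terminal states (no actions available).
T = {
    "start": {"left": {"food": 1.0}, "right": {"water": 1.0}},
    "food": {},
    "water": {},
}


def q_value(T, R, V, s, a, gamma):
    """Expected reward of the next state plus discounted value of what follows."""
    return sum(p * (R[s2] + gamma * V[s2]) for s2, p in T[s][a].items())


def plan(T, R, gamma=0.9, sweeps=50):
    """Value iteration: derive V(s) and a greedy policy from T and R alone."""
    V = {s: 0.0 for s in T}
    for _ in range(sweeps):
        for s in T:
            if T[s]:  # skip terminal states
                V[s] = max(q_value(T, R, V, s, a, gamma) for a in T[s])
    policy = {
        s: max(T[s], key=lambda a: q_value(T, R, V, s, a, gamma))
        for s in T
        if T[s]
    }
    return V, policy


# Reward function while hungry, and after food has been devalued (assumed values).
R_hungry = {"start": 0.0, "food": 1.0, "water": 0.5}
R_sated = dict(R_hungry, food=0.0)

_, policy_hungry = plan(T, R_hungry)  # the agent heads for food
_, policy_sated = plan(T, R_sated)    # same T, new R: the agent heads for water
```

The second call to `plan` uses no new samples from the environment, which is the sense in which the model-based agent adapts immediately to a change in reward structure.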

This flexibility of MBRL has, however, some costs: due to its computational complexity, MBRL does not scale up to state/action space domains as large as those that can be dealt with by MFRL. This complexity of MBRL arises from the memory needed to store the transition and reward functions, and the time needed to generate behavior by searching the internal model. In contrast, MFRL methods directly “cache” information on policies and so they readily indicate the actions to perform.

The processes of MBRL are proposed to be at the core of goal-directed behavior. In particular, the acquisition of the transition function is analogous to the learning of action-outcome associations postulated in contingency degradation experiments. Moreover, the capacity to reformulate the policy on the basis of internal simulation of the possible consequences of actions when the state-values are updated is analogous to the processes taking place in devaluation experiments, where the change of the value assigned to states (goals) is immediately reflected in different overt behaviors.

Daw et al. (2005) also indicate possible brain systems corresponding to MFRL and MBRL. In particular, the neural substrate of MFRL is the network centered on DLS. This is consistent with experiments showing that lesions of the DLS, or its dopaminergic afferents, prevent animals from becoming habitual even after over-training (Yin et al., 2004; Faure et al., 2005). In contrast, the neural substrate of MBRL is suggested to comprise various cortical (PL, OFC) and sub-cortical regions (DMS, BLA) important for devaluation effects (see section 2.2).

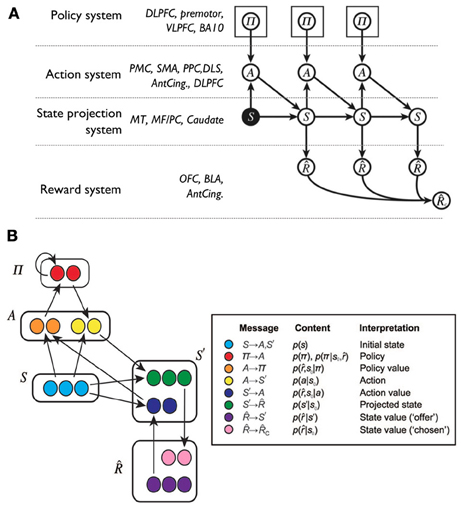

Recently, Solway and Botvinick (2012) have proposed to capture goal-directed behavior processes with probabilistic representations and Bayesian inference. In this way, different processes underlying goal-directed behavior can be isolated as terms in probabilistic expressions and then linked to brain systems implementing analogous functions. In their proposal:

$$p(\pi \mid s, r) \propto p(\pi) \sum_{a} p(a \mid s, \pi) \sum_{s'} p(s' \mid s, a)\, p(r \mid s') \qquad (1)$$

where r and s' are respectively future rewards and states, p(π|s, r) is the posterior probability over the policy given the current state and the rewards, and p(π) is the prior probability of the policy; other symbols have been defined earlier.

The terms in Equation 1 were instantiated in the components of a connectionist model (Figure 6) linked to possible corresponding brain areas. Thus, the prior on the policy p(π) is related to the activity of dorsolateral PFC (dlPFC), the policy function p(a|s, π) to motor cortices and DLS, the prediction of states p(s'|s, a) to associative cortex and PFC, and the reward expectation p(r|s') to the activity of the OFC and BLA.
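To make the role of each probabilistic term concrete, the sketch below computes a posterior over two candidate policies by combining a prior p(π), a policy function p(a|s, π), a state prediction p(s'|s, a), and a reward expectation. It is a one-step simplification under stated assumptions: the reward variable is written r and conditioned on r = 1, and all policies, actions, states, and probability values are invented for illustration. It is not the connectionist model of Solway and Botvinick (2012).

```python
# Prior over policies, p(pi).
prior = {"pi_left": 0.5, "pi_right": 0.5}

# Policy function p(a | s, pi) for the single current state s.
p_action = {
    "pi_left": {"left": 0.9, "right": 0.1},
    "pi_right": {"left": 0.1, "right": 0.9},
}

# State prediction p(s' | s, a).
p_next = {
    "left": {"food": 0.8, "empty": 0.2},
    "right": {"food": 0.3, "empty": 0.7},
}

# Reward expectation p(r = 1 | s').
p_reward = {"food": 0.9, "empty": 0.1}


def policy_posterior(prior, p_action, p_next, p_reward):
    """Posterior p(pi | s, r = 1), obtained by summing over actions and next states."""
    unnormalized = {}
    for pi, p_pi in prior.items():
        likelihood = sum(
            p_a * sum(p_s2 * p_reward[s2] for s2, p_s2 in p_next[a].items())
            for a, p_a in p_action[pi].items()
        )
        unnormalized[pi] = p_pi * likelihood
    z = sum(unnormalized.values())
    return {pi: value / z for pi, value in unnormalized.items()}


posterior = policy_posterior(prior, p_action, p_next, p_reward)
```

With these numbers the policy favoring the "left" action receives the larger posterior, because that action more often leads to the state in which reward is expected.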

Figure 6. A Bayesian interpretation of goal-directed learning proposed by Solway and Botvinick (2012). (A) Graphical model supporting the probabilistic factorization of a model-based reinforcement learning problem, and hence of goal-directed behavior, with a list of possible biological correspondents. ACC, anterior cingulate cortex; BA, Brodmann area; BLA, basolateral amygdala; dlPFC, dorsolateral prefrontal cortex; DLS, dorsolateral striatum; MF/PC, medial frontal/parietal cortex; MT, medial temporal cortex; PFC, prefrontal cortex; PC, parietal cortex; PMC, premotor cortex; SMA, supplementary motor area; vlPFC, ventrolateral prefrontal cortex. (B) A possible neural implementation of the functional architecture: based on (A) the reader might attempt to link neural areas to the components of the architecture. Adapted and reprinted with permission (Solway and Botvinick, 2012).

The architecture of Solway and Botvinick (2012) offers a principled overall view of goal-directed behavior but does not account for a key element which is at the heart of our hypothesis, namely the proposal for a key role of the NAcc in the selection of goals within PFC on the basis of information on value computed in the limbic brain.

The role of NAcc in goal-directed behavior is also the subject of other computationally-oriented accounts of goal-directed behavior, all referring directly or indirectly to the reinforcement learning framework (Bornstein and Daw, 2011; Penner and Mizumori, 2011; Khamassi and Humphries, 2012). For instance, Penner and Mizumori (2011) (Figure 7) invoke a dual actor/critic framework in which DLS and NAccCo are respectively the actor and the critic of an MFRL system, while the DMS and the NAccSh are the actor and the critic of an MBRL system.

Figure 7. The proposal of Penner and Mizumori (2011) for the possible functions of nucleus accumbens core and shell, and their relation to downstream striatal regions. Notice the role of stimulus-outcome predictor ascribed to the accumbens. Reprinted from Penner and Mizumori (2011), Copyright 2011, with permission from Elsevier.

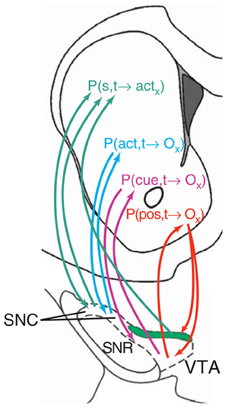

In contrast, Pennartz et al. (2011) suggest that the actor-critic schema is not the best interpretation of NAcc function (see Figure 8). In their view, different striatal regions compute predictions on outcomes (or actions) based on different types of information. Thus, NAccSh predicts outcomes on the basis of spatial features (e.g., position in space of a certain food resource in a navigation task). Instead, NAccCo predicts outcomes based on specific cues (e.g., visible landmarks). The DMS predicts outcomes based on actions (e.g., the effects of turning right). Finally, the DLS “predicts” the motor actions, considered as lower-level abstractions of outcomes.

Figure 8. A hypothesis of ventral striatum as the locus of various types of action-outcome anticipations. Reprinted from Pennartz et al. (2011), Copyright 2011, with permission from Elsevier.

The computationally grounded ideas described above clearly represent a major contribution to our understanding of goal-directed behavior. However, they either overlook the critical aspect of how goal selection is linked to value, or they diverge in the way they account for it, so highlighting the need for further clarifications of this issue grounded on available empirical evidence.

3. The Ventral Striato-Cortical Loop and Goal Selection

3.1. A System-Level Evolutionary Framework for the Hypothesis

This section proposes a framework within which we develop our hypothesis on how the NAcc assigns motivational value to goals and thereby participates in their selection. Such a hypothesis is then fully expanded in the remaining sections. We posit that the system directed to accomplish useful outcomes in higher mammals results from an evolutionary trajectory involving three successive “versions” of it having an increasing computational sophistication and power. The additional components of the more recent versions do not replace those of their predecessors, rather they work with them to produce augmented functionality.

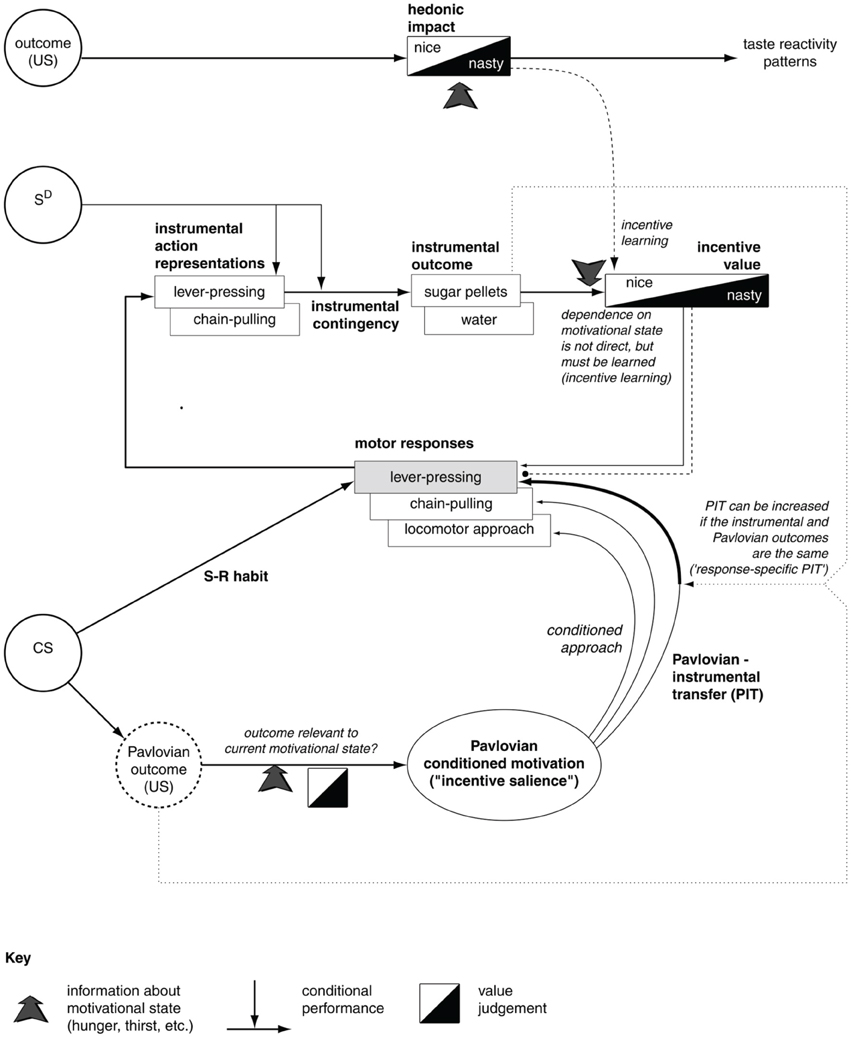

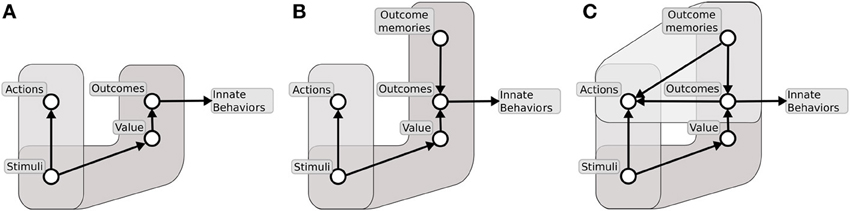

The evolutionarily first system (Figure 9A) is formed by two major components: (1) a component capable of learning instrumental habitual behaviors by trial-and-error; (2) a second one capable of forming Pavlovian stimulus-outcome associations. The neural substrate of the first component is mainly a sensorimotor system involving BG motor regions. The substrate of the second component is mainly formed by a network composed of amygdala, ventral BG and other sub-cortical structures (e.g., the hypothalamus and the periaqueductal gray) capable of expressing behaviors which are innate, or the result of early development, triggered by Pavlovian processes (Davis and Whalen, 2001; Medina et al., 2002; Balleine and Killcross, 2006; Mirolli et al., 2010). The system under discussion is common to all vertebrates (including fish, amphibians, and reptiles), and serves the acquisition of simple behaviors through trial-and-error, the triggering of innate behaviors such as feeding, approaching, avoidance, and orienting, and the implementation of simple Pavlovian processes such as those studied in delay conditioning paradigms. None of these behaviors requires the maintenance of lengthy memory traces between the conditioned and the unconditioned stimuli (Davidson and Richardson, 1970). Within this system, the ventral regions of striatum mainly support the energization and expression of innate behaviors via their connections to lower motor centers.

Figure 9. The three major systems for learning to select desired outcomes forming an evolutionary lineage used here as a background for our hypothesis. (A) First system formed by instrumental stimulus-response behaviors and simple Pavlovian processes. (B) Second system formed by instrumental stimulus-response behaviors and sophisticated Pavlovian processes supported by dynamical neural processes capable of sustained active representations of outcomes. (C) Third system formed by instrumental stimulus-response behaviors, sophisticated Pavlovian processes, and further structures allowing outcome representations to recall actions.

The second system to emerge (Figure 9B) builds on the first system, and develops the second component (2) to make it capable of generating more complex Pavlovian stimulus-outcome associations. A major contribution to this empowerment, pivoting on a fully evolved hippocampus, is the implementation of dynamical circuits capable of storing information on stimuli experienced in the recent past. This supports Pavlovian processes taking place in the Amg, and thereby allows the solution of more challenging tasks, such as those involved in trace conditioning paradigms. This enhanced Pavlovian system is possessed by more evolved vertebrates, e.g., birds, (Lucas et al., 1981), and allows them to complete more complex tasks where incentive value has to be transferred between temporally distal stimuli (Richmond and Colombo, 2002). This allows them to form conditioned (“secondary”) reinforcers quite distant from actual rewards and capable of driving the acquisition of sophisticated habit-like behaviors. In the new enhanced system, the ventral striatum continues to mainly play a role of energization of action and triggering of innate behaviors, functions still present in mammals and the third system that we now consider (Cardinal et al., 2002b; Gruber and McDonald, 2012).

The third, and evolutionarily most recent, system (Figure 9C) uses the component (1) of its predecessors, has an enhanced component (2), and acquires a third component (3). These enhancements pivot on a fully evolved neo-cortex. The enhancement of component (2) relies on cortical areas such as the AIC and the OFC, dealing with olfaction and taste, and on prefrontal-hippocampal re-entrant connections. These give the component a further enhanced capacity to represent outcomes for longer times than Amg alone (Schoenbaum et al., 1998). The third component (3), fully developed in mammals and in particular in primates, is supported by re-entrant circuits involving ventral BG, medial and dorsal prefrontal cortex, and hippocampus, resulting in a powerful working memory capable of representing experienced stimuli for prolonged periods of time (up to a few seconds) (Rolls, 2000a; Euston et al., 2012). The component allows the formation of associations between multiple stimuli in time, and in particular the anticipation of future stimuli and outcomes on the basis of current experience (Funahashi, 2001; Dalley et al., 2004; Matsumoto and Tanaka, 2004). The overall system has the organization, and implements the functions, analyzed in detail in the following sections.

3.2. Outline of the Hypothesis

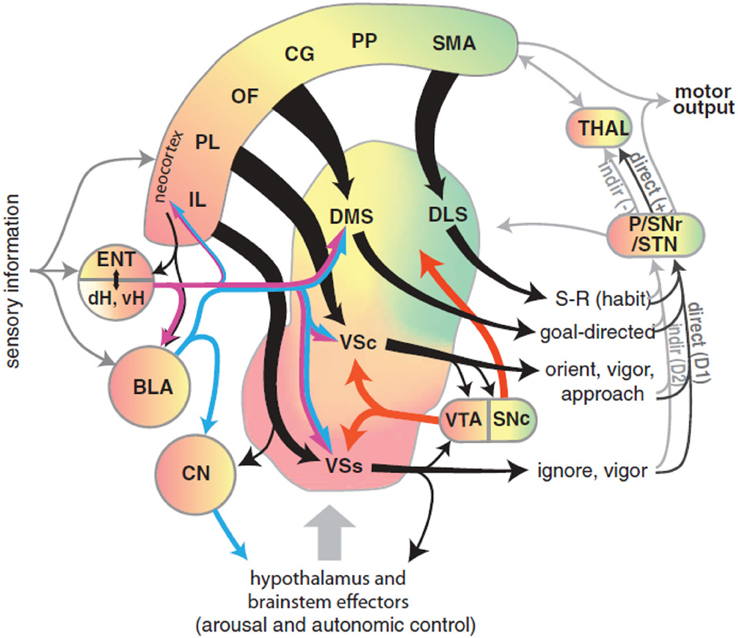

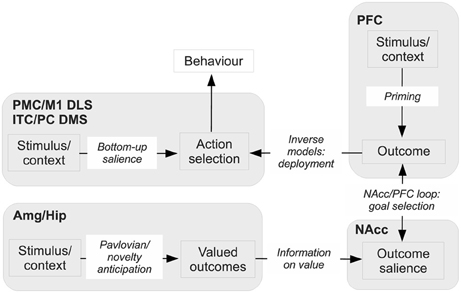

This sub-section outlines the core hypothesis proposed in the paper. The main features of the hypothesis are shown in Figure 10. We will continue to refer back to this figure throughout the rest of the paper as more detail is included in our scheme. The next four sub-sections expand the biological and behavioral evidence supporting the hypothesis in relation to the main brain systems involved (Amg, Hip, NAcc, and PFC).

Figure 10. Sketch of the main functional elements of the hypothesis, with their possible biological correspondents. Amg, amygdala; DLS, dorsolateral striatum; DMS, dorsomedial striatum; Hip, hippocampus; ITC, inferotemporal cortex; M1, primary motor cortex; NAcc, nucleus accumbens; PC, parietal cortex; PFC, prefrontal cortex; PMC, premotor cortex.

3.2.1. Amygdala

The Amg encodes unconditioned stimuli (US); that is, appetitive and aversive primary rewarding stimuli. Appetitive stimuli include, for example, food (e.g., its smell and taste), while aversive stimuli comprise objects (e.g., predators) causing body damage and pain. The Amg is one of the limbic brain systems interfacing other brain areas to the homeostatic regulatory systems of the body. On this basis the Amg can modulate the activation of its representation of primary appetitive/aversive stimuli depending on the internal state of the animal. For example, if the animal ingests a food, its representation within the Amg can have different activities, hence value, depending on the level of hunger for such food. The activation of USs in Amg can trigger a large number of unconditioned responses (e.g., startle, approach, avoidance), and participates in a number of internal regulatory functions of the body (e.g., heart-rate, salivation) and the brain (via the main neuromodulatory systems). These reactions are made possible by its diffuse projections to multiple subcortical areas and to the NAcc.

The Amg, in particular CeA, implements core Pavlovian processes through which it links representations of CS to innate, unconditioned responses (URs). Moreover, the BLA can associate the representations of CSs to those of USs. This powerful mechanism allows it to associate, in “one shot,” previously neutral stimuli with all the URs associated with any US following the CS. Importantly, this implies that, since the responses are mediated by the CS-US-UR causal chain, the BLA can also regulate the responses on the basis of the current internal value assigned to the US (see above).

3.2.2. Hippocampus

Hip is traditionally thought to play a key role in rapid formation of episodic memories and spatial maps for navigation. These memory-based processes rest on the important capacity of the Hip system to detect the novelty of stimuli, of stimuli associations, and of stimuli-context associations. Upon detection of novelty, the Hip is able to activate dopaminergic systems via its projections to NAcc, thereby supporting learning in structures targeted by DA including the Hip itself. This capacity to detect novelty also plays a second function, fundamental to our hypothesis on the attribution of value to goals: information on novelty supplied by Hip to NAcc can also be used to select goals. Indeed, aside from the appetitive/aversive value communicated to NAcc by the Amg, the novelty of a stimulus represents a fundamental component of the motivational value associated with it. This is because novelty has a pivotal adaptive valence: novel objects, associations, and contexts might have potential appetitive/aversive valence initially unknown to the animal, and this can be discovered only by targeting them with the needed attentional, exploratory, and learning resources.

3.2.3. Nucleus accumbens

The NAcc is a nexus for combining stimulus value computed in the Amg and Hip, and for implementing the process of selection of outcomes in synergy with PFC. Thus, NAcc receives information from Amg and Hip which represents the appetitive, aversive, or novelty value of outcomes. At the same time, based on external stimuli, working memory, and internal plans, PFC partially activates or primes its internal representations of attainable outcomes offered by the environment. PFC is part of the BG loop with NAcc, and this loop can mediate the selection of PFC outcome representations in the normal way via disinhibition of thalamo-cortical targets. The key, additional mechanism considered here is that NAcc uses the information on value from Amg and Hip to strongly bias the selection of specific goals among the multiple, partially activated outcomes encoded in PFC. The fact that NAcc activity is also based on value implies that goals with high value are selected.

The process of selection of goals in PFC is supported in two ways via the two main sub-components of NAcc, namely NAccCo and NAccSh. The NAccCo has the typical structure of the striato-pallidal-thalamo-cortical pathways; it is therefore NAccCo which mediates goal selection in PFC using the “canonical” basal-ganglia selection process described above. Instead, NAccSh contributes to the selection of goals in a different but complementary way, relying on the excitation of DA neurons. Both NAccCo and NAccSh project directly and indirectly to midbrain DA systems (respectively to SNpc and VTA) but the details of the circuits are different. In this respect, we will show that NAccSh likely acts in goal selection by exciting DA neurons, whose output in turn acts at NAccSh, PFC, and other targets including Hip and Amg. This dopaminergic action facilitates selection, so causing a rapid switching between candidate goals (thereby promoting exploration of different goals when the animal learns to solve new problems), allows the selection of multiple goals (e.g., goals and sub-goals forming whole behavioral programmes), and facilitates the summation of value from different sources (e.g., related to appetitive/novelty value and to multiple cues and stimuli as in PIT).

3.2.4. Prefrontal cortex

As mentioned above, PFC forms a striato-cortical loop with NAcc. It is possible to distinguish three sub-systems within this loop, each performing a different function related to the anticipation of action-outcomes and the encoding of goals. The first sub-system, based on NAcc/AIC connections (in rats; in primates, also NAcc/OFC connections), contributes to selecting “ultimate” (distal) biologically-valuable outcomes, for example “food ingestion.” These goals are encoded in AIC and OFC in terms of their features most closely related to their appetitive aspects, in particular odor and taste. The second sub-system, based on NAccCo-PL connections, contributes to selecting outcomes based on their more cognitive aspects, such as their visual and auditory aspects. This system might be particularly important for encoding goals based on novelty. In primates, this system is also corroborated by the connections between PL and dlPFC, encoding not only ultimate goals but also proximal/sub-goals instrumental for the achievement of ultimate goals and initially not characterized by an intrinsic biological valence. The third sub-system, mainly based on NAccSh-IL connections (also NAccCo-IL in primates), plays the role of avoiding the selection of Pavlovian and instrumental behaviors which are either no longer useful or even detrimental. The PFC also exchanges multiple direct and indirect connections with motor areas and modal sensory associative areas (e.g., the ITC and the PC) and uses these connections to implement sophisticated forward and inverse models that allow it to trigger the execution of suitable actions directed to pursue the selected goals.

3.2.5. The functioning of the whole system

We now present an example of how the whole system works, referring to Figure 10. This example gives a first intuition of how the whole hypothesis works, while several aspects of the functioning of the various components, and the empirical evidence supporting them, are explained in detail in the following sub-sections. In the example, Amg uses the current perceived state of the world, or “input stimulus” (e.g., the sight of a lever), to activate a US associated with it (e.g., the valuable aspects of food, such as its taste and odor). We also imagine that the outcome has some novel aspects (e.g., imagine a food cooked in a novel fashion): this implies that its representation is strongly active in Hip and this contributes to increasing its value. In prefrontal cortex (PFC) the same input stimulus (lever) primes a perceptually more sophisticated representation of the food outcome (e.g., not only taste and odor, but also sound/visual appearance of the food). Information on possible outcomes (PFC), on their appetitive/aversive value (Amg), and on their novelty (Hip), is integrated in NAcc where it forms their current saliency. By “saliency” we mean the overall activation of an internal representation, based on different sources of information encoding the current biological relevance of the represented item for the organism.

The entire process is supported by DA, released through the NAcc-VTA/SNpc pathways and reaching the various components of the system. Based on saliency, the NAcc-PFC loop selects the outcome in PFC having the highest saliency, so designating the goal that will drive action selection. In parallel, the input stimulus also primes the bottom-up activation of different actions within the DLS-PMC/M1 loop, but none gets enough activation to be triggered (e.g., assuming that habits are still not fully formed). However, the goal now selected in PFC produces a top-down bias on the DLS-PMC/M1 loop that leads to the selection and performance of the action that allows its accomplishment.
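The flow of information in this example can be summarized with a deliberately simple sketch. Everything in it is an assumption made for illustration: the additive combination rule, the `da_gain` parameter standing in for dopaminergic facilitation, the numerical activations, and the winner-take-all selection. It is a toy rendering of the verbal account above, not a neural implementation of the hypothesis.

```python
def select_goal(pfc_priming, amg_value, hip_novelty, da_gain=1.0):
    """Combine PFC priming with Amg value and Hip novelty into a saliency per outcome.

    Only outcomes primed in PFC are candidates; the most salient one becomes the goal.
    """
    saliency = {
        outcome: priming
        + da_gain * (amg_value.get(outcome, 0.0) + hip_novelty.get(outcome, 0.0))
        for outcome, priming in pfc_priming.items()
    }
    goal = max(saliency, key=saliency.get)
    return goal, saliency


def select_action(habit_priming, action_outcome, goal, bias=0.4, threshold=0.5):
    """Add a top-down bias to the action that achieves the goal; trigger it if above threshold."""
    activation = {
        action: priming
        + (bias if goal is not None and action_outcome.get(action) == goal else 0.0)
        for action, priming in habit_priming.items()
    }
    best = max(activation, key=activation.get)
    return best if activation[best] > threshold else None


# The lever primes two attainable outcomes equally in PFC (hypothetical numbers).
pfc_priming = {"food": 0.4, "water": 0.4}
amg_value = {"food": 0.5, "water": 0.2}   # appetitive value given the internal state
hip_novelty = {"food": 0.3}               # the food is prepared in a novel way

# Bottom-up priming of actions is too weak to trigger any of them on its own.
habit_priming = {"press_lever": 0.3, "pull_chain": 0.3}
action_outcome = {"press_lever": "food", "pull_chain": "water"}

goal, saliency = select_goal(pfc_priming, amg_value, hip_novelty)
action = select_action(habit_priming, action_outcome, goal)
```

Setting the Amg value and the novelty of food to zero, as after satiation and familiarization, makes water the selected goal and changes the triggered action accordingly, while passing `goal=None` leaves every action below threshold.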

3.3. The Amygdala: Appetitive Motivations

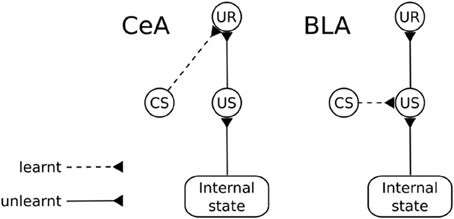

The amygdala (Amg) is formed by a group of nuclei acting as a central hub for the processing of appetitive and aversive motivational information. An important function of Amg is to trigger unconditioned behavioral responses (UR; e.g., orienting, startle, approaching) and to regulate a number of bodily processes (e.g., blood pressure, heart rate, salivation), following the perception of unconditioned stimuli (Davis and Whalen, 2001). These “primitive” responses are triggered via projections to sub-cortical structures (Behbehani, 1995; Bandler et al., 2000; Davis and Whalen, 2001; Balleine and Killcross, 2006). An important aspect of these responses is that Amg is capable of regulating their triggering “on the fly” based on the current state of the body (Hatfield et al., 1996). For example, the reactions of approach and salivation in response to a foodstuff might be inhibited if the animal has been previously satiated by that foodstuff. Amg also plays a key role in Pavlovian processes (Medina et al., 2002; Balleine and Killcross, 2006). When an animal experiences a neutral stimulus in a stable temporal relation with an “unconditioned stimulus” (US; i.e., an unlearned motivationally salient stimulus), Amg is capable of forming Pavlovian associations between them so that the neutral stimulus becomes a conditioned stimulus (CS). Such Pavlovian associations can be stimulus-specific or response-specific (Balleine and Killcross, 2006). Two different groups of nuclei within the Amg, respectively the BLA and the CeA, are responsible for the two kinds of associations (Figure 11).

Figure 11. Functional differences between the basolateral amygdala (BLA) and the central nucleus of amygdala (CeA). CS, conditioned stimulus; US, unconditioned stimulus; UR, unconditioned response.

Lesion and inactivation studies of the BLA reveal that the CeA supports Pavlovian conditioning in a “US-dissociated” response-specific way, i.e., it fails to produce the behaviors typical of devaluation experiments. In particular, after BLA lesion (revealing the functioning of CeA in isolation), the animal learns to associate the CS with the same unconditioned responses (UR) that were associated with the US irrespective of the current value of the US. For example, a rat responds to a light consistently associated to food even if the animal has been satiated for that food (Hatfield et al., 1996).

In contrast, the BLA can associate a UR with the US representation so that the Pavlovian response associated with a CS remains tied to the representation of the US that caused the association (Hatfield et al., 1996). This process is based on the formation of links between the neural representations of the CS and US so that the presence of the CS recalls the internal representation of the US, including its current motivational value. Thus, changes in the reward value of the US result in changes in the ability of the CS to recall the Pavlovian responses (Balleine and Killcross, 2006; Mirolli et al., 2010). The BLA, which can be considered an evolutionarily later, more sophisticated addition to the Amg complex, can exert important control functions on the activity of the CeA. In particular, it can affect its activation based on the current motivational value of stimuli in conditions where CeA alone would be insensitive to this, for example in the case mentioned above related to the responses to a CS linked to a devalued outcome (Balleine and Killcross, 2006; Mirolli et al., 2010).
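The functional contrast between the two pathways can be caricatured in a few lines. The sketch assumes scalar association weights and a scalar US value between 0 and 1; the function names and numbers are hypothetical, and the multiplicative rule is only one simple way to express that a BLA-mediated response passes through the current value of the US, whereas a CeA-mediated response does not.

```python
def cea_response(cs_present, cs_ur_weight):
    """Direct CS -> UR association: response strength ignores the current US value."""
    return cs_ur_weight if cs_present else 0.0


def bla_response(cs_present, cs_us_weight, us_value):
    """CS -> US -> UR chain: the recalled US contributes its current motivational value."""
    return cs_us_weight * us_value if cs_present else 0.0


# Hypothetical values: the light (CS) has been strongly associated with food (US).
weight = 0.8
value_hungry, value_sated = 1.0, 0.0

cea_before = cea_response(True, weight)                 # response while hungry
cea_after = cea_response(True, weight)                  # unchanged after satiation
bla_before = bla_response(True, weight, value_hungry)   # response while hungry
bla_after = bla_response(True, weight, value_sated)     # abolished after satiation
```

In this toy setting only the BLA-mediated response is sensitive to devaluation, mirroring the behavioral dissociation described above.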

Important for our hypothesis, CeA influences NAcc through the modulation of VTA dopaminergic neurons (Fudge and Haber, 2000; Fudge and Emiliano, 2003). Both Pavlovian autoshaping and general Pavlovian instrumental transfer depend on CeA, NAcc, and VTA (see section 2.2 and Corbit et al., 2007). Interestingly, the influence of the CeA over the NAcc results in US-dissociated effects as described above (Mannella et al., 2009).

The BLA sends to the NAcc one of the major afferent projection streams received by this area. As noted in section 2.2, the information conveyed through this pathway is necessary for the learning and expression of instrumental devaluation (Balleine et al., 2003). In general, the BLA conveys to NAcc information about USs and about USs predicted by CSs. Important for our goal-selection hypothesis, the level of activation of the representations of USs (i.e., outcomes) in BLA, which is modulated by the internal state of the animal as illustrated above and is communicated to NAcc, encodes the value that Amg assigns to them.

3.4. The Hippocampus: Novelty and the Motivation to Explore

Another major source of projections to NAcc, especially to NAccSh, is the Hip (Voorn et al., 2004; Humphries and Prescott, 2010). The hippocampal complex comprises several areas characterized by distinct neural organization and computational mechanisms (Rolls and Treves, 1998). Among the most prominent are: the entorhinal cortex (EC), which relays information from associative cortical areas (mainly PFC, PC, and ITC) to the dentate gyrus (DG) in Hip, which in turn recodes its input in sparser form (thus enabling orthogonality); the CA3 layer of Hip, which performs fast auto-associative memory encoding based on its multiple re-entrant connections; and the CA1 layer of Hip, which recodes information from the hippocampal system before relaying it, via the subiculum (Sub) and EC, back to the cortical areas projecting to Hip.

There is currently a lively debate on the nature of the information reaching NAcc from Hip which centers on two main theories: one related to the role of Hip for episodic memory and one to its role in spatial cognition and navigation (see Pennartz et al., 2011 for a review).

Hip plays a pivotal function for the fast, possibly one-shot, acquisition of integrative memories of “episodes”—specific, contextualized experiences (Eichenbaum et al., 1999; Eichenbaum et al., 2004; Smith and Mizumori, 2006; Bird and Burgess, 2008). In this respect, space is only one of several dimensions of the information stored by Hip. The stored memories last for hours or days (Rolls and Treves, 1998), and are supported by long term potentiation (Frey and Morris, 1998). The memories so formed are eventually consolidated within most of the cortical mantle with which the Hip shares important re-entrant connections (McClelland et al., 1995).

Given these properties, in particular its capacity to quickly store information about integrated context, Hip also plays a key role in spatial navigation. This is indeed one of the first and most studied functions of Hip (O'Keefe and Nadel, 1978; Mulder et al., 2004; Kumaran and Maguire, 2005). In this respect, evidence shows that Hip can form “spatial maps”, i.e., allocentric representations of space that allow animals to self-localize and navigate in space (O'Keefe and Burgess, 1996). This research has also led to several computational models of how hippocampal projections to NAcc support path integration and spatial planning. The Hip also plays a key role in decision making in goal-directed navigation tasks. For example, it has been shown that, at decision points in a maze, the rat Hip performs “mental simulations” of the possible alternative courses of action and NAcc evaluates the outcomes (Johnson et al., 2007; Johnson and Redish, 2007; see Baldassarre, 2002, and Pezzulo et al., 2013, for some models).

In this paper, we propose a third key function of Hip-NAcc projections, one that is closely related to the role of Hip in episodic memory. Specifically, we hypothesise that Hip-NAcc projections communicate novelty-related value to NAcc. The literature on novelty detection in Hip is large (see below) and much of it considers novelty detection as a process supporting the formation of episodic memories. The new aspect of our hypothesis is that hippocampal novelty detection also serves a second important function: the assignment of value to goals. Our proposal is therefore not at odds with the extensive evidence showing that Hip mediates episodic memory; rather, it adds to this previously proposed function by highlighting the unifying role of novelty detection, which serves both episodic memory and value assignment. To articulate this further, we first review the relevance of the Hip novelty-detection capacity for memory formation, and then expand on how novelty value supports goal selection.

Novelty detection can be seen as a process required for the formation of episodic memory. An animal is continually bombarded by a large amount of sensory information, and so the detection of novelty allows the filtering of stimuli and events that deserve engagement of learning processes. To this purpose, the hippocampal system and surrounding areas (e.g., the perirhinal cortex) are capable of detecting various forms of novelty, from stimulus novelty to associative novelty and contextual novelty: the literature on these topics is now very large (see Ranganath and Rainer, 2003, and Kumaran and Maguire, 2007, for two excellent reviews).

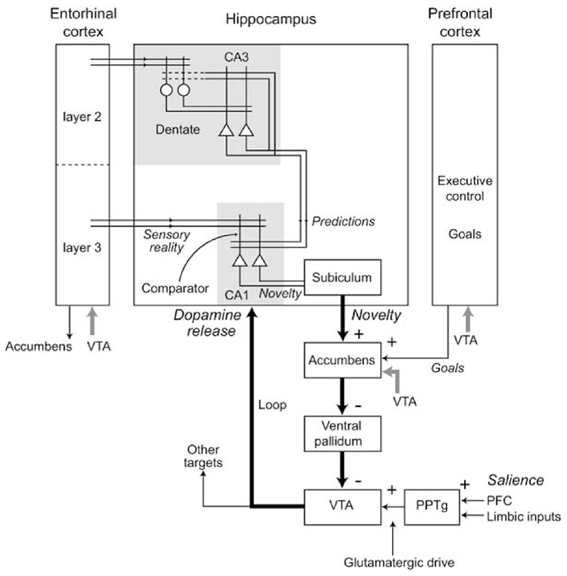

Novelty detection in Hip might be implemented by a process that compares the actual experience with the Hip predictions or memories, detecting the mismatch between them (Hasselmo et al., 1995; Lisman and Otmakhova, 2001; Meeter et al., 2004; Karlsson and Frank, 2008; Van Elzakker et al., 2008). In particular (Figure 12), it has been proposed that CA1, receiving input from both EC and CA3, might compare the memories recalled by CA3 and the “reality” received from EC, and might detect the novelty of stimuli on the basis of the mismatch between them. The novelty of an experienced stimulus/event/context does not decay with a single experience but lasts for the time needed for it to be explored and memorized (i.e., to become “familiar”).
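The comparator idea described above can be illustrated with a minimal sketch. It is not a model taken from the cited literature: the function names, the mean-absolute-difference mismatch measure, and the exposure rate of 0.3 are all assumptions chosen for illustration. The sketch only shows how a mismatch between an EC-like input and a CA3-like recalled pattern could yield a novelty signal that survives a single exposure and fades as the stimulus becomes familiar.

```python
# Toy comparator, assuming novelty = mismatch between input and recalled memory.
# All names and parameter values are hypothetical, chosen only for illustration.

def mismatch_novelty(observed, recalled):
    """Mean absolute difference between the EC-like input and the CA3-like recall."""
    return sum(abs(o - r) for o, r in zip(observed, recalled)) / len(observed)


def expose(observed, memory, rate=0.3):
    """One experience: move the stored pattern a fraction `rate` toward the input."""
    return [m + rate * (o - m) for o, m in zip(observed, memory)]


stimulus = [1.0, 0.0, 1.0, 1.0]  # pattern arriving from EC ("reality")
memory = [0.0, 0.0, 0.0, 0.0]    # what CA3 recalls before any experience

novelty_trace = []
for _ in range(10):
    # CA1-like step: compare recall with reality, then store a bit more of it.
    novelty_trace.append(mismatch_novelty(stimulus, memory))
    memory = expose(stimulus, memory)
```

With these assumed values the novelty signal shrinks by a constant factor at each exposure, so it remains substantial after the first experience and approaches zero only after repeated ones.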

Figure 12. Various components of the hippocampal system underlying novelty detection in Hip, and the consequent production of dopamine via indirect connections to the VTA. Reprinted from Lisman and Grace (2005), Copyright 2005, with permission from Elsevier.

The Hip is also capable of responding to cues which predict novel stimuli—so-called “novelty anticipation” (Wittmann et al., 2007). In this case, stimuli that predict the arrival of novel patterns (e.g., images) activate Hip more strongly and also cause the dopaminergic system to fire, similarly to what happens with the anticipation of appetitive rewards. This process might be important for the assignment of novelty value to cues anticipating novel outcomes.

We now have the information needed for explaining our proposal on the role of Hip in assigning novelty-based value information to goals via NAcc. The idea is that Hip projections to NAcc have an effect that goes well beyond the indirect modulation of it via VTA DA signals. In particular, such projections are fundamental for informing NAcc of stimuli/outcomes/contexts which have a high novelty-based motivational value and this information is used by NAcc to select goals related to them. In this way, novel stimuli/outcomes/contexts become the focus of attention and exploratory activities are directed at interacting with them. This, in turn, facilitates the agent's understanding of the environmental processes producing the novel stimuli and of any role the agent might have in their causation. The adaptive utility of this is that novel objects and contexts have a high biological valence since they represent potentially important threats or opportunities. The biological importance of novelty is clearly shown by the fact that, when set in a novel environment, hungry animals prefer to explore the environment before eating available food, and by the close relation between novelty and fear-related processes (Cavigelli and McClintock, 2003). Brain imaging evidence shows a strong relation between the DA-related processes driving exploration based on novelty and the consequent possible achievement of rewards (Bunzeck et al., 2012). Having selected a goal based on its novelty (via the Hip-NAcc-PFC circuit) the accompanying release of DA in other brain areas (via Hip-NAcc-VTA) promotes the required learning of memories related to it (Lisman et al., 2011) and agent-environment interactions responsible for the novelty.
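As a purely illustrative sketch of this proposal, the example of the hungry animal in a novel environment can be written as a choice between two candidate goals, each carrying an Amg-like appetitive value and a Hip-like novelty value. The additive combination, the weights, and the numerical values are assumptions made here for illustration; they are not part of the hypothesis as stated in the text.

```python
# Toy goal selection, assuming NAcc-like integration is a weighted sum of an
# appetitive value (Amg-like) and a novelty value (Hip-like). The additive rule,
# the weights, and all numbers below are hypothetical.

def integrated_value(amg_value, hip_novelty, w_amg=1.0, w_hip=1.0):
    """Express both sources of value in a single scalar 'common currency'."""
    return w_amg * amg_value + w_hip * hip_novelty


def select_goal(goals):
    """Return the name of the goal with the highest integrated value."""
    return max(goals, key=lambda name: integrated_value(*goals[name]))


# Each goal maps to (appetitive value, novelty value).
goals_novel_env = {"eat food": (0.6, 0.0), "explore environment": (0.0, 0.9)}
goals_familiar_env = {"eat food": (0.6, 0.0), "explore environment": (0.0, 0.1)}

choice_when_novel = select_goal(goals_novel_env)
choice_when_familiar = select_goal(goals_familiar_env)
```

Under these assumed numbers the exploratory goal wins while the environment is novel, and the feeding goal wins once the novelty value has faded.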

The novelty detection process of Hip also strongly interacts with DA production via NAcc. In this respect, Lisman and Grace (2005) have proposed an important theory according to which Hip novelty detection modulates the activity of dopaminergic neurons of VTA via an indirect pathway involving Sub and NAcc (see Figure 12). According to this hypothesis, novelty detection in Hip would activate dopaminergic areas projecting back to Hip (aside from several other cortical and sub-cortical areas), thereby supporting the formation of memories. Although DA projections to Hip are rather sparse (Gasbarri et al., 1997), the DA released in Hip might nevertheless mediate plasticity to support the memorization of novel stimuli (Otmakhova et al., 2013). In accord with the idea of DA influencing the formation of memories, it has been shown that hippocampal input to NAccSh is needed for the expression of the latent inhibition effect (Peterschmitt et al., 2005; Meyer and Louilot, 2011; Quintero et al., 2011). In this effect, Pavlovian conditioning is substantially slower if the CS has previously become familiar to the animal in the absence of any reward (Lubow and Moore, 1959). This is consistent with the idea that a novel CS detected by Hip causes a release of DA by activating VTA via NAcc, and this in turn enhances Pavlovian learning.
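The logic of this account of latent inhibition can be sketched with a simple delta-rule learner whose learning rate is scaled by the current novelty of the CS, standing in for the novelty-driven DA signal. This is a toy illustration under stated assumptions, not the model of Lisman and Grace (2005): the function names, the exponential decay of novelty, and the parameter values are hypothetical.

```python
# Toy latent inhibition, assuming the CS-US association is learned with a
# delta rule whose learning rate is multiplied by the CS's current novelty
# (a stand-in for novelty-driven DA). Names and parameters are hypothetical.

def preexpose(novelty, n_trials, decay=0.8):
    """Unrewarded CS presentations: novelty shrinks by `decay` on each trial."""
    return novelty * decay ** n_trials


def condition(novelty, n_trials, base_rate=0.5, decay=0.8):
    """CS-US pairings: return the associative strength after each trial."""
    strength = 0.0
    history = []
    for _ in range(n_trials):
        # Novelty-gated update toward the asymptote of 1.0.
        strength += base_rate * novelty * (1.0 - strength)
        novelty *= decay  # the CS also becomes more familiar during training
        history.append(strength)
    return history


novel_cs = condition(novelty=1.0, n_trials=5)
familiar_cs = condition(novelty=preexpose(1.0, n_trials=10), n_trials=5)
```

In this sketch the pre-exposed CS enters conditioning with little residual novelty, so its association grows more slowly than that of the novel CS on every trial, which is the qualitative signature of latent inhibition.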

3.5. The Ventral Striatum: An Integrator of Value for Goal Selection

Within the proposed hypothesis, ventral striatum is proposed to act as a nexus for integrating stimulus value from Amg and Hip, and for using it to bias goal selection in PFC. Amg and Hip are also directly connected to PFC. These connections are important for the basic functions explained with respect to the evolutionary perspective presented in section 3.1. We now analyze them, as this also helps clarify the different role played by the connections between those areas when they are mediated by the NAcc.

3.5.1. The function of the direct connections between amygdala/hippocampus and prefrontal cortex

The direct connections between Amg and PFC are first of all a means to enhance Amg-based Pavlovian learning processes via the working memory capabilities of PFC. This might have been an important evolutionary step that strengthened Pavlovian processes (Figure 9B). In particular, Amg, AIC (involved in processing smell and taste) and OFC (also involved in smell and taste processing) operate as an integrated system, with OFC showing patterns of neural activity similar to those of Amg but more robust with respect to time delays (e.g., those involved in trace conditioning experiments; Runyan et al., 2004) and complex situations (e.g., those involving contextual shifts; Schoenbaum et al., 2003). In section 3.1 we suggested that this system might have been a way to empower Pavlovian processes in Amg, and it might have also been a precursor for the emergence of the more sophisticated functions of PFC in goal management, especially in primates. NAcc plays an important role in these enhanced Pavlovian processes, aside from its role in goal selection illustrated below. In particular, the NAcc might be an important behavioral output gateway of Pavlovian processes thanks to its connections to sub-cortical structures (e.g., for triggering basic behaviors such as approaching and orienting; Parkinson et al., 2000b; Cardinal et al., 2002b; Gruber and McDonald, 2012).

The strong direct connections between Hip and PFC, instead, allow the Hip-PFC axis to support working memory and planning functions, thereby forming an integrated system supporting the anticipation of possible future states that might follow from the execution of actions in the current state (Fuster, 1997; Frankland and Bontempi, 2005; Bast, 2007). The formation and progressive sophistication of this system has been an important evolutionary step that strengthened the general “executive function” of organisms (Figure 9C). The key aspects of the relation between the two systems are that PFC can perform reasoning and planning processes by relying on dynamical mechanisms supporting working memory, while Hip can quickly form broad associations, e.g., involving multimodal stimuli and context. Together, the two mechanisms generate a powerful computational machine for supporting planning, reasoning, and executive functions (Toni et al., 2001; Bast, 2007).

3.5.2. Anatomy and connections of nucleus accumbens core and shell

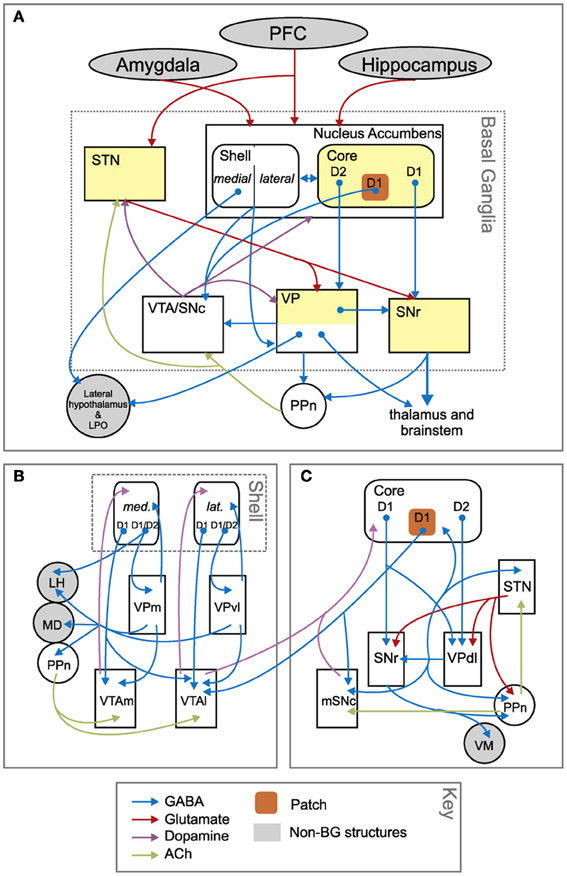

We now consider some features of NAcc internal anatomy, functioning, and external connectivity that are important for understanding how different sub-regions of NAcc contribute differently to goal selection. As already noted, it is possible to identify at least two subregions within the ventral BG, based on the circuits of NAccCo and NAccSh. These two circuits differ in cytology, micro-architecture, and afferent/efferent connections with other neural regions (Zahm, 2000; Voorn et al., 2004; Humphries and Prescott, 2010).

The BG-cortical loop involving NAccCo reproduces almost the same cytology and internal organization as the other BG-cortical loops (see Figures 3, 13), making it well suited for implementing selection processes. In particular, NAccCo is connected to the ventral globus pallidus and SNpr, and the latter projects to thalamus, which is in recurrent connectivity with cortex. The ventral globus pallidus and SNpr are also innervated by the subthalamic nucleus (STN). This micro-circuit involving striatum, STN, pallidum, and SNpr has been closely linked with the capacity of the basal ganglia to perform the selection of the contents of the targeted cortex (Gurney et al., 2001; Humphries and Gurney, 2002). The cortical areas involved in the loops with NAccCo are AIC and PL in rats, and also OFC and ACC in primates.

Figure 13. Anatomical differences between the basal ganglia circuits involving nucleus accumbens core and shell. (A) Overall schema of the connections involving the whole nucleus accumbens. (B) Zoom on the connections involving the nucleus accumbens shell. (C) Zoom on the connections involving the nucleus accumbens core. Reprinted from Humphries and Prescott (2010), Copyright 2010, with permission from Elsevier.

In contrast, the BG circuit involving NAccSh shows some unique features in terms of both cytology and micro-architecture (see Figure 13). In particular, VP (medial and ventrolateral regions) is the only BG output nucleus of the NAccSh, which therefore has no access to SNpr. Moreover, and importantly, the circuit has no connectivity with STN, so it is mainly formed by the “direct pathway” of BG but lacks the “indirect pathway” involving the STN. The latter feature implies that NAccSh cannot perform a strong “winner-take-all” selection, as it cannot fully inhibit the non-selected competing options in cortex (Humphries and Prescott, 2010; cf. Gurney et al., 2001, on the importance of STN for BG to perform competitive processing).
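The functional consequence of lacking STN input can be illustrated with a deliberately simplified, feed-forward sketch. It is not the model of Gurney et al. (2001): the function names, the rectified-linear units, and all weights and thresholds are assumptions chosen for illustration. Each channel receives focused striatal inhibition proportional to its own salience and, optionally, diffuse STN-like excitation proportional to the total salience; a channel counts as selected when its output-nucleus activity falls close to zero, releasing its cortical target from inhibition.

```python
# Toy selection circuit, assuming focused striatal inhibition plus optional
# diffuse STN-like excitation onto the BG output nucleus. All names, weights,
# and thresholds are hypothetical.

def output_nucleus(saliences, use_stn, baseline=0.2, w_striatum=1.0, w_stn=0.3):
    """Activity of the inhibitory output nucleus for each channel."""
    diffuse = w_stn * sum(saliences) if use_stn else 0.0
    return [max(0.0, baseline + diffuse - w_striatum * s) for s in saliences]


def selected_channels(saliences, use_stn, threshold=0.05):
    """Channels whose output activity is low enough to disinhibit their target."""
    out = output_nucleus(saliences, use_stn)
    return [i for i, o in enumerate(out) if o < threshold]


saliences = [0.9, 0.6, 0.3]

with_stn = selected_channels(saliences, use_stn=True)      # core-like circuit
without_stn = selected_channels(saliences, use_stn=False)  # shell-like circuit
```

With the assumed parameters, the circuit with diffuse excitation disinhibits only the most salient channel, whereas the circuit without it disinhibits all three, which mirrors the claim that a circuit lacking STN input cannot suppress the losing options.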

In terms of connectivity, in rats NAccSh targets the AIC, the PL and the IL. IL plays a role in inhibiting instrumental behaviors and in the extinction of Pavlovian processes (Quirk et al., 2000; Coutureau and Killcross, 2003; Rhodes and Killcross, 2004; Sotres-Bayon and Quirk, 2010). In primates, NAccSh also targets OFC and ACC.

NAccSh and NAccCo also differ in their relation to midbrain DA systems (Voorn et al., 2004; Humphries and Prescott, 2010). Thus, only a subset of NAccCo projection neurons—comprising the so-called “patch”—project to DA neurons in SNpc. DA produced by SNpc mainly targets striatum. In contrast, most projection neurons in NAccSh project to the dopamine neurons in the VTA. DA produced by VTA mainly targets NAcc, Amg, Hip, and PFC. In both NAccSh and NAccCo, the relevant parts of VP project with GABAergic (inhibitory) synapses to their respective DA systems. Critically, in the circuit with NAccSh there is no excitation of VP from STN (see above) and this might lead NAccSh to regulate DA differently with respect to NAccCo, as explained below.

3.5.3. Dopamine and goal selection